Appraisal in the IEHIAS

- The text on this page is taken from an equivalent page of the IEHIAS-project.

It is rarely the role of those who carry out the assessment to make decisions about how to respond in the light of the results. The outcomes of integrated assessments, however, are often relatively complex, and therefore need to be provided to decision-makers in a form that they can understand. In many cases, also, the end-users may request help in interpreting the findings, and in further assessing the possible consequences of their decisions. For these reasons, the boundary between assessment and decision-making is neither absolute nor impervious, but instead involves a dialogue between scientists, decision-makers and the other stakeholders concerned. The purpose of appraisal is to bring together, communicate and interpret the results of the assessment as an input to this dialogue. This involves two key steps:

- Reporting the assessment results - i.e. delivering them to the end-users in a synthesised and understandable form;

- Comparing and ranking outcomes - i.e. identifying and interpreting the messages that the results imply.

Neither of these is a wholly objective process. Each involves some degree of selection of what matters, and the methods (and even the language) used in each case almost inevitably act to shape the conclusions. In the appraisal stage, as much as at any other stage in an assessment, it is therefore important to guard against bias. To ensure this:

- appraisal procedures need to be set out well in advance, ideally as part of the assessment protocol, and these procedures need to be adhered to;

- appraisal should be an open process, involving the stakeholders;

- access should always be available to the underlying, more detailed data (i.e. the assessment results) on which the appraisal is based.

Reporting assessment results

The outcomes of assessments need to be reported both to the eventual end-users (i.e. decision-makers) and to the other stakeholders involved in the assessment. The way in which this is done is important, for what is reported, and how it is communicated, determine what the users think they are being told and, thereby, the decisions that they make.

Given this, it is important to bear in mind a number of precepts. These state that the information must be:

- relevant to the users

- balanced and accurate

- concise yet complete

- unambiguous and understandable

- credible and open to scrutiny

In reality, achieving these is not easy. Problems include:

- the complexity of the issues being addressed and of the science used to assess them;

- the large volume and range of results that assessments often produce;

- the differing expectations, needs, experience and skills of the stakeholders concerned.

It also has to be recognised that the scientists responsible for conducting assessments are not always skilled in communicating with non-expert audiences, and do not always have a clear view of what effective communication implies (see Myths in risk communication, below).

A number of useful strategies can, however, be adopted. These include:

- Ensuring that the reporting methods are defined, and agreed with stakeholders, early in the assessment (at the issue-framing and design stages), and are specified in the assessment protocol - and that the procedures are then adhered to;

- Using a range of different communication methods and media, including both textual reports and visual means (diagrams, maps, animations) - but making sure that these are consistent in their messages;

- Producing a structured set of materials, ranging from simple headline messages through to more detailed scientific reports, each designed to target a specific audience - but, again, ensuring that these are consistent;

- Making available all the relevant supporting information needed to explain how the results were derived and justify any decisions made in the process - and offering direct access to this information as part of the reporting process;

- Evaluating the effectiveness of all communication materials, via a panel of stakeholders, before they are released - and adapting the materials in the light of the comments received;

- Involving professional communicators in the process of designing, preparing and disseminating the materials.

Myths in risk communication

The literature on risk communication, especially in the business field, is littered with lists of the 'myths' of communication. How many myths, and what they are, varies greatly, inevitably raising questions about their validity. Below, however, is a list of five frequent 'misapprehensions' about risk communication, matched against what is often the more common reality.

| The misapprehension | The reality |
|---|---|
| Telling is informing | People need to feel that the information has relevance to them, and they understand what it means, if they are to listen to what they are being told |
| Informing is persuading | People need to be able to challenge the information, and see and accept the basis on which it was obtained, if they are to trust it and accept its implications |
| More information is better information | People need to have clear and concise messages - but with the option to obtain more information when they need it |
| One means of communication is sufficient | Different people understand and respond to different media and forms of communication in different ways, so the means of communication needs to be attuned to the audience |
| We read the same words and see the same things | Any form of communication is imperfect due to ambiguities in the message given and distortions in the message received, so different people hear and see different messages |

Designing effective assessment reports

Some form of written report (or set of reports) is almost inevitably a primary product of any integrated environmental health impact assessment. It would be misleading and banal (and probably impossible) to provide detailed guidance, or rigid templates, on what form these reports should take. How results are presented depends on the nature of the assessment and the specific (and often varied) user interests. It is therefore wholly inappropriate to force reports into a standard format, unless the type of issue and assessment is similar, and the stakeholders and their interests are the same. Instead, the scope, format and style of the reports should be discussed with the stakeholders early in the process of designing the assessment, and should reflect their priorities and needs.

That said, it is useful to outline the purpose of assessment reports, provide a checklist of the materials that should be considered for inclusion, and give some examples of how the material can be presented.

What are assessment reports intended to do?

Assessment reports clearly serve a number of different roles, not only for those doing the assessment and those on whose behalf it has been done, but also for other, would-be future assessors or commissioners of new assessments. For the assessors, for example, the report may have a number of contractual functions, as well as providing a detailed catalogue of what has been done and what results have been obtained.

For the 'customers' of the assessment, the report is both an insight into the results and process, and a signpost to the implications that follow and the actions that might be needed. For them, therefore, it needs to:

- describe the results of the assessment, in a useful and intelligible manner;

- show how the assessment was done, and explain and justify the decisions made on the way;

- indicate what the results mean in relation to the question which was originally posed;

- spell out any limitations or other considerations that need to be borne in mind when interpreting and using the results.

Because integrated assessment is a relatively new field, and one that (perhaps wisely) has not yet established a dominant paradigm or set of formal practices, the assessment report is also a valuable learning tool - a means of helping others work out what assessments involve, what sorts of issues they can be applied to, and what sort of results they can provide. Whilst informing this wider body of interested people will rarely be the primary objective of the reports, there are clearly benefits in ensuring that the reports are disseminated as widely as possible. And for this audience, it will also be helpful (as far as this can be done without jeopardy) to provide some reflection on the lessons learned - the things that went well, the things that failed, the problems that were encountered and how they were overcome.

What should be included?

Given these objectives, and given the general process of IEHIA that has been proposed here, it follows that a number of key elements are likely to be needed in the assessment report. The table below summarises some of the main elements that should be considered for inclusion, and suggests how some of these might best be dealt with.

| Item | Description | Comment |
|---|---|---|
| Context | | |
| The question and the need | A restatement of the question that is being addressed, and the reason it was raised, together with a summary of who the main stakeholders are. | |
| Scope and content of the assessment | | |
| The issue | A detailed description of the issue, as it was ultimately defined, both textually and in the form of a diagram of the causal model. | May be supplemented (e.g. in an Appendix) by a description of how the final causal model was agreed, showing also (some of) the precursor or intermediate versions. |
| Scenario and type of assessment | An outline of the scenario(s) used as a basis for assessment, including the assumptions involved; specification of what type of assessment was done. | Appendices may need to be provided giving further details of the scenarios, and justifying their use (e.g. in the light of serious limitations or assumptions). |
| Geographical and temporal scope | Description of the study area, population and timeframe for the assessment. | Maps, statistical data and photographs should be included or appended, where appropriate. |
| Environmental exposures | Description of the main agents (e.g. hazards, pollutants), their sources, release and transport pathways, and exposure routes considered in the assessment. | May be supplemented by an explanation of which other exposures were excluded from consideration and why. |
| Health outcomes | Specification (including ICD codes if appropriate) of health outcomes selected for assessment. | May be supplemented by an explanation of which other health outcomes were excluded from consideration and why. |
| Assessment methodology | | |
| Exposure assessment | Description of data and methods used to model causal chain from source to exposure. | Appendix should provide copy of assessment protocol, together with a clear explanation and justification of any deviations from the original plan. Appendices may also provide details of individual data sets and models used in the assessment. |
| Health effect assessment | Description of how health effects were estimated, including presentation and justification of exposure-response functions used and how baseline health data were derived. | |
| Impact assessment | Specification of the impact measures used, and description of how they were derived (including weights, aggregation procedures). | |
| Uncertainty analysis | Outline of methods used to characterise and assess uncertainties. | |
| Results | | |
| Main findings | Description of main findings, including quantitative information (e.g. as tables, graphs, maps) and an outline of the main uncertainties involved. | Use of 'indicator scorecards' to summarise the results of different scenarios is often helpful (see Indicator scorecards). More detailed, quantitative results (including results of sensitivity analyses) should be provided in an Appendix. |
| Interpretation | | |
| Implications | Summary of main conclusions arising from the assessment, and their implications (e.g. ranking of different scenarios in terms of scale of impact). | Note: reports should in most cases stop short of making recommendations (e.g. about which policy measure to adopt), unless this is specified in the contract. |
| Caveats | Listing and explanation of limitations to assessment, or other factors that need to be borne in mind when applying the results. | |
| Lessons learned | Outline of important lessons regarding process of the assessment, that might aid future assessments (e.g. issues faced and ways in which they were overcome; continuing gaps in knowledge). | |
| Additional materials | | |
| Executive summary | Brief overview of main content, highlighting the question, methodological approach and findings. | |
| Assessment protocol | Full copy of the original protocol, including information on any changes made during the assessment, and the reasons for doing so. | Should usually be provided as an appendix or on-line. |
| Stakeholder engagement | Listing of stakeholders engaged in the assessment, and an outline of how stakeholder consultation was done. | |
| References/ bibliography | Full listing of references cited, and indications of further reading. | |

It should be stressed that reporting will rarely be adequately served by a single, comprehensive report. Instead, it is often more effective to produce a number of different sets of materials, at different levels of detail, designed for different audiences and purposes. Given this, it is crucial that these materials are properly harmonised, so that they give consistent messages (albeit in different language or by different means), and so that users can move between them reasonably seamlessly.

Reports can also be made available through different media, including paper, digital and on-line versions. On-line versions can in most cases be enhanced by judicious use of hyperlinking (e.g. to link summary findings to more detailed information in tables or appendices), though care is needed to ensure that this does not impair the linear logic of the report. In many cases, also, digital media offer better options to view and explore complex materials such as detailed causal diagrams and mapped results.

Examples

As has been noted, integrated environmental health impact assessment is relatively young, so most assessors are likely to be finding their way about how best to design and structure reports. Unfortunately, this also means that there are as yet few examples of assessment reports to use as a starting point. The INTARESE and HEIMTSA projects, which underlay the development of this Toolbox, however, carried out a number of case studies, aimed at testing and illustrating the approach. Because these were essentially experimental studies, done in a research context, they do not for the most part relate to real 'client-led' issues - though they all deal with major policy questions in environmental health. For the same reason, they also did not involve full stakeholder engagement and are often limited in their scope. They nevertheless provide useful worked examples both of assessments and of assessment reports (broadly following the structure outlined above). A key component of all the reports is also a reflection on the lessons learned, which may help both to forewarn assessors what might lie in store, and give clues about how both assessment procedures and the reports can be improved.

Reports are included in the Toolkit section of the Toolbox.

Indicator scorecards

One of the most important elements of an assessment report is invariably a summary of the main findings, as indicated by the various measures of health impact used to describe the outcomes. In most assessments, a wide range of health impacts need to be considered. Typically, moreover, these impacts relate to several different scenarios, so users need to be able to compare not only between health effects but also between the scenarios, in order to draw conclusions about how best to respond. Often (though not always), trade-offs need to be made in this process, between different sets of interests or objectives, or between different types of outcome. For example, different scenarios may impact differently on morbidity and mortality, on short-term and long-term health, or in different areas or on different population sub-groups.

One way of dealing with these multiple outcomes is by aggregating the impacts into a single, summary measure (e.g. DALYs or monetary value) to represent the overall burden of disease. This is certainly useful in most cases, and in so far as these measures can be considered consistent they provide a means of comparing directly between the different scenarios (e.g. in terms of lives or money saved). Compound measures such as these, however, hide as much as they reveal, and are inevitably based on a series of weights (to reflect the severity, etc. of different diseases), which imply some form of value judgement. To interpret the results, and understand how they have arisen, users will often need additional information. In addition, policy makers and other stakeholders often expect and demand more freedom of choice than these summary measures imply, and they may wish to challenge or change the weights that are inherent (and often buried) in these measures - for example, to reflect different value-systems (see comparing and ranking outcomes). Even where aggregate measures of impact are used, therefore, it is helpful to provide information on the components that make up these overall impacts (e.g. on the main disease or population sub-groups).
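To make the role of weights concrete, the sketch below computes a simplified DALY total for two scenarios and shows how their ranking can reverse when a single disability weight is changed. All numbers, the scenario labels and the helper names `dalys` and `rank` are hypothetical. The formula is the basic undiscounted form (DALY = YLL + YLD, with YLD = cases × disability weight × duration), not the procedure of any particular assessment.

```python
# Simplified, undiscounted DALY calculation with hypothetical inputs.

def dalys(deaths, years_lost_per_death, cases, disability_weight, duration_years):
    """Return (YLL, YLD, DALY) for one scenario."""
    yll = deaths * years_lost_per_death                # years of life lost
    yld = cases * disability_weight * duration_years   # years lived with disability
    return yll, yld, yll + yld

# Hypothetical scenarios: A has more deaths, B has more cases of illness.
scenarios = {
    "A": {"deaths": 10, "years_lost_per_death": 10, "cases": 1000, "duration_years": 1},
    "B": {"deaths": 5, "years_lost_per_death": 10, "cases": 2000, "duration_years": 1},
}

def rank(disability_weight):
    """Rank scenarios from lowest to highest total DALYs for a given weight."""
    totals = {
        name: dalys(disability_weight=disability_weight, **inputs)[2]
        for name, inputs in scenarios.items()
    }
    return sorted(totals, key=totals.get), totals

for weight in (0.02, 0.10):
    order, totals = rank(weight)
    print(f"weight={weight}: totals={totals}, best-to-worst={order}")
```

With the lower weight, scenario B carries the smaller total burden. With the higher weight, scenario A does. Reporting the YLL and YLD components alongside the total lets users see where such a reversal comes from.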

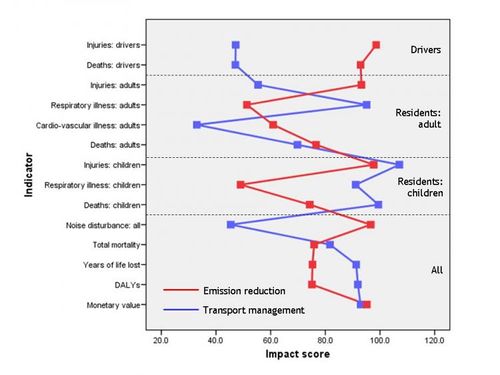

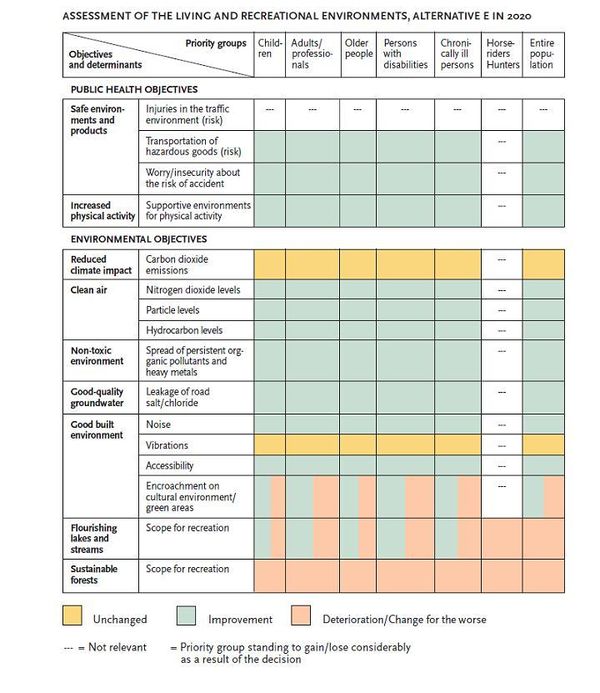

A useful technique in this context is to construct some form of indicator scorecard. Scorecards can be designed in various ways, depending on the purpose. They are most useful in showing the changes in impact under different scenarios. To compare a small number of different scenarios or policy options, graphs can be used (as in Figure 1 below). Where the number of comparisons is larger (e.g. in terms of impacts between different population groups or areas), it may be better to develop a series of graphs or matrices - one for each scenario. In this case, scores can be presented in different ways - from smiley/sad faces, to colour coding or actual indicator values. Figure 2 shows an example from a study of the health impacts of a road project in Sweden. However they are designed, the goal of scorecards must be to summarise the results in a way that both provides insight into the findings of the assessment and forms a basis for further discussion. In this way, the scorecards can feed into the process of comparing and ranking outcomes, and thence into evaluation of the different policy options.

Figure 1. An example of an indicator scorecard showing health impacts of two road transport scenarios. Impact scores represent the attributable burden of disease for each measure, relative to the business-as-usual scenario.

Figure 2. An example of an indicator scorecard, showing the health and environmental impacts of a road project in Sweden (Swedish National Institute of Public Health 2005).
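A scorecard of the kind described above can be sketched in a few lines. The example below expresses each indicator as a percentage change relative to a business-as-usual scenario and converts it to a simple symbol. The indicator names, values, scenario labels and the 5% threshold are all invented for illustration and are not taken from the figures.

```python
# Text-based indicator scorecard; all data below are invented for illustration.

BASELINE = "business-as-usual"

# Attributable burden per indicator (arbitrary units; lower is better).
impacts = {
    "business-as-usual": {"mortality": 200.0, "asthma": 500.0, "noise annoyance": 1000.0},
    "scenario 1":        {"mortality": 180.0, "asthma": 510.0, "noise annoyance": 800.0},
    "scenario 2":        {"mortality": 150.0, "asthma": 600.0, "noise annoyance": 1000.0},
}

def percent_change(value, reference):
    """Change relative to the reference scenario, in percent."""
    return 100.0 * (value - reference) / reference

def symbol(change, threshold=5.0):
    """Map a percentage change to '+' (better), '-' (worse) or '=' (similar)."""
    if change <= -threshold:
        return "+"   # burden falls: improvement
    if change >= threshold:
        return "-"   # burden rises: deterioration
    return "="

def scorecard(impacts, baseline=BASELINE):
    """Return {scenario: {indicator: (percent change, symbol)}} for non-baseline scenarios."""
    reference = impacts[baseline]
    card = {}
    for scenario, values in impacts.items():
        if scenario == baseline:
            continue
        card[scenario] = {}
        for indicator, value in values.items():
            change = percent_change(value, reference[indicator])
            card[scenario][indicator] = (change, symbol(change))
    return card

for scenario, row in scorecard(impacts).items():
    cells = ", ".join(f"{ind}: {chg:+.0f}% [{sym}]" for ind, (chg, sym) in row.items())
    print(f"{scenario}: {cells}")
```

Keeping the underlying percentage next to each symbol means the simplification can always be checked against the value it summarises.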

Mapping

Maps are increasingly used as a means of exploring and analysing environmental health data, and these days are almost essential parts of health impact assessment. Amongst other things, they help to check the quality of the data being used in the assessment (e.g. by revealing unexpected discontinuities or patterns in the data), to develop hypotheses about possible causal relationships or processes, and to test and validate the results of modelling.

Maps are also important tools at the appraisal stage, for they are useful and powerful ways of presenting results of assessments. Amongst other things, they:

- communicate information in a consistent and accessible visual form to a wide range of users, since most people have the ability to read and interpret maps;

- show spatial variations in impacts and highlight hotspots and geographic patterns or trends, thereby helping users to understand the inequalities in impact that may occur and who might be more-or-less affected;

- indicate spatial relationships between health effects and causal factors (e.g. sources, pollution levels or socio-economic conditions), and thus help users to understand how impacts develop.

Designing and interpreting maps of health impact

If mapping is to be used, however, it needs to be planned early in the analysis, in order to ensure that the data are appropriate - in particular that they are properly georeferenced (i.e. linked to recognisable geographical features, and/or to a known co-ordinate system). It also has to be remembered that maps can lie (or, to be more accurate, people can easily misinterpret maps). So great care is always needed both in designing maps (in order to minimise the risk that they will mislead), and in the actual interpretation process (especially when trying to infer patterns or spatial relationships).

In terms of design, important issues include:

- The choice of metric. It rarely makes sense, for example, to map absolute measures, such as excess risk, total number of deaths or DALYs, because much of the spatial variation they show is likely to be due simply to differences in the population size. Maps of health impact invariably make much more sense when they are based on relative measures or on rates - e.g. average life expectancy, relative risk, standardised mortality rate, or DALY rates.

- The choice of denominator. The first point, above, means that most maps need a denominator. Often this will be population (e.g. deaths or DALYs per 100,000 people), since the aim will be to compare risks across the study population. In some cases, however, other denominators are more appropriate. To show risks associated with road accidents, for example, it may make more sense to use road length or traffic volume (e.g. vehicle kilometres driven) in order to highlight areas where the roads are more inherently dangerous. Likewise, it may be more meaningful to map the number of deaths per unit area if the intention is to indicate which areas contribute most to the overall burden of disease. As these examples indicate, different denominators give different messages. The choice of denominator must reflect the purpose of the map.

- Zone design. Information on health impacts is usually best expressed in the form of area data (i.e. averaged or aggregated to a defined set of zones). In many cases, the zones used comprise irregular polygons, relating to administrative regions, such as census tracts, health authority areas or provinces. In other cases, data can be represented as a regular grid. The choice of zone system greatly affects the structure of the map, and the message it conveys. Irregular polygons, for example, can be useful because they often help to ensure that each area has an approximately equivalent population, thus avoiding the so-called 'small number problem' where rates tend to be very unstable because the areas contain only a small population. On the other hand, the zones often differ greatly in size, with the consequence that the map tends to be dominated by the larger (more sparsely populated) zones. Spatial patterns and relationships may also vary depending on the choice of zone design (see Zone design systems), so the choice of zone system must always be made on the basis of a clear understanding both of the data and of the purpose for which the map is to be used.

- Map scale and resolution (see note 1 below). In the same way, maps are very sensitive to the spatial scale and resolution on which they are constructed. Broad scale maps, which cover large areas at a relatively low resolution, may be useful to show general trends, but inevitably obscure much of the detail. Fine scale maps, at high resolution, retain the detail, but in the process may become difficult to interpret because the user 'cannot see the wood for the trees'. Fine scale maps may also exacerbate the small number problem, mentioned above, making estimates of rates unstable in many of the areas because the underlying population in each map zone will be small.

- Symbolisation and colour. Care is also needed in selecting the symbols and colour schemes used in maps, for these not only affect how attractive the map is, but also how easy it is to read. They may also carry implicit (and unintended) messages: red, for example, is often seen as dangerous or bad, while green is seen as benign or good. Symbols and colours thus need to be matched to the scale of the map, and the amount of detail it contains, and selected to ensure that they convey information both clearly and without bias.
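The effect of the choice of metric and denominator, and the small number problem, can be illustrated with a short calculation. The zone names and figures below are invented. The interval uses a simple normal approximation to the Poisson distribution (standard error ≈ √deaths / population), which is only a rough guide when counts are small.

```python
import math

# Hypothetical zones: deaths, population and area (km^2).
zones = {
    "city":    {"deaths": 500, "population": 1_000_000, "area_km2": 100},
    "suburb":  {"deaths": 60,  "population": 100_000,   "area_km2": 10},
    "village": {"deaths": 2,   "population": 2_000,     "area_km2": 50},
}

def rate_per_100k(deaths, population):
    """Deaths per 100,000 people."""
    return 100_000 * deaths / population

def approx_interval_per_100k(deaths, population, z=1.96):
    """Rough 95% interval for the rate (normal approximation to Poisson)."""
    rate = rate_per_100k(deaths, population)
    se = 100_000 * math.sqrt(deaths) / population
    return max(0.0, rate - z * se), rate + z * se

def ranking(metric):
    """Zone names ordered from highest to lowest value of the metric."""
    return sorted(zones, key=lambda name: metric(zones[name]), reverse=True)

by_count = ranking(lambda d: d["deaths"])
by_rate = ranking(lambda d: rate_per_100k(d["deaths"], d["population"]))
by_density = ranking(lambda d: d["deaths"] / d["area_km2"])

print("absolute deaths:", by_count)
print("rate per 100k:  ", by_rate)
print("deaths per km^2:", by_density)

for name, data in zones.items():
    low, high = approx_interval_per_100k(data["deaths"], data["population"])
    rate = rate_per_100k(data["deaths"], data["population"])
    print(f"{name}: {rate:.0f} per 100k (approx. 95% interval {low:.0f} to {high:.0f})")
```

In this invented data set each metric puts a different zone at the top, and the village's high rate rests on two deaths, so its interval is far wider than the city's.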

Note 1. Scale and resolution are different: scale refers to the ratio of the size of the features on the map to their real size; resolution refers to the amount of detail shown in the map (i.e. smallest observable size of feature). It is thus possible (though not very helpful) to have a fine scale map with a low resolution - i.e. the map would be large, but it would only show large features. Equally it is possible to have a broad scale map with a high resolution - in this case, the map would be relatively small, but would (try to) show a great deal of detail. As this indicates, map scale and resolution ideally need to be balanced. As it further indicates, care is needed in describing map scales. The terms large and small scale, for example, are often used ambiguously (or simply wrongly). Technically, a large scale map is one that has a large ratio between the feature size on the map and that in reality (it is large relative to real space): e.g. it may have a scale of 1:10,000, meaning that each 1 cm on the map represents 10,000 cm (0.1 km) on the ground. A small scale map, conversely, will be small relative to real space: e.g. it may have a scale of 1:1,000,000, meaning that each cm on the map represents 10 km on the ground. To avoid this confusion, it is often better to refer to coarse and fine scales, or high and low levels of resolution.
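The scale arithmetic in the note above can be checked with a short calculation. The function name is illustrative only.

```python
def ground_distance_km(map_cm, scale_denominator):
    """Ground distance in km for a map distance in cm at scale 1:scale_denominator."""
    ground_cm = map_cm * scale_denominator
    return ground_cm / 100_000  # there are 100,000 cm in 1 km

for denominator in (10_000, 1_000_000):
    distance = ground_distance_km(1, denominator)
    print(f"1:{denominator:,} -> 1 cm on the map covers {distance} km on the ground")
```

The larger the denominator, the more ground each centimetre covers, which is why a 1:1,000,000 map is the 'small scale' one.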

Mapping tools

A wide range of mapping software is now available, both in the form of fully functional geographical information systems (GIS) and as more specific map tools. An increasing number of these are designed as Web-based tools, thereby avoiding the need to purchase and instal potentially expensive systems, and facilitating sharing and collaborative analysis of spatial data.

Effective map design

The importance of effective map design

Crucial to effective communication of spatial information is the use of suitable mapping techniques that convey results objectively. Effective mapping requires both an understanding of the mapped phenomena and the mechanisms to present the data appropriately. This is particularly true for maps that display data related to epidemiological risk, where care is needed to avoid misinterpretation and the over- or under-emphasis of particular results, as the classic examples by Monmonier (1996) illustrate.

Mapping has become far easier with the development and adoption of GIS. Nevertheless, although data visualisation is integral to all stages of GIS analysis, it is important to remember that data views and screen grabs are not maps and might best be described as working documents forming part of exploratory data analysis. A map will usually be required to display results or to convey information to a third party and each will require a different approach to the design. It is for this reason that cartographic skills are just as important in GIS as analytical ability in order to communicate effectively. Every map should be individually designed, using cartographic principles which enable the creation of a product that works with human cognitive processes to create meaning. Design should reflect both the needs of the audience and the intended purpose and should include all relevant aspects of spatial and attribute data organisation, symbolization, application of colour, typography, and design and layout. This is as true for web-based maps as print maps though each brings with it different considerations (Kraak and Brown 2000).

Map design can be directed by ‘good practice’ and the following factors should always be considered as part of the process:

The purpose of the map: Who is the intended audience (e.g. what is their experience with map use) and how much will they interact with the map (e.g. presenting results or exploring data)? There are numerous options for data display and the map purpose must be closely tied to an appropriate mapping technique (MacEachren and Kraak 1997, Kraak and Ormeling 2003).

Map controls: Who is the audience, what output media are you using, what is the viewing distance, what is the map scale? Is some generalisation/simplification required? It is highly likely that manipulation of the map detail will be required to ensure it is fit for display at certain scales and for certain purposes.

Projection: Map projection distortions alter fundamental properties such as: area, distance, shape, direction, size, scale (Maher, 2010). The choice of map projection will, therefore, be related to the map purpose and can have a fundamental impact upon how data are portrayed. Ignoring the projection is a major fault of many maps and one that can ruin the message.

Variable representation: Map components need to be assigned meaning. They usually connote a particular meaning such as 'bigger than' or 'more than'. Remember that all the features of a variable can be changed, including size, shape, spacing, orientation, hue and lightness (Dent et al. 2008). Effective representation depends on whether the data are nominal, ordinal, interval or ratio. The combination of data type and level of measurement will play an important part in how symbolisation is performed, so that it is both mathematically and cognitively appropriate (Bertin, 2010).

Classification: The choice of classification scheme will affect the displayed spatial pattern, particularly for the display of thematic data using choropleth mapping techniques. The scheme should be chosen on the basis of an appreciation of the available alternatives and of the overall message (Slocum et al., 2004).

Colour: Colour has meaning, and there are a number of colour conventions that should not be ignored, as ignoring them will confuse the reader. Additionally, colour use should be handled very carefully to ensure effective design. Many GIS default colour choices are cartographically poor but alternatives exist to assist with more appropriate colour choices (Brewer, 2005; Brown and Feringa, 2003). Remember that a significant proportion of the population is red-green colour-blind, so avoid this colour combination where possible.

Typography: A number of different factors should be considered, such as map purpose (i.e. what needs to be labelled), font size, type, style and case, letter spacing, curvature, orientation, and resolving conflicts between type and features. Typography on a map often receives little attention but it is vital to communicate meaning so users can understand the map (Robinson et al. 1995).

Overall arrangement: Emphasise what is important on the map - e.g. use contrast and build a hierarchy. Frame and balance the map, not the page. Structure the figure and ground effectively, using the overall space and minimising wasted space on the map (Kraak and Ormeling, 2009). Consider any inclusions that will add to the effectiveness of the map such as insets, titles, scale, explanatory text, copyright notice, etc.

Legend: The legend is a crucial part of the map and needs careful design. Readers should be able to clearly interpret variable meanings and the legend should add information (Brewer, 2005).
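The sensitivity of a choropleth map to the classification scheme, noted in the list above, can be seen by classifying the same data in two common ways. The sketch below compares equal-interval and quantile classes on an invented, skewed set of rates. The function names are illustrative, and the quantile method is a simple rank-based split rather than the exact algorithm of any GIS package.

```python
# Invented rates for 10 zones (skewed: one extreme value).
rates = [10, 12, 13, 15, 16, 18, 20, 22, 25, 100]

def equal_interval_classes(values, n_classes):
    """Assign each value to one of n_classes bins of equal width.

    Assumes the values are not all identical (otherwise the width is zero).
    """
    low, high = min(values), max(values)
    width = (high - low) / n_classes
    # min(...) keeps the maximum value inside the top class.
    return [min(int((v - low) / width), n_classes - 1) for v in values]

def quantile_classes(values, n_classes):
    """Assign classes so that each holds roughly the same number of values."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    classes = [0] * len(values)
    for rank, i in enumerate(order):
        classes[i] = rank * n_classes // len(values)
    return classes

def class_counts(classes, n_classes):
    """Number of zones falling in each class."""
    return [classes.count(c) for c in range(n_classes)]

equal_interval = equal_interval_classes(rates, 3)
quantile = quantile_classes(rates, 3)
print("equal interval:", equal_interval, class_counts(equal_interval, 3))
print("quantile:      ", quantile, class_counts(quantile, 3))
```

With equal intervals, the single extreme zone pushes every other zone into the lowest class, so the map would show one hotspot and little else. With quantiles, the zones are spread across all three classes, which reveals variation among the lower rates but visually understates how extreme the outlier is.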

Maps are informative and persuasive tools. They are especially useful for revealing spatial pattern in information, and thus highlighting inequalities and targeting hotspots. Conversely, maps can be notoriously misleading; either intentionally or unintentionally they can lie. Cartographic decision-making for effective mapping can be both time-consuming and complex. Getting results is not the only goal of geographical analysis; communicating them is just as important and inappropriate cartography can negate effective analysis (Darkes and Spence, 2008).
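Projection is one concrete way in which a map can mislead unintentionally. As an illustration, the sketch below computes how much areas are exaggerated at different latitudes on a Mercator map. The Mercator projection is not discussed in the text above and is chosen here only because its distortion has a simple closed form on a spherical Earth: the linear scale factor is 1/cos(latitude), so areas are inflated by its square.

```python
import math

def mercator_area_exaggeration(latitude_deg):
    """Area inflation on a spherical Mercator map, relative to the equator."""
    linear_scale = 1.0 / math.cos(math.radians(latitude_deg))
    return linear_scale ** 2

for latitude in (0, 30, 45, 60):
    factor = mercator_area_exaggeration(latitude)
    print(f"latitude {latitude} deg: areas appear {factor:.2f} times larger")
```

A zone at 60 degrees north is drawn about four times larger than an equal-sized zone at the equator, which can give sparsely populated northern areas undue visual weight on a map covering a wide range of latitudes.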

Comparing and ranking outcomes

The comparative nature of assessments means that, in most cases, results from a number of scenarios need to be compared, in order to determine their relative tolerability or preferability, so that policy choices can be made. It is not the role of the assessors to make these choices, but at the very least the results of the assessment need to be made available in a form that enables comparison. In some cases, assessors will also need to contribute to, or carry out, the actual comparison either with or on behalf of the users. An understanding of how the comparison will be done, and what forms of information are needed for this purpose, is therefore crucial.

This becomes all the more important when the issues being considered are complex, and when a large range of impacts are involved, for in these circumstances the process of comparison may be difficult. Three specific challenges are likely to occur:

- How to assess the significance (in statistical terms) of apparent differences between the impacts of different scenarios, in the light of the inherent uncertainties;

- How to balance and make trade-offs between different outcomes (or areas or population groups), which may show contradictory impacts;

- How to enable the comparisons to be done in a way that involves, and gives fair consideration to, the different stakeholders concerned.

Formulaic answers to these difficulties are unlikely to be universally applicable. For example, the significance of the apparent impacts can in some cases be tested using standard statistical techniques, but these depend on a quantitative understanding of the uncertainties involved and are only valid where the impacts themselves have been measured in quantitative terms. Likewise, the issue of trade-offs can (to some extent) be avoided by generating and using aggregate measures of impact (e.g. DALYs or monetary value), but in reality these merely internalise some of the value judgements that need to be made (e.g. the weights attached to different health outcomes), and still leave open the question of who wins and who loses (in relative if not absolute terms), and how such distributional issues should be dealt with. In principle, also, stakeholders can freely contribute to the processes of comparison and ranking, but differences in expertise and practical constraints of time and finance often mean that this is an imperfect process.
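As an illustration of the first point, one simple way to express whether an apparent difference between two scenarios stands out from the uncertainty is to sample from the uncertainty distributions and count how often one scenario gives the lower impact. The sketch below is only indicative: the function name `prob_lower`, the DALY figures and the assumption of independent, normally distributed uncertainty are all hypothetical and are not taken from the IEHIAS guidance.

```python
import random


def prob_lower(mean_a, sd_a, mean_b, sd_b, n=20000, seed=1):
    """Estimate P(impact of A < impact of B) by Monte Carlo sampling.

    Assumption (illustration only): the uncertainty around each
    scenario's impact is independent and normally distributed.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    wins = 0
    for _ in range(n):
        a = rng.gauss(mean_a, sd_a)
        b = rng.gauss(mean_b, sd_b)
        if a < b:
            wins += 1
    return wins / n


# Hypothetical burden estimates (DALYs) for two scenarios.
p = prob_lower(mean_a=1200, sd_a=150, mean_b=1350, sd_b=200)
print(f"Estimated probability that scenario A has the lower burden: {p:.2f}")
```

A probability close to 0.5 would suggest that the two scenarios cannot be distinguished given the stated uncertainties; how large a probability is needed before a difference is treated as meaningful remains a judgement for the users.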

Different approaches to comparing and ranking the outcomes may therefore need to be applied, depending on the nature of the issue and the needs of users (and the underlying value systems). For example:

- Cost-benefit analysis (comparing the monetary value of total costs and benefits) can be used, where it is agreed that all relevant aspects of the impacts can be converted to monetary values, and where an essentially utilitarian approach has been adopted;

- Cost-effectiveness analysis (minimising the cost to achieve a specified health gain, or avoid a specified health loss) can be used where costs can be monetised but where benefits cannot be, or where a clear health objective exists;

- Multi-criteria assessments can be carried out, where costs and benefits cannot be monetised, where monetisation is considered to be incomplete (or unacceptable), and where several different outcomes have to be considered.
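The first two approaches can give different rankings for the same set of scenarios, which is one reason why the choice of method matters. The following minimal sketch uses invented figures; the scenario names, costs, benefits and the use of cost per DALY averted as the cost-effectiveness measure are all assumptions made for illustration.

```python
# Hypothetical scenario data: cost and monetised benefit in the same
# currency unit, health gain expressed as DALYs averted.
scenarios = {
    "A": {"cost": 4.0e6, "benefit": 6.5e6, "dalys_averted": 120},
    "B": {"cost": 2.5e6, "benefit": 3.0e6, "dalys_averted": 100},
    "C": {"cost": 5.0e6, "benefit": 4.5e6, "dalys_averted": 140},
}


def net_benefit(s):
    """Cost-benefit view: monetised benefits minus costs."""
    return s["benefit"] - s["cost"]


def cost_per_daly(s):
    """Cost-effectiveness view: cost per unit of health gain."""
    return s["cost"] / s["dalys_averted"]


# Highest net benefit first.
cba_ranking = sorted(scenarios, key=lambda k: net_benefit(scenarios[k]), reverse=True)
# Lowest cost per DALY averted first.
cea_ranking = sorted(scenarios, key=lambda k: cost_per_daly(scenarios[k]))

print("Cost-benefit ranking:      ", cba_ranking)
print("Cost-effectiveness ranking:", cea_ranking)
```

With these invented numbers, scenario A has the largest net benefit, while scenario B achieves health gain at the lowest unit cost, so the preferred option depends on which question is being asked.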

Multi-criteria assessment

Multi-criteria assessment (MCA), as its name implies, is a way of evaluating and comparing options on the basis of a number of different criteria or objectives. The ways to do this are varied, and there is no single, established procedure for MCA, so it is better seen as an approach, underpinned by a set of general principles, than as a specific methodology.

In broad terms, MCA can be seen as a three-stage process, as follows:

- A set of criteria are first defined, reflecting the policy (or other) objectives that need to be achieved;

- The different strategies (e.g. assessment scenarios) are then scored against each of these criteria;

- The scores for each criterion are then aggregated (with or without weighting of some kind) to provide an overall assessment of each option, which can be used to rank the options in terms of their performance.

Defining the criteria

Selecting the criteria is clearly a crucial part of this process, for these set the framework within which the assessment is done. In most MCA, this is done by an expert team, though other stakeholders can be involved to ensure that their various interests are taken into account. For the most part, criteria selection is therefore done by standard group-consultation techniques, such as questionnaires, Delphi surveys, brain-storming meetings and structured meetings of various types, supplemented as appropriate by reviews of relevant literature. To be effective, the criteria thus selected need both to reflect the objectives or ambitions of those concerned (and the people they represent), and to be amenable to judgement in some at least semi-quantitative way.

In the context of environmental health impact assessment, it is evident that criteria selection is to a large extent carried out as an inherent part of the initial issue-framing, for this stage includes clear specification of the issue that is to be assessed, the scenarios to be used and the indicators that will provide the means of describing the outcomes. If well-conceived, the outcome indicators should therefore be the criteria.

Scoring the scenarios

The second step in MCA is to score each option (i.e. assessment scenario) against these criteria. This can be done either qualitatively or quantitatively, usually by filling in a 'performance matrix' dimensioned to show the criteria on one axis and the options on the other. In the case of integrated environmental health impact assessment, the materials needed for this matrix come directly from the analysis, for the assessment is designed to provide estimates for each impact indicator. In some cases, however, the indicators may need to be reversed (to ensure that they all run in the same direction), or transformed (e.g. to a log scale) to adjust for non-linearity.
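A performance matrix of this kind can be sketched as a small table in code. The example below is illustrative only: the scenario names, criteria and values are invented, and rescaling each criterion to a 0-1 range (with 1 as the best result) is one common convention rather than a requirement of MCA.

```python
import math

# Hypothetical performance matrix: one row per scenario, one column per criterion.
matrix = {
    "baseline":   {"deaths": 40, "pm10": 50.0, "green_access": 0.30},
    "scenario_1": {"deaths": 30, "pm10": 20.0, "green_access": 0.45},
    "scenario_2": {"deaths": 20, "pm10": 35.0, "green_access": 0.60},
}
# Direction of each criterion: False means lower raw values are better.
higher_is_better = {"deaths": False, "pm10": False, "green_access": True}
# Criteria to be log-transformed before rescaling (assumed non-linear).
log_scale = {"pm10"}


def rescale(matrix, higher_is_better, log_scale=()):
    """Return 0-1 scores in which 1 is always the best observed result."""
    scores = {option: {} for option in matrix}
    criteria = list(next(iter(matrix.values())))
    for c in criteria:
        raw = {o: matrix[o][c] for o in matrix}
        if c in log_scale:
            raw = {o: math.log(v) for o, v in raw.items()}
        lo, hi = min(raw.values()), max(raw.values())
        for o, v in raw.items():
            # If all options are equal on a criterion, give a neutral 0.5.
            s = (v - lo) / (hi - lo) if hi > lo else 0.5
            # Reverse criteria where a low raw value is the good outcome.
            scores[o][c] = s if higher_is_better[c] else 1.0 - s
    return scores


scores = rescale(matrix, higher_is_better, log_scale)
for option, row in scores.items():
    print(option, {c: round(s, 2) for c, s in row.items()})
```

After rescaling, every criterion runs in the same direction, so the scores can be compared across columns and passed on to the aggregation step.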

Aggregation

The third stage comprises aggregation of the scores for each option (scenario) to provide an overall measure of performance. This can be done simply additively (without weighting), though the obvious weakness of this is that each criterion is assumed to have equal importance (whether it relates to mortality or morbidity, and no matter what units it is measured in). Alternatively, some means of weighting is required, to translate the different criteria onto equivalent (and additive) value scales. A wide range of methods may be used for this purpose. One of the most widely used, sometimes referred to as swing-weighting, involves making comparisons between the best and worst scores for each pair of criteria, selecting in each case the one with the larger perceived importance, and eliminating the other. This process continues until only one criterion (the most important) remains. Participants then score all the other criteria against this reference.
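Once participants have scored the criteria against the reference, the scores are usually converted into weights that sum to one. The sketch below assumes, purely for illustration, that the reference criterion is given 100 points and the others are scored relative to it; the criteria names and point values are hypothetical.

```python
def normalise_weights(swing_scores):
    """Convert relative importance scores into weights that sum to 1."""
    total = sum(swing_scores.values())
    if total <= 0:
        raise ValueError("swing scores must sum to a positive value")
    return {criterion: s / total for criterion, s in swing_scores.items()}


# Hypothetical result of a swing-weighting exercise: the most important
# criterion is the reference (100); the others are scored against it.
swing_scores = {"deaths": 100, "pm10": 60, "green_access": 40}
weights = normalise_weights(swing_scores)
print(weights)
```

Normalising in this way keeps the relative importance expressed by the participants while putting the weights on a common scale for aggregation.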

However obtained, the weights are then applied to the scores, and the results summed to provide an overall measure of performance for each option:

P_i = Σ_j (S_ij × W_j)

where: P_i is the overall performance of option i, S_ij is the score for criterion j on option i, W_j is the weight attached to criterion j, and the sum is taken over all criteria j.
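The weighted sum above can be written out in a few lines. In this sketch the scores and weights are invented, and both are assumed to be already on a common 0-1 scale with higher values being better.

```python
def overall_performance(scores, weights):
    """Weighted sum of criterion scores for each option."""
    return {
        option: sum(row[c] * weights[c] for c in weights)
        for option, row in scores.items()
    }


# Hypothetical rescaled scores (0 = worst, 1 = best) and weights.
scores = {
    "baseline":   {"deaths": 0.0, "pm10": 0.0, "green_access": 0.0},
    "scenario_1": {"deaths": 0.5, "pm10": 1.0, "green_access": 0.5},
    "scenario_2": {"deaths": 1.0, "pm10": 0.4, "green_access": 1.0},
}
weights = {"deaths": 0.5, "pm10": 0.3, "green_access": 0.2}

performance = overall_performance(scores, weights)
# Rank options from best to worst overall performance.
ranking = sorted(performance, key=performance.get, reverse=True)

for option in ranking:
    print(f"{option}: {performance[option]:.2f}")
```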

The different scenarios can then be ranked on the basis of these scores to identify that which offers the greatest health gain (or minimum loss). If appropriate, sensitivity analyses may also be carried out to determine how robust the final ranking is in the face of changes in the weights.
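A simple form of sensitivity analysis is to change one weight at a time, renormalise, and check whether the top-ranked option changes. The sketch below is one possible way of doing this; the halving and doubling factors, the scores and the weights are all assumptions chosen for illustration.

```python
def top_option(scores, weights):
    """Return the option with the highest weighted-sum score."""
    totals = {
        option: sum(row[c] * weights[c] for c in weights)
        for option, row in scores.items()
    }
    return max(totals, key=totals.get)


def weight_sensitivity(scores, weights, factors=(0.5, 2.0)):
    """Scale each weight in turn and report any change in the top option."""
    base = top_option(scores, weights)
    changes = []
    for criterion in weights:
        for factor in factors:
            trial = dict(weights)
            trial[criterion] = weights[criterion] * factor
            total = sum(trial.values())
            trial = {k: v / total for k, v in trial.items()}  # renormalise
            winner = top_option(scores, trial)
            if winner != base:
                changes.append((criterion, factor, winner))
    return base, changes


# Hypothetical rescaled scores (0 = worst, 1 = best) and weights.
scores = {
    "baseline":   {"deaths": 0.0, "pm10": 0.0, "green_access": 0.0},
    "scenario_1": {"deaths": 0.5, "pm10": 1.0, "green_access": 0.5},
    "scenario_2": {"deaths": 1.0, "pm10": 0.4, "green_access": 1.0},
}
weights = {"deaths": 0.5, "pm10": 0.3, "green_access": 0.2}

base, changes = weight_sensitivity(scores, weights)
print("Top option with the original weights:", base)
for criterion, factor, winner in changes:
    print(f"Scaling the weight of {criterion} by {factor} makes {winner} the top option")
```

If only large changes in a weight alter the ranking, the result can be regarded as reasonably robust; if small changes do so, the ranking should be reported with appropriate caution.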

Advantages and limitations

MCA has a number of strengths as a means of comparison and ranking. In particular, it is highly flexible, enabling quantitative and non-quantitative measures to be combined, and allowing health, monetary and other (e.g. exposure) measures to be used together. Because weights are defined by the stakeholders themselves (or their representatives), the method also helps to ensure that the aggregation procedure reflects the preferences and interests of those concerned. By the same token, it helps the stakeholders understand the aggregation process, and is thus likely to engender greater trust in its results.

At the same time, there are a number of limitations and weaknesses. Its success depends, for example, on how effective the process of stakeholder engagement is: biases in this process are likely to feed through into the results. Localisation of the aggregation to these stakeholders may also mean that the weights applied differ greatly from those used in other situations, making comparison between studies or areas difficult. Reproducibility of the results may also be low, both between different stakeholder groups and between the same stakeholders at different times. For the most part, therefore, the approach is most appropriate where relatively local issues are being assessed which affect a clearly defined group of stakeholders.