General assessment processes

This page is an encyclopedia article.

The page identifier is Op_en2085.

Moderator: Mikko Pohjola

Assessment sub-processes

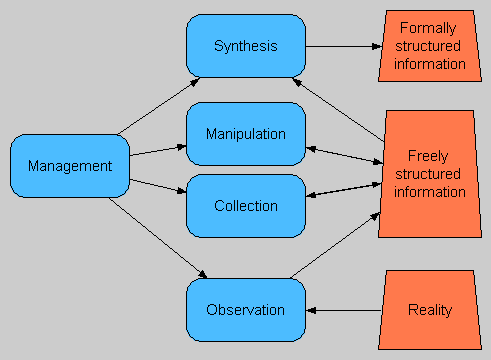

The process of carrying out an assessment can be considered as consisting of several simultaneously on-going sub-processes that are more or less continuous throughout the whole assessment:

- Collection of scientific information and value judgments according to the assessment questions

- Manipulation of scientific information and value judgments to provide answers to the assessment questions

- Synthesis of scientific information and value judgments as an answer to the principal question of the assessment

- Communication of the outcomes of collection, manipulation, and synthesis sub-processes among assessment participants and users

- Management of collection, manipulation, synthesis and communication sub-processes

Underlying all these is the process of observation (and experimentation). This is not, however, considered as being a part of assessment, but rather the basic process of scientific studies that produces the scientific information used in assessments. All these sub-processes are in interaction with each other, and present throughout the whole assessment process, although their roles and appearances may vary significantly during different phases of the process. A sketch of the relationships between these sub-processes (excluding communication) and the object of assessment is given below.

Collection of scientific information and value judgments can be considered as gathering these types of information, or meta-information about them, and making them available to the participants of the assessment. The collection sub-process often deals with information that is freely structured, i.e. not formalized as explicit answers to specific assessment questions, or formalized as answers to questions other than those asked in the particular assessment.

Manipulation means formatting the collected information, as necessary, so that it addresses the specific questions of the particular assessment as well as possible. The term manipulation should not be interpreted here in its often used negative sense of distorting information, but rather as extracting the essence of the information in terms of the information needs of the assessment.

Synthesis of scientific information and value judgments can then be described as adapting the collected, and if necessary manipulated, information into explicit answers to specific assessment questions. Synthesis takes place on two levels. Firstly, there is the synthesis of different pieces of information into an explicit answer to one specific question (in the form of a variable). Secondly, there is the synthesis of different question and answer compounds into an overall answer to the principal question of the assessment (in the form of a causal network of variables, constituting an assessment). This sub-process produces formal information in the form of variables and assessments.

Communication is a sub-process that is inevitably intertwined with all the other sub-processes. As an assessment is an activity taking place in a social context, there is a need to explicate the outcomes of the intermediate steps within the different sub-processes. This is necessary to enable collaborative and iterative development of the assessment. Communication is most reasonably arranged to happen primarily through a shared object of work: an explication of the assessment that is collaboratively worked on.

The management sub-process is about organizing all the sub-processes to contribute as well as possible to the overall purpose of the assessment: finding the best possible answer to the principal assessment question and conveying it to its intended use. Management also includes the technical facilitation of the other sub-processes.

The flow of information is primarily considered to run from plain observations towards formally structured, synthesized information that provides answers to specific questions determined by practical information needs. However, there is also a flow of information in the other direction. The identified specific questions determine the requirements for manipulation and collection, all the way to observations. This flow of requirements is too often neglected, and the consideration of assessment work starts from the available information. Taking account of the flow of requirements is necessary if assessments are to be demand-driven and consequently address the real practical information needs.

Phases of assessment

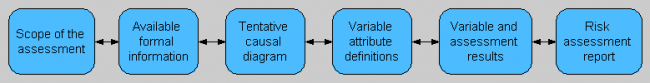

The assessment process is a continuous interplay of different sub-processes throughout the whole assessment, although their roles and appearances may vary significantly during different phases of the process. The assessment has six phases. The work during a particular phase is always built on the results of the previous phases. The phases are not clear-cut periods in time, because the work is iterative in nature, and the previous phases must frequently be revisited when the development of the product and feedback bring in new understanding. Thus, the work of the next phase usually starts when the outcomes of the previous phase are still in draft form, at least in some aspects.

The phases of assessment are called:

- Scoping of the assessment. In this phase the purpose of the assessment, the question(s) asked in the assessment, intended use of the output, the temporal and spatial boundaries of the scope of the assessment, and the participatory width of the process are defined and described.

- Applying previously produced information about the issues being assessed. In this phase the existing information that is available, whether in freely structured or formally structured format, is sought out and applied.

- Drawing a causal diagram. In this phase the decisions, outcomes, and variables of importance related to the assessment are described in the form of a sketch of a causal network diagram.

- Designing variables. In this phase the variables included in the assessment (the causal network diagram) are described more precisely, including defining causal relations between them. This phase may also include definition of quality criteria and plans for collecting the necessary data or models to estimate the results of the variables.

- Executing variables and analyses. This phase is actually about collecting the data needed, executing the models described in the Definition attributes of different variables, and storing the results in the result database. Assessment-specific analyses such as optimisation, decision analysis, value-of-information analyses and so on are carried out.

- Reporting. In this phase, the results of indicators, the outcomes of particular interest, and assessment-specific analyses are communicated to the users of the outputs. The results are discussed and conclusions are made about them, given the scope of the assessment. The communication also includes the background information needed to sufficiently interpret the assessment information.

The first three phases (scoping, applying previously produced information, and drawing a causal diagram) are collectively referred to as the issue framing phase.

Object of assessment

The outcome of the whole process, the assessment product, is a causal network description of the relevant phenomena related directly or indirectly to the endpoints of the assessment. The causal network and the analyses of the whole network or its parts constitute the assessment as an explication of the principal assessment question and an answer to it. The assessment product should thus:

- Address all the relevant issues as variables

- Describe the causal relations between the variables

- Explain how the variable result estimates are derived

- Report the variables of greatest interest and conclusions about them to the users

This approach to assessment explicitly emphasises the importance of identifying the causal relations throughout the whole network. Although it may often be very difficult to exactly describe causal relations in the form of e.g. mathematical formulae, the causalities should not be neglected. By means of coherent causal network descriptions that cover the interrelated phenomena from stressors to outcomes it is possible to better understand the phenomena and the possibilities for intentional interaction with the system.
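As an illustration of what a coherent causal network from stressors to outcomes could look like in code, the following is a minimal sketch and not part of the original method description. The variable names (`emissions`, `concentration`, `exposure`, `health_effect`) and the helper functions are hypothetical. The network is stored as a mapping from each variable to its causal parents, which makes it possible to order variables so that parents come before children, and to list everything upstream of a chosen endpoint.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical causal network: each variable maps to the set of its causal parents.
parents = {
    "emissions": set(),
    "concentration": {"emissions"},
    "exposure": {"concentration"},
    "health_effect": {"exposure"},
}


def evaluation_order(parents):
    """Return the variables ordered so that every parent precedes its children."""
    return list(TopologicalSorter(parents).static_order())


def upstream(parents, endpoint):
    """Collect every variable that directly or indirectly affects the endpoint."""
    seen = set()
    stack = [endpoint]
    while stack:
        node = stack.pop()
        for parent in parents.get(node, ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


order = evaluation_order(parents)
needed_for_exposure = upstream(parents, "exposure")
```

Here `order` runs from the stressor to the outcome, and `needed_for_exposure` contains the variables whose descriptions are required before `exposure` can be estimated. `TopologicalSorter` also raises an error if the network contains a cycle, which is one simple coherence check on a causal diagram.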

It can be quite a long, and not necessarily at all a straightforward, way from the first idea of the assessment question to creating a complete causal network description of the phenomena of interest. Some of the challenges on this way are:

- What are all the relevant issues that should be covered in the assessment?

- How are the causal relations between variables defined and described?

- How are the individual variables defined and described?

- What is the right level of detail in describing variables?

- What are the most important issues within the assessment that should be communicated to the users of the output?

Below is a diagram that schematically describes the evolution of an assessment product from identification of the assessment purpose to a complete causal network description. The diagram is a rough simplification of the process, although the two-way arrows between the nodes try to emphasize the iterative nature of the process. Rather than successive events taking place in separate phases, the diagram describes a gradual transition of focus as the work progresses. The boxes represent different developmental steps of the assessment product, which are explicit representations of the improving understanding of the assessed phenomena. Simultaneously, the focus of attention shifts as the process progresses towards its goal of providing the answer to the principal assessment question. The diagram is a work-flow description, and it neglects e.g. questions of collaboration and interaction between the contributors to the assessment.

An open collaboration approach to assessment considers it as a process of collective knowledge creation, and consequently emphasizes the role of shared information objects in mediating the process. The flow of information and development of collective knowledge among the assessment participants is best supported with formal information objects that structure, explicate, and represent the current state of collective knowledge to all participants at any given point of time during the assessment process. The primary formal information objects in assessments are assessment, variable, and method. They are formal objects that describe the essential aspects of assessments, including descriptions of reality and the work needed for making the descriptions.

Assessment

Assessment is a process for describing a particular piece of reality with the aim of fulfilling a certain information need in a decision-making situation. The word assessment can also mean the end product of this process, i.e. an assessment report of some kind. Often it is clear from the context whether the term assessment refers to the making of the report or the report itself. Methodologically, these are two different objects, called the assessment process and the assessment product, respectively.

| Attribute | Subattributes | Comments |
|---|---|---|
| Name | | Identifier for the assessment |
| Scope | | |
| Definition | Causal diagram; Other parts | Note: Causal diagram and Other parts are not attributes but just descriptive subtitles. |
| Result | | |

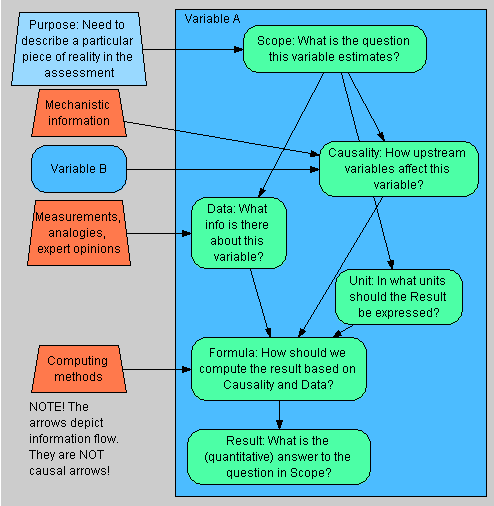

Variable

A variable is a description of a particular piece of reality. It can be a description of a physical phenomenon or a description of value judgments. Decisions included in an assessment are also described as variables. Variables are continuously existing descriptions of reality, which develop in time as knowledge about them increases. Variables are therefore not tied to any single assessment, but can be included in other assessments as well. A variable is the basic building block of describing reality.

| Attribute | Sub-attribute | Comments specific to the variable attributes |
|---|---|---|
| Name | | |
| Scope | | This includes a verbal definition of the spatial, temporal, and other limits (system boundaries) of the variable. The scope is defined according to the use purpose of the assessment(s) that the variable belongs to. |
| Definition | Dependencies | Dependencies tells what we know about how upstream variables (i.e. causal parents) affect the variable. Dependencies lists the causal parents and expresses their functional relationships (the variable as a function of its parents) or probabilistic relationships (conditional probability of the variable given its parents). The expression of causality is independent of the data about the magnitude of the result of the variable. |
| | Data | Data tells what we know about the magnitude of the result of the variable. Data describes any non-causal information about the particular part of reality that is being described, such as direct measurements, measured data about an analogous situation (this requires some kind of error model), or expert judgment. |
| | Unit | Unit describes in what measurement units the result is presented. The units of interconnected variables need to be coherent with each other, given the functions describing causal relations. The units of variables can be used to check the coherence of the causal network description. This is the so-called unit test. |
| | Formula | Formula is an operationalisation of how to calculate or derive the result based on Dependencies, Data, and Unit, making a synthesis of the three. Formula uses algebra, computer code, or other explicit methods if possible. |
| Result | | A result is an estimate about the particular part of reality that is being described. It is preferably a probability distribution (which can in a special case be a single number), but a result can also be non-numerical, such as "very valuable". |
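The attribute structure above can be sketched as a small data structure. This is only an illustrative sketch under stated assumptions, not the implementation used by any particular assessment tool: the class `Variable`, the function `evaluate`, the example variables, and all numbers are hypothetical. The result is represented as a list of Monte Carlo samples, as one simple way of approximating a probability distribution.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# A formula takes sampled parent values, the variable's own data, and a random
# generator, and returns one sample of the result (hypothetical convention).
Formula = Callable[[Dict[str, float], Dict[str, float], random.Random], float]


@dataclass
class Variable:
    name: str
    scope: str
    unit: str
    dependencies: List[str] = field(default_factory=list)  # names of causal parents
    data: Dict[str, float] = field(default_factory=dict)   # non-causal information
    formula: Optional[Formula] = None
    result: List[float] = field(default_factory=list)      # samples of the estimate


def evaluate(variable, parent_results, n=100, seed=0):
    """Fill in variable.result with n samples computed from the formula."""
    rng = random.Random(seed)
    samples = []
    for i in range(n):
        parent_values = {p: parent_results[p][i] for p in variable.dependencies}
        samples.append(variable.formula(parent_values, variable.data, rng))
    variable.result = samples
    return samples


# Hypothetical example: outdoor concentration and the exposure derived from it.
concentration = Variable(
    name="concentration",
    scope="Annual mean outdoor concentration in the study area",
    unit="ug/m3",
    data={"mean": 10.0, "sd": 2.0},
    formula=lambda parents, data, rng: rng.gauss(data["mean"], data["sd"]),
)
exposure = Variable(
    name="exposure",
    scope="Annual mean personal exposure in the study area",
    unit="ug/m3",
    dependencies=["concentration"],
    data={"infiltration": 0.6},
    formula=lambda parents, data, rng: parents["concentration"] * data["infiltration"],
)

evaluate(concentration, {})
evaluate(exposure, {"concentration": concentration.result})
```

In this sketch the expression of causality (the `dependencies` list and the way `formula` uses the parents) is kept separate from `data`, mirroring the distinction made in the table.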

In addition, it is practical to have additional subtitles on a variable page. These are not attributes, though.

- See also

- References

Plausibility tests are procedures that clarify the goodness of variables in respect to some important properties, such as measurability, coherence, and clarity. The four plausibility tests are clairvoyant test, causality test, unit test, and Feynman test.

- Clairvoyant test (about the ambiguity of a variable): If a putative clairvoyant (a person that knows everything) is able to answer the question defined in the scope attribute in an unambiguous way, the variable is said to pass this test. The answer to the question is equal to the contents of the result attribute.

- Causality test (about the nature of the relation between two variables): If you alter the value of a particular variable (all else being equal), those variables whose values change as a result are said to be causally linked to the particular variable. In other words, they are directly downstream in the causal chain, or children of the particular variable.

- Unit test (the coherence of the variable definitions throughout the network): The function defining a particular variable must result (when the upstream variables are used as inputs of the function) in the same unit as implied in the scope attribute and defined in the unit attribute.

- Feynman test (about the clarity of description): If you cannot explain it to your grandmother, you don't understand it well enough yourself. (According to the quantum physicist and Nobel laureate Richard Feynman.)
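The unit test described above can be sketched in code for the simple case where a variable is the product of its parents. This is an assumption made for illustration only; real formulas may also add, divide, or transform their inputs. Units are represented here as dictionaries mapping base units to exponents, and the function names `combine` and `passes_unit_test` are hypothetical.

```python
def combine(*units):
    """Multiply units together by adding the exponents of their base units."""
    total = {}
    for unit in units:
        for base, exponent in unit.items():
            total[base] = total.get(base, 0) + exponent
    # Drop base units that cancel out completely.
    return {base: exp for base, exp in total.items() if exp != 0}


def passes_unit_test(declared_unit, input_units):
    """Check that the product of the input units equals the declared unit."""
    return combine(*input_units) == declared_unit


# Hypothetical example: intake (ug/day) = concentration (ug/m3) * breathing rate (m3/day)
concentration_unit = {"ug": 1, "m": -3}
breathing_rate_unit = {"m": 3, "day": -1}
intake_unit = {"ug": 1, "day": -1}

ok = passes_unit_test(intake_unit, [concentration_unit, breathing_rate_unit])
mismatch = passes_unit_test({"ug": 1}, [concentration_unit, breathing_rate_unit])
```

In this example `ok` is true because the cubic metres cancel, while `mismatch` is false because the declared unit omits the per-day part, which would signal an incoherent variable definition.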

Method

A method is a systematic procedure for a particular information manipulation process that is needed as part of assessment work. Method is the basic building block for describing the assessment work (not reality, like the other universal objects). In practice, methods are "how-to-do" descriptions of how information should be produced, collected, analysed, or synthesised in an assessment. Some methods can be about managing other methods.

| Attribute | Subattribute | Comments |
|---|---|---|
| Name | | An identifier |
| Scope | | |
| Definition | | |
| Result | | |

References