Open policy practice

In Opasnet, many pages are still being worked on and are in different classes of progression. The information on those pages should therefore be regarded with consideration. The progression class of this page has been assessed:

The content and quality of this page is/was being curated by the project that produced the page.

The quality was last checked: 2016-04-09.

Moderator: Jouni

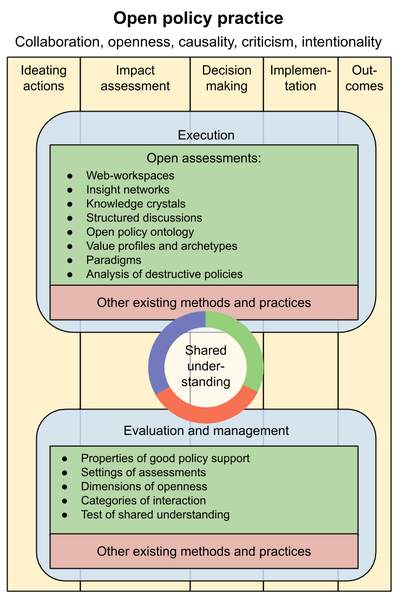

- Open policy practice is a method to support societal decision making in an open society. One part of open policy practice is open assessment, which focuses on producing relevant information for decision making. However, open policy practice is a larger concept and it is especially focused on promoting the use of information in the decision making process.

- This page tells what open policy practice is and how it should be implemented. However, at the moment little is said about why it should be implemented, i.e. why it is a better practice than many others. In the text we just assume that it is. The Rationale section will be improved to answer the why question.

Question

What is the open policy practice method such that it

- applies open assessment as the main knowledge producing process,

- gives practical guidance for the whole decision process from initiation to decision support to actual decision making to implementation and finally to outcomes,

- is applicable to all kinds of societal decision situations in any administrative area or discipline?

Answer

Previous research has found that a major problem of the science-policy interface actually lies in the inability of the current political processes to utilise scientific knowledge in societal decision making (Mikko Pohjola: Assessments are to change the world – Prerequisites to effective environmental health assessment. Doctoral dissertation. THL, 2013. http://urn.fi/URN:ISBN:978-952-245-883-4). This observation has led to the development of pragmatic guidance for closer collaboration between researchers and societal decision making. The guidance is called open policy practice, and it was developed by the National Institute for Health and Welfare (THL) and Nordem Ltd in 2013. The main points of the practice are listed below.

With the word policy we refer to societal decision making, i.e. decision making by authorities, governments, parliaments, municipalities etc. However, in the text we mostly use the term decision making because open policy practice is a method that can be used also in many other kinds of decisions by e.g. private companies and individual citizens. Actually, most policy processes should look at both the decision making by the authorities and the related decision making by companies and citizens. Often there are interactions that either enhance or deteriorate the impact of decisions made by policy makers, and poor policies will be chosen if there is no understanding of these interactions of decisions by different players. Therefore, all kinds of decisions are considered in open policy practice even if it focusses on helping societal decisions.

Insight network

- Main article: Insight network

Insight networks describe complex situations and the causal and other relations between their relevant items.

Knowledge crystal

- Main article: Knowledge crystal

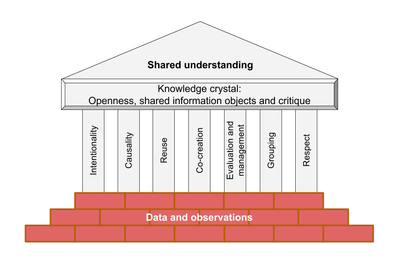

Knowledge crystal is an open web page that uses co-creation to produce an answer to a specific question. The answer is backed up by any relevant data, discussions, and critique that are needed to convince a critical rational reader that the answer is plausible.

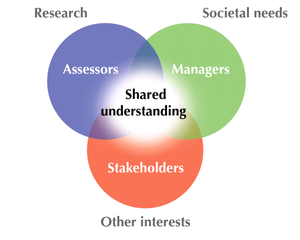

The objective of open policy practice is to produce shared understanding about the decision at hand. Shared understanding means a situation where all participants can understand what decision options are considered, what outcomes are of interest, what objectives are pursued, what facts, opinions, and disagreements exist and why, and finally why a particular decision option was selected. In particular, this shared understanding is written down and shared with everyone. There is no need for people to agree on things.

In a way, shared understanding is the alpha and omega of open policy practice, as it is the starting point and the ultimate goal. To reach the goal, however, more is needed than just the direction. Therefore, the content of the method is divided into four main parts.

- Shared understanding.

- The execution of decision support. It is mostly about collecting, organising and synthesising scientific knowledge and values in order to inform the decision maker to reach her objectives.

- Evaluation and management of the work (of decision support and decision making). It continues all the way through the process. The focus is on evaluating whether the work produces the intended knowledge and helps to reach the objectives. Evaluation and management happen before, during, and after the actual execution to make sure that the right things are done.

- Co-creation skills and facilitation (sometimes known as interactional expertise). It is needed to organise and synthesise the information. This requires specific skills that are typically available neither among experts nor decision makers. It also contains specific practices and methods that may be in wide use in some areas, such as the use of probabilities for describing uncertainties, discussion rules, or quantitative modelling.

This guidance focuses on the decision support part, although the whole chain of decision making, from decision identification to decision support, actual making of the decision, implementation, and finally the outcomes of the decision, is considered during the whole process.

Execution

Six principles

The execution of decision support may take different forms. Currently, the practices of risk assessment, health impact assessment, cost-benefit assessment, or public hearings all fall under this broad part of work. In general, the execution aims to answer these questions: "What would the impacts be if decision option X was chosen, and would that be preferable to, or clearly worse than, impacts of other options?"

In open policy practice, the execution strictly follows six principles: intentionality, shared information objects, causality, critique, openness, and reuse. Each of them is sometimes implemented already today, but so far they have not been implemented systematically together. The Opasnet web-workspace was developed to support all these principles in practical work.

| Principle | Explanation |

|---|---|

| Intentionality | The decision maker explicates her objectives and decision options under consideration. All that is done aims to offer better understanding about impacts of the decision related to the objectives of the decision maker. Thus, the participation of the decision maker in the decision support process is crucial. |

| Causality | The focus is on understanding and describing the causal relations between the decision options and the intended outcomes. The aim is to predict what impacts will occur if a particular decision option is chosen. |

| Critique | All information presented can be criticised based on relevance and accordance with observations. The aim is to reject ideas, hypotheses - and ultimately decision options - that do not hold against criticism. Critique has a central role in the scientific method, and here we apply it in practical situations, because rejecting poor statements is much easier and more efficient than trying to prove statements true. |

| Shared information objects | All information is shared using a systematic structure and a common workspace where all participants can work. Information objects such as assessments, variables, and methods are used for this. The structure of an assessment is based on substance rather than on persons, organisations, or processes (e.g. people should not use personal folders to store data). Objectives determine the information needs, which are then used to define research questions to be answered in the assessment. The assessment work is collaboration aiming to answer these questions in a way that holds against critique. |

| Openness | All work and all information is openly available to anyone interested. Participation is free. If there are exceptions, these must be publicly justified. Openness is crucial because a priori it is impossible to know who may have important information or value judgements about the topic. |

| Reuse | All information is produced in a format that can easily be used for other purposes by other people. Reuse is facilitated by shared information objects and openness, but there are specific requirements of reuse that are not covered by the two previous principles alone. For example, some formats such as PDF files can be open and shared, but for effective reuse better formats such as Opasnet variable pages must be used. |

These principles aim to ensure that the decision support is justified, acceptable, and based on the best available information. Open policy practice helps in collecting expert knowledge, local information, and opinions, so that they are combined according to the needs of decision making. Thus, the implementation brings together

- information pull from decision making and

- information push from decision support (typically in the form of an environmental health assessment).

In other words, open policy practice combines the so-called technical and political dimensions of decision making,[1] which are usually kept separate. During a decision support process, these dimensions have different weights. In the beginning, the objectives and information needs of the decision makers (political dimension) are in focus. When these things are clarified, the focus moves to expert-driven research and thus the technical dimension. Finally, the interpretation of results is mostly about opinions and values and thus within the political dimension.

Examples and clarifications

Intentionality

Intentionality is achieved by recognising the objectives of the decision maker and the information needs arising from the objectives. The information needs are formulated as research questions that are then answered during the decision support process. The objectives and questions can also be openly discussed and criticised. In open assessment, this work produces the attributes for the assessment scope: question, intended users and use, participants, boundaries, decisions and scenarios, and timing. In assessments for societal decision support, the questions are typically about planned decision options and objectives that are desired outcomes of these decisions. However, scoping an assessment may be difficult, and it could reveal information that the decision maker would like to keep to herself for tactical or other reasons. Therefore, mutual trust and commitment to an open, organised process are important.

Causality

Causal relations form the backbone of an assessment. The topics of interest are causally linked to decision options and the outcomes of interest. An assessment describes these links and the impacts of different actions by using a network of variables with quantitative, causal connections. Typically, variables are described as separate pages in Opasnet, and the assessment page contains links to those pages. For example, see a typical assessment Climate change policies and health in Kuopio.
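
The idea of a network of variables with quantitative, causal connections can be illustrated with a minimal Python sketch. Every variable name, number, and formula below is a hypothetical assumption made up for illustration; none of it comes from an actual Opasnet assessment, and it does not show how Opasnet itself implements variables.

```python
# Hypothetical causal chain: decision option -> emissions -> exposure -> health impact.
# All names and numbers are illustrative assumptions, not real assessment data.

def emissions(option):
    # Upstream variable: assumed annual emissions under each decision option.
    return {"business_as_usual": 100.0, "district_heating": 60.0}[option]

def exposure(option):
    # Exposure depends causally on emissions (assumed linear factor).
    return 0.05 * emissions(option)

def health_impact(option):
    # The outcome of interest depends causally on exposure (assumed linear factor).
    return 2.0 * exposure(option)

def compare_options(options):
    # Evaluate the outcome variable for every decision option under consideration.
    return {option: health_impact(option) for option in options}

impacts = compare_options(["business_as_usual", "district_heating"])
# The option with the smallest predicted impact, given these assumed relations.
best_option = min(impacts, key=impacts.get)
```

Because each variable is a separate function that only refers to its upstream variables, any single link can be criticised and replaced without rewriting the rest of the network.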

Critique

In the execution, all presented statements (scientific or moral claims about how things are or should be, respectively) are considered valid, i.e. potentially true, until proven otherwise. A successful critique may only be based on two criteria: showing that the statement is irrelevant in its context (relevance) or incompatible with observations (evidence). This approach requires that participants are willing to reveal their information and also justify and defend their statements. Quantitative statements are used when possible, because they enable quantitative critique, which is more precise and efficient than non-quantitative critique. It is recommended that uncertainties are described explicitly by using probabilities. When a statement is invalidated, it stops affecting the results and conclusions of the assessment, but it is still kept visible to prevent repetition of the same invalid statements. Instead of shouting or harping, the participants are encouraged to try to invalidate the critique against their statement, if they want to have the statement back in the assessment.
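
The bookkeeping described above can be sketched in Python as follows. This is only an illustration under stated assumptions: the class names, fields, and example statements are hypothetical and are not part of Opasnet or of any formal argumentation tool.

```python
from dataclasses import dataclass, field


@dataclass
class Critique:
    criterion: str          # "relevance" or "evidence", the two allowed grounds
    argument: str
    defeated: bool = False  # set to True if the critique itself is invalidated


@dataclass
class Statement:
    text: str
    critiques: list = field(default_factory=list)

    def criticise(self, criterion, argument):
        # Only the two criteria named in the text are accepted.
        if criterion not in ("relevance", "evidence"):
            raise ValueError("critique must be based on relevance or evidence")
        self.critiques.append(Critique(criterion, argument))

    @property
    def valid(self):
        # Valid until criticised; valid again once every critique is defeated.
        return all(c.defeated for c in self.critiques)


def active_statements(statements):
    # Only valid statements affect the results; the full list stays visible.
    return [s for s in statements if s.valid]


# Hypothetical example statements, made up for illustration.
s1 = Statement("Option A reduces emissions more than option B.")
s2 = Statement("Emissions have no effect on exposure.")
s2.criticise("evidence", "Measured exposure follows measured emissions.")
statements = [s1, s2]
active = active_statements(statements)
```

Here `statements` still holds both entries, so the invalidated statement remains on record, while `active` contains only the statement that currently holds against critique.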

Shared information objects

Shared understanding is best achieved if all information is shared with everyone in a systematic, practical, and predictable form. The form should facilitate learning of important issues and understanding of causal connections. An important feature is that there is a shared workspace so that everyone participates in developing a shared description rather than separate reports or memorandums. An open assessment organises the participants to collaborate and produce useful information that is relevant and organised according to the needs of the decision making. The information structure of Opasnet is designed to promote this with its wiki, database, modelling functionalities, and standardised information objects such as assessments, variables, and methods.

Openness

In the execution, all information is openly shared and no contribution is a priori prevented: all statements, arguments, and opinions are welcome. There are several reasons for this. First, openness builds trust among participants and outside observers. Second, it is an efficient way to ensure that all relevant issues are raised and handled properly. Third, it facilitates learning when all information is easily available to everyone. However, this creates challenges for the collection and synthesis of contributions. The six principles help with many of the challenges, but there is also a need for specific work to convert contributions, whatever their original format, into the standardised structure. Such work is called co-creation and facilitation, and it is described in more detail below. One part of the work is to organise free-format comments and discussions into structured discussions according to pragma-dialectic argumentation theory. In Opasnet, there are also tools designed to help open online work, such as talk pages, comment boxes, or evaluation polls.

Reuse

The information produced should be easily available for learning and further use. This places some requirements on the format. Data must be machine-readable and in a standardised structure. Information must be organised in a modular way so that e.g. a large assessment can be split into small independent parts (in practice variables) that are reusable in different contexts. And of course information must be available whenever and wherever it is needed. In Opasnet, variables are independent objects that are described both in a human-readable format on a wiki page and in a machine-readable format in the database or the open modelling environment. These two formats are tightly linked together, and the former part is actually metadata for the latter. The standardised structure of question-answer-rationale facilitates the production and interpretation of these objects and thus their reuse.
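
As a rough illustration of why a standardised, machine-readable structure helps reuse, the Python sketch below stores a question-answer-rationale object as JSON and reads it back. The class name, field layout, and example content are assumptions made for this sketch; they do not describe the actual Opasnet database schema.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class KnowledgeObject:
    """Hypothetical question-answer-rationale object (not the Opasnet schema)."""
    question: str
    answer: list     # machine-readable rows: index columns plus a result column
    rationale: str   # human-readable justification of the answer

    def to_json(self):
        # Serialise to a standard text format that other tools can read.
        return json.dumps(asdict(self), ensure_ascii=False, sort_keys=True)

    @classmethod
    def from_json(cls, text):
        # Rebuild the object so that it can be reused in another context.
        return cls(**json.loads(text))


# Made-up content, used only to show the structure.
variable = KnowledgeObject(
    question="What are the annual emissions under each option? (illustrative)",
    answer=[
        {"Option": "business_as_usual", "Result": 100.0},
        {"Option": "district_heating", "Result": 60.0},
    ],
    rationale="Invented numbers for demonstration only.",
)
restored = KnowledgeObject.from_json(variable.to_json())
```

The round trip through `to_json` and `from_json` loses nothing, which is the property that lets another assessment pick up the same object unchanged.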

Evaluation and management

In many work processes, the quality of the output is achieved by extensive training of the workers who have their distinct roles and tasks. In open policy practice, there must be other means to ensure quality, because participation is free and most participants are untrained for their tasks. The solution is to have clear quality criteria that can and should be used in all phases of the work: from the planning to the making of an assessment during decision support to decision to implementation and finally to evaluation of the outcomes. These quality criteria are used to design and adjust the work process toward its objectives. The criteria are based on an understanding of the properties of good decision support (Table 2.), which is then elaborated in more detail for some aspects (Tables 3. - 6.).

Although many participants are untrained in such a process, it is important that there are also people who are familiar with the methods of execution and evaluation and management. In other words, they must have the co-creation skills and they must be able to facilitate the work of others. These skills are described in more detail below, while this section describes the criteria that should be used.

Properties of good decision support

- Main article: Properties of good assessment.

Understanding the properties of good decision support is the core of evaluation and management in open policy practice. These properties have been developed through extensive theoretical work, literature review, and practical testing. Fulfilling all these criteria is of course not a guarantee that the outcomes of the decision will be a success. However, we have analysed several cases of problematic decision making processes. In every case, some of the properties of good decision support were not fulfilled, and specific properties could be linked to particular problems observed. In other words, evaluating these properties made it possible to analyse what exactly went wrong with the decision making process. The good news is that this evaluation could have been done before or during the process, and the problems with specific properties would have been visible already then. Thus, using this evaluation scheme proactively makes it possible to manage the decision making process toward higher quality of content, applicability, and efficiency.

| Category | Description | Guiding questions | Suggestions by open policy practice |

|---|---|---|---|

| Quality of content | Specificity, exactness and correctness of information. Correspondence between questions and answers. | How exact and specific are the ideas in the assessment? How completely does the (expected) answer address the assessment question? Are all important aspects addressed? Is there something unnecessary? | Work openly, invite criticism (see Table 1.) |

| Applicability | Relevance: Correspondence between output and its intended use. | How well does the assessment address the intended needs of the users? Is the assessment question good in relation to the purpose of the assessment? | Characterize the setting (see Table 3.) |

| Applicability | Availability: Accessibility of the output to users in terms of e.g. time, location, extent of information, extent of users. | Is the information provided by the assessment (or would it be) available when, where and to whom it is needed? | Work online using e.g. Opasnet. For evaluation, see Table 4. |

| Applicability | Usability: Potential of the information in the output to generate understanding among its user(s) about the topic of assessment. | Would the intended users be able to understand what the assessment is about? Would the assessment be useful for them? | Invite participation from the problem owner and user groups early on (see Table 5.) |

| Applicability | Acceptability: Potential of the output being accepted by its users. Fundamentally a matter of its making and delivery, not its information content. | Would the assessment (both its expected results and the way the assessment is planned to be made) be acceptable to the intended users? | Use the test of shared understanding (see Table 6.) |

| Efficiency | Resource expenditure of producing the assessment output either in one assessment or in a series of assessments. | How much effort would be needed for making the assessment? Would it be worth spending the effort, considering the expected results and their applicability for the intended users? Would the assessment results be useful also in some other use? | Use shared information objects with open license, e.g. Ovariables. |

Settings of assessments

- Main article: Assessment of impacts to environment and health in influencing manufacturing and public policy.

All too often a decision making process or an assessment is launched without a clear understanding of what should be done and why. An assessment may even be launched hoping that it will somehow reveal what the objectives or other important things are. Settings of assessments tries to help in explicating these things so that useful decision support can be provided. The scope attribute of an assessment guides the participants to clarify the setting.

| Attribute | Example categories | Guiding questions |

|---|---|---|

| Impacts | | |

| Causes | | |

| Problem owner | | |

| Target users | | |

| Interaction | | |

Dimensions of openness

In open assessment, the method itself is designed to facilitate openness in all its dimensions. The dimensions of openness help to identify if and how the work deviates from the ideal of openness (the fifth principle in execution), so that the work can be improved in this respect. However, it is important to notice that currently there is a large fraction of decision makers and experts who are not comfortable with open practices and openness as a key principle, and they would like to have a closed process. Dimensions of openness does not give direct tools to convince them. But it identifies issues where openness makes a difference and increases understanding about why there is a difference. This hopefully also leads to wider acceptance of openness.

| Dimension | Description |

|---|---|

| Scope of participation | Who is allowed to participate in the process? |

| Access to information | What information about the issue is made available to participants? |

| Timing of openness | When are participants invited or allowed to participate? |

| Scope of contribution | To which aspects of the issue are participants invited or allowed to contribute? |

| Impact of contribution | How much are participant contributions allowed to have influence on the outcomes? In other words, how much weight is given to participant contributions? |

One obstacle for effectively addressing the issue of effective participation may be the concept of participation itself. As long as the discourse focuses on participation, one is easily misled to considering it as an independent entity with purposes, goals and values in itself, without explicitly relating it to the broader context of the processes whose purposes it is intended to serve. The conceptual framework we call the dimensions of openness attempts to overcome this obstacle by considering the issue of effective participation in terms of openness in the processes of assessment and decision making.

The framework bears resemblance e.g. to the criteria for evaluating implementation of the Aarhus Convention principles by Hartley and Wood [4], the categories to distinguish a discrete set of public and stakeholder engagement options by Burgess and Clark [5], and particularly the seven categories of principles of public participation by Webler and Tuler [6]. However, whereas they were constructed for the use of evaluating or describing existing participatory practices or designs, the dimensions of openness framework is explicitly and particularly intended to be used as a checklist type guidance to support design and management of participatory assessment and decision making processes.

The perspective adopted in the framework can be characterized as contentual because it primarily focuses on the issue in consideration and on describing the prerequisites to influencing it, instead of being confined to only considering techniques and manoeuvres to execute participation events. Thereby it helps participatory assessment and decision making processes to achieve their objectives and, on the other hand, helps to provide possibilities for meaningful and effective participation. The framework does not, however, tell how participation should be arranged, but rests on the existing and continually developing knowledge base on participatory models and techniques.

While all dimensions contribute to the overall openness, it is the fifth dimension, the impact of contribution, which ultimately determines the effect on the outcome. Accordingly, it is recommended that aspects of openness in assessment and decision making processes are considered step-by-step, following the order as presented above.

Categories of interaction

- Table originally from Decision analysis and risk management 2013/Homework.

The situation with interaction is similar to openness: the open policy practice method itself facilitates shared interaction, so that the default process leads to good practices. However, many current practices are against this thinking, and arguments are needed to defend such close interaction between all participants.

| Category | Explanation |

|---|---|

| Isolated | Assessment and use of assessment results are strictly separated. Results are provided to intended use, but users and stakeholders shall not interfere with making of the assessment. |

| Informing | Assessments are designed and conducted according to specified needs of intended use. Users and limited groups of stakeholders may have a minor role in providing information to assessment, but mainly serve as recipients of assessment results. |

| Participatory | Broader inclusion of participants is emphasized. Participation is, however, treated as an add-on alongside the actual processes of assessment and/or use of assessment results. |

| Joint | Involvement of and exchange of summary-level information among multiple actors in scoping, management, communication and follow-up of assessment. On the level of assessment practice, actions by different actors in different roles (assessor, manager, stakeholder) remain separate. |

| Shared | Different actors involved in assessment retain their roles and responsibilities, but engage in open collaboration upon determining assessment questions to address and finding answers to them as well as implementing them in practice. |

Acceptability

- Main article: Shared understanding.

Acceptability can be measured with a test of shared understanding. In a decision situation there is shared understanding when all participants of the decision support or decision making process give positive answers to the following questions. Because shared understanding about the decision situation is the ultimate goal of the decision support process, the test of shared understanding can also be viewed as an ultimate measure of the success of the process.

| Question | Who is asked? |

|---|---|

| Is all relevant and important information described? | All participants of the decision support or decision making processes. |

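| Are all relevant and important value judgements described? | All participants of the decision support or decision making processes. |

| Are the decision maker's decision criteria described? | All participants of the decision support or decision making processes. |

| Is the decision maker's rationale from the criteria to the decision described? | All participants of the decision support or decision making processes. |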
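
The test of shared understanding can be expressed as a simple check. The Python sketch below assumes that each participant's replies are collected as four yes/no values, one per question in the table; the function name, the input format, and the participant labels are hypothetical choices made for illustration.

```python
QUESTIONS = [
    "Is all relevant and important information described?",
    "Are all relevant and important value judgements described?",
    "Are the decision maker's decision criteria described?",
    "Is the decision maker's rationale from the criteria to the decision described?",
]


def shared_understanding(answers):
    """Check the test for all participants.

    answers maps a participant label to a list of four booleans,
    one per entry in QUESTIONS (an assumed input format).
    Returns (passed, gaps), where gaps lists every negative answer.
    """
    gaps = [
        (participant, QUESTIONS[i])
        for participant, replies in answers.items()
        for i, reply in enumerate(replies)
        if not reply
    ]
    return len(gaps) == 0, gaps


# Hypothetical replies from two participants.
passed, gaps = shared_understanding({
    "expert": [True, True, True, True],
    "citizen": [True, False, True, True],
})
```

Returning the list of gaps alongside the overall result shows where the description of the decision situation still needs work, not only whether the test passed.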

Co-creation skills and facilitation

Co-creation skills and facilitation (sometimes also called interactional expertise) is a particular set of capabilities needed in open policy practice. The established capabilities of typical participant groups, such as specific expertise, administrative skills, or citizen activism skills, are not enough, separately or even combined, to handle all the tasks of creating collaboration, information objects, or shared understanding. Co-creation skills are needed to manage the decision making process to produce good, informed decisions and ultimately good outcomes. Co-creation skills and facilitation is often the rate-limiting step of these improved practices, and therefore it warrants special emphasis in open policy practice.

Co-creation skills and facilitation is not a method of its own but rather a collection of skills that are needed to execute and manage an open decision process in practice. It includes many practices and methods that are in common use, while some are novel and developed specifically for open policy practice. There is already practical experience about implementing open policy practice in several different decision situations and assessments. Some of the skills that have been found important are briefly described below. They can be divided into four categories: encouragement, synthesis, open data, and modelling. The University of Eastern Finland organises a course, Decision analysis and risk management, every two years. This course covers, at least superficially, most of the issues mentioned below.

There must be enough co-creation skills among the participants of a decision process. Often it may be necessary to hire these capabilities from outside as an expert service. In fact, these co-creation skills can be considered a new area of expertise.

Encouragement

Encouragement includes skills to help people participate in a decision process, produce useful information, and learn from others.

- Increasing participation by convincing that openness does not prevent but enhances expertise.

- Increasing participation by convincing that openness does not cause chaos.

- Helping participants to focus on expressing their own knowledge and interests.

- Increasing participation by helping participants with unfamiliar tools or letting them use their own tools and then translating the information into the workspace.

- Organising discussions around specific statements that are both interesting and important to the users and relevant for the assessment.

- Seeing how the information and statements connect to the causal network of the assessment.

- Maintaining operative capacity so that there are enough people to wikify contributions, build models, and participate in other ways.

- Maintaining the operative capacity over the life span of a decision process so that also the implementation and outcomes are evaluated and managed properly.

- Creating means for online participation such as online questionnaires and comment boxes. See R-tools and op_fi:Kommentointityökalu.

- Organising public hearings, expert meetings, decision maker panels, and other traditional ways of collecting and distributing information among participants.

- Encouraging people to feel ownership about the web-workspace so that they would dare and want to maintain and improve it.

Synthesis

Synthesis includes skills that are needed to synthesise the information obtained into a more structured and useful format (discussions, variable pages, and nuggets).

- Communicating with experts, understanding expert contributions and knowing how to include them into the synthesis (skills of a good generalist).

- Understanding the big picture of the assessment content and identifying important missing pieces.

- Producing and converting information into practical textual formats within the web-workspace (also known as "wikifying" texts). See e.g. Help:Wiki editing.

- Organising comments and discussions into structured discussions using pragma-dialectic argumentation theory.

- Guiding the information collection and synthesis tasks based on understanding about what information objects are useful for the assessment and what approaches do not work.

- Using the main object structure (question, answer, and rationale) to clarify issues and guide further assessment work.

- Searching scientific literature, administrative reports, online discussions, and other sources of relevant information.

- Creating information objects from existing material.

- Using links, categories, templates and other wiki tools to make the new information objects efficient and easily available pieces of information for reuse.

Open data

Open data includes skills to convert data into machine-readable formats so that the data can be read into assessment models as meaningful variables.

- Converting existing data into a correct format with indices and result column(s).

- Uploading data to the Opasnet Base using Table2Base tables or OpasnetBaseImport.

- Uploading files to the Opasnet wiki to make them available to users and to models.

- Effectively using the different ways of entering data into ovariables: data, ddata, and dependencies+formula.

- Effectively using the data sources that are embedded in the OpasnetUtils package, such as Sorvi and the connection to Statistics Finland.

- Creating and saving ovariables to the Opasnet server with data produced by the means mentioned above.

- Creating an rcode to fetch ovariables from the server for plotting, printing, and modelling.
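
As an illustration of the first skill in the list above, the sketch below converts a wide table into a long format in which every row has index columns and a single result column. It is written in plain Python and does not call Opasnet, Table2Base, or OpasnetUtils; the function name wide_to_long, the column names, and the numbers are invented for this example.

```python
import csv
import io
from typing import Dict, List

# Invented example data: two index columns and two measurement columns.
WIDE_CSV = """Area,Year,PM2.5,NO2
A,2010,8.5,21.0
B,2010,6.0,15.5
"""


def wide_to_long(
    rows: List[Dict[str, str]],
    index_cols: List[str],
    observation_name: str = "Observation",
    result_name: str = "Result",
) -> List[Dict[str, object]]:
    """Return one row per (index combination, measurement column)."""
    long_rows = []
    for row in rows:
        for column, value in row.items():
            # Index columns are copied to each row; empty cells are skipped.
            if column in index_cols or value in ("", None):
                continue
            record: Dict[str, object] = {name: row[name] for name in index_cols}
            record[observation_name] = column  # former column header becomes an index
            record[result_name] = float(value)  # the single numeric result column
            long_rows.append(record)
    return long_rows


wide_rows = list(csv.DictReader(io.StringIO(WIDE_CSV)))
long_rows = wide_to_long(wide_rows, ["Area", "Year"], observation_name="Pollutant")
for record in long_rows:
    print(record)
```

In the long format the former column headers become values of an additional index, so a model can slice or merge the data by any index without knowing the original table layout.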

Modelling

Modelling includes skills for developing actual assessment models based on generic methods and case-specific data.

- Using probabilities to describe uncertainties.

- Developing models based on ovariables. Running the models from an Opasnet page or from one's own computer. See Modelling in Opasnet.

- Adding user interfaces to models on Opasnet by using the R-tools functionality.

- Incorporating statements from discussions into models by making explicit links to particular ovariables (i.e. making an index of a statement and explicating how different hypotheses of the statement would change the variable result).

- Using methods and other universal objects for modular working.

- Applying the Health impact assessment module to perform health impact assessments.

- Knowing when to use generic and when case-specific objects.

- Adjusting generic objects for case-specific use.

- Permanently storing important model runs by making PDF files or uploading result figures to the assessment page.
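
Two of the skills above, using probabilities to describe uncertainties and turning the hypotheses of a statement into an index, can be sketched with a small Monte Carlo simulation. This is a plain Python illustration under stated assumptions, not the ovariable implementation in OpasnetUtils: the function names, the toy model (exposure times slope times population), the distributions, and all numbers are invented.

```python
import random
import statistics
from typing import Dict, List, Tuple


def simulate(
    slope_ranges: Dict[str, Tuple[float, float]],
    exposure_mean: float = 10.0,
    exposure_sd: float = 2.0,
    population: int = 1000,
    n_iter: int = 2000,
    seed: int = 1,
) -> Dict[str, List[float]]:
    """Return Monte Carlo samples of the result for each hypothesis.

    The keys of slope_ranges act as an index: each hypothesis of the
    statement gets its own location in the result.
    """
    rng = random.Random(seed)  # fixed seed so that a run can be repeated
    results: Dict[str, List[float]] = {}
    for hypothesis, (low, high) in slope_ranges.items():
        samples = []
        for _ in range(n_iter):
            # Uncertain exposure, truncated at zero (assumed distribution).
            exposure = max(0.0, rng.gauss(exposure_mean, exposure_sd))
            # Uncertain exposure-response slope under this hypothesis.
            slope = rng.uniform(low, high)
            samples.append(exposure * slope * population)
        results[hypothesis] = samples
    return results


def summarise(samples: List[float]) -> Dict[str, float]:
    """Mean and an approximate 90 % interval of the samples."""
    ordered = sorted(samples)
    n = len(ordered)
    return {
        "mean": statistics.mean(ordered),
        "p05": ordered[int(0.05 * (n - 1))],
        "p95": ordered[int(0.95 * (n - 1))],
    }


# Hypotheses of the statement "the exposure causes the health effect".
hypotheses = {"no effect": (0.0, 0.0), "effect": (0.001, 0.003)}
for name, samples in simulate(hypotheses).items():
    print(name, summarise(samples))
```

Keeping the hypotheses as an index rather than choosing one of them in advance lets the same model run show how much the disputed statement actually changes the result.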

Web-workspaces supporting open policy practice

This is not a category of skills, but a brief description of web-workspaces that can help in implementing these skills in practice. In fact, it is hard to imagine how open policy practice could be implemented without relying heavily on such workspaces.

There are two web-workspaces that directly support open policy practice:

- Opasnet, which is especially designed for decision support,

- Innovillage, which is especially designed for improvement, evaluation, and management of decision practices.

Opasnet is specifically designed to implement the functionalities and requirements of open policy practice. Opasnet supports A) the creation of assessment questions for decision support, B) the work to find answers to the questions, and C) the interpretation of the answers for practical decision needs. As a workspace for decision support, it combines the political dimension (interests, opinions, local information; mainly parts A and C) and the technical dimension (expert knowledge; mainly part B) into a single knowledge creation process.

The Innovillage development workspace helps in the development, evaluation, and management of decision practices. This includes all phases of a decision process: preparation, actual decisions, implementation, and evaluation of outcomes. The framework evaluates each phase with respect to all the phases that follow it.

Rationale

Established decision processes work reasonably well for the aspects they were designed for. For example, those who perform environmental impact assessments can organise public hearings and include stakeholder views. A city transport department is capable of designing streets to reduce congestion or negotiating subsidies for public transport with bus companies. This is their job and they know how to do it.

But including openly produced knowledge about e.g. health impacts in these processes is another matter. A city transport department has neither the resources nor the capability to assess health impacts. It is not enough that researchers know how to do it or even that most of the information needed already exists. The motivation, expertise, relevant data, and resources must meet in practice before any health assessment is done and included in a decision making process.

This is the critical question: how to make all these meet in practical situations? It should even be so easy that it becomes a routine and a default rather than an exception. The Opasnet website was developed to enable the kind of work described above (http://en.opasnet.org). On the Opasnet web-workspace, several environmental health assessments have been performed using probabilistic models that are open, including their source code, to the last detail. Tools have also been developed there for making routine health and other impact assessments in such a way that all generic data and knowledge is already embedded in the tool, and the decision maker or expert only has to add case-specific data to make the assessment model run.

Most of the technical problems have been solved, so it is possible to start and perform new assessments as needed. A lot of work has been done to develop tools for open assessment. There are also several example assessments available for learning and reuse.

However, we have also identified urgent development needs.

First, the proposed practice would change many established practices in both decision making and expert work. We have found it very difficult to convince people to try the new approach. It is clearly more time consuming in the beginning because there are a lot of new things to learn, compared with practices that are already routine. In addition, many people have serious doubts about whether the practice could work in reality. The most common arguments are that open participation would cause chaos; that learning the workspace with shared information objects is not worth the trouble; that the authority of expertise (or experts) would decline; and that new practices are of little interest to experts as long as a decent assessment report is produced.

Second, there are many development needs in co-creation and facilitation. There is theoretical understanding of how assessments should be done using shared information objects, and there is already practical experience of doing so. But the number of people who already have training in co-creation and facilitation is very small. The skills needed are very diverse. All of the information collected is expected to be transformed into a shared description that is in accordance with causality, critique, openness, and other principles listed above. Research, practical exercise, and training are needed to learn and teach co-creation and facilitation.

Third, the decision making process must be developed in such a way that it supports such assessments and is capable of including their results in the final comparison of decision options. The decision making process itself must also be evaluated. These ideas are critical because the need for them is not yet well understood in society. Researchers cannot solve this by themselves; they have to collaborate closely with decision makers. The good news is that demand for evidence-based decision making has recently been increasing. Now is a good time to show that open policy practice is an excellent way to produce evidence-based decisions. Making effective changes in decision making requires more than producing an openly created knowledge-base to support it: the practices of decision making themselves need to be revised.

Open policy practice can be considered an attempt to solve the problem of managing natural and social complexity in societal decision making. It takes a broad view of decision making, covering the whole chain from obtaining the knowledge-base that supports decisions to the societal outcomes of decisions.

Within the whole, there are two major aspects that the framework emphasises. The first is the process of creating the knowledge that forms the basis of decisions. In the view of the framework, decision support covers both a technical dimension and a political dimension [7]. Here the technical dimension refers to the expert knowledge and systematic analyses conducted by experts to provide information on the issues addressed in decision making. The political dimension refers to the discussions in which the needs and views of different societal actors are addressed and where the practical meanings of expert knowledge are interpreted. This approach is in line with the principles of open policy practice described above.

The second major aspect is the top-level view of evaluating decisions. The evaluation covers all parts of the overall decision making process: decision support, decisions, implementation of decisions, and outcomes. In line with the principles of the relational evaluation approach (REA) applied in Innovillage, the evaluation covers the phases of design, execution, and follow-up of each part. When this evaluation is done for each part independently as well as in relation to the other parts of the chain from knowledge to outcomes, all parts of the chain are evaluated from four perspectives: process, product, use, and interaction (cf. Pohjola 2001?, 2006?, 2007?).

See also

- Maijaliisa Junnila & Sakari Hänninen & Reijo Väärälä (2013): Säädösehdotusten vaikutusten ennakkoarviointi THL:ssä [Impact assessment of legislation in THL] (password-protected)

- Open science and research roadmap 2014–2017

- Open assessment

- Properties of good assessment

- Participating in assessments

- Portal:Open assessments

- op_fi:Avoin päätöksentekokäytäntö

- Pragmatic knowledge services

- State of the art in benefit–risk analysis: Consumer perception

- State of the art in benefit–risk analysis: Food and nutrition

- State of the art in benefit–risk analysis: Environmental health

- Openness in participation, assessment, and policy making upon issues of environment and environmental health: a review of literature and recent project results

- Facilitating mass collaboration in assessments

References

- CITATION NEEDED!

- Mikko V. Pohjola. (2015?) Assessment of impacts to health, safety, and environment in the context of materials processing and related public policy. Comprehensive Materials Processing, Volume 8. Health, Safety and Environmental issues (00814)

- Mikko V. Pohjola and Jouni T. Tuomisto: Openness in participation, assessment, and policy making upon issues of environment and environmental health: a review of literature and recent project results. Environmental Health 2011, 10:58 http://www.ehjournal.net/content/10/1/58.

- Hartley N, Wood C: Public participation in environmental impact assessment - implementing the Aarhus Convention. Environmental Impact Assessment Review 2005, 25:319-340.

- Burgess J, Clark J: Evaluating public and stakeholder engagement strategies in environmental governance. In Interfaces between science and society. Edited by: Guimarães Pereira A, Guedes Vaz S, Tognetti S. Sheffield: Greenleaf Publishing; 2006:225-252.

- Webler T, Tuler S: Fairness and Competence in Citizen Participation - Theoretical Reflections From a Case Study. Administration & Society 2000, 32:566-595.

- Collins H, Evans R. Rethinking expertise. The University of Chicago Press, Chicago, 2007.