From open assessment to shared understanding: practical experiences


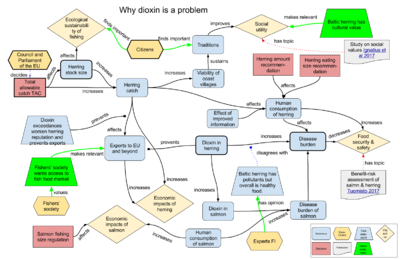

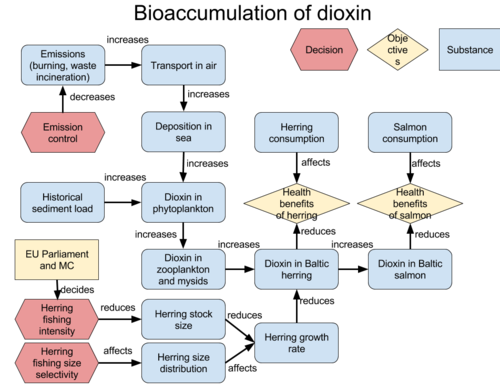

Figure 1. Extended causal diagram about dioxins, Baltic fish, and health as described in the BONUS GOHERR project[11]. Decisions are shown as red hexagons, decision makers and stakeholders as yellow rectangles, decision objectives as yellow diamonds, and substantive issues as blue round-cornered rectangles. The relations are written on the diagram as predicates of sentences in which the subject is at the tail of the arrow and the object is at its tip. For other extended causal diagrams about the same topic, see Appendix 1.



Revision as of 12:57, 29 January 2018

This page is a nugget. The page identifier is Op_en7836. Moderator: Jouni.

Unlike most other pages in Opasnet, nuggets have predetermined authors, and their contents cannot be freely edited.

From open assessment to shared understanding: practical experiences is a manuscript of a scientific article. Its main purpose is to offer a comprehensive summary of the methods developed at THL/environmental health to support informed societal decision making, and to evaluate their use and usability in practical examples in 2006-2017. The manuscript is to be submitted to BMC Public Health as a technical advance article [1].

Title page

- Title: From open assessment to shared understanding: practical experiences

- List full names, institutional addresses and emails for all authors

- Jouni T. Tuomisto¹, Mikko Pohjola², Arja Asikainen¹, Päivi Meriläinen¹, Teemu Rintala³

- ¹ National Institute for Health and Welfare, P.O. Box 95, 70701 Kuopio, Finland

- ² Santasport, Rovaniemi, Finland

- ³ Aalto University, Espoo, Finland

- emails: jouni.tuomisto[]thl.fi, mikko.pohjola[]santasport.fi, arja.asikainen[]thl.fi, paivi.merilainen[]thl.fi, teemu.rintala.a[]gmail.com

- Corresponding author: Jouni Tuomisto


TO DO

- Make a coherent ontology in Opasnet: Each term in the ontology should be found in Opasnet with a description. In addition, a specific link should be available to mean the object itself rather than its description. http://en.opasnet.org/entity/...? Make clear what things should link to Opasnet and what to Wikibase (if we get one running soon)

- Describe OPP using the new ontology and make a new graph out of that. Is this possible/necessary?

- All terms and principles should be described in Opasnet in their own pages. Use italics to refer to these pages.

- Upload the Tuomisto 1999 thesis and Paakkila 1999 to Julkari.

Letter to the Editor

Abstract

Background

Evidence-based decision making and better use of scientific information in societal decisions have been areas of development for decades but are still topical. Decision support work can be viewed from the perspective of information collection, synthesis, and flow between decision makers, experts, and stakeholders.

Methods

We give an overview of the open policy practice method, which has been developed in the National Institute for Health and Welfare for more than a decade, and describe practical experiences from its use. Open assessments are online collaborative efforts to produce information for decision makers by utilising e.g. quantitative models, structured discussions, and knowledge crystals. A knowledge crystal is a web page that has a specific research question and an answer that is continually updated based on all available information. Shared understanding is used to motivate decision makers and stakeholders to engage in common dialogue and to inform them about conclusions and remaining disagreements.

Results

Technically, the methods and online tools work as expected, as demonstrated by the numerous assessments and policy support processes conducted. The approach improves the availability of information, especially of details. Experts are ambivalent about the acceptability of openness: it is an important scientific principle, but it goes against many current publishing practices. However, co-creation and openness are megatrends that are changing decision making and society at large. Contrary to many experts' fears, open participation has not caused problems in performing high-quality assessments. Instead, a key problem is motivating more people, including experts and decision makers, to participate and share their views.

Conclusions

Shared understanding has proved to be a useful concept that guides policy processes toward a more collaborative approach, whose purpose is wider understanding rather than winning. There is potential for merging open policy practice with other open science and open decision process tools. Active facilitation, community building, and improving the user-friendliness of the tools were identified as key solutions for improving the usability of the method in the future.

- Keywords

- environmental health, decision support, open assessment, open policy practice, shared understanding, modelling, online tools, policy, method development, evaluation

Background

In this article, we describe and evaluate open policy practice, a set of methods and tools for improving science-based policy making. They have been developed in the National Institute for Health and Welfare (THL, located in Finland) for more than 16 years, especially to improve environmental health assessmentsᵃ.

Science-based decision support has been a hot and evolving topic for a long time, and its importance is not diminishing any time soon. The area is complex, and the key players (decision makers, experts, and citizens or other stakeholders) all have different views on the process, their own roles in it, and how information should be used in it. For example, researchers often think of information as a way to find the truth, while politicians see information as one of the tools to promote political agendas ultimately based on values.[1] Therefore, any successful method should provide functionalities for each of the key groups.

In the 1970s, especially in the US, the focus was on scientific knowledge and on the idea that political ambitions should be separated from objective assessments. Since the 1980s, risk assessment has been a key method to assess human risks of environmental and occupational chemicals[2]. The National Research Council specifically developed a process that could be used by all US federal agencies. Although it was generic in this sense, it typically focussed on a single chemical at a time and thus provided guidance for administrative permits but less so for complex policy issues.

This shortcoming was tackled in another report that acknowledged this complexity and offered deliberation with stakeholders, in addition to scientific analysis, as a solution[3]. However, despite these intentions, it has proven difficult to perform deliberation successfully on a routine basis in practical assessments[4]. Indeed, citizens often complain that even if they have been formally heard during a process, they have not been listened to, and their concerns have not affected the decisions made.

In the early 2000s, several important books and articles were published about mass collaboration[5], the wisdom of crowds[6], crowdsourcing in government[7], and co-creation[8]. A common idea of the authors was that voluntary, self-organised groups had knowledge and capabilities that could be harnessed much more effectively in society than was happening at the time.

These ideas were seen as potentially important for environmental health assessment in THL (at that time the National Public Health Institute, KTL), and they were adopted in the work of the Centre of Excellence for Environmental Health Risk Analysis (2002-2007). A technical milestone was achieved in January 2006 when we launched our own wiki site, Opasnet, for environmental health assessments, inspired by the success of Wikipedia. This enabled us to intertwine theoretical and practical work to improve assessment methods and to test openness and co-creation as elementary parts of previously closed expert work[9]. This research soon led to a summary report about the new methods and tools developed to facilitate assessments[10].

The main idea of our approach was to facilitate both scientific work on policy-related facts and policy support for finding out what could and should be done and why. We identified three critical needs for development in the scientific enterprise, namely a) data sharing, b) criticism, and c) a common platform for discussion, data collection, and modelling. For decision making, we identified needs to better explicate a) decision makers' values and objectives, b) connections between scientific and other relevant issues, and c) disagreements between individuals and their potential resolutions. Decision making was essentially seen as an art of balancing knowledge with values in a coherent and explicit way.

In this article, we give the first comprehensive, peer-reviewed description of the methodology and tools of open policy practice. Case studies have been published along the way, and the key methods have been described in each article. Also, all methods and tools have been developed online, and the full material has been available to interested readers since each piece was first written. However, there has not been a systematic description since the 2007 report[10], and a lot of development has taken place since.

We will also take a step back and critically evaluate the methods used during the last 16 years since the start of the Centre of Excellence.

Finally, we will discuss some of the main lessons learned and give guidance for further work to improve the use of scientific information in societal decision making.

Methods

Extended causal diagrams

Policy issues are complex, which makes it difficult to understand, analyse, discuss and communicate them effectively. The first innovation that we implemented to tackle this difficulty was the extended causal diagramᵇ. Extended causal diagrams are based on the idea that, irrespective of the decision situation at hand, the core issues always include some actions based on the decision, and these actions have causal effects on some objectives. In a diagram, actions, objectives, and other issues are depicted as nodes (aka vertices), and their causal relations as arrows (aka arcs). For example, a need to reduce dioxins in human food may lead to a decision to clean up emissions from waste incineration (Figure 1). Reduced emissions improve air quality and reduce dioxin deposition into the Baltic Sea, which lowers dioxin concentrations in Baltic herring and thus in human food, with a favourable effect on human health, which is an ultimate objective (among others).
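The causal core of the dioxin example can be sketched as a small directed graph. This Python sketch is illustrative only: the node names paraphrase the example above, and the node types, predicates, and the helper `causal_paths` are hypothetical, not part of Opasnet or OpasnetUtils. Each arrow is stored as a (subject, predicate, object) triple, mirroring how relations are written on the diagram, and the function lists every causal path from a decision to an objective.

```python
from collections import defaultdict

# Node types follow the notation of Figure 1; names paraphrase the dioxin example.
NODES = {
    "Clean up incineration emissions": "decision",
    "Dioxin emissions": "substantive",
    "Dioxin deposition into the Baltic Sea": "substantive",
    "Dioxins in Baltic herring": "substantive",
    "Dioxin intake from food": "substantive",
    "Human health": "objective",
}

# Arrows as (subject, predicate, object): subject at the tail, object at the tip.
ARROWS = [
    ("Clean up incineration emissions", "reduces", "Dioxin emissions"),
    ("Dioxin emissions", "increase", "Dioxin deposition into the Baltic Sea"),
    ("Dioxin deposition into the Baltic Sea", "increases", "Dioxins in Baltic herring"),
    ("Dioxins in Baltic herring", "increase", "Dioxin intake from food"),
    ("Dioxin intake from food", "affects", "Human health"),
]

def causal_paths(nodes, arrows):
    """Return every path (a list of node names) from a decision node to an objective node."""
    children = defaultdict(list)
    for subject, _predicate, obj in arrows:
        children[subject].append(obj)

    paths = []

    def walk(node, path):
        if nodes[node] == "objective":
            paths.append(path)
            return
        for child in children[node]:
            if child not in path:  # guard against cycles
                walk(child, path + [child])

    for node, kind in nodes.items():
        if kind == "decision":
            walk(node, [node])
    return paths

for path in causal_paths(NODES, ARROWS):
    print(" -> ".join(path))
```

Storing predicates with the arrows keeps the diagram readable as sentences, while the path search only needs the direction of each arrow.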

Causal modelling as such is an old idea, and various methods, both qualitative and quantitative, have been developed for it. However, extended causal diagrams added two ideas: a) they can be used effectively in communication, especially in complex cases, and b) all non-causal issues that are relevant to the decision can and should be linked to the causal core in some way. In other words, a participant in a policy discussion should be able to make a reasonable connection between what they are saying and some node in the extended causal diagram developed for that policy issue.
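Idea b), that every relevant non-causal issue should connect to the causal core, can be checked mechanically. A minimal Python sketch, assuming a hypothetical representation in which non-causal links (a stakeholder "values" something, an argument "questions" an estimate) are stored as triples just like causal arrows; all issue names are invented for illustration. Links are treated as undirected, and any node that cannot be reached from the causal core is reported.

```python
from collections import defaultdict, deque

# Causal core: decision -> ... -> objective (illustrative names).
CAUSAL_CORE = {"Emission cleanup", "Dioxins in herring", "Human health"}

# Causal and non-causal links as (subject, predicate, object) triples.
LINKS = [
    ("Emission cleanup", "reduces", "Dioxins in herring"),
    ("Dioxins in herring", "affects", "Human health"),
    ("Fishers' association", "values", "Herring fishing income"),
    ("Herring fishing income", "is affected by", "Dioxins in herring"),
    ("Argument: sampling is unrepresentative", "questions", "Dioxins in herring"),
]

# An issue raised in discussion that nobody has yet linked to the diagram.
ISSUES = {"Archipelago tourism image"}

def unlinked_issues(core, links, extra_issues=()):
    """Return nodes with no chain of links (in either direction) to the causal core."""
    neighbours = defaultdict(set)
    nodes = set(core) | set(extra_issues)
    for subject, _predicate, obj in links:
        neighbours[subject].add(obj)
        neighbours[obj].add(subject)
        nodes.update((subject, obj))

    seen = set(core)
    queue = deque(core)
    while queue:  # breadth-first search outward from the causal core
        node = queue.popleft()
        for other in neighbours[node] - seen:
            seen.add(other)
            queue.append(other)
    return nodes - seen

print(unlinked_issues(CAUSAL_CORE, LINKS, ISSUES))
```

In a facilitated discussion, a non-empty result would be a prompt to ask how the issue relates to the decision, not a reason to discard it.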

The first implementations of extended causal diagrams were about the toxicology of dioxins[12] and the restoration of a closed asbestos mine area[13]ᶜ. In the early cases, the main purpose was to give structure to discussion and assessment rather than to be a backbone for quantitative models. In later implementations, such as the composite traffic assessment[14] or the BONUS GOHERR project[11], diagrams have been used for both purposes.

For more examples and the description of the current notation, see Appendix 1.

Knowledge crystals

Although an extended causal diagram provides a method to illustrate a complex decision situation, it offers little help in describing quantitative nuances within the nodes or arrows, such as functional or probabilistic relations or estimates. There are tools with such functionalities, e.g. Hugin (Hugin Expert A/S, Aalborg, Denmark) for Bayesian belief networks and Analytica® (Lumina Decision Systems Inc, Los Gatos, CA, USA) for Monte Carlo simulation. However, commercial tools are typically designed for a single desktop user rather than for open co-creation. In addition, they have limited possibilities for adding non-causal nodes and links or free-format discussions about the topics.
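To make concrete what a probabilistic relation on an arrow means, the following Python sketch attaches a conditional probability table to a single arrow and marginalises over the parent node, which is the basic operation in a Bayesian belief network. All states and probabilities are invented for illustration and do not come from any assessment.

```python
# Prior distribution of the parent node (illustrative numbers only).
P_EMISSIONS = {"high": 0.3, "low": 0.7}

# Conditional probability table attached to the arrow
# "Dioxin emissions" -> "Dioxins in herring relative to a limit value".
P_HERRING_GIVEN_EMISSIONS = {
    "high": {"above limit": 0.4, "below limit": 0.6},
    "low": {"above limit": 0.1, "below limit": 0.9},
}

def marginal(prior, cpt):
    """Marginal of the child: P(child) = sum over parent states of P(child | parent) * P(parent)."""
    result = {}
    for parent_state, p_parent in prior.items():
        for child_state, p_child in cpt[parent_state].items():
            result[child_state] = result.get(child_state, 0.0) + p_parent * p_child
    return result

print(marginal(P_EMISSIONS, P_HERRING_GIVEN_EMISSIONS))
# A decision that fixes emissions to "low" replaces the parent's distribution:
print(marginal({"high": 0.0, "low": 1.0}, P_HERRING_GIVEN_EMISSIONS))
```

A plain diagram shows only that the arrow exists; the table is what lets the effect of a decision be computed.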

There was a clear need for theoretical and practical development in this area, so we combined three open-source software packages (Mediawiki, R statistical software, and the MongoDB database) into a single web-workspace named Opasnet for discussions, modelling, and data storage on scientific and policy issues. The workspace is described in more detail in the next section and in Appendices 2–4. Here we first look at how information is structured in this system.

The second major innovation was the concept of a knowledge crystal. Its purpose is to offer a versatile information structure for a node in an extended causal diagram. It should be able to handle any topic and to systematically describe all causal and non-causal relations to other nodes, whether they are quantitative or qualitative. It should be able to contain mathematics, discussions, illustrations, or other information as necessary. It should also handle both facts and values, and withstand misconceptions and fuzzy thinking. Its main structure should be universal and understandable for both humans and computers. It should be manageable using scientific practices, notably criticism and openness, i.e. in a way that anyone can read and contribute to its content. And finally, it should be easy enough for an interested non-expert to find online and to understand and use its main message.

After some experiments, we identified a few critical features that a knowledge crystal should have to fulfil its objectives. First, a knowledge crystal is a web page with a permanent identifier or URL. Second, it has an explicit topic, which is framed as a research question. Importantly, the topic does not change over time (in practice, adjustments to the wording are allowed, especially if the knowledge crystal is not yet established and used in several different assessments). This makes it possible for a user to come back to the same page later and find an up-to-date version of the same topic.

Third, the purpose of a page is to give an informed answer to the question presented. The answer is expected to change as new information becomes available, and anyone is allowed to bring in new relevant information as long as certain rules of co-creation are followed. In a sense, the answer of a knowledge crystal is never final but it is always usable.

A standardised structure is especially relevant for the answer of a knowledge crystal, because it enables direct use of the answer in assessment models or internet applications. So even though the content is updated as knowledge increases, the answer remains in the same, computer-readable format. So far, such machine-readable interpretations of particular research topics have been very rare: open data contains little or no interpretation, and scientific reviews or articles are not machine-readable (at least not until artificial intelligence develops further).
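A standardised answer format means that a model can consume any knowledge crystal's answer in the same way, whatever its topic. As a hypothetical illustration (the column names and values are invented placeholders, and this is not the actual Opasnet data format), an answer can be held as a long-format table in which index columns describe the dimensions and a single Result column holds the values; the same helper functions then work for any crystal.

```python
# A hypothetical long-format answer: index columns plus one "Result" column.
# Values are placeholders for illustration, not real measurements.
ANSWER = [
    {"Fish": "herring", "Area": "Bothnian Sea", "Result": 1.0},
    {"Fish": "herring", "Area": "Gulf of Finland", "Result": 2.0},
    {"Fish": "salmon", "Area": "Bothnian Sea", "Result": 3.0},
]

def indices(answer):
    """Index columns are all columns except Result."""
    return sorted({key for row in answer for key in row if key != "Result"})

def select(answer, **conditions):
    """Return the rows whose index values match all given conditions."""
    return [row for row in answer
            if all(row.get(column) == value for column, value in conditions.items())]

def mean_result(rows):
    """Average of the Result column over the given rows."""
    return sum(row["Result"] for row in rows) / len(rows)

print(indices(ANSWER))
print(mean_result(select(ANSWER, Fish="herring")))
```

Because the code never refers to fish or areas explicitly, the same functions would work unchanged on an answer with entirely different index columns.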

Fourth, an answer is based on information, reasoning, and discussion presented on the page under the heading Rationale. The rationale should contain anything that is needed to convince a critical rational reader of the validity of the answer. It is also the place for new information and discussions that may change the answer.
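The four features (a permanent identifier, a fixed research question, an answer that is revised but always usable, and a rationale behind it) can be sketched as a small data structure. This Python sketch is hypothetical and does not describe how Opasnet stores pages (there, Mediawiki's version control keeps the history); the class and method names, the question wording, and the answer texts are invented. The identifier and question can be set only once, and each update appends a new answer version instead of overwriting the old one.

```python
from dataclasses import dataclass, field
from datetime import date

@dataclass(frozen=True)
class AnswerVersion:
    text: str
    updated: date
    reason: str  # the new information or argument that motivated this version

@dataclass
class KnowledgeCrystal:
    identifier: str  # permanent identifier of the page
    question: str    # research question; defines the scope and is not meant to change
    history: list = field(default_factory=list)    # all answer versions, oldest first
    rationale: list = field(default_factory=list)  # information and arguments behind the answer

    def __setattr__(self, name, value):
        # identifier and question can be set once (in __init__) but not changed afterwards
        if name in ("identifier", "question") and name in self.__dict__:
            raise AttributeError(f"the {name} of a knowledge crystal cannot be changed")
        super().__setattr__(name, value)

    def update_answer(self, text, reason):
        """Record the reason in the rationale and append a new answer version."""
        self.rationale.append(reason)
        self.history.append(AnswerVersion(text, date.today(), reason))

    @property
    def answer(self):
        """The current best answer: never final, but always usable."""
        if not self.history:
            raise ValueError(f"{self.identifier} has no answer yet")
        return self.history[-1].text

# Illustrative use; the question wording and answer texts are invented.
crystal = KnowledgeCrystal("Op_en4004", "What are the mercury concentrations in fish in Finland?")
crystal.update_answer("Preliminary estimate from the first dataset.", "initial data")
crystal.update_answer("Revised estimate including a newer dataset.", "new monitoring data")
print(crystal.answer)
print(len(crystal.history), "versions kept")
```

Keeping old versions mirrors the idea that users can return to the same page and trace how the answer evolved.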

| Attribute | Description |
|---|---|
| Name | An identifier for the knowledge crystal. Each Opasnet page has two identifiers: the name of the page (e.g. Mercury concentrations in fish in Finland), intended for humans, and the page identifier (e.g. Op_en4004), intended for computers. |
| Question | Gives the research question to be answered. It defines the scope of the knowledge crystal. When possible, the question should be framed so that it is relevant in many different situations, i.e. so that the page is reusable. (For example, a page about mercury concentrations can be used in several assessments related to fish consumption.) |
| Answer | Presents an understandable and useful answer to the question. It is the current best synthesis of all available data. Typically it has a descriptive, easy-to-read summary and a detailed quantitative result published as open data. An answer may contain several competing hypotheses if they all withstand scientific criticism. In this way, it can give an accurate description of the uncertainty of the answer. |
| Rationale | Contains any information that is necessary to convince a critical rational reader that the answer is credible and usable. It presents the information required to derive the answer and explains how the answer is formed. It may have different sub-attributes depending on the page type; some examples are listed below. Rationale may also contain lengthy discussions about relevant details. |
| Other | In addition to attributes, it is practical to have clarifying subheadings on a knowledge crystal page. These include: See also, Keywords, References, Related files. |

It is useful to compare a knowledge crystal to a scientific article, which is organised around a single dataset or analysis and is expected to remain unchanged after publication. Further, articles offer little room for deliberation about the interpretation or meaning of the results after a manuscript is submitted: reviewer comments are often not published, and further discussion about an article is rare and mainly occurs only if serious problems are found. Indeed, the current scientific publishing system is poor at correcting errors via deliberation[15].

In contrast, a knowledge crystal is designed to support continuous discussion about the science (or values, depending on the topic) backing up its conclusions, thus hopefully leading to improved understanding of the topic.

This process is similar to that in Wikipedia, but the information structure is different, as Wikipedia articles describe issues rather than answer specific questions. Another difference is that Wikipedia relies on established sources such as textbooks rather than interpreting original results. There is a clear reason for this difference: knowledge crystals are about details of a decision or an assessment, and there is no established textbook knowledge about most of the information needed.

There are different kinds of knowledge crystals for different uses. Variables contain substantive topics such as emissions of a pollutant, food consumption or other behaviour of an individual, or disease burden in a population (for examples, see Figure 1). Assessments describe the information needs of particular decision situations and the work processes designed to answer those needs. They may also describe whole models (consisting of variables) for simulating the impacts of a decision. Methods describe specific procedures to organise or analyse information; the question of a method typically starts with "How to...". For a list of all knowledge crystal types used in the Opasnet web-workspace, see Appendix 1.

Knowledge crystals are designed to be modular and reusable, which is important for the efficiency of the work. Variables are reused in several assessments where possible, and methods are used to standardise and facilitate the work. For the same reason, all software in Opasnet consists of widely used open-source solutions. An R package, OpasnetUtils, was developed to contain the most important methods, functions, and information structures needed to use knowledge crystals in modelling (for details, see Appendix 2).
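Reuse can be illustrated with a shared registry: assessments refer to variables by their permanent identifier instead of copying them, so an improvement made once to a variable reaches every assessment that uses it. This Python sketch is a hypothetical simplification; the registry, the `Assessment` class, the identifiers, and the numbers are all invented placeholders.

```python
# Hypothetical registry: permanent identifier -> current answer of a variable.
REGISTRY = {
    "var:fish_concentration": 2.0,  # placeholder values only
    "var:fish_consumption": 10.0,
}

class Assessment:
    """An assessment that refers to shared variables by identifier instead of copying them."""

    def __init__(self, name, variable_ids, model):
        self.name = name
        self.variable_ids = variable_ids
        self.model = model  # function of the variables' current values

    def run(self, registry):
        values = {vid: registry[vid] for vid in self.variable_ids}
        return self.model(values)

exposure = Assessment(
    "Exposure assessment",
    ["var:fish_concentration", "var:fish_consumption"],
    lambda v: v["var:fish_concentration"] * v["var:fish_consumption"],
)
monitoring = Assessment(
    "Monitoring report",
    ["var:fish_concentration"],
    lambda v: v["var:fish_concentration"],
)

print(exposure.run(REGISTRY), monitoring.run(REGISTRY))
REGISTRY["var:fish_concentration"] = 3.0  # the variable is updated once...
print(exposure.run(REGISTRY), monitoring.run(REGISTRY))  # ...and both assessments use the update
```

The design choice is the same as linking to a page rather than copying its content: one place to correct, many places that benefit.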

Opasnet web-workspace

In 2006, we set an objective to develop an assessment system that has a solid theoretical basis and coherent practices supported by functional tools. We assumed that the different parts need to be developed side by side, so that theories are tested with practical work and assessment practices and tools are evaluated against the theoretical framework. This led our team to simultaneously develop information structures, assessment methods, and evaluation criteria; perform assessment case studies; and build software libraries and online tools.

The first part of our web-workspace, namely a wiki, was launched in January 2006. Its name, Opasnet, is a short version of Open Assessors' Network. The purpose was to test and learn co-creation among environmental health experts and also to start opening the assessment process to interested stakeholders. There were several reasons to choose the Mediawiki platform. First, it is the same platform that Wikipedia uses, so a large group of people had already tested and developed many novel practices, and we could learn directly from them. Second, open code and wide use elsewhere would help our practices spread to new communities, because installation and learning costs were presumably low. Third, Mediawiki has the features necessary to implement good research practices, such as talk pages that clearly separate content from discussions about the content, and automatic, full version control. And fourth, the maintenance and development of the software itself seemed secure for several years.

We launched several wiki instances for different projects, as participating researchers did not want to write on a website that was visible to other projects as well. However, this caused extra maintenance burden and confusion with no real added value, so we soon started to discourage this practice. Instead, we moved to a system with three wiki instances. English Opasnet (en.opasnet.org) contained all international projects and most scientific information. Finnish Opasnet (fi.opasnet.org) contained mostly project material for Finnish projects and pages targeted at Finnish audiences. Heande (short for Health, the Environment, and Everything) was a password-protected project wiki containing information that could not (yet) be published for one reason or another. We put a lot of effort into encouraging researchers to write directly to the open wikis, but most were hesitant to do so at the time (and many still are).

In the beginning, Opasnet was mainly used to document project content. All environmental health assessments were performed using commercial software, notably Analytica®. However, it was clear that the contents of all assessment models, down to the finest detail, should be published and opened up for public scrutiny. Although it was possible to upload model files for people to download and examine, this never actually happened in practice, because any interested reader would have had to obtain, install, and learn the software first. So it was necessary to switch to open-source modelling software that enabled online work with model code.

The statistical software R was chosen, as it was widely used, it had an object-oriented approach (thus supporting modularity), and it enabled complex modelling with fairly simple code, thanks to hundreds of packages that volunteers had written and shared to extend the functionalities of R. There was no built-in support for R in Mediawiki, so we had to write our own interface. As a result, we could write R code directly on a wiki page, save it, and run it by clicking a button. The output of the code would appear as a separate web page or embedded on the wiki page with the code. Resulting objects could also be stored on the server and fetched later by other code. This made it possible to run complex models online without installing anything on one's own computer (except a web browser). It also enabled version control and archival of both the model code and the model results.

We also developed an R package, OpasnetUtils (available from the CRAN repository, cran.r-project.org), to support knowledge crystals and decision support models. It has a special object type for knowledge crystals (called ovariable) that implements the functionalities described above. For example, the answer can be calculated based on data or functional dependencies; an ovariable "understands" its own dependencies and is able to fetch its causal parents into the model from separate Opasnet pages; a model can be adjusted afterwards by applying one or more decision options to relevant parts of the model, and this is done on an Opasnet page with no changes to the model code; and if input values are uncertain, uncertainties are automatically propagated through the model using Monte Carlo simulation.
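The behaviour described above (a result computed from data or from a formula of causal parents, uncertainty propagated by Monte Carlo simulation, and decision options applied afterwards without changing the model formula) can be imitated in a few lines of Python. This is a loose, hypothetical analogue written for illustration only: it does not reproduce the OpasnetUtils API, fetching parents from separate pages is not modelled, and the class name `Node`, the distributions, and the halving option are invented. Each node holds one value per Monte Carlo iteration, so uncertainty in the parents flows automatically into the children.

```python
import random

N = 10_000  # number of Monte Carlo iterations
random.seed(1)

class Node:
    """A hypothetical model node: samples come from data or from a formula of its parents."""

    def __init__(self, name, samples=None, parents=(), formula=None):
        self.name = name
        self.samples = samples
        self.parents = parents
        self.formula = formula
        self.adjustments = []  # decision options applied after the model is written

    def evaluate(self):
        if self.formula is None:
            values = list(self.samples)
        else:
            parent_values = [p.evaluate() for p in self.parents]
            # Apply the formula iteration by iteration, keeping samples aligned.
            values = [self.formula(*row) for row in zip(*parent_values)]
        for adjust in self.adjustments:
            values = [adjust(v) for v in values]
        return values

def mean(values):
    return sum(values) / len(values)

# Uncertain inputs (illustrative distributions only).
emission = Node("emission", samples=[random.lognormvariate(0, 0.5) for _ in range(N)])
transfer = Node("transfer", samples=[random.uniform(0.5, 1.5) for _ in range(N)])
concentration = Node("concentration", parents=(emission, transfer),
                     formula=lambda e, t: e * t)

baseline = mean(concentration.evaluate())
emission.adjustments.append(lambda v: 0.5 * v)  # decision option: halve emissions
with_decision = mean(concentration.evaluate())
print(round(with_decision / baseline, 3))
```

Keeping decision options as separate adjustments, rather than editing the formula, is what allows the same model to be rerun for different options.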

The modelling functionalities created a need to store data in the web-workspace. A database called Opasnet Base was created using the MongoDB NoSQL softwareᵈ. It has several user interfaces. A user can write a table directly on a wiki page, and the content will be uploaded to the database in a structured format. This is often used to give parameter values to variables in assessment models. A benefit is that the data is located in an intuitive place, typically under the Rationale subheading on a knowledge crystal page.
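The step from a table written on a wiki page to structured records can be sketched with a tiny parser for basic MediaWiki table markup (`{|`, `|+`, `!`, `|-`, `|`, `|}`). This Python sketch handles only the simplest case (one header row, cells separated by `!!` or `||`, no cell attributes or nested tables); it is not Opasnet's actual upload code, and the example table and its values are invented. Each data row becomes one record, the kind of structure a document database such as MongoDB stores.

```python
EXAMPLE = """
{| class="wikitable"
|+ Hypothetical parameter table
! Fish !! Area !! Result
|-
| herring || Bothnian Sea || 1.0
|-
| salmon || Bothnian Sea || 3.0
|}
"""

def to_number(text):
    """Convert numeric cells to float and leave other cells as text."""
    try:
        return float(text)
    except ValueError:
        return text

def parse_wikitable(markup):
    """Parse a basic MediaWiki table into a list of dicts keyed by the header names."""
    header, rows, current = [], [], None
    for raw in markup.strip().splitlines():
        line = raw.strip()
        if line.startswith("{|") or line.startswith("|+"):
            continue  # table start (with attributes) or caption
        if line.startswith("|}"):
            break  # end of table
        if line.startswith("|-"):
            current = []  # row separator starts a new row
            rows.append(current)
        elif line.startswith("!"):
            header.extend(cell.strip() for cell in line[1:].split("!!"))
        elif line.startswith("|"):
            if current is None:  # data before any row separator
                current = []
                rows.append(current)
            current.extend(cell.strip() for cell in line[1:].split("||"))
    return [{h: to_number(c) for h, c in zip(header, row)} for row in rows if row]

for record in parse_wikitable(EXAMPLE):
    print(record)
```

Real wiki tables can carry cell attributes and multi-line cells, so a production parser would need more cases than this sketch covers.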

Another interface is used especially to upload large datasets (some population datasets used contain ca. 10 million rows) to Opasnet Base. Notably, each dataset must be linked to a single wiki page, which contains all the necessary descriptions and metadata about the data. All datasets can also be downloaded into R for calculations, irrespective of whether R is run from an Opasnet page or from the user's own computer.
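One way to honour the rule that each dataset is linked to a single wiki page, while handling datasets of millions of rows, is to upload in fixed-size batches and stamp each batch with the page identifier. The Python sketch below is hypothetical: the function names, batch size, and identifier pattern (guessed from identifiers such as Op_en4004, and assumed to allow `fi` for Finnish Opasnet) are illustrative, and a plain list stands in for a database collection.

```python
import re
from itertools import islice

# Assumed identifier format, guessed from identifiers such as Op_en4004.
PAGE_ID = re.compile(r"^Op_(en|fi)\d+$")

def batches(records, size):
    """Yield lists of at most `size` records without loading everything into memory."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch

def upload(records, page_id, store, batch_size=100_000):
    """Write records to `store` in batches; every batch is linked to exactly one wiki page."""
    if not PAGE_ID.match(page_id):
        raise ValueError(f"dataset must be linked to a wiki page identifier, got {page_id!r}")
    count = 0
    for batch in batches(records, batch_size):
        store.append({"page": page_id, "rows": batch})
        count += len(batch)
    return count

store = []  # stands in for a database collection
rows = ({"Age": age % 100, "Result": 0} for age in range(250))  # generated lazily
print(upload(rows, "Op_en0000", store, batch_size=100), len(store))  # Op_en0000 is a placeholder
```

Using a generator for the rows means the whole dataset never has to be held in memory at once, which matters at the scale of millions of rows.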

This data structure facilitates coherent practices of daily work with little or no extra effort needed to link datasets to relevant topics and document and archive them. Functionalities are deliberately organised in a way that all assessment-related work and research can be performed using the same tools.

Open assessment

Open assessment is a method for performing impact assessments using extended causal diagrams, knowledge crystals, and open online assessment tools. Here we use "assessment of impacts" for ex ante consideration of what will happen if a particular decision is made, and "evaluation of outcomes" for ex post consideration of what did happen after a decision was implemented. Open assessments are typically performed before a decision is made. The focus is necessarily on expert knowledge and how to organise it, although prioritisation is only possible if the objectives and valuations of the decision maker are known.
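The last point, that ranking decision options requires the decision maker's objectives and valuations in addition to expert predictions, can be illustrated with a simple weighted-sum comparison. In this hypothetical Python sketch the options, predicted impacts, and weights are all invented: experts would supply the predicted impacts, and the decision maker the weights. Without weights the function refuses to rank, and different weights can reverse the ranking.

```python
# Predicted impacts of each option on each objective (expert input; invented numbers).
# Positive values are improvements, in arbitrary units.
IMPACTS = {
    "Clean up incineration": {"health": 5.0, "cost": -4.0},
    "Restrict herring sales": {"health": 3.0, "cost": -1.0},
}

def rank_options(impacts, weights=None):
    """Rank options by the weighted sum of impacts; weights are the decision maker's valuations."""
    if weights is None:
        raise ValueError("cannot prioritise options without the decision maker's valuations")
    scores = {
        option: sum(weights[objective] * value for objective, value in effects.items())
        for option, effects in impacts.items()
    }
    return sorted(scores, key=scores.get, reverse=True)

print(rank_options(IMPACTS, {"health": 1.0, "cost": 0.2}))
print(rank_options(IMPACTS, {"health": 1.0, "cost": 1.0}))
```

A weighted sum is only one of many ways to combine impacts with values; it is used here because it makes the dependence on the weights easy to see.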

As a research topic, open assessment attempts to answer this research question: "How can scientific information and value judgements be organised for improving societal decision-making in a situation where open participation is allowed?" This question was in our minds when we developed many of the ideas presented in this article. As can be seen, openness, participation, and values are taken as given premises. In the early 2000s, this was far from common practice, although these ideas had been proposed before we started to develop practices based on them[3].

From the beginning, the main focus was on information and information flows rather than on jurisdictions, roles, or hierarchies. So, we deliberately ignored questions such as what kinds of scientific committees are needed to provide relevant high-quality advice, or how expert opinions should be included in the work of e.g. a government, parliament, or municipal council. The idea was rather that if the information production process is completely open, it can include information from any committee or individual as long as the quality aspect is successfully resolved. And if all useful information related to a decision can be synthesised and made available to everyone, then any kind of decision-making body could use that information. A generic approach was chosen so that it would be helpful irrespective of the structure of the administration.

Of course, this does not mean that every organisation or individual is equally willing or able to use assessment information. It simply means that we considered this a separate question. Having said that, we also thought that if a good assessment is able to produce a clear and unequivocal conclusion that the whole public can see and understand, it will become much harder for any decision maker to deviate from that conclusion.

Principles in open assessment

There is guidance about crowdsourced policymaking[16], and similar ideas have been utilised in open assessment. Openness, causality, knowledge crystals, and reuse are principles that have been built into the functionalities of the tools with the aim of making them easy to follow (Table 2). Drawing extended causal diagrams puts emphasis on causalities, and publishing them on open web-workspaces complies with openness automatically. Knowledge crystals promote reuse of information. Other principles require more understanding and effort from the participants.

Intentionality is about making the values and objectives of decision makers visible and open to scrutiny. There exist models for describing facts and values in a coherent dual system, and such methods should be encouraged[17]. However, this often requires extra effort, and it may also be tactically useful for a decision maker not to reveal all their values.

Criticism based on observations and rationality is a central idea in the scientific method, and therefore it is also part of open assessment. However, implementing it in the tools is difficult. For example, only in rare cases is it possible or practical to develop logical propositions that would automatically rule out a statement if another statement is found true. So, most critique takes the form of verbal or written discussion between participants and is difficult to automate. Still, we have found some useful information structures for criticism.

Discussions can be organised according to pragma-dialectical argumentation rules[18], so that arguments form a hierarchical thread pointing to a main statement or statements. Attack arguments are used to invalidate arguments, defence arguments are used to protect against attacks, and comments are used to clarify issues. At the moment, such hierarchical structures are built by hand from what people say. But they have clear added value for a reader, because even a lengthy discussion can be summarised into a short statement after a resolution is found, and any thread can be scrutinised individually.
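The following Python sketch shows one possible way to resolve such a hierarchical thread automatically once it has been built. The resolution rule is an assumption, not a rule stated in this article: an argument stands unless one of its counter-arguments stands, where a defence is attached under the attack it answers. The class `Argument`, the functions `stands` and `summary`, and the example discussion are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Argument:
    text: str
    kind: str = "statement"  # "statement", "attack", "defence" or "comment"
    children: list = field(default_factory=list)


def stands(arg):
    """Assumed resolution rule: an argument stands unless a counter-argument stands.

    Attacks counter the statement or argument they point to. A defence is
    attached under the attack it answers and counters that attack.
    Comments only clarify and never change the outcome.
    """
    return not any(stands(c) for c in arg.children if c.kind in ("attack", "defence"))


def summary(arg, depth=0):
    """Indented outline of the thread with the resolved status of each argument."""
    if arg.kind == "comment":
        label = "comment"
    else:
        label = f"{arg.kind}, {'stands' if stands(arg) else 'rejected'}"
    lines = [f"{'  ' * depth}[{label}] {arg.text}"]
    for child in arg.children:
        lines.extend(summary(child, depth + 1))
    return lines


# Hypothetical discussion thread.
claim = Argument("Cleaning incinerator emissions lowers dioxin levels in Baltic herring.")
attack = Argument("Other dioxin sources dominate deposition.", "attack")
attack.children.append(Argument("Any reduced source still lowers total deposition.", "defence"))
claim.children += [attack, Argument("Which incinerators are meant?", "comment")]
print("\n".join(summary(claim)))
```

The recursive rule makes each sub-thread resolvable on its own, which matches the idea that any thread can be scrutinised individually; other resolution rules could be swapped into `stands` without changing the data structure.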

Grouping and respect are principles that aim to motivate and guide individuals to collaborate online. It has been found that being part of an identifiable group clearly does both: people participate more actively and are more aware of what they can and are expected to do[7]. Respect includes the idea that merit based on contributions should be measured and evaluated, and respect should be given to participants based on these evaluations. Respect is also needed because systematic criticism easily affects people emotionally, even when the discussion is about substance rather than persons. It is therefore important to show that all contributions and contributors are valued even when a contribution is criticised. Although these two principles have been identified as very important, they are currently implemented only in face-to-face meetings, by facilitators giving direct feedback; the Opasnet web-workspace does not have good functionalities for them.

| Principle | Explanation |
|---|---|
| Intentionality | The decision maker explicates their objectives and the decision options under consideration. All that is done aims to offer a better understanding of the impacts of the decision in relation to the objectives of the decision maker. Thus, the participation of the decision maker in the decision support process is crucial. |
| Causality | The focus is on understanding and describing the causal relations between the decision options and the intended outcomes. The aim is to predict what impacts will occur if a particular decision option is chosen. |
| Criticism | All information presented can be criticised based on relevance and accordance with observations. The aim is to reject ideas, hypotheses, and ultimately decision options that do not hold against criticism. Criticism has a central role in the scientific method, and here we apply it in practical situations, because rejecting poor statements is much easier and more efficient than trying to prove statements true. |
| Knowledge crystals | All information is shared using a systematic structure and a common workspace where all participants can work. Knowledge crystals are used for this. The structure of an assessment and its data is based on substance rather than on persons, organisations, or processes (e.g. data is not hidden in closed institute repositories; see also causality). Objectives determine the information needs, which are then used to define research questions to be answered in the assessment. The assessment work is collaboration aiming to answer these questions in a way that holds against critique. |
| Openness | All work and all information is openly available to anyone interested, for reading and contributing, at all times. If there are exceptions, these must be publicly justified. Openness is crucial because a priori it is impossible to know who may have important information or value judgements about the topic. |
| Reuse | All information is produced in a format that can easily be used for other purposes by other people. Open data principles are used when possible[19]. For example, some formats such as PDF files are not easily reusable. Reuse is facilitated by knowledge crystals and openness. |
| Grouping | Facilitation methods are used to promote the participants' feeling of being an important member of a group that has a meaningful purpose. |
| Respect | Contributions are systematically documented and their merit evaluated so that each participant receives the respect they deserve based on their contributions. |

Properties of good assessment

There is a need to evaluate the assessment work before, during, and after it is done[20]. First we take a brief look at what makes a good assessment and what criteria could be used (see Table 3)[21].

Quality of content refers to the output of an assessment, typically a report, model or summary presentation. Its quality is obviously an important property. If the facts are plain wrong, the output is more likely to misguide than to lead to good decisions. But there is more to it than that. Informativeness and calibration describe how large the remaining uncertainties are and how close the answers probably are to the truth (compared with some gold standard). In some statistical texts, similar concepts have been called precision and accuracy, respectively, although with assessments they should be understood in a flexible rather than strictly statistical sense.[22] Coherence means that the answers given actually answer the questions asked. Although not always easy to evaluate, coherence is an important property to keep in mind because a lack of it is common: politicians are experts in answering questions other than those asked, and researchers tend to do the same if the research funding is not sufficient to answer the actual, hard question.
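Since the terms are meant in a flexible sense, the following Python sketch shows only one possible statistical operationalisation, assuming that an estimate is available as a set of samples and that a reference (gold standard) value is known. The choices of the central 90 % interval width for informativeness and the distance of the median from the reference for calibration, as well as the function names and example numbers, are assumptions for illustration.

```python
import random
import statistics


def informativeness(samples):
    """Inverse width of the central 90 % interval: a narrower spread rules out more possible worlds."""
    cuts = statistics.quantiles(samples, n=20)  # 19 cut points: 5 %, 10 %, ..., 95 %
    return 1.0 / (cuts[-1] - cuts[0])


def calibration_error(samples, reference):
    """Distance of the median estimate from a reference (gold standard) value; smaller is better."""
    return abs(statistics.median(samples) - reference)


rng = random.Random(42)
reference = 10.0  # hypothetical true value
wide_but_centred = [rng.gauss(10.0, 1.0) for _ in range(5000)]
narrow_but_biased = [rng.gauss(12.0, 0.2) for _ in range(5000)]

for name, s in [("wide", wide_but_centred), ("narrow", narrow_but_biased)]:
    print(name, round(informativeness(s), 2), round(calibration_error(s, reference), 2))
```

The example is built so that the two properties disagree: the narrow estimate is more informative but worse calibrated, which is why both need to be evaluated.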

Applicability is a large evaluation area. It looks at properties that affect how well the assessment work can and will be applied to support decisions. It is independent of the quality of content, i.e. despite high quality, an assessment may have very poor applicability. The opposite may also be true, as faulty assessments are sometimes actively used to promote policies. However, usability typically decreases rapidly if the target audience judges an assessment to be of poor quality.

While coherence evaluates whether the assessment question was answered, relevance asks whether a good question was asked in the first place. Understanding what the right question actually is is surprisingly hard, and identifying it often requires a lot of discussion and deliberation between different groups, including decision makers and experts. There is typically too little time available for such discussions, and online forums may potentially help here.

Availability is a more technical property and describes how easily a user can find the information when needed. A typical problem is that a potential user does not know that the information exists even if it could be easily accessed. Usability may differ from user to user, depending on e.g. background knowledge, interest, or time available to learn the content.

Acceptability is a very complex issue and most easily detected when it fails. A common situation is that stakeholders feel that they have not been properly heard and therefore perceive any output from the assessment process as faulty. Doubts about the credibility of the assessor also fall into this category.

Efficiency evaluates resource use when performing an assessment. Money and time are two common measures for this. Often it is most useful to evaluate efficiency before an assessment is started. Is it realistic to produce new important information given the resources and schedule available? If more (less) resources were available, what added (lost) value would occur? Another aspect of efficiency is that if assessments are done openly, reuse of information becomes easier and the marginal cost and time of a new assessment decrease.

All properties of good assessment, not just efficiency, are meant to guide the planning, execution, and evaluation of the whole assessment work. If they are kept in mind at all times, they can improve daily work.

| Category | Property | Description | Question |
|---|---|---|---|
| Quality of content | Informativeness | Specificity of information, e.g. tightness of spread for a distribution. | How many possible worlds does the answer rule out? How few possible interpretations are there for the answer? |
| | Calibration | Exactness or correctness of information. In practice often in comparison to some other estimate or a gold standard. | How close is the answer to reality or the real value? |
| | Coherence | Correspondence between questions and answers. Also between sets of questions and answers. | How completely does the answer address the assessment question? Is everything addressed? Is something unnecessary? |
| Applicability | Relevance | Correspondence between output and its intended use. | How well does the information provided by the assessment serve the needs of the users? Is the assessment question good? |
| | Availability | Accessibility of the output to users in terms of e.g. time, location, extent of information, extent of users. | Is the information provided by the assessment available when, where and to whom it is needed? |
| | Usability | Potential of the information in the output to trigger understanding in its users about what it describes. | Can the users perceive and internalise the information provided by the assessment? Does users' understanding of the assessed issue increase? |
| | Acceptability | Potential of the output being accepted by its users. Fundamentally a matter of its making and delivery, not its information content. | Is the assessment result (output), and the way it is obtained and delivered for use, perceived as acceptable by the users? |
| Efficiency | Intra-assessment efficiency | Resource expenditure of producing the assessment output. | How much effort is spent in the making of an assessment? |
| | Inter-assessment efficiency | Resource expenditure of producing assessment outputs in a series of assessments. | If another (somewhat similar) assessment was made, how much (less) effort would be needed? |

Open policy practice

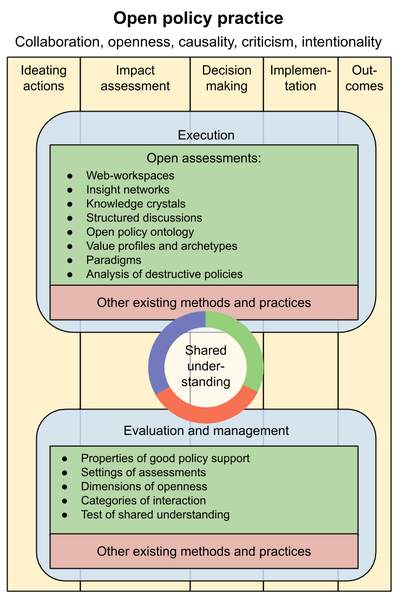

During the Intarese project (2005-2011), it became increasingly clear to us, based on the literature and our own practical experience, that assessments themselves were not enough to convey the information to decision processes. The scientific and political realms are based on different premises and objectives, and we identified a need to evaluate when the information does not flow well and what the typical problems are. So, new theoretical work was done on decision processes, the roles of assessments and information in them, and guidance for participants (Figure 2).

Open policy practice is a method to support societal decision making in an open society, and it is the overarching concept covering all methods, tools, practices, and terms presented in this article[23]. One part of open policy practice is open assessment, which focuses on producing relevant information for decision making. Open policy practice is a larger concept, and it is especially focused on promoting the use of information in the decision-making process. It gives practical guidance for the whole decision process, from initiation to decision support, actual decision making, implementation, and finally evaluation of outcomes. Our aim was that it would be applicable to all kinds of societal decision situations in any administrative area or discipline.

Open policy practice is divided into four main parts, which are briefly described here (see also Figure 3).

- Shared understanding (a structured documentation of different facts, values, and disagreements related to a decision situation) is the main objective of open policy practice. It is a product that guides the decision and is also the basis for evaluating outcomes. For more details, see the next section.

- The execution of decision support. It is mostly about collecting, organising and synthesising scientific knowledge and values in order to inform the decision maker and help them reach their objectives. In practice, most open assessment work happens in this part. It also contains the execution of the decision process itself and the integration of these two processes.

- Evaluation and management of the work (of decision support and decision making). It focusses on looking at what is being done, whether the work produces the intended knowledge and helps to reach the objectives, and what needs to be changed. It continues all the way through the decision process (before, during, and after the actual execution).

- Co-creation skills and facilitation (sometimes known as interactional expertise). Information has to be collected, organised, and synthesised; and facilitators need to motivate and help people to share their information. This requires specific skills and work that are typically available neither among experts nor decision makers. It also contains specific practices and methods, such as motivating participation, facilitating discussions, clarifying and organising argumentation, moderating contents, using probabilities and expert judgement for describing uncertainties, or developing extended causal diagrams or quantitative models.

When we thought about the decision-making process from planning to implementation and evaluation, we realised that the properties of good assessment (see previous section) can easily be adapted to this wider context. The target object in this wider context is not an assessment report but a shared understanding about the decision option to be chosen and implemented. The evaluation criteria are valid in both contexts. The adapted list of criteria is presented on the Opasnet page Open policy practice. We have also developed other evaluation criteria for important aspects of open policy practice; the most important are described below.

Settings of assessments

All too often a decision-making process or an assessment is launched without a clear understanding of what should be done and why. An assessment may even be launched in the hope that it will somehow reveal what the objectives or other important things are. Settings of assessments are a part of evaluation and management (Table 4). They help to explicate these things so that useful decision support can be provided[24]. The subattributes of an assessment scope also help in this:

- Question: What is the actual research question?

- Boundaries: What are the limits within which the question is to be answered?

- Decisions and scenarios: What decisions and options will be assessed and what scenarios will be considered?

- Timing: What is the schedule of the assessment work?

- Participants: Who are the people who will or should contribute to the assessment?

- Users and intended use: Who is going to use the final assessment report and for what purpose?
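To make the checklist character of these subattributes concrete, the Python sketch below stores a scope as a small record and reports which subattributes are still empty, i.e. not yet explicated before the work is launched. The class name `AssessmentScope`, its field names, and the example values are hypothetical and only mirror the list above.

```python
from dataclasses import dataclass, field, fields


@dataclass
class AssessmentScope:
    """Hypothetical record of the scope subattributes listed above."""
    question: str = ""
    boundaries: str = ""
    decisions_and_scenarios: list = field(default_factory=list)
    timing: str = ""
    participants: list = field(default_factory=list)
    users_and_intended_use: str = ""

    def missing(self):
        """Names of subattributes that are still empty, i.e. not yet explicated."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


scope = AssessmentScope(
    question="What are the health benefits and risks of eating Baltic herring in Finland?",
    decisions_and_scenarios=["current fishing intensity", "reduced fishing intensity"],
)
print(scope.missing())  # ['boundaries', 'timing', 'participants', 'users_and_intended_use']
```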

| Attribute | Guiding questions | Example categories |
|---|---|---|
| Impacts | | Environment, health, cost, equity |
| Causes | | Production, consumption, transport, heating, power production, everyday life |
| Problem owner | | Policy maker, industry, business, expert, consumer, public |
| Target users | | Policy maker, industry, business, expert, consumer, public |
| Interaction | | Isolated, informing, participatory, joint, shared |

Dimensions of openness

In open assessment, the method itself is designed to facilitate openness in all its dimensions. The dimensions of openness help to identify if and how the work deviates from the ideal of openness, so that the work can be improved in this respect (Table 5)[25]. However, it is important to note that a large fraction of decision makers and experts are currently not comfortable with open practices and openness as a key principle, and they would prefer a closed process. The dimensions of openness do not give direct tools to convince them. But they identify issues where openness makes a difference and increase understanding of why there is a difference. This hopefully also leads to wider acceptance of openness.

| Dimension | Description |
|---|---|
| Scope of participation | Who are allowed to participate in the process? |
| Access to information | What information about the issue is made available to participants? |
| Timing of openness | When are participants invited or allowed to participate? |
| Scope of contribution | To which aspects of the issue are participants invited or allowed to contribute? |
| Impact of contribution | How much are participant contributions allowed to have influence on the outcomes? In other words, how much weight is given to participant contributions? |

Openness can also be examined based on how intensive it is and what kind of collaboration is aimed at between decision makers, experts, and stakeholders. Different approaches are described in Table 6. During the last decades, the trend has been from isolated to more open approaches, but all categories are still in use.

| Category | Explanation |
|---|---|
| Isolated | Assessment and use of assessment results are strictly separated. Results are provided for the intended use, but users and stakeholders shall not interfere with the making of the assessment. |
| Informing | Assessments are designed and conducted according to specified needs of intended use. Users and limited groups of stakeholders may have a minor role in providing information to the assessment, but mainly serve as recipients of assessment results. |
| Participatory | Broader inclusion of participants is emphasized. Participation is, however, treated as an add-on alongside the actual processes of assessment and/or use of assessment results. |
| Joint | Involvement of, and exchange of summary-level information among, multiple actors in scoping, management, communication and follow-up of assessment. On the level of assessment practice, actions by different actors in different roles (assessor, manager, stakeholder) remain separate. |
| Shared | Different actors involved in assessment retain their roles and responsibilities, but engage in open collaboration in determining assessment questions to address and finding answers to them, as well as implementing them in practice. |

Shared understanding is a situation where all participants' views have been described and documented well enough so that people can know what facts, opinions, reasonings, and values exist about a particular topic; and what agreements and disagreements exist and why.

Shared understanding is always about a particular topic and produced by a particular group of participants. With another group it could be different, but with an increasing number of participants, it should approach the shared understanding of the whole society. Each participant should agree that the written description correctly contains their own thinking about the topic. Participants should even be able to correctly explain what other thoughts there are and how they differ from their own. Ideally, any participant can learn, understand, and explain any thought represented in the group. Importantly, there is no need to agree on things, just to agree on what the disagreements are about. Therefore, shared understanding is not the same as consensus or agreement.

Shared understanding potentially has several purposes, all of which aim to improve the quality of societal decisions. It helps people understand complex policy issues. It helps people see their own thoughts from a wider perspective and thus increases acceptance of decisions. It improves trust in decision makers; but it may also erode trust if the actions of a decision maker are not understandable based on shared understanding. It dissects each difficult detail into separate discussions and then collects the resolutions into an overview; this uses the participants' time efficiently. It improves awareness of new ideas. It releases the full potential of the public to prepare, inform, and make decisions. How well these purposes have been fulfilled in practice in assessments will be discussed in Results.

The test of shared understanding can be used to evaluate how well shared understanding has been achieved. In a successful case, all participants of the decision process will give positive answers to the questions in Table 7. In a way, shared understanding is a metric for evaluating how well decision makers have embraced the knowledge base of the decision situation.

| Question | Who is asked? |
|---|---|
| Is all relevant and important information described? | All participants of the decision processes. |
| Are all relevant and important value judgements described? (Those of all participants, not just decision makers.) | |
| Are the decision maker's decision criteria described? | |
| Is the decision maker's rationale from the criteria to the decision described? | |
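The pass criterion of the test (every participant answers every question positively) can be written down as a short Python sketch. It assumes that answers are collected as yes/no values per participant and treats a missing answer as a gap; the function name `shared_understanding_gaps` and the example participants are hypothetical.

```python
QUESTIONS = (
    "Is all relevant and important information described?",
    "Are all relevant and important value judgements described?",
    "Are the decision maker's decision criteria described?",
    "Is the decision maker's rationale from the criteria to the decision described?",
)


def shared_understanding_gaps(answers):
    """Return (participant, question) pairs that were not answered 'yes'.

    `answers` maps each participant to {question: True/False}; a missing
    answer counts as a gap. An empty result means the test is passed.
    """
    return [
        (participant, q)
        for participant, given in answers.items()
        for q in QUESTIONS
        if not given.get(q, False)
    ]


answers = {
    "decision maker": {q: True for q in QUESTIONS},
    "resident group": {**{q: True for q in QUESTIONS}, QUESTIONS[2]: False},
}
gaps = shared_understanding_gaps(answers)
print(gaps)  # [('resident group', "Are the decision maker's decision criteria described?")]
```

Returning the gaps rather than a single yes/no makes it visible which part of the description still needs work, and for whom.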

Shared understanding may be viewed from two different levels of ambition. On an easier level, shared understanding is taken as general guidance and an attitude towards other people's opinions. Main points and disagreements are summarised in writing, so that an outsider is able to understand the overall picture.

On an ambitious level, the idea of documenting all opinions and their reasonings is taken literally. Participants' views are actively elicited and tested to see whether a facilitator is able to reproduce their thought processes. The objective here is to document the thinking in such a detailed way that a participant's response can be anticipated from the description they have given. The purpose is to enable effective participation via documentation, without a need to be present in any particular hearing or other meeting.

Written documentation with an available and usable structure is crucial in spreading shared understanding among those who were not involved in discussions. Online tools such as wikis are needed especially in complex topics, among large groups, or if the ambition level is high.

Good assessment models are able to quickly and easily incorporate new information or scenarios, so that they can be run again and again and users can learn from these changes. In a similar way, a comprehensive shared understanding can incorporate new information from the participants. A user should be able to quickly update the knowledge base, change the point of view, or reanalyse what the situation would look like with alternative valuations. Such a level of sophistication necessarily requires a few concepts that have not yet been described.

Value profile

A value profile is a list of values, preferences, and choices made and documented by a participant. Voting advice applications produce a kind of value profile. The candidates answer questions about their values, worldviews, or decisions they would make if elected. The public can then answer the same questions and analyse which candidates share their values. Nowadays, such applications are routinely developed by all major media houses for every national election in Finland. However, these tools are not used to collect value profiles from the public between elections, although such information could be used in decision support. Value profiles are mydata, i.e. data about which the individual themself may decide who is allowed to see and use it. This requires trusted and secure information systems.

Archetype

Archetypes are collections of values that are shared by a group, so that an individual can explicate their own values by simply saying that they are equal to those of an archetype. For example, political parties can develop archetypes to describe their political agendas and programs. Of course, individuals may support an archetype in most issues but diverge in some and document those separately. In this way, people may easily document value profiles in a shared understanding system. Practical tools for this do not yet exist, so little is known about how practical and accepted they would be.

Archetypes aim to save effort in gathering value data from the public, as not everyone needs to answer all possible questions when archetypes are used. They also increase security, because there is no need to handle individual people's answers; open aggregated value data can be used instead.
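Because practical tools for this do not yet exist, the following Python sketch is only a hypothetical illustration of the idea: an individual's value profile is an archetype's answers overridden by the issues on which the person diverges, and only aggregated counts per answer are published. The function names, archetypes, and questions are assumptions, and secure storage of the individual profiles is out of scope.

```python
from collections import Counter


def value_profile(archetype, deviations):
    """Individual value profile: the archetype's answers, overridden where the person diverges."""
    profile = dict(archetype)
    profile.update(deviations)
    return profile


def aggregate(profiles, question):
    """Open, aggregated view: how many profiles give each answer to one question."""
    return Counter(p.get(question, "no answer") for p in profiles)


# Hypothetical archetypes, e.g. published by two parties.
archetype_a = {"herring fishing quota": "reduce", "incinerator upgrade": "support"}
archetype_b = {"herring fishing quota": "keep", "incinerator upgrade": "oppose"}

profiles = [
    value_profile(archetype_a, {}),                                 # agrees on everything
    value_profile(archetype_a, {"herring fishing quota": "keep"}),  # diverges on one issue
    value_profile(archetype_b, {}),
]
print(aggregate(profiles, "herring fishing quota"))  # Counter({'keep': 2, 'reduce': 1})
```

Each person only has to document the deviations, which is where the effort saving comes from.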

Paradigm

Paradigms are collections of rules for making inferences from data in the system. For example, the scientific paradigm has rules about criticism and gives priority to hypotheses that are in accordance with data and rational reasoning. However, participants are free to develop paradigms with any rules of their choosing, as long as they can be documented and operationalised within the system. For example, a paradigm may state that in a conflict, priority is given to the opinion presented in a holy book. And in a hypothetical case where there is disagreement about what the holy book says, separate sub-paradigms may be developed for each interpretation. Mixture paradigms are also allowed. For example, a political party may follow the scientific paradigm in most cases, but when economic assessments are ambiguous, the party will choose an interpretation that emphasises the importance of an economically active state (or alternatively a market approach with a passive state).
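The mixture example above can be operationalised as a small Python sketch, assuming that each claim carries a flag for whether it holds against observations and a set of tags describing which worldview it favours. The representation, the function names `scientific` and `mixture`, and the example claims about a hypothetical "Policy X" are assumptions for illustration, not a documented part of the system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    text: str
    fits_observations: bool        # has the claim survived criticism based on data?
    tags: frozenset = frozenset()  # e.g. which worldview the claim favours


def scientific(claims):
    """Keep claims consistent with observations; if none are, the conflict stays open."""
    kept = [c for c in claims if c.fits_observations]
    return kept or list(claims)


def mixture(preferred_tag):
    """Scientific paradigm first; if several claims remain, prefer those tagged `preferred_tag`."""
    def paradigm(claims):
        kept = scientific(claims)
        if len(kept) > 1:
            kept = [c for c in kept if preferred_tag in c.tags] or kept
        return kept
    return paradigm


claims = [
    Claim("Policy X raises employment", True, frozenset({"active state"})),
    Claim("Policy X lowers employment", True, frozenset({"market"})),
    Claim("Policy X has no economic effects at all", False),
]
print([c.text for c in scientific(claims)])               # two claims remain: ambiguous
print([c.text for c in mixture("active state")(claims)])  # ['Policy X raises employment']
```

Representing each paradigm as a function over the same claims lets different participants apply their own rules to a shared knowledge base and compare the conclusions.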

This work is based on the idea that although the number of possible values and reasoning rules is very large, most of people's thinking can be covered with a fairly small number of archetypes and paradigms. As a comparison, there are usually from two to a dozen parties in a democratic country rather than hundreds. In other words, it is putatively an efficient system. If this is true, resources can be used to make the critical issues precise and informative, and these information objects can then be applied in numerous practical cases.

It should be emphasised that although we have used wording such as "make inferences in the system", this approach does not need to rely on artificial intelligence. Rather, the "system" we refer to consists of numerous contributors who co-create content and make inferences based on the rules described in paradigms. Parts of the work described here may be automated in the future, but the current system is mostly based on human work.

By now it seems clear that information in a description of shared understanding is very complex and often very large. So, a new research question emerges: how can all this information be written down and organised in such a way that it can easily be used and searched by both a human and a computer? A descriptive book would be too long for busy decision makers and unintelligible for computers. An encyclopedia would miss relevant links between items. A computer model would be a black box for humans.

Ontology

We suggest that in addition to all other information structures presented above, there is a need for an ontology that would acknowledge these information structures, special needs of decision situations, informal nature of public discussions, and computational needs of quantitative models. Such an ontology or vocabulary would go a long way in integrating readability for humans and computers.

The World Wide Web Consortium has developed the concepts of linked open data and the Resource Description Framework[26]. These have been used as the main starting points for ontology development. This work is far from final, but we will present the current version.

Ontologies are based on vocabularies with specified terms and meanings, and the relations between terms are also explicit. The Resource Description Framework is based on the idea of triples, which have three parts: subject, predicate, and object. These can be thought of as sentences: an item (subject) is related to (predicate) another item or value (object), thus forming a claim. Claims can further be specified using qualifiers and backed up by references. Such a block of information is called a statement. Extended causal diagrams can be described automatically as triples, and vice versa. Triple databases enable wide, decentralised linking of various sources and information. There is an open source solution, called Wikibase, for linking such a database directly to a wiki platform.
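The two-way correspondence between arrows in an extended causal diagram and subject-predicate-object triples can be sketched in plain Python as follows. This is not an RDF library and not the Wikibase data model; the classes `Triple` and `Statement`, the conversion functions, and the arrow labels (loosely based on the dioxin example in Figure 1, whose exact wording may differ) are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Triple:
    subject: str    # node at the tail of the arrow
    predicate: str  # relation written on the arrow
    obj: str        # node at the tip of the arrow


@dataclass
class Statement:
    claim: Triple
    qualifiers: dict = field(default_factory=dict)  # further specify the claim
    references: list = field(default_factory=list)  # back up the claim


def diagram_to_triples(arrows):
    """Each arrow (tail, label, tip) of an extended causal diagram becomes one triple."""
    return [Triple(tail, label, tip) for tail, label, tip in arrows]


def triples_to_diagram(triples):
    """Inverse mapping: nodes are all subjects and objects; arrows are the triples."""
    nodes = sorted({t.subject for t in triples} | {t.obj for t in triples})
    arrows = [(t.subject, t.predicate, t.obj) for t in triples]
    return nodes, arrows


arrows = [
    ("waste incineration emissions", "increase", "dioxin deposition into the Baltic Sea"),
    ("dioxin deposition into the Baltic Sea", "increases", "dioxin concentration in Baltic herring"),
    ("EU Parliament", "decides on", "herring fishing intensity"),
]
triples = diagram_to_triples(arrows)
statement = Statement(triples[1], qualifiers={"region": "Baltic Sea"},
                      references=["hypothetical-source-id"])
nodes, round_trip = triples_to_diagram(triples)
print(round_trip == arrows, len(nodes))  # True 5
```

Only the arrows are stored; everything else about a node, such as the internal structure of an organisation or an article, can stay outside the triple set unless it is needed.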

The current version of the open policy practice ontology focusses on describing all information objects and terms described above, and making sure that there is a relevant item or relation to every piece of information that is described in an extended causal diagram, open assessment, or shared understanding. However, the strategy here is not to describe all possible information with such a structure, but only the critical part related to the decision situation.

For example, Figure 1 shows that the EU Parliament and Ministry Council have a role in fishing regulation, but this graphical representation of the triple does not attempt to tell anything else about these large and complex organisations, except that they have a role in the case and they make a decision about herring fishing intensity. Also, Figure A1-1 in Appendix 1 lists two scientific articles. The content of these articles is not described, because even a small effort (connecting an article identifier and a topic) increases the availability and usability of the article considerably. In contrast, a further step of structuring the article content into the causal diagram would take much more work and give less added value, unless someone identifies an important new statement in the article, making the effort worth it.

Thus, the aim is that any publication or other piece of new information could find its proper place within this complex network of triples fairly easily and with little work. The internal structure of the information within each piece is documented only as necessary.
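To illustrate how little structure is needed to place a publication in the network, the following minimal Python sketch records a link between an article and a topic as a single statement with optional qualifiers and references. The class, its field names, the helper `link_publication`, the predicate "is about", and the identifiers are all hypothetical; they only loosely mirror the statement concept described above and are not the Wikibase API.

```python
from dataclasses import dataclass, field


@dataclass
class Statement:
    """A claim (subject, predicate, object) plus optional context."""
    subject: str
    predicate: str
    obj: str
    qualifiers: dict = field(default_factory=dict)  # e.g. time or scope of the claim
    references: list = field(default_factory=list)  # sources backing the claim

    def as_triple(self):
        """Drop qualifiers and references, keeping only the bare claim."""
        return (self.subject, self.predicate, self.obj)


def link_publication(article_id, topic, source=None):
    """Attach a publication to a topic without describing its content.

    Hypothetical helper: the article's internal structure is left
    undocumented; only its identifier is connected to the topic.
    """
    refs = [source] if source else []
    return Statement(article_id, "is about", topic, references=refs)


# Usage with a placeholder identifier (not a real article).
s = link_publication("doi:10.0000/example", "Dioxins in Baltic herring")
print(s.as_triple())
```

The article content could later be broken down into further statements, but only if someone finds a claim in it that matters for the decision situation.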

For a full description of the current vocabulary in the ontology, see Appendix 1.

Results and evaluation

| Topic | Assessment | Year | Project |
|---|---|---|---|
| Vaccine effectiveness and safety | Assessment of the health impacts of H1N1 vaccination[27] | 2011 | In-house, collaboration with Decision Analysis and Risk Management course |
| | Tendering process for pneumococcal conjugate vaccine[28] | 2014 | In-house, collaboration with the National Vaccination Expert Group |
| Energy production, air pollution and climate change | Helsinki energy decision[29] | 2015 | In-house, collaboration with city of Helsinki |
| | Climate change policies and health in Kuopio[30] | 2014 | Urgenche, collaboration with city of Kuopio |
| | Climate change policies in Basel[31] | 2015 | Urgenche, collaboration with city of Basel |
| | Availability of raw material for biodiesel production[21] | 2012 | Jatropha, collaboration with Neste Oil |
| | Health impacts of small-scale wood burning[32] | 2011 | Bioher, Claih |
| Health, climate, and economic effects of traffic | Gasbus - health impacts of Helsinki bus traffic[33] | 2004 | Collaboration with Helsinki Metropolitan Area |
| | Cost-benefit assessment on composite traffic in Helsinki[14] | 2005 | In-house |
| Risks and benefits of fish eating | Benefit-risk assessment of Baltic herring in Finland[34] | 2015 | Collaboration with Finnish Food Safety Authority |
| | Benefit-risk assessment of methyl mercury and omega-3 fatty acids in fish[35] | 2009 | Beneris |
| | Benefit-risk assessment of fish consumption for Beneris[36] | 2008 | Beneris |
| | Benefit-risk assessment on farmed salmon[37] | 2004 | In-house |
| | Benefit-risk assessment of Baltic herring and salmon intake[11] | 2018 | BONUS GOHERR |
| Dioxins, fine particles | TCDD: A challenge to mechanistic toxicology[12] | 1999 | EC ENV4-CT96-0336 |
| | Comparative risk assessment of dioxin and fine particles[38] | 2007 | Beneris |
| Plant-based food supplements | Compound intake estimator[39] | 2014 | Plantlibra |
| Health and ecological risks of mining | Paakkila asbestos mine[13] | 1999 | In-house |
| | Model for site-specific health and ecological assessments in mines[40] | 2013 | Minera |
| | Risks of water from mine areas[41] | 2018 | Kaveri |
| Drinking water safety | Water guide[42] | 2013 | Conpat |
| Organisational assessments | Analysis and discussion about research strategies or organisational changes within THL | 2017 | In-house |
| | Transport and communication strategy in digital Finland[43] | 2014 | Collaboration with the Ministry of Transport and Communications of Finland |
| Information use in government decision support | Case studies: Assessment of immigrants' added value; Real-time co-editing, Fact-checking, Information design[44] | 2016 | Yhtäköyttä, collaboration with Prime Minister's Office |

The methods described above have been used in several research projects (see the funding declaration) and health assessments (some mentioned in Table 8) since 2004. They have also been taught at the international Kuopio Risk Assessment Workshops for doctoral students in 2007, 2008, and 2009 and in the Master's course Decision Analysis and Risk Management (6 credit points), organised by the University of Eastern Finland (previously University of Kuopio) in 2011, 2013, 2015, and 2017.

As methods and tools were developed side by side with practical assessment work, there is extensive experience of some parts of the method. Some newer parts (e.g. value profiles) are merely ideas with no practical implementation yet. This evaluation is based on the experience accumulated during scientific, expert, and teaching work. We structure it according to the properties of good assessment, applying them to the method itself.

Quality of content

Open policy practice does not restrict the use of any previous methods that are necessary for successful decision support. Therefore, it is safe to say that the quality of the produced information is at least as good as that obtained with those methods alone. We have noticed in numerous cases that the structures offered for, e.g., assessments or knowledge crystals help in organising and understanding the content.

Criticism is emphasised in open policy practice as an integral part of the scientific method, and giving critique is made as easy as possible. Surprisingly, we still see fairly little of it in practical assessments. There seem to be several reasons: experts have little time to actually read other people's assessments and give detailed comments; people are reluctant to interfere with other people's work even within a joint project, so they prefer to keep to a strict division of tasks; there are no rewards or incentives for giving critique; and when an assessment spans several web pages, it is not clear where and how to contribute.

We have seen a lack of criticism even in vaccine-related assessments that are potentially emotive. With active facilitation we were able to get comments and critique from both the drug industry and vaccine citizen organisations, and they were all very matter-of-fact. This was interesting, as the same topics cause outrage in social media, but we did not see that in structured assessments. Notably, one of the most common objections to open assessment is the fear that outside contributions will be ill-informed and malevolent. Such contributions have never appeared in our assessments.

Lack of contributions limits the number of new views and ideas that could potentially be identified through open participation. However, even if the full potential of criticism is not realised, we do not think that the lack of open critique hampers the quality of assessments, because all the common practices such as experts' source-checking are still in place. In fact, current practices in research and assessment fare even worse with respect to open criticism: it rarely happens. Pre-publication peer review is almost the only time when scientific work is criticised by people outside a research group, and such reviews are typically not open. Only a minute fraction of published works are openly criticised in journals; poor work is simply left alone and forgotten.

However, there are some active platforms for scientific discussion, such as the physics preprint server arXiv.org, and similar platforms are emerging in other disciplines. Such a practice suits continually updated knowledge crystals much better than what is typically done in most research areas. Open discussion is increasing, and knowledge crystals are one way to facilitate this positive trend.

Relevance

A major issue with relevance is that the communication between decision makers and experts is not optimal, and therefore experts do not know well which questions need answers and how the information should be provided[1]. Also, decision makers prefer information that supports views that have already been selected on political grounds.

In open policy practice, the questions and structures aim to explicate relevant questions, so that experts get a clear picture of what information is expected and needed. They also focus discussions and assessment work on questions that have been identified as relevant. On the other hand, shared understanding and other explicit documents of relevant information make it harder for a decision maker to pick only favourable pieces of information, at least if there is political pressure. The pneumococcal vaccine assessment (see Table 8) had clear political pressure, and it would have been difficult for the decision maker to deviate from the conclusions of the assessment. However, assessments are typically taken as just one piece of information rather than normative guidance; this clearly depends on political culture, on how strong the commitment to evidence-based decision making is, and on how well an assessment succeeds in incorporating all relevant views.

Shared understanding and detailed assessments challenge participants to clarify what they mean and what they find relevant. For example, the composite traffic and biodiesel assessments were already quite developed when discrepancies between objectives, data, and draft conclusions forced a rethinking of the purposes of the assessments. In both cases, the main research questions were adjusted, and more relevant assessments and conclusions were produced. Political questions and arguments are also expected to become clearer in a similar way when more of them are subjected to systematic scrutiny.

Availability

A typical problem with availability is that a piece of information is designed for a specific user group and made available in a place targeted to that group. In contrast, open policy practice is designed with the idea that anyone can be a reader or contributor in a user group, so by default everything is made openly available on the Internet. In our experience, such an approach works well in practice as long as there are seamless links also to repositories for non-publishable data and contributors know open-source tools such as wiki and R. Such openness is, however, a major perceived problem for many experts; this issue is discussed further under acceptability.

Another problem is that even if users find an assessment page, they are unsure about its status and whether some information is missing. This is because many pages are work in progress and not finalised for end users. We have tried to clarify this by adding status declarations at the top of pages. Declaring drafts as drafts has also helped experts who are uncomfortable showing their own work before it is fully complete.

The information structure using knowledge crystals and one topic per page has proven to work well. Usually it is easy to find the page of interest even with only a vague idea of its name or specific content. Also, it is mostly straightforward to see which information belongs to which page.

Some users have commented that they cannot find pages in Opasnet, but in our experience these problems are outweighed by the benefit that people can easily find detailed material with, e.g., search engines without prior knowledge of Opasnet. The material seems to be fairly well received, as there were 52,000 visits and 90,000 page views on the Finnish and English Opasnet websites in 2017. The most popular topics seemed to be ecological and health impacts of mining and drinking water safety. Also the pneumococcus vaccine assessment, Helsinki energy decision, and transport and communication strategy in digital Finland were popular pages while they were being prepared.

Lack of participation among decision makers, stakeholders, and experts outside the assessment team is a constant problem in making existing information available. Assessments are still seen as separate from the decision process, and the idea that scientific assessments could contain value judgements from the public is unprecedented. The closest analogue is environmental impact assessment, where the law requires public hearings, but many people are sceptical about the influence of the comments given. Wikipedia has also observed that only a few percent of readers ever contribute, and the number of active contributors is even lower[45].

Experts are a special group of interest, as they possess vast amounts of relevant information that is not readily available to decision makers. Yet we have found that experts are not easily motivated to do policy support work.

This emphasises the need for facilitation and active invitations for people to express their views. Importantly, direct online participation is not an objective as such but one way among others to collect views and ideas. It is also more important to cover all major ideas than to represent every person individually. In any case, there is a clear need to inform people about new possibilities for participation in societal decisions. We have found it very useful to simply provide links to ongoing projects to many different kinds of user groups across and outside organisations.

Open policy practice was designed so that it could incorporate any relevant information from experts and stakeholders. In practice, it is not used for that purpose, because it does not have an established role in decision processes. For many, non-public lobbying, demonstrations, and even spreading false information are more effective ways of influencing the outcome of a decision. All these methods deviate from the ideal of evidence-based policy, and therefore further studies are needed specifically on how this information could be better incorporated into and made available via shared understanding, and whether that improves decision processes. If shared understanding is able to offer acceptable solutions to disagreeing parties, it reduces the need to use political force, but so far we have too little experience to draw conclusions on that. We also do not yet know whether quiet people or marginal groups gain better visibility with such a web workspace, although in theory their opportunities are better.

It is also critical that the archival process is supported by the workspace. In Opasnet, archiving requires little extra work, as the information is produced in a proper format for archiving, backups are produced automatically, and it is easy to produce a snapshot of a final assessment. There is no need to copy information from one repository to another, yet it is also easy to store final assessments in external open data repositories.

Usability

⇤--#: Boundary object to be added, with a reference.

Also mention the various tools that are already available (Appendix 4). --Jouni (talk) 14:50, 24 January 2018 (UTC) (type: truth; paradigms: science: attack)