Information structure of Open Assessment


Revision as of 14:29, 29 August 2008

<accesscontrol>Members of projects,,Workshop2008,,beneris,,Erac,,Heimtsa,,Hiwate,,Intarese</accesscontrol>

This is a manuscript about the information structure of Open Assessment.

Information structure of open assessment

Jouni T. Tuomisto¹, Mikko Pohjola¹, Alexandra Kuhn²

¹National Public Health Institute, P.O.Box 95, FI-70701 Kuopio, Finland

²IER, Universitaet Stuttgart, Hessbruehlstr. 49 a, 70565 Stuttgart, Germany

Abstract

Background

Methods

Results

Conclusions

Background

Many future environmental health problems are global, cross administrative boundaries, and are caused by the everyday activities of billions of people. Urban air pollution and climate change are typical problems of this kind. The traditional risk assessment procedures, developed for single-chemical, single-decision-maker, pre-market decisions, do not perform well with these new challenges. There is an urgent need to develop new assessment methods that can deal with these new, fuzzy, but far-reaching and severe problems [1].

A major need is for a systematic approach that is not limited to any particular kind of situation (such as chemical marketing), and is specifically designed to offer guidance for decision-making. Our main interest is in societal decision-making related to environmental health, formulated into the following research question: How can scientific information and value judgements be organised for improving societal decision-making in a situation where open participation is allowed?

As the question shows, we consider it important that the approach covers wider domains than environmental health. In addition, the approach should be flexible enough to subsume the wide range of current methods that are designed for specific needs within the overall assessment process, such as source apportionment of exposures, genotoxicity testing, Monte Carlo simulation, or elicitation of expert judgement. As openness is one of the starting points for method development, we call the new kinds of assessments open assessments. Practical lessons about openness have been described in another paper [2].

In another paper from our group, we identified three main properties that the new assessment method should fulfil [3]: 1) the whole assessment work and the subsequent report are open to critical evaluation by anyone interested at any point during the work; 2) all data and all methods must be falsifiable and subject to scientific criticism; and 3) all parts of one assessment must be reusable in other assessments.

In this study, we developed an information structure that fulfils all three criteria. Specifically, we attempted to answer the following question: What object types are needed, and how should these objects be structured, such that the three criteria of openness, falsifiability, and reusability are fulfilled; the resulting structure can be used in practical assessment; and the assessment can be operationalised using modern computer technology?

Methods

The work presented here is based on research questions. The main question presented in the Background was divided into several smaller, more detailed questions about particular objects and their requirements. Most of the work consisted of a series of exploratory (and, according to most observers, exhaustive) discussions about the essence of the objects. The discussions were iterative: we repeatedly came back to the same topics until we found that the answers were coherent with other parts of our information structure, covered all major parts of the assessment work and report, and seemed conceptually clear enough to be practical.

The current answers to these questions should be seen as hypotheses that will be tested against observations and practical experience as the work goes on. The research questions are presented in the Results section together with their current answers. In this section, we briefly present the most important scientific methodologies that were used as the basis for this work.

PSSP is a general ontology for organising information and process descriptions[4]. PSSP offers a uniform and systematic information structure for all systems, whether small details or large integrated models. The four attributes of PSSP (Purpose, Structure, State, Performance) enable hierarchical descriptions where the same attributes are used in all levels.

A central practice in science is asking questions and giving answers that are falsifiable hypotheses. Falsification is a process where a hypothesis is tested against observations and is falsified if it is inconsistent with them [5]. The idea of falsification and scientific criticism is the reason why we have organised the whole development of the assessment method as research questions and attempts to answer them.

A Bayesian network is a probabilistic graphical model that represents a set of variables and their probabilistic independencies. It is a directed acyclic graph whose nodes represent variables and whose arcs represent conditional dependencies; the absence of an arc between variables encodes a conditional independence [6]. Nodes can represent any kind of variable, be it a measured parameter, a latent variable, or a hypothesis; they are not restricted to representing random variables. Generalizations of Bayesian networks that can represent and solve decision problems under uncertainty are called influence diagrams.
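To make the role of the arcs concrete, the following is a minimal Python sketch of a hypothetical three-node chain (emission, concentration, disease). All probabilities are invented for illustration. Because the joint distribution factorises along the arcs, each node is conditioned only on its parent; marginals and posteriors then follow by plain enumeration, which is practical only for small networks.

```python
from itertools import product

STATES = ("high", "low")

# Hypothetical chain: emission -> concentration -> disease. Illustrative numbers only.
p_emission = {"high": 0.3, "low": 0.7}
p_conc = {"high": {"high": 0.8, "low": 0.2},       # P(concentration | emission)
          "low": {"high": 0.1, "low": 0.9}}
p_disease = {"high": {"yes": 0.05, "no": 0.95},    # P(disease | concentration)
             "low": {"yes": 0.01, "no": 0.99}}

def joint(e, c, d):
    """Chain-rule factorisation along the arcs: each node conditions only on its parent."""
    return p_emission[e] * p_conc[e][c] * p_disease[c][d]

def marginal_disease(d):
    """P(disease = d), summing the joint over the unobserved nodes."""
    return sum(joint(e, c, d) for e, c in product(STATES, STATES))

def posterior_emission(e, d):
    """P(emission = e | disease = d) by Bayes' rule, i.e. reasoning against the arcs."""
    return sum(joint(e, c, d) for c in STATES) / marginal_disease(d)

print(round(marginal_disease("yes"), 4))            # 0.0224
print(round(posterior_emission("high", "yes"), 4))  # 0.5625
```

Observing the disease raises the probability of high emission from the prior 0.3 to about 0.56, even though no arc points from disease to emission.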

Decision analysis is concerned with identifying the best decision to take, assuming an ideal decision maker who is fully informed, able to compute with perfect accuracy, and fully rational. The practical application is aimed at finding tools, methodologies and software to help people make better decisions[7].
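The core computation of decision analysis can be sketched in a few lines of Python, assuming a hypothetical problem with two options, two uncertain states, and invented utilities. Each option is scored by its probability-weighted utility, and the idealised decision maker picks the maximum.

```python
# Hypothetical two-option, two-state decision problem; all numbers are invented.
state_probs = {"low_sensitivity": 0.6, "high_sensitivity": 0.4}
utility = {
    "reduce_emissions": {"low_sensitivity": 70.0, "high_sensitivity": 90.0},
    "status_quo": {"low_sensitivity": 100.0, "high_sensitivity": 40.0},
}

def expected_utility(option: str) -> float:
    """Probability-weighted utility of an option over the uncertain states."""
    return sum(p * utility[option][state] for state, p in state_probs.items())

def best_decision() -> str:
    """The ideal decision maker's choice: the option with maximal expected utility."""
    return max(utility, key=expected_utility)

for option in utility:
    print(option, round(expected_utility(option), 2))
print("best:", best_decision())
```

With these numbers, `reduce_emissions` has an expected utility of 78 against 76 for `status_quo`, so it is chosen even though `status_quo` is better in the more probable state.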

The pragma-dialectical theory is an argumentation theory that is used to analyse and evaluate argumentation in actual practice [8]. Unlike strictly logical approaches (which focus on the study of argument as a product) or purely communicative approaches (which emphasise argument as a process), pragma-dialectics was developed to study the entirety of an argumentation as a discourse activity. Thus, the pragma-dialectical theory views argumentation as a complex speech act that occurs as part of natural language activities and has specific communicative goals.

Results

Universal objects


This page is about different types of universal objects. The question about the attributes of universal objects is discussed on the page Attribute.

<section begin=glossary />

- A universal object is a kind of object with a particular purpose and a standardised structure according to its purpose and the PSSP ontology. Open assessment contains the following kinds of objects: assessment, variable, method, study, lecture, nugget, and encyclopedia article.

<section end=glossary />

Question

What are the kinds of objects that are needed to describe an assessment (including all its content about reality and all the work needed for creating the content) in such a way that all objects comply with the PSSP ontology?

Answer

Assessment is a process for describing a particular piece of reality with the aim of fulfilling a certain information need in a decision-making situation. The word assessment can also mean the end product of this process, i.e. some kind of assessment report. Often it is clear from the context whether assessment means the making of the report or the report itself. Methodologically, these are two different objects, called the assessment process and the assessment product, respectively. Unlike other universal objects, assessments are discrete objects with defined starting and ending points in time and specific, contextually and situationally defined goals. Decisions included in an assessment are described within the assessment, and they are no longer described as variables. In R, there was previously an S4 object called oassessment, but it is rarely, if ever, used nowadays.

A variable is a description of a particular piece of reality. It can be a description of physical phenomena or of value judgements. Variables are continuously existing descriptions of reality, which develop over time as knowledge about them increases. Variables are therefore not tied to any single assessment but can be included in several assessments. The variable is the basic building block for describing reality. In R, variables are implemented using an S4 object called ovariable.

A method is a systematic procedure for a particular information manipulation process that is needed as part of the assessment work. The method is the basic building block for describing the assessment work (unlike the other universal objects, which describe reality). In practice, methods are "how-to-do" descriptions of how information should be produced, collected, analysed, or synthesised in an assessment. Some methods can be about managing other methods. Typically, methods contain software code or another algorithm to actually perform the method easily. Previously, there was a subcategory of method called tool, but the difference was not clear, and the use of tool is deprecated. In R, methods are typically ovariables that contain dependencies and formulas for computing the result, but some context-specific information about the dependencies is missing. Therefore, the result cannot be computed until the method is used within an assessment.
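The difference between a variable and a method can be sketched in Python. The `Variable` class and the `use_in_assessment` function are hypothetical and only loosely mirror the description above; this is not the R `ovariable` API. A method carries a formula but leaves its dependencies unbound, so its result cannot be computed until an assessment supplies them.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional

@dataclass
class Variable:
    """Hypothetical stand-in for a variable object; not the R ovariable API."""
    name: str
    formula: Optional[Callable[..., float]] = None           # how the result is computed
    dependencies: Dict[str, Optional["Variable"]] = field(default_factory=dict)
    data: Optional[float] = None                              # result given directly

    def result(self) -> float:
        if self.data is not None:
            return self.data
        unbound = [k for k, v in self.dependencies.items() if v is None]
        if unbound or self.formula is None:
            raise ValueError(f"{self.name}: cannot compute, unbound dependencies {unbound}")
        return self.formula(**{k: v.result() for k, v in self.dependencies.items()})

def use_in_assessment(method: Variable, context: Dict[str, Variable]) -> Variable:
    """Return a copy of the method with its open dependencies bound to assessment variables."""
    bound = {k: (v if v is not None else context.get(k)) for k, v in method.dependencies.items()}
    return Variable(method.name, method.formula, bound)

# A method: the formula is known, but the inputs depend on the assessment context.
intake_method = Variable(
    "intake",
    formula=lambda concentration, breathing_rate: concentration * breathing_rate,
    dependencies={"concentration": None, "breathing_rate": None},
)

# Inside a (hypothetical) assessment, the context supplies the missing variables.
assessment_intake = use_in_assessment(intake_method, {
    "concentration": Variable("concentration", data=10.0),    # illustrative value
    "breathing_rate": Variable("breathing_rate", data=20.0),  # illustrative value
})
print(assessment_intake.result())  # 200.0
```

Calling `intake_method.result()` directly raises `ValueError`, which corresponds to a method whose result cannot be computed outside an assessment.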

Study is an information object that describes a research study and its answers, i.e. the observational or other data obtained. The study methods are described as the rationale of the object. Unlike traditional research articles, a study object contains little or no discussion, because the interpretation of the results happens in other objects, typically in variables for which the study contains useful information. A major difference from a variable is that the rationale of a study is fixed once the research plan has been fixed and the work done, and the answer, including the study results, is fixed once the data have been obtained and processed. The question (or scope) of a study reflects the generalisability of the study results; it is open to discussion and subject to change even after the study has been finished. In contrast, in a variable the question is typically fixed, and the answer and rationale change as new information comes up.

A lecture contains a piece of information that is to be conveyed to a defined audience with a defined learning objective. It can also be seen as a process during which the audience learns, instead of being a passive recipient of information.

Encyclopedia articles are objects that do not attempt to answer a specific research question. Instead, they are general descriptions about a topic. They do not have a universal attribute structure.

A nugget is an object that was originally designed to be written by a dedicated person or group of persons. Nuggets are not freely editable by others, and they do not have a universal structure.

Rationale

In general, descriptions of reality are treated as products, while the work needed to produce these descriptions is treated as a process. Assessment, variable, and class are product-type objects, and method is a process-type object.

In addition to the object types listed above we could add context as the object type that is above assessments in the hierarchical structure. In practice contexts typically do not need to be explicitly defined as such, rather only their influence in the assessments needs to be explicated, and therefore they are not considered here in any more detail.

A class is a set of items (objects) that share the same property or properties. Membership in a class is determined by an inclusion criterion, and the shared property is used as part of all objects that fulfil the criterion. Classes can be used to describe general information that is shared by more than one object. Classes efficiently reduce the redundancy of information in the open assessment system, which improves the inter-assessment efficiency of the assessment work. However, classes are still ambiguous as objects, and there has not been enough need for them; therefore, they are currently not used in open assessment. Another object type with similar problems is hatchery.

See also

| Help pages | Wiki editing • How to edit wikipages • Quick reference for wiki editing • Drawing graphs • Opasnet policies • Watching pages • Writing formulae • Word to Wiki • Wiki editing Advanced skills |

| Training assessment (examples of different objects) | Training assessment • Training exposure • Training health impact • Training costs • Climate change policies and health in Kuopio • Climate change policies in Kuopio |

| Methods and concepts | Assessment • Variable • Method • Question • Answer • Rationale • Attribute • Decision • Result • Object-oriented programming in Opasnet • Universal object • Study • Formula • OpasnetBaseUtils • Open assessment • PSSP |

| Terms with changed use | Scope • Definition • Result • Tool |

Keywords

Open assessment, object, information object, PSSP, ontology

References

- Briggs et al. (2008). Manuscript.

- Tuomisto et al. (2008). Open participation in the environmental health risk assessment. Submitted.

- Pohjola and Tuomisto (2008). Purpose determines the structure of environmental health assessments. Manuscript.

- Pohjola et al. (2008). PSSP - A top-level event/substance ontology and its application in environmental health assessment. Manuscript.

- Popper, Karl R. (1935). Logik der Forschung. Julius Springer Verlag. Reprinted in English, Routledge, London, 2004.

- Pearl, Judea (2000). Causality: Models, Reasoning, and Inference. Cambridge University Press. ISBN 0-521-77362-8.

- Raiffa, Howard (1997). Decision Analysis: Introductory Lectures on Choices Under Uncertainty. McGraw-Hill. ISBN 0-07-052579-X.

- Eemeren, F.H. van, & Grootendorst, R. (2004). A systematic theory of argumentation: The pragma-dialectical approach. Cambridge: Cambridge University Press.


Structure of an attribute


<section begin=glossary />

- An attribute is a property, i.e. an abstraction of a characteristic of an entity or substance. In open assessment in particular, it is a characteristic of an assessment product (assessment, variable, or class) or of an assessment process (method). In open assessment, all these objects have the same set of attributes.

<section end=glossary />

Question

- The research question about attributes:
  - What are the attributes and their structure for objects in open assessment such that
    - they cover all information types that may be needed for an object in an assessment,
    - they allow for open participation and discussion about any attribute,
    - their contents are subject to scientific criticism, i.e., falsifiable, and
    - they comply with the PSSP ontology?

- The research question about the attribute contents:
  - What are the parts of the attribute contents such that the attribute
    - contains the necessary description of the property under the attribute,
    - allows for open participation about the property,
    - allows for additional information that increases the applicability of the attribute content, and
    - allows for evaluation of the performance of the attribute content?

Answer

| Attribute | PSSP attribute | Question asked | Comments |
|---|---|---|---|
| Name | - | What should the object be called? | The name of an object must be unique, and it should describe what the object is intended to contain, in particular giving hints about the scope of the object. |
| Question | Purpose | What is the research question that this object attempts to answer? | Contains a description of the physical and abstract boundaries of the object. For assessment and variable objects, the question expresses what part of reality the object is intended to describe. The question has no true counterpart in reality; it always refers to the instrumental use purpose of the object it relates to. |
| Answer | State | What is the answer to the research question? | Expresses the state of the part of reality that the object describes. It is the outcome of the contents under the rationale attribute. |
| Rationale | Structure | How can you find out the answer to the research question? | Attempts to describe the internal structure of the part of reality that the object is intended to describe, and the relations of this interior with reality outside the scope. For assessment objects, the rationale appears in practice as a list of contents. For variables, it is a description of how the result of the variable can be derived or calculated. |

Although all the object types mentioned above have the same unified set of attributes, their sub-attributes can differ (see Assessment and Variable). This derives from differences in the nature and primary purpose of the object types, as well as from practical reasons.

In addition to the formally structured objects (e.g. assessments and variables), there may be objects that do not have a standardised format related to open assessment, such as data or models that are used in defining formally structured objects and their attributes. These freely structured objects are outside the information structure but can be, e.g., linked or referred to within the formally defined objects.

Each attribute has three parts:

- Actual content (only this has an impact on other objects). Depending on the nature of the object, the actual content can be qualitative or quantitative.

- Narrative description (to help in understanding the actual content). Includes evaluation of performance (e.g. uncertainty analysis, or evaluation of the calibration of the result against an external standard).

- Discussion (argumentation about issues in the actual content). The resolutions of the discussions are transferred to the actual content. Discussion is described in detail elsewhere.
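The attribute structure can be sketched as plain Python data classes. The names (`Attribute`, `UniversalObject`, `resolve`, `exported`) are hypothetical and not part of any Opasnet software. The sketch encodes two rules from the list above: only the actual content is visible to other objects, and the resolution of a discussion is transferred into the actual content.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

@dataclass
class Attribute:
    content: Any = None                                  # actual content: the only part other objects use
    narrative: str = ""                                  # explanation, incl. evaluation of performance
    discussion: List[str] = field(default_factory=list)  # argumentation about the content

    def resolve(self, closing_statement: Any) -> None:
        """Transfer the resolution of a discussion into the actual content."""
        self.content = closing_statement

@dataclass
class UniversalObject:
    name: str                                                # unique and descriptive
    question: Attribute = field(default_factory=Attribute)   # PSSP: purpose
    answer: Attribute = field(default_factory=Attribute)     # PSSP: state
    rationale: Attribute = field(default_factory=Attribute)  # PSSP: structure

    def exported(self) -> Dict[str, Any]:
        """What other objects see: only the actual content of each attribute."""
        return {"name": self.name,
                "question": self.question.content,
                "answer": self.answer.content,
                "rationale": self.rationale.content}

v = UniversalObject("Example variable")  # hypothetical object
v.question.content = "What is the annual mean concentration in the study area?"
v.answer.narrative = "Uncertainty not yet evaluated."
v.answer.discussion.append("arg1: the first estimate ignores traffic sources (attack)")
v.answer.resolve(12.0)                   # illustrative closing statement
print(v.exported())
```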

Rationale

Based on extensive thinking and discussion, especially in 2006-2009.


Structure of an assessment product


<section begin=glossary />

- Assessment is a process for describing a particular piece of reality with the aim of fulfilling a certain information need in a decision-making situation. The word assessment can also mean the end product of this process, i.e. an assessment report of some kind. Often it is clear from the context whether the term assessment refers to the making of the report or the report itself. Methodologically, these are two different objects, called the assessment process and the assessment product, respectively.

<section end=glossary />

Question

- What is a structure for an assessment such that it
  - contains a description of a certain piece of reality,
  - produces this description according to the use purposes of the product,
  - describes all the relevant phenomena that connect the decision under consideration and the outcomes of special interest (called indicators),
  - combines value judgements with the descriptions of physical phenomena,
  - can be applied in any domain,
  - inherits its main structure from universal objects,
  - complies with the PSSP ontology,
  - complies with decision analysis, and
  - complies with Bayesian networks?

Answer

All assessments aim to have a common structure so as to enable effective, partially automated tools for the analysis of information. An assessment has a set of attributes, which have their own sub-attributes.

| Attribute | Sub-attribute | Description |
|---|---|---|
| Name | | Identifier for the assessment. |
| Scope | | Defines the purpose, boundaries and contents of the assessment. Why is the assessment done? |
| | Question | What are the research questions whose answers are needed to support the decision? What is the purpose of the assessment? |
| | Intended use and users | Who is the assessment made for? Whose information needs does the assessment serve? How do we expect them to use the information? |
| | Participants | Who needs to participate to make the assessment a well-balanced and well-informed piece of work? Also, if specific reasons exist: who is not allowed to participate? The minimum group of people needed for a successful assessment is always described. If some groups must be excluded, this must be explicitly justified. |
| | Boundaries | Where are the boundaries of observation drawn? In other words, which factors are considered and which are left outside the observation? Spatial, temporal and population subgroup boundaries are typical in assessments. |
| | Decisions and scenarios | Which decisions and options are considered by the decision maker? Also, if scenarios (defined here as deliberate deviations from the truth) are used: which scenarios are used and why? In scenarios, some possibilities are excluded from observation in order to clarify the situation (e.g. what-if scenarios). For example, one could ask which climate change adaptation actions should be taken in a situation where the average temperature rises by more than two degrees Celsius. In this case, all scenarios where the temperature does not rise by more than two degrees are excluded from the assessment. |
| | Timing | Description of the work process. When does the assessment take place? When will it finish, and when will the decision be made? |
| Answer | | Gives the best possible answers to the research questions of the assessment based on the information collected. The answer is divided into two parts, results and conclusions. |
| | Results | Answers to all of the research questions asked in the scope and the analyses described in the rationale. If possible, a numerical expression or distribution with clear units. |
| | Conclusions | What conclusions about the question can be drawn from the results, given the purpose of the assessment? |
| Rationale | | Anything that is needed to make a critical reader understand the conclusions and to convince them that the conclusions are valid. Includes all the information that is required for a meaningful answer. |
| | Stakeholders | What stakeholders relate to the subject of the assessment? What are their interests and goals? |
| | Dependencies | What are the causal connections between decision actions and endpoints of interest? What issues must be studied to be able to predict the impacts of decision actions? These issues are operationalised using structured objects. A few different types of objects are needed: |
| | Analyses | What statistical or other analyses are needed to produce results that are useful for drawing conclusions about the question? Typical analyses are decision analysis (which of the decision alternatives produces the best, or worst, expected outcome) and value of information analysis (how much would decision-making benefit if all uncertainties were resolved?). |
| | Indices | Which indices are used in the assessment, and which are cut out as unnecessary, and when? |
| | Calculations | Actual calculations to produce the result, typically R code. |
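The two analyses named in the Analyses row can be sketched together in Python, assuming a hypothetical payoff table with invented numbers. Decision analysis picks the option with the best expected utility; the expected value of perfect information (EVPI) is the difference between the expected utility of deciding after learning the true state and that of deciding now. This is the standard textbook formulation, not necessarily the exact one used in any particular open assessment.

```python
# Hypothetical payoff table: utility of each decision option in each state of the world.
probs = {"low_risk": 0.7, "high_risk": 0.3}
utility = {
    "restrict": {"low_risk": 60.0, "high_risk": 80.0},
    "allow": {"low_risk": 100.0, "high_risk": 10.0},
}

def expected_utility(option: str) -> float:
    return sum(p * utility[option][s] for s, p in probs.items())

def decision_analysis():
    """Option with the best expected outcome under current uncertainty."""
    best = max(utility, key=expected_utility)
    return best, expected_utility(best)

def evpi() -> float:
    """Expected value of perfect information: how much better the decision would be,
    on average, if the true state were known before deciding."""
    with_info = sum(p * max(u[s] for u in utility.values()) for s, p in probs.items())
    return with_info - decision_analysis()[1]

print(decision_analysis())  # best option now and its expected utility
print(round(evpi(), 2))
```

With these numbers, `allow` is the best option under current uncertainty (expected utility 73), while knowing the state in advance would raise the expected utility to 94, so the EVPI is 21 utility units.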

Rationale

See also

Structure of a variable

Structure of a discussion


<section begin=glossary />

- Discussion is a method to organise information about a topic into the form of a hierarchical thread of arguments that tries to resolve whether a statement is true or not. In a discussion, anyone can raise any relevant points about the topic. Discussion is organised using the pragma-dialectical argumentation theory[1]. A discussion usually consists of three parts: 1) opening statement(s); 2) the actual discussion, organised as hierarchical threads of arguments; and 3) closing statement(s), which are updated based on the discussion, notably on any valid arguments pointing to them. When a closing statement is updated, the texts that refer to the discussion should be updated accordingly.

<section end=glossary />

Contributions in the form of remarks or argumentative criticism on the content of wiki pages are most welcome. They can change the outcome of an assessment, improve it, and make it more understandable for decision makers and other stakeholders. The discussions show the reasoning behind the work done in an assessment and indicate its objective and normative aspects. In this way, decision makers and stakeholders can judge for themselves whether they agree with such normative weightings. Discussion rules and formats facilitate the execution and synthesis of discussions.

Question

How should discussions be organised in such a way that

- they can capture all kinds of written and spoken information, facts and valuations related to a specific topic,

- there are straightforward rules about how the information should be handled,

- the approach facilitates the convergence to a shared understanding by easily identifying and describing differing premises and other reasons behind disagreements,

- the approach can be applied both a priori (to structure a discussion to be held) and a posteriori (to restructure a discussion already held)?

Answer

Discussion structure

Fact discussion: Example discussion showing a typical structure (Disc1)

Opening statement: Opening statements about a topic. This is the starting point of a discussion.

Closing statement: Outcome of the discussion, i.e. opening statement updated by valid arguments pointing to it. (Resolved, i.e., a closing statement has been found and updated to the main page.)

Argumentation:

⇤--arg1: This argument attacks the statement. Arguments always point to one level up in the hierarchy. --Jouni 17:48, 8 January 2010 (UTC) (type: truth; paradigms: science: attack)

Discussion rules

- Freedom of opinion. Everyone has the right to criticize or comment on the content of a discussion.

- A discussion is organised around an explicit statement or statements. The purpose of a discussion is to resolve which of the opening statements, if any, are valid. The statement(s) are updated according to the argumentation; this becomes the closing statement.

- A statement is defended or attacked using arguments, which themselves also can be defended and attacked. This forms a hierarchical thread or tree-like structure.

- Critique with a supporting, attacking, or commenting argument is stated in connection to what is being criticized.

- Arguments must be relevant to the issue that they target.

- Only statements made and arguments given can be attacked.

- An argument is valid unless it is attacked by a valid argument. Defending arguments are used to protect arguments against attacks, but if an attack is successful, it is stronger than a defense.

- Attacks must be based on one of two kinds of arguments:
  - The attacked argument is claimed to be irrelevant in its context.
  - The attacked argument is claimed to be untrue, i.e. inconsistent with observations.

- Other attacks, such as those based on evaluation of the speaker (argumentum ad hominem), are weak and are treated as comments rather than attacks.

- Argumentation must not be redundant. If arguments are repeated, they should be merged into one.

- You are expected to be committed to your statements, that is:
  - if someone doubts your statement or argument (comment), you must explain it (edit or defend);
  - if someone attacks your statement or argument (attack), you must defend it (defend).

- A discussion is called resolved when someone writes a closing statement based on the opening statement and the current valid arguments targeting it, and updates the text (typically on a knowledge crystal page) that is targeted by the discussion.

- However, discussions are continuous. This means that anyone can re-open a discussion with new arguments even if a closing statement has been written.
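The validity rule in the list above ("an argument is valid unless it is attacked by a valid argument") is naturally recursive. A minimal Python sketch, assuming a hypothetical `Node` tree in which each argument records whether it attacks, defends, or comments on its parent; under this reading of the rules, defenses and comments never override a valid attack, so they do not enter the computation.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Node:
    """A statement or argument; `relation` says how it treats its parent (target)."""
    text: str
    relation: str = "comment"  # "attack", "defend" or "comment"
    children: List["Node"] = field(default_factory=list)

    def add(self, child: "Node") -> "Node":
        self.children.append(child)
        return child

def is_valid(node: Node) -> bool:
    """Valid unless at least one valid argument attacks it.

    Defenses and comments are kept in the tree but, in this reading of the
    rules, never override a valid attack, so they are ignored here.
    """
    return not any(c.relation == "attack" and is_valid(c) for c in node.children)

statement = Node("Opening statement")
arg1 = statement.add(Node("arg1: observations contradict the statement", "attack"))
statement.add(Node("arg2: supporting observation", "defend"))
print(is_valid(statement))  # False: arg1 is a valid, unanswered attack
arg1.add(Node("arg3: arg1 is irrelevant in this context", "attack"))
print(is_valid(statement))  # True: arg3 invalidates arg1
```

Because resolution is recomputed from the tree, adding a new argument at any level (re-opening the discussion) can change the closing statement.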

Rationale

The structure of the discussion follows the principles of pragma-dialectics [1].

A discussion is typically an important detail of a larger whole, such as a knowledge crystal in an assessment, but it does not attempt to give a full answer to the knowledge crystal question. The purpose of a discussion is to identify which of the opening statements are valid, or how they should be revised to become valid.

Arguments are actually statements; the only difference is that the target of an argument is another argument or statement within a particular discussion, while the target of a statement is some explicit use outside the discussion, such as in the rationale of a knowledge crystal. Therefore, an argument can be upgraded into the statement of a new discussion if it is needed elsewhere.

How to discuss

Open policy practice embraces participation, in particular deliberative participation. Therefore, all contributions in the form of remarks or argumentative criticism on the content of assessments, variables, methods, and other content are most welcome. The contributions can change the outcome of the assessments by improving their information content and making it more understandable for decision makers, stakeholders and the public. Documented discussions also show the reasoning behind the work done in assessments, making it possible for decision makers, stakeholders and the public to judge for themselves whether they agree with the reasoning behind the outcomes. In order to obtain an orderly discussion, rules and a format for discussion in open policy practice have been created based on pragma-dialectics, a systematic theory of argumentation.

Discussion has a central role in the collaborative process of formulating questions, developing hypotheses as answers to these questions, and improving these hypotheses through criticism and corresponding corrections. When a diverse group of contributors participates in an assessment, disputes are likely to arise. Formal argumentation also offers a way to clarify and potentially resolve disputes. In collaborative assessments, every knowledge crystal and every part of it is subject to open criticism according to the rules modified from pragma-dialectics [1] (see Answer above).

When a discussion goes on, there is often a need to clarify the opening statement to make it better reflect the actual need of the discussion. Within a small group of actively involved discussants, the statement can be changed with a mutual agreement. However, this should be done with caution to not distort the original meaning of any existing arguments. Rather, it should be considered whether a new discussion with the revised opening statement should be launched.

Discussion structure

A discussion has three parts: opening statement(s), argumentation, and closing statement(s). Often also references are added to back up arguments. These are briefly described below using a discussion template. Argumentation consists of defending and attacking arguments and comments.

| Fact discussion: Statements accepted except if toldya. (Disc2) |

|---|

| Opening statement: Opening statement contains one statement or several alternative, conflicting statements. This explicates the dispute at hand. In Opasnet, it must be relevant to the page where the discussion is located. |

| Closing statement: Closing statement contains the current valid statement of the discussion, revised based on the opening statements and the valid arguments targeting it. In this example, the current arguments indicate that the opening statement is accepted, except under the paradigm toldya, in which it is not. The content of a closing statement is transferred to the texts that refer to this discussion (in Opasnet, such references typically come from a knowledge crystal page to its own talk page, where the discussion is); after this, the discussion is called resolved. It should be noted that resolutions are always temporary, as discussions can be reopened with new arguments. (Resolved, i.e., a closing statement has been found and updated to the main page.) |

| Argumentation: |

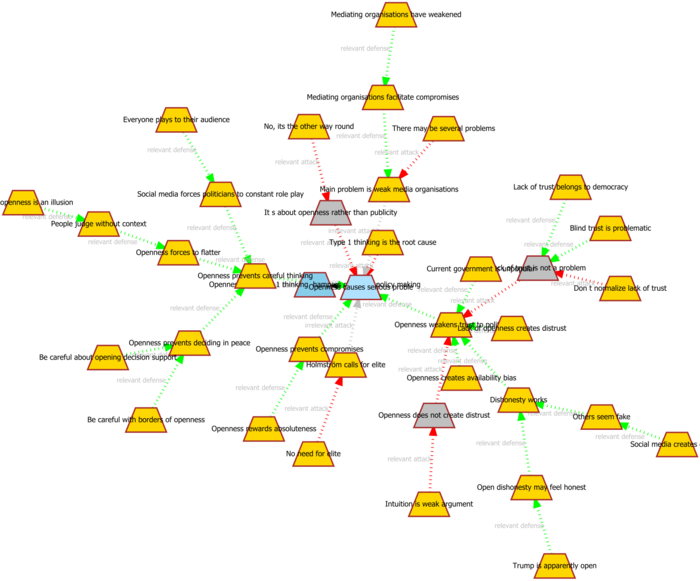

The figure above shows a discussion started by Bengt Holmström about problems of open governance. Each argument is shown as a trapezoid. The discussion is organised around an opening statement (pink), which develops into a closing statement (blue for facts, green for values) during the discussion process.
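The three parts of a discussion described above, and the way a resolution stays temporary, can be sketched as a small data structure. This is a minimal Python sketch under our own assumptions: the names `Discussion`, `ThreadArgument`, `resolve` and `reopen` are hypothetical and do not correspond to any Opasnet code.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ThreadArgument:
    """One argument in the threaded argumentation (hypothetical model)."""
    id: str
    content: str
    relation: str  # "attack", "defense" or "comment" towards its parent
    replies: List["ThreadArgument"] = field(default_factory=list)


@dataclass
class Discussion:
    """Opening statement(s), argumentation and closing statement (hypothetical model)."""
    opening_statements: List[str]
    argumentation: List[ThreadArgument] = field(default_factory=list)
    closing_statement: Optional[str] = None
    resolved: bool = False

    def resolve(self, closing_statement: str, referring_text_updated: bool) -> None:
        # The discussion counts as resolved only after the closing statement
        # has been transferred to the text that refers to the discussion.
        self.closing_statement = closing_statement
        self.resolved = referring_text_updated

    def reopen(self, new_argument: ThreadArgument) -> None:
        # Resolutions are temporary: a new argument reopens the discussion.
        self.argumentation.append(new_argument)
        self.resolved = False
```

In this sketch, a discussion resolved with `resolve(..., True)` becomes unresolved again as soon as `reopen` adds a new argument, while the last closing statement is kept until it is revised.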

Structure of an argument

Each argument has the following properties (see table below).

| Id | Title | Content | Sign | Target | Type | Paradigm | Relation | Result | Comment |

|---|---|---|---|---|---|---|---|---|---|

| arg1234 | Short title for display | Actual argument | Signature | arg9876 | relevance | science | attack | 1 | If paradigm changes (all else equal), relation may change, although typically only the result changes. |

| arg1234 | Short title for display | Actual argument | Signature | arg5555 | relevance | science | comment | 0 | |

| arg1234 | Short title for display | Actual argument | Signature | arg6666 | truth | science | defense | 1 | Truth refers to the truth of the target |

| arg1234 | Short title for display | Actual argument | Signature | arg1234 | selftruth | science | attack | 0 | Selftruth refers to the truth of the argument itself, unlike other types that refer to the target. |

| arg1234 | Short title for display | Actual argument | Signature | arg9876 | relevance | toldya | comment | 0 | |

| arg1234 | Short title for display | Actual argument | Signature | arg5555 | relevance | toldya | defense | 1 | |

| arg1234 | Short title for display | Actual argument | Signature | arg6666 | truth | toldya | attack | 0 | |

| arg1234 | Short title for display | Actual argument | Signature | arg1234 | selftruth | toldya | comment | 1 | The relation in case of type=selftruth is irrelevant and is ignored. |

Id, Title, Content and Sign are unique to an argument; Target and Type are unique to an argument-target pair; Paradigm, Relation and Result are unique to a triple of argument, target and paradigm.

Importantly, an argument always has the same id, title, content, and signature. Even if the argument is used several times in different parts of a discussion, it is still a single argument with no variation in these parameters. However, an argument may target several other arguments (each target is shown as an arrow on an insight network graph). Each of these arrows has exactly one type (either relevance or truth); if an argument targets itself, the type is selftruth.

Finally, people may disagree about the target relation (whether an argument is attacking, defending, or commenting on a target argument) and also about whether the relation is successful or not. These disagreements are operationalised as paradigms. One paradigm has exactly one opinion about the relation and the result (e.g. that an argument is an untrue attack), while another paradigm may reach another conclusion (e.g. that the argument is a true defense).
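The three levels of uniqueness (argument, argument-target pair, argument-target-paradigm triple) map naturally onto three record types. The following Python sketch is our own illustration, not Opasnet's storage format; the class names and the `table_rows` helper are hypothetical. It shows how the argument table is a flattening of these levels.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class ArgumentCore:
    """Unique to an argument: the same wherever the argument is used."""
    id: str
    title: str
    content: str
    sign: str


@dataclass(frozen=True)
class TargetLink:
    """Unique to an argument-target pair (one arrow on the insight graph)."""
    argument_id: str
    target_id: str
    type: str  # "relevance" or "truth"; "selftruth" when the target is the argument itself


@dataclass(frozen=True)
class Judgement:
    """Unique to an argument-target-paradigm triple."""
    relation: str  # "attack", "defense" or "comment"
    result: int    # 1 if the relation holds under the paradigm, else 0


def table_rows(core: ArgumentCore, links: List[TargetLink],
               judgements: Dict[Tuple[str, str, str], Judgement]) -> List[tuple]:
    """Flatten the three levels into rows like those of the argument table."""
    rows = []
    for link in links:
        if link.argument_id != core.id:
            continue
        # judgements are keyed by (argument id, target id, paradigm)
        for (arg_id, target_id, paradigm), j in judgements.items():
            if (arg_id, target_id) == (link.argument_id, link.target_id):
                rows.append((core.id, core.title, link.target_id, link.type,
                             paradigm, j.relation, j.result))
    return rows
```

Keeping the levels separate means that a disagreement between paradigms only adds `Judgement` entries; the argument's content and its arrows are stored once.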

Parameters are defined in the argument template of Opasnet, and they are embedded into the HTML code when a wiki page is parsed. It is therefore possible to collect the data by page scraping. The following CSS selectors are used to identify the properties of arguments.

| Parameter | Css selector (Opasnet page scraping) | Requirements |

|---|---|---|

| Id | .argument attr=id | Must start with a letter |

| Title | .argument .title | Short text. Is shown on insight graph as node label |

| Content | .argument .content | Text, may be long. Is shown with hover on graph |

| Sign | .argument .sign a:first-of-type | Must contain a link to participant's user page. Is shown with hover on graph |

| Target | NA | Previous argument one level up, or the statement for arguments on the first level |

| Type | .argument i.type | One of the three: relevance, truth, or selftruth (or "both", which is deprecated) |

| Paradigm | .argument .paradigm | Each paradigm should be described on a dedicated page. The rules implemented must be clear |

| Relation | .argument .relation | Is one of these: attack, defense, comment. "Branches" are typically uninteresting and ignored. |

| Result | | Truthlikeness of the relation. Either 1 or 0 |
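Once the properties have been scraped, the Requirements column can be checked mechanically. This Python sketch assumes that each argument has already been scraped into a plain dictionary (the keys `id`, `sign`, `type`, `relation` and `result` are our own choice), that `sign` holds the href of the first link, so that a user page is recognised by the substring `User:`, and that `result` is an integer. These are assumptions for illustration, not Opasnet behaviour.

```python
ALLOWED_TYPES = {"relevance", "truth", "selftruth"}
DEPRECATED_TYPES = {"both"}
ALLOWED_RELATIONS = {"attack", "defense", "comment"}


def check_argument(record: dict) -> list:
    """Problems found in one scraped argument record (empty list if none)."""
    problems = []
    arg_id = record.get("id", "")
    if not (arg_id and arg_id[0].isalpha()):
        problems.append("id must start with a letter")
    # Assumption: 'sign' holds the href of the first link in the signature.
    if "User:" not in record.get("sign", ""):
        problems.append("sign must link to a user page")
    arg_type = record.get("type")
    if arg_type in DEPRECATED_TYPES:
        problems.append("type 'both' is deprecated")
    elif arg_type not in ALLOWED_TYPES:
        problems.append("type must be relevance, truth or selftruth")
    if record.get("relation") not in ALLOWED_RELATIONS:
        # "Branches" and other values are typically ignored in analyses.
        problems.append("relation must be attack, defense or comment")
    # Assumption: the scraper has already converted the result to an int.
    if record.get("result") not in (0, 1):
        problems.append("result must be 0 or 1")
    return problems
```

Returning a list of problems rather than raising an error lets a scraper report every faulty argument on a page in one pass.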

Validity and relevance

- Main article: Paradigm.

Each argument may be valid or invalid, meaning that it does or does not affect its target argument, respectively. Validity depends on two properties of an argument: it is valid if and only if it is true and relevant. Arguments that are untrue or irrelevant are invalid. It should be noted that with arguments, truth and relevance are understood in a narrow, technical sense: if an argument fulfils certain straightforward truth criteria, it is considered true, and the same applies to relevance. This does not mean that such arguments are true or relevant in an objective sense; rather, these are grassroots-level practical rules that ideally make the system as a whole converge towards what we consider truth and relevance. The current default criteria (called the "scientific paradigm") are the following.

An argument is true iff

- it is backed up by a reference, and

- it is not attacked by a valid argument about its truthlikeness (a truth-type argument).

An argument is relevant iff

- it is not attacked by a valid argument about its relevance (a relevance-type argument).
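The truth and relevance criteria are recursive: an attacker only counts if it is itself valid. The Python sketch below evaluates them over a threaded discussion. It assumes, for simplicity, that each argument has exactly one target and that the thread has no cycles; the `Arg` record and its field names are hypothetical.

```python
from dataclasses import dataclass
from functools import lru_cache
from typing import Dict, List, Optional


@dataclass
class Arg:
    id: str
    target: Optional[str]       # None marks the opening statement
    relation: str = "comment"   # "attack", "defense" or "comment" towards the target
    type: str = "truth"         # which property of the target is addressed
    has_reference: bool = False


def scientific_validity(args: List[Arg]) -> Dict[str, bool]:
    """Validity of each argument under the scientific-paradigm rules (one target per argument assumed)."""
    by_id = {a.id: a for a in args}
    replies: Dict[str, List[Arg]] = {a.id: [] for a in args}
    for a in args:
        if a.target is not None:
            replies[a.target].append(a)

    def attacked_by_valid(arg_id: str, kind: str) -> bool:
        return any(r.relation == "attack" and r.type == kind and is_valid(r.id)
                   for r in replies[arg_id])

    def is_true(arg_id: str) -> bool:
        # True iff backed by a reference and not attacked by a valid truth-type argument.
        return by_id[arg_id].has_reference and not attacked_by_valid(arg_id, "truth")

    def is_relevant(arg_id: str) -> bool:
        # Relevant iff not attacked by a valid relevance-type argument.
        return not attacked_by_valid(arg_id, "relevance")

    @lru_cache(maxsize=None)
    def is_valid(arg_id: str) -> bool:
        return is_true(arg_id) and is_relevant(arg_id)

    return {a.id: is_valid(a.id) for a in args if a.target is not None}
```

For example, an argument with a reference that is attacked only by an unreferenced truth-type argument stays true, but it becomes invalid as soon as a valid relevance-type argument attacks it.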

Truth is a property of an argument itself, so if it is true in one discussion, it is true always. Of course, this does not mean that a sentence used in an argument is true in all contexts, but rather that the idea presented in a particular context is true in all discussions. Therefore, people should be very clear about the context when they borrow arguments from other discussions.

In contrast, relevance is a property of the relation between an argument and its target argument (or target statement). Again, this is a context-sensitive property, and in practice, it is possible to borrow relevance from another discussion only if both the argument and its target appear in that exact form and context in both discussions.

Each argument is an attack (red), a defense (green), or a comment (blue) towards its target, and its colour shows which. The same argument may also attack or defend another argument, possibly with a different colour. This is because the colour is not the colour of the argument itself but of its relation with the target. There are a few ways to avoid confusion with these differing colours when using arguments on a wiki page.

- An argument is written once in one place, and then a copy of it (with only the arrow and the identifier) is pasted to all other relevant places, with proper colours for those relations.

- If there are several opening statements, the colour should always reflect the relationship to the first (i.e., primary) statement. If the primary statement changes, the colours should be changed accordingly.

The legacy templates (Attack, Defend invalid etc.) do not differentiate between truth and relevance, but only indicate validity. Therefore they are deprecated, and the new generic template Argument should be used instead. It is capable of showing relevance (irrelevant arguments have gray arrows) and truth (untrue arguments have gray content). If a legacy template is used, an invalid argument is assumed to be both irrelevant and untrue, and the scientific paradigm is assumed to apply. If other paradigms are used, this must be stated clearly in the text, because the legacy templates offer no functionality for it. In Opasnet, the Argument template is capable of describing five different paradigms and the relevance and truth values of each (see Practices in Opasnet).
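The display rules and the legacy fallback can be summarised as two small mappings. This Python sketch is only an illustration of the rules stated above; the style labels (`"normal"`, `"gray"`) and the dictionary layout are our own assumptions, not the implementation of Template:Argument.

```python
RELATION_COLOURS = {"attack": "red", "defense": "green", "comment": "blue"}


def display_style(relation: str, relevant: bool, true: bool) -> dict:
    """Colours of one argument-target relation (illustrative labels, not template code)."""
    return {
        # The arrow carries the relation colour, greyed out if irrelevant.
        "arrow": RELATION_COLOURS[relation] if relevant else "gray",
        # The content is greyed out if the argument is untrue.
        "content": "normal" if true else "gray",
    }


def from_legacy(relation: str, valid: bool) -> dict:
    """Legacy templates only record validity: invalid is read as irrelevant and untrue,
    and the scientific paradigm is assumed."""
    return {"paradigm": "science", "relation": relation, "relevant": valid, "true": valid}
```

Because colour belongs to the relation, `display_style` takes the relation as input; the same argument can therefore get a red arrow towards one target and a green one towards another.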

Paradigms in argumentation

Paradigms are collections of rules to determine when an argument is true or relevant. The scientific paradigm is the default in Opasnet, but any paradigm can be developed as long as its rules can be explicitly described and implemented. For example, Opasnet previously applied, implicitly, a paradigm called unattackedstand (although the name was coined only in summer 2018, and the very concept of paradigms was developed in early 2018). Unattackedstand has the same rules as the scientific paradigm, except that a true argument does not need a reference: a user backing up an argument with their signature is enough.

Paradigms may also have other rules than direct validity rules. For example, the scientific paradigm considers an argument based on observations stronger than an argument based on (expert) opinions without observations, and an argument ad hominem is even weaker.
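Since a paradigm is an explicit rule set, its rules can be written down as data. The Python sketch below encodes only the two rules described above: the base truth criterion of the scientific and unattackedstand paradigms, and the scientific paradigm's ordering of argument bases. The dictionary keys and the numeric strengths (which encode order only) are our own assumptions.

```python
from typing import Callable, Dict

# Base truth criterion of each paradigm, applied before counter-arguments are considered.
BASE_TRUTH: Dict[str, Callable[[dict], bool]] = {
    # Scientific paradigm: a true argument must be backed by a reference.
    "science": lambda arg: bool(arg.get("reference")),
    # Unattackedstand: a reference or the user's signature is enough.
    "unattackedstand": lambda arg: bool(arg.get("reference") or arg.get("sign")),
}

# Ordinal strength of argument bases in the scientific paradigm (higher is stronger);
# the numbers only encode the order, not any magnitude.
SCIENCE_STRENGTH = {"observation": 3, "expert opinion": 2, "ad hominem": 1}


def stronger(a: dict, b: dict) -> bool:
    """Whether argument a rests on a stronger basis than argument b."""
    return SCIENCE_STRENGTH[a["basis"]] > SCIENCE_STRENGTH[b["basis"]]
```

An argument that carries only a signature is thus untrue under the scientific paradigm but true under unattackedstand, which is exactly the difference that makes two paradigms reach different conclusions from the same discussion.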

However, the rules in a paradigm can be anything, e.g. that the strongest arguments are those by a particular user or from an authoritative source, such as a holy book. Such rules will clearly lead to different validity estimates and interpretations of a discussion, and the methods of discussion and open policy practice have been developed with this in mind. The outcome of such explicitly described differences in interpretation is called shared understanding, and it is considered the main product of these methods.

Practices in Opasnet

For discussions, the discussion structure described above should be used. In Opasnet, click the blue capital D in the toolbar at the top of the edit window to apply the discussion template. This is how the discussion format appears:

{{discussion

|id = unique identifier of discussion on this page

|Statements =

|Resolution =

|Resolved = Yes, if respective texts updated; empty otherwise.

|Argumentation = Threaded hierarchical list of arguments. Each argument is on its own line. Hierarchy is created by using indents (colon character : in the beginning of a line). For example:

{{argument|relat1=comment|id=1|content=The blue horizontal line on the toolbar represents the comment button. It yields this blue layout, which is used for comments and remarks.}}

:{{argument|relat1=attack|id=3|content=This red arrow represents an attacking argument. }}

::{{argument|relat1=defend|id=2|content=This green arrow represents a defending argument.}}

}}
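When discussions are re-organised or generated by a script, the threaded markup can be produced from a nested structure. This Python sketch is a hypothetical helper, not an Opasnet tool: it writes one `{{argument}}` call per line and adds one colon per level of the thread, as in the example above. It assumes that parameter values contain no `|` or `}}`, which would break the template call.

```python
from typing import List


def argument_line(arg: dict, depth: int) -> str:
    """One {{argument}} call, indented with one colon per thread level."""
    # Assumption: values contain no "|" or "}}", which would break the template call.
    params = "|".join(f"{key}={value}" for key, value in arg.items() if key != "replies")
    return ":" * depth + "{{argument|" + params + "}}"


def argumentation(args: List[dict], depth: int = 0) -> List[str]:
    """Depth-first walk of a nested argument thread."""
    lines = []
    for arg in args:
        lines.append(argument_line(arg, depth))
        lines.extend(argumentation(arg.get("replies", []), depth + 1))
    return lines


def discussion_markup(disc_id: str, statements: str, resolution: str,
                      resolved: bool, args: List[dict]) -> str:
    """A complete {{discussion}} call with the argumentation filled in."""
    body = "\n".join(argumentation(args))
    return (
        "{{discussion\n"
        f"|id = {disc_id}\n"
        f"|Statements = {statements}\n"
        f"|Resolution = {resolution}\n"
        f"|Resolved = {'Yes' if resolved else ''}\n"
        f"|Argumentation =\n{body}\n"
        "}}"
    )
```

A depth-first walk is used because the wiki markup expresses the hierarchy only through the order of lines and their leading colons.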

Arguments can have the parameters that are listed below (each parameter is shown on a separate line for clarity). Note that the parameters may be in any order, and it might be a good idea to show relat1 first. For details, see Template:Argument.

{{argument

| id = identifier of the argument, unique on this page, default: arg + 4 random digits

| title = a short description of the content; displayed on insight networks

| content = content of the argument

| sign = signature of the speaker, default: --~~~~

| type = type of the relation to the target argument, i.e. what is attacked or defended. Either truth or relevance.

| parad1 = main paradigm used to derive the relation between this argument and its target. This is used to format the argument.

| relat1 = relation type between the argument and its target according to the first paradigm: it has two words separated by a single space. The first is either relevant or irrelevant and the second either attack, defend, or comment.

| true1 = truthlikeness of the argument according to the first paradigm: either true or untrue.

| parad2 = the second paradigm used.

| relat2 = the second relation type according to paradigm2. Default: relat1

| true2 = the second truthlikeness according to paradigm2. Default: true1

| parad3 etc. up to parad5 in this wiki

}}
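The paradigm-specific parameters and their defaults can be read as follows. This Python sketch is our own interpretation, not Template:Argument's logic. Three points are assumptions: `relatN` and `trueN` for paradigms 3 to 5 fall back to `relat1` and `true1` (the text states this default only for paradigm 2); a one-word relation such as `comment`, as used in the examples above, is treated as relevant; and a missing `true1` means true.

```python
import random
from typing import Dict, Tuple

MAX_PARADIGMS = 5  # "up to parad5 in this wiki"


def parse_relation(value: str) -> Tuple[bool, str]:
    """'irrelevant attack' -> (False, 'attack').

    Assumption: a bare relation word (e.g. relat1=comment) is treated as relevant.
    """
    words = value.split(" ")
    if len(words) == 1:
        words = ["relevant"] + words
    if len(words) != 2:
        raise ValueError(f"expected two words, got {value!r}")
    relevance, relation = words
    if relevance not in ("relevant", "irrelevant") or relation not in ("attack", "defend", "comment"):
        raise ValueError(f"unknown relation {value!r}")
    return relevance == "relevant", relation


def paradigm_views(params: Dict[str, str]) -> Dict[str, dict]:
    """Relation and truthlikeness of one argument under each paradigm given."""
    relat1 = params["relat1"]
    true1 = params.get("true1", "true")  # assumption: true unless stated otherwise
    views = {}
    for i in range(1, MAX_PARADIGMS + 1):
        paradigm = params.get(f"parad{i}")
        if paradigm is None:
            continue
        # relatN and trueN fall back to the first paradigm's values (assumption for N > 2).
        relevant, relation = parse_relation(params.get(f"relat{i}", relat1))
        views[paradigm] = {"relevant": relevant, "relation": relation,
                           "true": params.get(f"true{i}", true1) == "true"}
    return views


def default_id() -> str:
    """Default id: 'arg' followed by four random digits."""
    return "arg" + "".join(random.choice("0123456789") for _ in range(4))
```

With these defaults, an argument only needs explicit `relat2` or `true2` values where the second paradigm actually disagrees with the first.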

Furthermore:

- If you agree with an argument made by others, you can place your signature (click the signature button in the toolbar) after that argument.

- Arguments may be edited or restructured. However, if there are signatures of other people, only minor edits are allowed without their explicit acceptance.

In order to contribute to a discussion you need to have a user account and be logged in.

Referring to a discussion in Opasnet

In a text that refers to a particular discussion (often the content page associated with the talk page where the discussion is), you should add links to the respective discussions at the relevant points. There are two kinds of links, R↻ and D↷.

Because all discussions can be re-opened, the difference between the two is not whether people are likely to participate in the discussion in the future. Instead, R↻ means that the current outcome of the discussion, whether an agreement or a continuing dispute, has been transferred to the main page, i.e. the contents of the main page reflect the current status of the discussion. In contrast, D↷ means that the discussion itself contains information that is not yet reflected on the main page; therefore, the reader should read the discussion as well to be fully aware of the status of the page. This way, there is no need to constantly update the main page during an active discussion. The updating can be done when the outcome of the discussion has stabilised.

Re-organising discussions afterwards

Free-format discussions can be re-organised a posteriori (afterwards) into the discussion structure presented here. The main tasks in this work are to

- document original sources of material,

- remove redundant text,

- structure the arguments around a useful opening statement,

- clarify arguments to be understandable without the context of the original discussion,

- analyse and synthesise outcome into a closing statement,

- save and publish your work.

See an example of re-organisation work in Discussion of health effects of PM2.5 in Finland (in Finnish).

Calculations

See also

- Individuals should own their personal data (or not?) | Kialo

- Breaking News: The Remaking of Journalism and Why It Matters Now, by Alan Rusbridger (ISBN 9780374279622)

- Aumann's agreement theorem - Wikipedia

- Discursive psychology - Wikipedia

- Usable environmental knowledge from the perspective of decision-making: the logics of consequentiality, appropriateness, and meaningfulness - ScienceDirect

- The Dilemmas of Citizen Inclusion in Urban Planning and Governance to Enable a 1.5 °C Climate Change Scenario, UP 3(2) (PDF)

- Deliberative Democracy and Public Dispute Resolution, Oxford Handbooks (oxfordhb-9780198747369-e-17, PDF)

- Lawrence Susskind - Wikipedia

- Remote facilitation of dialogue (Dialogin etäfasilitointi), DialogiAkatemia

- Kialo for organised discussions

- Structured Discussions: another discussion system for MediaWiki projects

- Iframe extension: may be considered as an alternative to an inside-wiki discussion system, as discussion functionality is "borrowed" from another website using iframes.

- Discussion

- Argument

- Dealing with disputes

- Discussion structure (archived in March 2010)

- Discussion method (archived in October 2009)

- Category:Ongoing discussions

- Category:Resolved discussions

- Template:Discussion (for technical usage of the template)

- Template:Argument (for technical usage of the template)

- Pragma-dialectical argumentation theory

- Keskustelu:Keskustelu: more guidance in the Finnish Opasnet

- Discourse website for intelligent discussions: the best contributions are voted to the top

- Stackoverflow about intelligent discussions on computers and ICT.

- Stack Exchange clones

- Question2Answer is a free and open source platform for Q&A sites.

References


Structure of a method


<section begin=glossary />

- Method is a systematic procedure for a particular information manipulation process that is needed as a part of assessment work. Typically, a method is a "how-to-do" instruction to calculate a variable; it is used if the dependencies of the variable are unknown until an assessment is executed. In other words, methods can be used to calculate variables within assessments in situations where it is not practical or possible to calculate variables outside an assessment. In these cases, the method page is used within an assessment (e.g. in the dependencies slot) as if it were the variable that it is used to calculate. Method is the basic building block for describing the assessment work, whereas the other universal objects describe reality. Some methods can be about managing other methods.

<section end=glossary />

Question

What is a structure for a method such that it

- applies to any method that may be needed in any assessment work,

- enables a detailed enough description so that the work can be performed based on it,

- complies with the structure of the universal objects.

Answer

Methods are typically ovariables that contain case-specific dependencies. Their names are given in the dependencies, but the output cannot be calculated until the method is used in some assessment where the outputs of these case-specific ovariables are known.

In contrast, methods commonly contain generic inputs, such as emission or unit factors, for calculating the output. These inputs are given in the Rationale/Input of the method.
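The split between case-specific dependencies and generic inputs can be sketched as an object that knows the names of its dependencies but cannot compute until an assessment supplies them. This is a hypothetical Python illustration, not Opasnet's ovariable implementation; the `Method` class, the `fuel_use` dependency and the emission factor value are made up.

```python
from typing import Callable, Dict, Sequence


class Method:
    """A method: named case-specific dependencies plus generic inputs (hypothetical model)."""

    def __init__(self, name: str, dependencies: Sequence[str],
                 inputs: Dict[str, float], formula: Callable[..., float]):
        self.name = name
        self.dependencies = list(dependencies)  # names only; values come from an assessment
        self.inputs = dict(inputs)              # generic inputs, as in Rationale/Input
        self.formula = formula

    def compute(self, assessment: Dict[str, float]) -> float:
        """Evaluate the method once an assessment supplies its dependencies."""
        missing = [d for d in self.dependencies if d not in assessment]
        if missing:
            raise LookupError(f"{self.name}: not available in this assessment: {missing}")
        values = {d: assessment[d] for d in self.dependencies}
        return self.formula(**values, **self.inputs)
```

For example, `Method("Emission calculation", ["fuel_use"], {"emission_factor": 0.5}, lambda fuel_use, emission_factor: fuel_use * emission_factor)` returns 50.0 for an assessment that provides `fuel_use = 100.0`, and raises `LookupError` in an assessment that does not.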

| Attribute | Subattribute | Comments |

|---|---|---|

| Name | An identifier | |

| Question | | Subattributes are not often needed as titles, because texts are short. |

| Answer | | Subattributes are not often needed as titles, because texts are short. |

| Rationale | | |

See also

References


Structure of a class

<section begin=glossary />

- Class is a set of items (objects) that share the same property or properties. The membership in a class is determined by an inclusion criterion. The property is utilised as a part of all objects that fulfill the criterion. Classes can be used in describing general information that is shared by more than one object. Class efficiently reduces the redundancy of information in the open assessment system. This improves the inter-assessment efficiency of the assessment work.

<section end=glossary />

- Research question about the class structure: What is a structure for a class such that it

- unambiguously describes the common property,

- unambiguously describes the inclusion criterion, i.e. the rule to find out whether an object has the property and belongs to the class or not,

- inherits the main structure from universal objects,

- complies with set theory,

- complies with the PSSP ontology.

The attributes of a class D↷ closely resemble those of a variable. However, the interpretation is slightly different, as can be seen from the table. In addition, the usage of data is not clear at the moment.

| Attribute | Subattributes | Comments |

|---|---|---|

| Name | Identifier for the class. | |

| Scope | Description of a property or properties, which are shared by all the items in the class. | |

| Definition | An inclusion criterion that unambiguously distinguishes whether a particular object has the defined properties or not. In other words, definition separates objects that belong to the class from those that do not belong. The definition also contains the discussion about memberships. | |

| Result | List of items (formally structured objects) that belong to the class. | |
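The class attributes in the table can be mirrored by a small record in which the definition is an executable inclusion criterion and the result is the list of objects that pass it. This Python sketch is hypothetical: the name `ObjectClass` (chosen to avoid Python's own `class` keyword) and the example objects are ours.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List


@dataclass
class ObjectClass:
    """A class of objects in the open assessment sense (hypothetical model)."""
    name: str
    scope: str                          # the shared property, in words
    definition: Callable[[Any], bool]   # inclusion criterion

    def result(self, objects: Iterable[Any]) -> List[Any]:
        """Items that fulfil the inclusion criterion, i.e. belong to the class."""
        return [obj for obj in objects if self.definition(obj)]
```

Writing the definition as a predicate makes the requirement that it "unambiguously distinguishes" members testable: every object either passes or fails it.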

Examples of use

A class may contain information about e.g. a good function that should be used to calculate the result, or a range of plausible values for a certain type of variable. Examples of these are given below.

A general dose-response function can be a class. For example, the multi-stage model for cancer dose-responses can be defined as

$$P(d) = 1 - \exp\left(-q_0 - q_1 d - q_2 d^2\right)$$

This function has four parameters: q0 (the "background"), q1 (the "slope" at low doses), q2 (the "curvature" parameter) and d (lifetime daily dose of the chemical of interest). The function can be applied to a particular chemical among a wide range of chemical carcinogens, if the chemical-specific parameters q0, q1, and q2 are known. The result attribute of this class is equal to the general form of the multi-stage function. The function is used in the definition/formula attribute of a dose-response variable of a particular chemical, together with the chemical-specific parameters. The result attribute of this variable is the dose-response of the particular chemical, with one parameter, d. This variable can then be applied in a case-specific risk assessment, when the parameter d is replaced by the dose in an exposure scenario in that assessment.

This is an efficient way of organising information: all discussion about the plausibility of the multi-stage model in general is located in the class. Therefore, this discussion is held only once, for all chemicals and all assessments. Also the discussion whether the multi-stage function applies to a particular chemical is located there. The resolution of that discussion applies to all risk assessments on that chemical. The chemical-specific dose-response variable contains the discussion about the best estimates of the chemical-specific parameters. And again, the variable is applicable to all risk assessments on that chemical. A particular risk assessment can focus on estimating the exposures. The whole dose-response part of the assessment is ready-made.
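The three layers in the dose-response example (general class, chemical-specific variable, case-specific use) correspond to progressively fixing the arguments of one function. This Python sketch illustrates that layering with partial application; the function names are hypothetical, and any parameter values used with it are made up for illustration, not estimates for a real chemical.

```python
import math
from typing import Callable


def multistage(d: float, q0: float, q1: float, q2: float) -> float:
    """Class result: the general multi-stage model P(d) = 1 - exp(-q0 - q1*d - q2*d**2)."""
    return 1.0 - math.exp(-q0 - q1 * d - q2 * d ** 2)


def chemical_dose_response(q0: float, q1: float, q2: float) -> Callable[[float], float]:
    """Chemical-specific variable: the class function with the chemical's parameters fixed.

    The returned function has a single parameter, the lifetime daily dose d.
    """
    return lambda d: multistage(d, q0, q1, q2)
```

A case-specific assessment then only supplies the dose from its exposure scenario, e.g. `chemical_dose_response(0.01, 0.002, 0.0)(dose)` with made-up parameters; the discussion about the model form stays with `multistage` and the parameter discussion with the chemical-specific variable.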

Prior values for variables can also be located in classes. For example, imagine a class "Plausible range of PM2.5 annual average mass concentrations in ambient air." This is a uniform probability distribution of concentrations ranging from about 3 µg/m³ (in Antarctica) to 300 µg/m³ (in downtown Delhi). This can be applied to PM2.5 variables to check for implausible values. The range (i.e., the value of the result attribute of the class) can be located in the definition/data attribute of, e.g., a variable "PM2.5 annual average concentration in downtown Kuopio." If we do have measurements from Kuopio, we can do Bayesian updating using the range as the prior. This way, we can operationalise the use of both the case-specific measurements and the general knowledge from the class.
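The Bayesian updating step can be sketched with a simple grid approximation: with a uniform prior on the plausible range, the posterior is proportional to the likelihood inside the range and zero outside it. This Python sketch assumes, for illustration only, independent measurements of the annual mean with normally distributed errors of known standard deviation `sd`; the measurement values used with it would be hypothetical.

```python
import math
from typing import Sequence

LOW, HIGH = 3.0, 300.0  # plausible range of annual mean PM2.5, ug/m3 (class result)


def is_plausible(value: float) -> bool:
    """Check a PM2.5 value against the class range."""
    return LOW <= value <= HIGH


def posterior_mean(measurements: Sequence[float], sd: float, n_grid: int = 2000) -> float:
    """Posterior mean of the true annual mean, uniform prior on [LOW, HIGH].

    Assumption: measurement errors are independent and normal with known sd.
    """
    step = (HIGH - LOW) / (n_grid - 1)
    grid = [LOW + i * step for i in range(n_grid)]
    # The uniform prior is constant, so the posterior is proportional to the likelihood.
    log_lik = [sum(-0.5 * ((m - mu) / sd) ** 2 for m in measurements) for mu in grid]
    top = max(log_lik)  # subtract the maximum for numerical stability
    weights = [math.exp(ll - top) for ll in log_lik]
    return sum(w * mu for w, mu in zip(weights, grid)) / sum(weights)
```

When the measurements lie well inside the range, the posterior mean is close to their average; the class prior matters mainly when data are scarce or fall near the edges of the plausible range.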

In practice, when new variables are created, they can partly be described using the results of existing classes, as long as the variable belongs to these classes. It may also be useful to allow case-specific information to overrule the class information, if this is explicitly mentioned. However, deviations from the general rule should be defended.
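One way to operationalise "deviations should be defended" is to start a variable from its class results and accept a case-specific value that differs from the class value only when a justification is given. The rule and the `describe_variable` helper below are our own hypothetical reading of the text, sketched in Python.

```python
from typing import Any, Dict


def describe_variable(class_results: Dict[str, Any], case_specific: Dict[str, Any],
                      justifications: Dict[str, str]) -> Dict[str, Any]:
    """Start from class results; accept a deviating case-specific value only if defended.

    Hypothetical rule: an undefended deviation from a class value is rejected.
    """
    merged = dict(class_results)
    for key, value in case_specific.items():
        deviates = key in class_results and value != class_results[key]
        if deviates and not justifications.get(key):
            raise ValueError(f"deviation from class value for {key!r} must be defended")
        merged[key] = value
    return merged
```

Information not covered by the class is simply added, while overriding a class value leaves a recorded justification that others can criticise.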

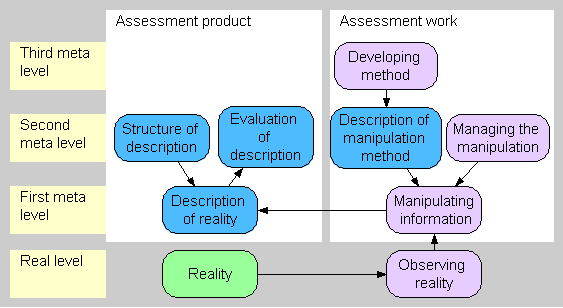

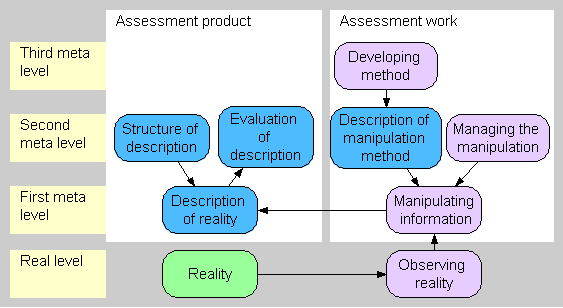

Objects in different abstraction levels

It is useful to see that the information structure has objects that lie on different levels of abstraction, on so-called meta-levels. Basically, the zeroth or real level contains the reality. The first meta-level contains objects that describe reality. The second meta-level contains objects that describe first-meta-level objects, and so on. Objects on any abstraction level can be described using the objects in this article.

Discussion

There are several formal and informal methods for helping decision-making. Since the 1980s, the information structure of a risk assessment has consisted of four distinct parts: hazard identification, exposure assessment, dose-response assessment, and risk characterisation[1]. Decision analysis helps in explicating the decision under consideration and the value judgements related to it, and in finding optimal solutions, assuming rationality.[2] U.S.EPA has published guidance for increasing stakeholder involvement and public participation.[3][4] However, we have not yet seen an attempt to develop a systematic method that covers the whole assessment work, fulfils the open participation and other criteria presented in the Background, and offers a platform that is both methodologically coherent and practically operational. The information structure presented here attempts to offer such a platform.

The information structure contains all parts of the assessment, including both the work and the report as product. All parts are described as distinct objects in a modular fashion. This approach makes it possible to evaluate, criticise, and improve each part separately. Thus, an update of one object during the work has a well-defined impact on a limited number of other objects, and this reduces the management problems of the process.
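The claim that an update has a well-defined impact on a limited number of objects can be illustrated with a dependency graph: the objects affected by an update are exactly those reachable downstream of it. This Python sketch uses a breadth-first search; the object names in any example graph are hypothetical.

```python
from collections import deque
from typing import Dict, List, Set


def affected_by(updated: str, dependents: Dict[str, List[str]]) -> Set[str]:
    """Objects that directly or indirectly depend on an updated object (breadth-first search)."""
    affected: Set[str] = set()
    queue = deque([updated])
    while queue:
        node = queue.popleft()
        for child in dependents.get(node, []):
            if child not in affected:
                affected.add(child)
                queue.append(child)
    return affected
```

In a hypothetical chain Emissions → Concentration → Exposure → Health impact, updating Concentration affects only Exposure and Health impact; Emissions and an independent dose-response object need no review.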

All relevant issues have a clear place in the assessment structure. This makes it easier for participants to see how they can contribute. On the other hand, if a participant already has a particular issue in mind, it might take extra work to find the right place in the assessment structure. But in a traditional assessment with public participation, this workload goes to an assessor. Organising freely structured contributions is a non-trivial task in a topical assessment, which may receive hundreds of comments.

We have also tested this information structure in practice. It has been used in environmental health assessments of, e.g., metal emission risks[5], drinking water[6], and pollutants in fish.[7] So far, the structures used have been plausible in practical work. Not all kinds of objects have been used extensively, and some practical needs for further structures have been identified. Thus, although the information structure is already usable, its development is likely to continue. In particular, the PSSP attribute Performance has not yet found its final place in the information structure. However, the work and comments so far have convinced us that performance can be added as a part of the information structure, and that it will be very useful in guiding the evaluation of an assessment before, during, and after the work.

The assessments using the information structure have been performed on a website where each object is a web page (http://en.opasnet.org). The website uses MediaWiki software, the same software that runs Wikipedia. MediaWiki is specifically designed for open participation and collaborative production of literary material, and it has proved very useful in the assessment work as well. Technical functionalities are being added to the website to facilitate work also by people who have no training in the method or the tools.

Conclusions

In summary, we developed a general information structure for assessments. It complies with the requirements of open participation, falsifiability, and reusability. It is applicable in practical assessment work, and there are Internet tools available to make the use of the structure technically feasible.

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

JT and MP jointly scoped the article and developed the main parts of the information structure. AK participated in the discussions, evaluated the ideas, demanded solid reasoning, and developed especially the structure of a method. JT wrote the manuscript based on material produced by all authors. All authors participated in revising the text and conclusions. All authors accepted the final version of the manuscript.

Acknowledgements

This article is a collaboration between several EU-funded and other projects: Beneris (Food-CT-2006-022936), Intarese (018385-2), Finmerac (Tekes grant 40248/06), and the Centre for Environmental Health Risk Analysis (the Academy of Finland, grants 53307, 111775, and 108571; Tekes grant 40715/01). The unified resource name (URN) of this article is URN:NBN:fi-fe200806131553.

References

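- ↑ National Research Council (1983). Risk Assessment in the Federal Government: Managing the Process (the "Red Book"). National Academy Press, Washington D.C.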

- ↑ Cite error: invalid ref tag; no text was provided for the reference named raiffa.

- ↑ U.S.EPA (2001). Stakeholder involvement & public participation at the U.S.EPA. Lessons learned, barriers & innovative approaches. EPA-100-R-00-040, U.S.EPA, Washington D.C.

- ↑ U.S.EPA (2003). Public Involvement Policy of the U.S. Environmental Protection Agency. EPA 233-B-03-002, Washington D.C. http://www.epa.gov/policy/2003/policy2003.pdf

- ↑ Kollanus et al. (2008) Health impacts of metal emissions from a metal smelter in Southern Finland. Manuscript.

- ↑ Päivi Meriläinen, Markku Lehtola, James Grellier, Nina Iszatt, Mark Nieuwenhuijsen, Terttu Vartiainen, Jouni T. Tuomisto. (2008) Developing a conceptual model for risk-benefit analysis of disinfection by-products and microbes in drinking water. Manuscript.

- ↑ Leino O., Karjalainen A., Tuomisto J.T. (2008) Comparison of methyl mercury and omega-3 in fish on children's mental development. Manuscript.

Figures

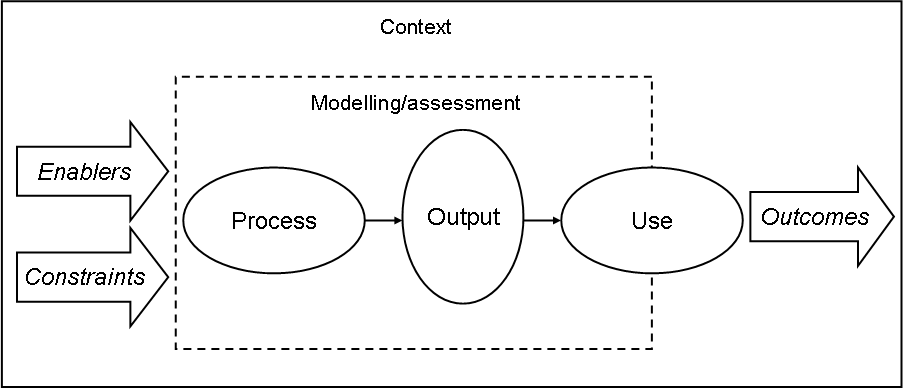

Figure 1. An assessment consists of the making of it and an end product, usually a report. The assessment is bound and structured by its context. Main parts of the context are the scientific context (methods and paradigms available), the policy context (the decision situation for which the information is needed), and the use process (the actual decision-making process where the assessment report is used).

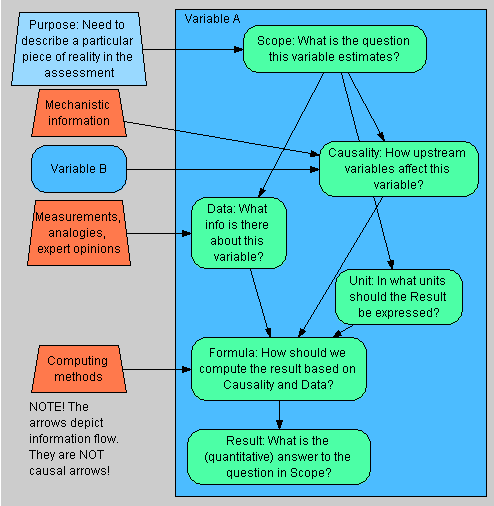

Figure 2. Subattributes of a variable and the information flow between them.

Figure 3. Information and work flow between different objects and abstraction levels of an assessment. The green node is reality that is being described, blue nodes are descriptions of some kind, and purple nodes are work processes. Higher-level objects are those that describe or change the nodes one level below. Note that the reality and usually the observation work (i.e., basic science) is outside the assessment domain.

Tables

Table 1. The attributes of a formally structured object in open assessment.

Table 2. The attributes of an assessment product (typically a report).

Table 3. The attributes of a variable.

Table 4. The attributes of a method.

Table 5. The attributes of a class.

Additional files

None.

- Pages with reference errors

- Open assessment

- Manuscript

- Glossary term

- Universal object

- Variables

- Moderator:Jouni

- Opasnet

- Opasnet training

- Moderator:Nobody

- Knowledge crystal

- Method

- THL publications 2009

- THL publications 2010

- Open policy practice

- Decision analysis and risk management

- Publication

- Fact discussion

- Resolved discussions

- Eracedu

- Guidebook