Open assessment

(summaries added; half way through) |

(further away but still not there) |

||

| Line 5: | Line 5: | ||

<section begin=glossary /> | <section begin=glossary /> | ||

:'''Open assessment''' | :'''Open assessment''' is a method that attempts to answer the following research question and to apply the answer in practical assessments: '''How can scientific information and value judgements be organised for improving societal decision-making in a situation where open participation is allowed?''' | ||

:In practice, the assessment processes are performed using Internet tools (notably [[Opasnet]]) among traditional tools. Stakeholders and other interested people are able to participate, comment, and edit its contents already since an early phase of the process. Open assessment is based on a clear information structure and [[scientific method]] as the ultimate rule for [[dealing with disputes]]. Open assessments explicitly include [[value judgement]]s, which approach spreads the use of open assessment outside the traditional area of risk assessment, to risk management area. However, [[value judgement]]s go through the same [[open criticism]] as scientific claims; the main difference is that scientific claims are based on observations, while value judgements are based on opinions of individuals. | |||

: | |||

<section end=glossary /> | <section end=glossary /> | ||

[[Open assessment]] can also refer to the actual making of such an assessment (precisely: open assessment process), or the end product of the process (precisely: open assessment product or report). Usually, the use of the term open assessment is clear, but if there is a danger of confusion, the precise term (open assessment method, process, or product) should be used. | |||

==Open assessment as a methodology== | ==Open assessment as a methodology== | ||

| Line 14: | Line 15: | ||

Open assessment is built on several different [[method]]s and principles that together make a coherent system for collecting, organising, synthesising, and using information. These [[method]]s and principles are briefly summarised here. A more detailed [[rationale]] about why exactly these [[method]]s are used and needed can be found from [[Open assessment method]]. In addition, each [[method]] or principle has a page of its own in [[Opasnet]]. | Open assessment is built on several different [[method]]s and principles that together make a coherent system for collecting, organising, synthesising, and using information. These [[method]]s and principles are briefly summarised here. A more detailed [[rationale]] about why exactly these [[method]]s are used and needed can be found from [[Open assessment method]]. In addition, each [[method]] or principle has a page of its own in [[Opasnet]]. | ||

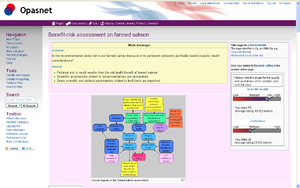

The | [[image:Assessment example.png|thumb|An example of an [[assessment]].]] | ||

The basic idea of [[open assessment]] is to collect information that is needed in a decision-making process. The information is organised as an [[assessment]] that predicts the impacts of different decision options on some outcomes of interest. An assessment is typically a quantitative model about relevant issues causally affected by the decision and affecting the outcomes. Decisions, outcomes, and other issues are modelled as separate parts of an assessment, called [[variable]]s. In practice, [[assessment]]s and [[variable]]s are web pages in [[Opasnet]], a web-workspace dedicated for making such assessments. Such a web page contains all information (text, numerical values, and software code) needed to describe and actually run that part of an assessment model. | |||

These web pages are also called '''[[information object]]s''', because they are the standard way of handling information as chunk-size pieces in [[open assessment]]s. Each object (or page) contains information about a particular issue. Each page also has the same, [[attribute|universal structure]]: a '''research question''' (what is the issue?), '''rationale''' (what do we know about the issue?), and '''result''' (what is our current best answer to the research question?). The descriptions of these issues are built on a web page, and anyone can participate in reading or writing just like in [[Wikipedia]]. Notably, the outcome is owned by everyone and therefore the original authors or assessors do not possess any copyrights or rights to prevent further editing. | |||

[[ | [[Trialogue]] is the word used about such Wikipedia-like contributing. The [[trialogue]] concept emphasises that in addition to having a dialogue or discussion, a major part of the communication and learning between the individuals in a [[group]] happens via [[information object]]s, in this case [[Opasnet]] pages. In other words, people not only talk or read about a topic but they actually contribute to an information object that represents the shared understanding of the group. [[Wikipedia]] is a famous example of [[trialogue|trialogical approach]] although the wikipedists do not use this word. | ||

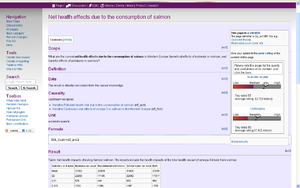

[[image:Variable example.png|thumb|An example of a [[variable]].]] | |||

'''[[ | The key concepts in [[open assessment]] that are not typical in other assessment methods are the explicit roles of [[group]]s and information use purpose. '''[[Group]]s''' are crucial because everything is (implicitly) transformed into questions with this format: "What can we as a [[group]] know about issue X?" The group considering a particular issue may be explicitly described, but it may also be implicit. In the latter case, it typically means ''anyone who wants to participate'', or alternatively, ''the whole mankind''. The '''use purpose of information''' is crucial because that is the fuel of assessments. Nothing is done just for fun (although that is a valid motivation as well) but because the information is needed for some practical, explicit use. Of course, also other assessments are done to inform decisions, but [[open assessment]]s are continuously evaluated against the use purpose; this is used to guide the assessment work, and the assessment is finished as soon as the use purpose is fulfilled. | ||

[[Open assessment]] attempts to be a coherent methodology. Everything in the methodology, as well as in all [[open assessment]]s is accepted or rejected based on observations and reasoning. However, there are a few things that cannot be verified using observations, and these are called [[axioms of open assessment]]. The six axioms are the following: 1) The reality exists. 2) The reality is a continuum without e.g. sudden appearances or disappearances of things without reason. 3) I can reason. 4) I can observe and use my observations and reasoning to learn about the reality. 5) Individuals (like me) can communicate and share information about the reality. 6) Not everyone is a systematic liar. | |||

'''[[Inference rules]]''' are used to decide what to believe. The rules are summarised here. 1) Anyone can promote a [[statement]] about anything (''promote'' = claim that the [[statement]] is true). 2) A promoted [[statement]] is considered valid unless it is invalidated (i.e., convincingly shown not to be true). 3) Uncertainty about whether a statement is true is measured with [[subjective probability|subjective probabilities]]. 4) The validity of a [[statement]] is always conditional to a particular [[group]] of people. 5) A [[group]] can develop other rules than these inference rules (such as mathematics or laws of physics) for deciding what to believe. 6) If two people within a group promote conflicting statements, the ''a priori'' belief is that each statement is equally likely to be true. 7) ''A priori'' beliefs are updated into ''a posteriori'' beliefs based on observations and [[open criticism]] that is based on shared rules. In practice, this means the use of [[scientific method]]. | |||

'''[[Benefit-risk assessment of food supplements#Result|Tiers of open assessment process]]''' describe typical phases of work when an [[open assessment]] is performed. The tiers are the following: Tier I: Definition of the use purpose and scope of an assessment. Tier II: Definition of the decision criteria. Tier III: Information production. It is noteworthy that the three tiers closely resemble the first three phases of [[IEHIA]], but the fourth phase (appraisal) is not a separate tier in [[open assessment]]. Instead, appraisal and information use happens at all tiers as a continuous and iterative process. In addition, the tiers have some similarities also to [[BRAFO]] approach. | '''[[Benefit-risk assessment of food supplements#Result|Tiers of open assessment process]]''' describe typical phases of work when an [[open assessment]] is performed. The tiers are the following: Tier I: Definition of the use purpose and scope of an assessment. Tier II: Definition of the decision criteria. Tier III: Information production. It is noteworthy that the three tiers closely resemble the first three phases of [[IEHIA]], but the fourth phase (appraisal) is not a separate tier in [[open assessment]]. Instead, appraisal and information use happens at all tiers as a continuous and iterative process. In addition, the tiers have some similarities also to [[BRAFO]] approach. | ||

'''[[ | [[Open assessment]]s contain two kinds of items (or [[variable]]s): [[fact]]s (what is?) and '''[[moral norm]]s''' (what should be?). [[Statement]]s about [[moral norm]]s are developed using the '''[[morality game]]'''. The most important rules of the [[morality game]] are the following: 1) Observations are used as the starting point when evaluating the validity of facts. 2) Opinions are used as the starting point when evaluating the validity of moral norms. 3) In addition to the starting points, the validity of facts and moral norms is evaluated using [[open criticism]] and [[scientific method]]. 4) Facts must be coherent with each other everywhere. 5) Moral norms must be coherent with each other within a particular [[group]]. 6) Moral norms may be conflicting between two [[group]]s unless they share members to which conflicting norms apply. | ||

It is clear that within a self-organised group, not all people agree on all facts and moral norms. Disputes are resolved using structured '''[[discussion]]s'''. In straightforward cases, discussions can be informal, but with more complicated or heated situations, particular discussion rules are followed. 1) Each discussion has one or more statements whose validity is the topic of the discussion. 2) A statement is valid unless it is attacked with a valid argument. 3) Statements can be defended or attacked with [[argument]]s, which are themselves treated as statements of smaller discussions. Thus, a hierarchical structure of defending and attacking arguments is created. 4) When the discussion is resolved, the content of ''all'' valid statements is incorporated into the [[information object]]. All resolutions are temporary, and anyone can reopen a discussion. | |||

[[ | Most variables have numerical values as their [[result]]s. Often these are uncertain and they are expressed as probability distributions. A web page is an impractical place to store and handle this kind of information. For this purpose, a database called [[Opasnet Base]] is used. It is a very flexible storage, and almost any results that can be expressed as two-dimensional tables can be stored in [[Opasnet Base]]. Results of a [[variable]] can be retrieved from the respective [[Opasnet]] page. [[Opasnet]] can be used to upload new results into the database. And finally, if one [[variable]] B is causally dependent on [[variable]] A, the result of A can be automatically retrieved from [[Opasnet Base]] and used in the [[formula]] for calculating B. | ||

[[ | Because [[Opasnet Base]] contains samples of distributions of variables, it is actually one huge [[Bayesian belief network]], which can be used for assessment-level analyses and optimisation of different decision options. | ||

[[ | [[Bayesian inference]] [[Value of information]] | ||

[[ | [[Roles, tasks, and functionalities in Opasnet]] | ||

[[Perspective levels of decision making]]? Do we need this? | [[Perspective levels of decision making]]? Do we need this? | ||

[[Respect theory]] | [[Respect theory]] | ||

| Line 45: | Line 52: | ||

[[Falsification]] | [[Falsification]] | ||

[[Darm]] | [[Darm]] | ||

| Line 124: | Line 115: | ||

* [[Open assessment method]] | * [[Open assessment method]] | ||

* [[Opasnet Base]] | * [[Opasnet Base]] | ||

* [[Discussion structure]] [[Discussion method]] [[Discussion]] | |||

==Keywords== | ==Keywords== | ||

Revision as of 22:13, 30 December 2010

This page is an encyclopedia article.

The page identifier is Op_en2875.

For a brief description about open assessment and the related workspace, see Opasnet.

<section begin=glossary />

- Open assessment is a method that attempts to answer the following research question and to apply the answer in practical assessments: How can scientific information and value judgements be organised for improving societal decision-making in a situation where open participation is allowed?

- In practice, the assessment processes are performed using Internet tools (notably Opasnet) alongside traditional tools. Stakeholders and other interested people can participate, comment, and edit the contents from an early phase of the process. Open assessment is based on a clear information structure and on the scientific method as the ultimate rule for dealing with disputes. Open assessments explicitly include value judgements, an approach that extends the use of open assessment beyond the traditional area of risk assessment into risk management. However, value judgements go through the same open criticism as scientific claims; the main difference is that scientific claims are based on observations, while value judgements are based on the opinions of individuals.

<section end=glossary />

Open assessment can also refer to the actual making of such an assessment (precisely: open assessment process), or the end product of the process (precisely: open assessment product or report). Usually, the use of the term open assessment is clear, but if there is a danger of confusion, the precise term (open assessment method, process, or product) should be used.

Open assessment as a methodology

Open assessment is built on several different methods and principles that together make a coherent system for collecting, organising, synthesising, and using information. These methods and principles are briefly summarised here. A more detailed rationale for why exactly these methods are used and needed can be found in Open assessment method. In addition, each method or principle has a page of its own in Opasnet.

The basic idea of open assessment is to collect the information that is needed in a decision-making process. The information is organised as an assessment that predicts the impacts of different decision options on some outcomes of interest. An assessment is typically a quantitative model of the relevant issues that are causally affected by the decision and that affect the outcomes. Decisions, outcomes, and other issues are modelled as separate parts of an assessment, called variables. In practice, assessments and variables are web pages in Opasnet, a web workspace dedicated to making such assessments. Each such web page contains all the information (text, numerical values, and software code) needed to describe and actually run that part of the assessment model.
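To make the idea of an assessment as a causal model more concrete, here is a minimal Python sketch, assuming that each variable is a node with a list of parent variables and a formula, and that the variables form a directed acyclic graph. The `Variable` class, the `evaluate` function, and the variables `emission_reduction`, `exposure`, and `cases` are hypothetical illustrations, not Opasnet's actual data model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Formula = Callable[[Dict[str, float]], float]


@dataclass
class Variable:
    """Hypothetical model node: one part of an assessment."""
    name: str
    parents: List[str] = field(default_factory=list)
    # Computes this variable's value from the values of its parents.
    formula: Formula = lambda inputs: 0.0


def evaluate(variables: Dict[str, Variable], decision: Dict[str, float]) -> Dict[str, float]:
    """Evaluate every variable for one decision option, parents before children.

    Assumes there are no cycles among the variables.
    """
    values: Dict[str, float] = dict(decision)  # decision variables are given directly

    def value_of(name: str) -> float:
        if name not in values:
            var = variables[name]
            inputs = {p: value_of(p) for p in var.parents}
            values[name] = var.formula(inputs)
        return values[name]

    for name in variables:
        value_of(name)
    return values


# Hypothetical chain: decision -> exposure -> health outcome.
model = {
    "exposure": Variable("exposure", ["emission_reduction"],
                         lambda x: 10.0 * (1 - x["emission_reduction"])),
    "cases": Variable("cases", ["exposure"], lambda x: 2.0 * x["exposure"]),
}

for option in (0.0, 0.5):
    outcome = evaluate(model, {"emission_reduction": option})["cases"]
    print(f"emission_reduction={option}: cases={outcome}")
```

Running the same model once per decision option is what lets an assessment compare the predicted outcomes of the options side by side.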

These web pages are also called information objects, because they are the standard way of handling information in chunk-sized pieces in open assessments. Each object (or page) contains information about a particular issue. Each page also has the same universal structure: a research question (what is the issue?), rationale (what do we know about the issue?), and result (what is our current best answer to the research question?). The descriptions of these issues are built on a web page, and anyone can participate in reading or writing, just as in Wikipedia. Notably, the outcome is owned by everyone, and therefore the original authors or assessors do not hold any copyright or other right to prevent further editing.
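The universal page structure can be sketched as a simple data type. This is a minimal illustration assuming a plain Python dataclass; the class name, its fields, and the `edit` method are hypothetical and only mirror the research question, rationale, and result attributes described above. The `edit` method deliberately has no ownership check, reflecting that anyone may edit.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class InformationObject:
    """Hypothetical sketch of the universal structure shared by all pages."""
    question: str                                        # research question: what is the issue?
    rationale: List[str] = field(default_factory=list)   # what do we know about the issue?
    result: Optional[str] = None                         # current best answer to the question
    history: List[str] = field(default_factory=list)     # record of all edits

    def edit(self, contributor: str, new_result: str, reason: str) -> None:
        """Anyone may edit: there is no owner check, only a record of who changed what."""
        self.rationale.append(reason)
        self.history.append(f"{contributor}: {self.result!r} -> {new_result!r}")
        self.result = new_result


page = InformationObject("Where should the group have lunch today?")
page.edit("alice", "Restaurant A", "Restaurant A is the closest one.")
page.edit("bob", "Restaurant B", "Restaurant A is closed today.")
print(page.result)  # Restaurant B
```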

Trialogue is the term used for such Wikipedia-like contribution. The trialogue concept emphasises that, in addition to dialogue or discussion, a major part of the communication and learning between the individuals in a group happens via information objects, in this case Opasnet pages. In other words, people not only talk or read about a topic but actually contribute to an information object that represents the shared understanding of the group. Wikipedia is a well-known example of the trialogical approach, although Wikipedians do not use this word.

The key concepts in open assessment that are not typical of other assessment methods are the explicit roles of groups and of the use purpose of information. Groups are crucial because everything is (implicitly) transformed into questions of the form: "What can we as a group know about issue X?" The group considering a particular issue may be explicitly described, but it may also be implicit. In the latter case, it typically means anyone who wants to participate, or alternatively, all of humankind. The use purpose of information is crucial because it is the fuel of assessments. Nothing is done just for fun (although that is a valid motivation as well) but because the information is needed for some practical, explicit use. Other assessments are, of course, also done to inform decisions, but open assessments are continuously evaluated against their use purpose; this evaluation guides the assessment work, and the assessment is finished as soon as the use purpose is fulfilled.

Open assessment attempts to be a coherent methodology. Everything in the methodology, as well as in all open assessments, is accepted or rejected based on observations and reasoning. However, a few things cannot be verified using observations, and these are called the axioms of open assessment. The six axioms are the following: 1) Reality exists. 2) Reality is a continuum without, e.g., sudden appearances or disappearances of things without reason. 3) I can reason. 4) I can observe and use my observations and reasoning to learn about reality. 5) Individuals (like me) can communicate and share information about reality. 6) Not everyone is a systematic liar.

Inference rules are used to decide what to believe. The rules are summarised here. 1) Anyone can promote a statement about anything (promote = claim that the statement is true). 2) A promoted statement is considered valid unless it is invalidated (i.e., convincingly shown not to be true). 3) Uncertainty about whether a statement is true is measured with subjective probabilities. 4) The validity of a statement is always conditional on a particular group of people. 5) A group can develop rules other than these inference rules (such as mathematics or the laws of physics) for deciding what to believe. 6) If two people within a group promote conflicting statements, the a priori belief is that each statement is equally likely to be true. 7) A priori beliefs are updated into a posteriori beliefs based on observations and on open criticism that follows shared rules. In practice, this means the use of the scientific method.
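Rules 6 and 7 can be sketched with Bayes' rule. This sketch assumes that the conflicting statements are mutually exclusive and exhaustive, and the likelihood values (how probable a new observation would be if each statement were true) are hypothetical numbers chosen for illustration.

```python
from typing import Dict, Iterable


def equal_prior(statements: Iterable[str]) -> Dict[str, float]:
    """Rule 6: conflicting statements start out equally likely."""
    items = list(statements)
    return {s: 1 / len(items) for s in items}


def update(prior: Dict[str, float], likelihood: Dict[str, float]) -> Dict[str, float]:
    """Rule 7 via Bayes' rule: posterior is proportional to prior times likelihood.

    Assumes the statements are mutually exclusive and exhaustive.
    """
    unnormalised = {s: prior[s] * likelihood[s] for s in prior}
    total = sum(unnormalised.values())
    return {s: p / total for s, p in unnormalised.items()}


belief = equal_prior(["S1", "S2"])  # a priori: 0.5 and 0.5
# Hypothetical observation that is four times as likely if S1 is true.
belief = update(belief, {"S1": 0.8, "S2": 0.2})
print(belief)
```

Each further observation can be fed through `update` again, so the a posteriori belief of one step becomes the a priori belief of the next.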

Tiers of open assessment process describe typical phases of work when an open assessment is performed. The tiers are the following: Tier I: Definition of the use purpose and scope of an assessment. Tier II: Definition of the decision criteria. Tier III: Information production. Notably, the three tiers closely resemble the first three phases of IEHIA, but the fourth phase (appraisal) is not a separate tier in open assessment. Instead, appraisal and information use happen at all tiers as a continuous and iterative process. The tiers also have some similarities to the BRAFO approach.

Open assessments contain two kinds of items (or variables): facts (what is?) and moral norms (what should be?). Statements about moral norms are developed using the morality game. The most important rules of the morality game are the following: 1) Observations are used as the starting point when evaluating the validity of facts. 2) Opinions are used as the starting point when evaluating the validity of moral norms. 3) In addition to the starting points, the validity of facts and moral norms is evaluated using open criticism and the scientific method. 4) Facts must be coherent with each other everywhere. 5) Moral norms must be coherent with each other within a particular group. 6) Moral norms may conflict between two groups unless the groups share members to whom the conflicting norms apply.
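Rules 5 and 6 can be read as a coherence check over pairs of norms. The sketch below is one possible formalisation, not an official one: it assumes that a norm is a verdict (allowed or not) on an action, held by a group and applying to a set of people, that two norms conflict when they give opposite verdicts on the same action, and (as a simplification) that any conflict inside one group is incoherent. All names, groups, and actions are hypothetical.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Dict, FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class Norm:
    """Hypothetical representation of a moral norm."""
    group: str                  # the group that holds the norm
    applies_to: FrozenSet[str]  # the people the norm applies to
    action: str
    allowed: bool               # True = "should be allowed", False = "should not be"


def incoherent_pairs(norms: List[Norm], members: Dict[str, Set[str]]) -> List[Tuple[Norm, Norm]]:
    """Return the pairs of norms that break rules 5 and 6 of the morality game (sketch)."""
    bad = []
    for a, b in combinations(norms, 2):
        if a.action != b.action or a.allowed == b.allowed:
            continue  # the two norms do not conflict
        if a.group == b.group:
            bad.append((a, b))  # rule 5: norms must be coherent within a group
        elif members[a.group] & members[b.group] & a.applies_to & b.applies_to:
            bad.append((a, b))  # rule 6: a shared member is bound by both norms
    return bad


members = {"cyclists": {"ann", "ben"}, "drivers": {"ben", "cai"}}
n1 = Norm("cyclists", frozenset({"ann", "ben"}), "cycle on the pavement", True)
n2 = Norm("drivers", frozenset({"cai"}), "cycle on the pavement", False)
n3 = Norm("drivers", frozenset({"ben", "cai"}), "cycle on the pavement", False)
# Only the pair (n1, n3) is reported: ben belongs to both groups and both norms apply to him.
print(incoherent_pairs([n1, n2, n3], members))
```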

Within a self-organised group, not everyone will agree on all facts and moral norms. Disputes are resolved using structured discussions. In straightforward cases, discussions can be informal, but in more complicated or heated situations, particular discussion rules are followed: 1) Each discussion has one or more statements whose validity is the topic of the discussion. 2) A statement is valid unless it is attacked with a valid argument. 3) Statements can be defended or attacked with arguments, which are themselves treated as statements of smaller discussions. Thus, a hierarchical structure of defending and attacking arguments is created. 4) When the discussion is resolved, the content of all valid statements is incorporated into the information object. All resolutions are temporary, and anyone can reopen a discussion.
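Discussion rules 2 to 4 describe a recursive structure: an argument is itself a statement, so its validity depends on the arguments that attack it. The following is a minimal sketch, assuming each statement keeps separate lists of attacking and defending arguments (hypothetical names); under rule 2 as written, only valid attacks change a verdict, so defences are kept for the record but do not affect validity here.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Statement:
    """A statement in a discussion; arguments are statements too (rule 3)."""
    text: str
    attacks: List["Statement"] = field(default_factory=list)
    defences: List["Statement"] = field(default_factory=list)


def is_valid(s: Statement) -> bool:
    """Rule 2: a statement is valid unless at least one valid argument attacks it.

    Validity is decided recursively, from the leaves of the argument tree upwards.
    Defending arguments do not change the verdict in this sketch.
    """
    return not any(is_valid(a) for a in s.attacks)


def valid_content(s: Statement) -> List[str]:
    """Rule 4: collect the text of every valid statement in the tree."""
    found = [s.text] if is_valid(s) else []
    for argument in s.attacks + s.defences:
        found.extend(valid_content(argument))
    return found


claim = Statement("Restaurant A is the best lunch place for the group.")
attack = Statement("Restaurant A is closed on Mondays.")
counter = Statement("The group only eats out on Fridays.")
attack.attacks.append(counter)  # the counter-argument attacks the attack
claim.attacks.append(attack)

print(is_valid(claim))  # True: its only attack is itself invalidated
print(valid_content(claim))
```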

Most variables have numerical values as their results. Often these values are uncertain and are expressed as probability distributions. A web page is an impractical place to store and handle this kind of information, so a database called Opasnet Base is used for this purpose. It is a very flexible store: almost any result that can be expressed as a two-dimensional table can be stored in Opasnet Base. The results of a variable can be retrieved from the respective Opasnet page, and Opasnet can be used to upload new results into the database. Finally, if a variable B is causally dependent on a variable A, the result of A can be automatically retrieved from Opasnet Base and used in the formula for calculating B.
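The division of labour between pages and the database can be sketched as follows. The in-memory `base` dictionary and the `upload`, `download`, and `compute` functions are hypothetical stand-ins, not the real Opasnet Base interface. Each result is assumed to be a two-dimensional table whose rows have a sample index column (`Iter`) and a value column (`Result`); these column names and the lognormal distribution for A are assumptions for illustration.

```python
import random
from typing import Callable, Dict, List

# Hypothetical in-memory stand-in for Opasnet Base: each variable's result is a
# two-dimensional table, here a list of rows with a sample index column ("Iter")
# and a value column ("Result").
Row = Dict[str, float]
base: Dict[str, List[Row]] = {}


def upload(name: str, table: List[Row]) -> None:
    base[name] = table


def download(name: str) -> List[Row]:
    return base[name]


def compute(name: str, parent: str, formula: Callable[[float], float]) -> List[Row]:
    """Compute variable `name` sample by sample from its parent's stored result."""
    table = [{"Iter": row["Iter"], "Result": formula(row["Result"])}
             for row in download(parent)]
    upload(name, table)
    return table


rng = random.Random(1)
# Variable A: 1000 samples from an (assumed) lognormal distribution.
upload("A", [{"Iter": i, "Result": rng.lognormvariate(0.0, 0.5)} for i in range(1000)])
# Variable B depends causally on A through a hypothetical formula B = 3A + 1.
b = compute("B", "A", lambda a: 3 * a + 1)
print(len(b), b[0])
```

Keeping the sample index on every row means that the samples of A and B stay paired, so the uncertainty of A propagates into B sample by sample.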

Because Opasnet Base contains samples of distributions of variables, it is actually one huge Bayesian belief network, which can be used for assessment-level analyses and optimisation of different decision options.
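How stored samples can support the optimisation of decision options can be sketched with plain Monte Carlo simulation: draw samples of the uncertain variables, compute the outcome for each decision option, and pick the option with the best expected outcome. This is a swapped-in technique for illustration rather than a full Bayesian belief network, and the distributions, the cost model, and the option values are all hypothetical. Reusing the same random seed for every option (common random numbers) makes the comparison between options less noisy.

```python
import random
from statistics import mean


def expected_total_cost(option: float, n: int = 10_000, seed: int = 0) -> float:
    """Mean total cost of one decision option over Monte Carlo samples.

    The same seed is used for every option (common random numbers), so the
    options are compared on identical samples of the uncertain variables.
    """
    rng = random.Random(seed)
    totals = []
    for _ in range(n):
        emission = rng.lognormvariate(0.0, 0.3)  # uncertain input (hypothetical)
        slope = rng.uniform(1.5, 2.5)            # uncertain effect per unit emission (hypothetical)
        health_cost = 100 * slope * emission * (1 - option)
        policy_cost = 300 * option ** 2          # cost of the decision itself (hypothetical)
        totals.append(health_cost + policy_cost)
    return mean(totals)


options = [0.0, 0.25, 0.5, 0.75, 1.0]  # e.g. fraction of emissions cut
for option in options:
    print(option, round(expected_total_cost(option), 1))
best = min(options, key=expected_total_cost)
print("best option:", best)
```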

Bayesian inference Value of information

Roles, tasks, and functionalities in Opasnet

Perspective levels of decision making? Do we need this?

Why is open assessment a revolutionary method?

There are several things that are done differently, and arguably better, in open assessment compared with traditional ways of collecting information. These are briefly listed here and then described in more detail.

- Open assessment can be applied to most decision-making situations.

- Open assessment helps to focus on relevant issues.

- Important issues are explicated.

- The expression of values is encouraged.

- It becomes more difficult to promote non-explicated values, i.e. hidden agendas.

- Open assessment focuses on primary issues and thus gives little emphasis on secondary issues.

- Open assessment separates the policy-making (developing and evaluating potential decision options) and the actual decision-making (making of the decision by the authoritative body).

- Open assessment breaks the information monopoly of the authoritative body and motivates participation.

- Open assessment makes the information collection quicker and easier.

- Open assessment is based on the scientific method.

- Open assessment does not prevent the use of any previous methods.

Open assessment can be applied to most decision-making situations.

Open assessment helps to focus on relevant issues.

The assessment work boils down to answering the assessment question. Whatever helps in answering it is useful, and whatever does not help is useless. You can always ask a person who suggests additional tasks a practical question: "How would this task help us answer the question?" It is a simple question to ask, but a difficult one for a bureaucrat to answer.

Important issues are explicated.

The key part of an assessment product is an answer to the assessment question. The answer can easily be falsified unless it is explicitly defended by relevant arguments found in the assessment. This simple rule forces the assessment participants to explicate all issues that might be relevant for the end users when they evaluate the acceptability of the answer.

The expression of values is encouraged.

Valuations are used to optimise decisions. Implicit values are not used to draw conclusions. Therefore, if you don't like Chinese food, you must express this value in the lunch place assessment; otherwise it is ignored. Any values can be included, and these will be taken into account.
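The lunch place example can be sketched as a weighted sum over explicitly stated values, assuming a simple additive scoring rule (an assumption for illustration, not a method prescribed by open assessment). Attributes without a stated weight contribute nothing, so a dislike of Chinese food only affects the outcome once it is written down. The options, attributes, and weights are hypothetical.

```python
from typing import Dict

# Hypothetical lunch options described by their attributes.
options: Dict[str, Dict[str, float]] = {
    "Chinese restaurant": {"chinese_food": 1.0, "walking_minutes": 5.0},
    "Pizzeria": {"chinese_food": 0.0, "walking_minutes": 10.0},
}


def score(attributes: Dict[str, float], values: Dict[str, float]) -> float:
    """Weighted sum over explicitly stated values only; unstated values count for nothing."""
    return sum(weight * attributes.get(name, 0.0) for name, weight in values.items())


def best_option(values: Dict[str, float]) -> str:
    return max(options, key=lambda name: score(options[name], values))


# Only the walking distance has been written down (shorter is better).
print(best_option({"walking_minutes": -1.0}))                         # Chinese restaurant
# The dislike of Chinese food is now expressed explicitly in the assessment.
print(best_option({"walking_minutes": -1.0, "chinese_food": -20.0}))  # Pizzeria
```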

It becomes more difficult to promote non-explicated values, i.e. hidden agendas.

Open assessment focuses on primary issues and thus gives little emphasis on secondary issues.

Secondary issues include preparation committees, meeting minutes, and so on. After all, these secondary issues are only needed to get answers to the questions. Instead of trying to get onto the committee, participating in the discussions, and writing the minutes, you just go to your assessment page, write down your suggestions, and you are done. Of course, you can still attend any meetings to stimulate your thinking, but many people have experience of meetings that take a lot of working time without giving any stimulation.

Open assessment separates the policy-making (developing and evaluating potential decision options) and the actual decision-making (making of the decision by the authoritative body).

Open assessment opens the policy-making so that anyone can participate and bring in their information.

Open assessment breaks the information monopoly of the authoritative body and motivates participation.

The authoritative body can still make the decision just like before. But this body has no say over issues that will be included in an open assessment. The inclusion or exclusion of issues depends only on relevance, which ultimately depends only on the assessment question.

Open assessment makes the information collection quicker and easier.

Open assessment is based on the scientific method.

Open assessment does not prevent the use of any previous methods.

Open assessment does not preclude any methods. It only determines the end product (a hierarchical thread of questions and answers, starting from the main question of the assessment) and how individual pieces of information are evaluated (based on relevance, logic, and coherence with observations). Of course, some traditional methods will perform poorly under these conditions, but those who insist on using them are free to do so.

See also

- Opasnet

- Open Assessors' Network

- Open assessment method

- Opasnet Base

- Discussion structure

- Discussion method

- Discussion
