From open assessment to shared understanding: practical experiences


{{nugget|moderator=Jouni}}

'''From insight network to open policy practice: practical experiences''' is a manuscript of a scientific article. The main point is to offer a comprehensive summary of the methods developed at THL/environmental health to support informed societal decision making, and evaluate their use and usability in practical examples in 2006-2018. The manuscript was published in 2020:

Tuomisto, J.T., Pohjola, M.V. & Rintala, T. From insight network to open policy practice: practical experiences. Health Res Policy Sys 18, 36 (2020). https://doi.org/10.1186/s12961-020-00547-3

'''Title page'''

'''From insight network to open policy practice: practical experiences'''

Short title: From insight network to open policy practice

Jouni T. Tuomisto<sup>1*</sup> ORCID 0000-0002-9988-1762, Mikko Pohjola<sup>1,2</sup> ORCID 0000-0001-9006-6510, Teemu Rintala<sup>1,3</sup> ORCID 0000-0003-1849-235X.

<sup>1</sup> Finnish Institute for Health and Welfare, Kuopio, Finland

<sup>2</sup> Kisakallio Sport Institute, Lohja, Finland

<sup>3</sup> Institute of Biomedicine, University of Eastern Finland, Kuopio, Finland

<sup>*</sup> Corresponding author

Email: jouni.tuomisto[]thl.fi

This article describes a decision support method called open policy practice. It has mostly been developed at the Finnish Institute for Health and Welfare (THL, Finland) during the last 15 years. Each assessment, case study, and method has been openly described and also typically published in scientific journals. However, this is the first comprehensive summary of open policy practice as a whole (since 2007) and thus gives a valuable overview, rationale, and evaluation for several methodological choices we have made. We have combined methods from several disciplines, including toxicology, exposure sciences, impact assessment, statistical and Bayesian methods, argumentation theory, ontologies, and co-creation to produce a coherent method for scientific decision support.

The article is currently under peer review. You can read about the main topics of the article on the Opasnet pages [[Open policy practice]], [[Shared understanding]], [[Open assessment]], and [[Properties of good assessment]].

== Abstract ==


'''Background'''

Evidence-informed decision making and better use of scientific information in societal decisions have been an area of development for decades but are still topical. Decision support work can be viewed from the perspective of information collection, synthesis, and flow between decision makers, experts, and stakeholders. Open policy practice is a coherent set of methods for such work. It has been developed and utilised mostly in Finnish and European contexts.

'''Methods'''

An overview of open policy practice is given, and theoretical and practical properties are evaluated based on properties of good policy support. The evaluation is based on information from several assessments and research projects developing and applying open policy practice and the authors' practical experiences. The methods are evaluated against their capability of producing quality of content, applicability, and efficiency in policy support, as well as how well they support close interaction among participants and understanding of each other's views.

'''Results'''

The evaluation revealed that methods and online tools work as expected, as demonstrated by the assessments and policy support processes conducted. The approach improves the availability of information and especially of relevant details. Experts are ambivalent about the acceptability of openness: it is an important scientific principle, but it goes against many current research and decision making practices. However, co-creation and openness are megatrends that are changing science, decision making and society at large. Contrary to many experts' fears, open participation has not caused problems in performing high-quality assessments. On the contrary, a key challenge is to motivate and help more experts, decision makers, and citizens to participate and share their views. Many methods within open policy practice have also been used widely in other contexts.

'''Conclusions'''

Open policy practice proved to be a useful and coherent set of methods. It guided policy processes toward a more collaborative approach, whose purpose was wider understanding rather than winning a debate. There is potential for merging open policy practice with other open science and open decision process tools. Active facilitation, community building and improving the user-friendliness of the tools were identified as key solutions for improving usability of the method in the future.

;Keywords: environmental health, decision support, open assessment, open policy practice, shared understanding, policy making, collaboration, evaluation, knowledge crystal, impact assessment

== Background ==

This article describes and evaluates ''open policy practice'', a set of methods and tools for improving evidence-informed policy making. Evidence-informed decision support has been a hot and evolving topic for a long time, and its importance is not diminishing any time soon. In this article, decision support is defined as knowledge work that is performed during the whole decision process (ideating possible actions, assessing impacts, deciding between options, implementing decisions, and evaluating outcomes) and that aims to produce better decisions and outcomes<ref name="pohjola2013">Pohjola M. Assessments are to change the world. Prerequisites for effective environmental health assessment. Helsinki: National Institute for Health and Welfare Research 105; 2013. http://urn.fi/URN:ISBN:978-952-245-883-4. Accessed 1 Feb 2020.</ref>. Here, "assessment of impacts" means ex ante consideration of what will happen if a particular decision is made, and "evaluation of outcomes" means ex post consideration of what did happen after a decision was implemented.

The area is complex, and the key players (decision makers, experts, and citizens or other stakeholders) all have different views on the process, their own roles in it, and how information should be used in the process. For example, researchers often think of information as a way to find the truth, while politicians see information as one of the tools to promote political agendas ultimately based on values.<ref name="jussila2012">Jussila H. Päätöksenteon tukena vai hyllyssä pölyttymässä? Sosiaalipoliittisen tutkimustiedon käyttö eduskuntatyössä. [Supporting decision making or sitting on a shelf? The use of sociopolitical research information in the Finnish Parliament.] Helsinki: Sosiaali- ja terveysturvan tutkimuksia 121; 2012. http://hdl.handle.net/10138/35919. Accessed 1 Feb 2020. (in Finnish)</ref> Therefore, a successful method should provide functionalities for each of the key groups.

In the late 1970's, the focus was on scientific knowledge and an idea that political ambitions should be separated from objective assessments, especially in the US. Since the 1980's, risk assessment has been a key method to assess human risks of environmental and occupational chemicals<ref>National Research Council. Risk Assessment in the Federal Government: Managing the Process. Washington DC: National Academy Press; 1983.</ref>. The National Research Council specifically developed a process that could be used by all federal US agencies. The report emphasised the importance of scientific knowledge in decision making and scientific methods, such as critical use of data, as integral parts of assessments. Criticism based on observations and rationality is a central idea in the scientific method<ref name="popper1963">Popper K. Conjectures and Refutations: The Growth of Scientific Knowledge, 1963, ISBN 0-415-04318-2</ref>. The report also clarified the use of causality: the purpose of an assessment is to clarify and quantify a causal path where an exposure to a chemical or other agent leads to a health risk via pathological changes described by the dose-response function of that chemical.

The approach was designed for single chemicals rather than for complex societal issues. This shortcoming was addressed in another report that acknowledged this complexity and offered deliberation with stakeholders as a solution, in addition to scientific analysis<ref name="nrc1996">National Research Council. Understanding risk. Informing decisions in a democratic society. Washington DC: National Academy Press; 1996.</ref>. An idea was to explicate the intentions of the decision maker but also those of the public. Also, mutual learning about the topic was seen as important. There are models for describing facts and values in a coherent dual system<ref>von Winterfeldt D. Bridging the gap between science and decision making. PNAS 2013;110:3:14055-14061. http://www.pnas.org/content/110/Supplement_3/14055.full</ref>. However, practical assessments have found it difficult to successfully perform deliberation on a routine basis<ref name="pohjola2012">Pohjola MV, Leino O, Kollanus V, Tuomisto JT, Gunnlaugsdóttir H, Holm F, Kalogeras N, Luteijn JM, Magnússon SH, Odekerken G, Tijhuis MJ, Ueland O, White BC, Verhagen H. State of the art in benefit-risk analysis: Environmental health. Food Chem Toxicol. 2012;50:40-55.</ref>. Indeed, citizens often complain that even if they have been formally listened to during a process, the processes need more openness, as their concerns have not contributed to the decisions made<ref>Doelle M, Sinclair JA. (2006) Time for a new approach to public participation in EA: Promoting cooperation and consensus for sustainability. Environmental Impact Assessment Review 26: 2: 185-205 https://doi.org/10.1016/j.eiar.2005.07.013.</ref>.

Western societies have shown a megatrend of increasing openness in many sectors, including decision-making and research. Openness of scientific publishing is increasing and many research funders also demand publishing of data, and research societies are starting to see the publishing of data as a scientific merit in itself<ref name="tsv2020"/>. It has been widely acknowledged that the current mainstream of proprietary (as opposed to open access) scientific publishing is a hindrance to spreading ideas and ultimately science<ref>Eysenbach G. Citation Advantage of Open Access Articles. PLoS Biol 2006: 4; e157. doi: 10.1371/journal.pbio.0040157</ref>. Governments have also been active in opening data and statistics to wide use (data.gov.uk). Governance practices have been developed towards openness and inclusiveness, promoted by international initiatives such as Open Government Partnership (www.opengovpartnership.org).

As an extreme example, a successful hedge fund, Bridgewater Associates, implements radical openness and continuous criticism of all ideas presented by its workers rather than letting organisational status determine who is heard<ref name="dalio2017">Dalio R. Principles: Life and work. New York: Simon & Schuster; 2017. ISBN 9781501124020</ref>. In a sense, they are implementing the scientific method in a much more rigorous way than what is typically done in science.

In the early 2000's, several important books and articles were published about mass collaboration<ref>Tapscott D, Williams AD. Wikinomics. How mass collaboration changes everything. USA: Portfolio; 2006. ISBN 1591841380</ref>, wisdom of crowds<ref>Surowiecki J. The Wisdom of Crowds: Why the Many Are Smarter Than the Few and How Collective Wisdom Shapes Business, Economies, Societies and Nations. USA: Doubleday; Anchor; 2004. ISBN 9780385503860</ref>, crowdsourcing in the government<ref name="noveck2010">Noveck, BS. Wiki Government - How Technology Can Make Government Better, Democracy Stronger, and Citizens More Powerful. Brookings Institution Press; 2010. ISBN 9780815702757</ref>, and co-creation<ref name="mauser2013">Mauser W, Klepper G, Rice M, Schmalzbauer BS, Hackmann H, Leemans R, Moore H. Transdisciplinary global change research: the co-creation of knowledge for sustainability. Current Opinion in Environmental Sustainability 2013;5:420–431; doi:10.1016/j.cosust.2013.07.001</ref>. A common idea of the authors was that voluntary, self-organised groups had knowledge and capabilities that could be much more effectively harnessed in society than what was happening at the time. Large collaborative projects have shown that in many cases, they are very effective ways to produce high-quality information, as long as quality control systems are functional. In software development, the Linux operating system, the Git software, and the GitHub platform are examples of this. Also Wikipedia, the largest and most used encyclopedia in the world, has demonstrated that self-organised groups can indeed produce high-quality content<ref>Giles J. Internet encyclopaedias go head to head. Nature 2005;438:900–901 doi:10.1038/438900a</ref>.

The five principles of collaboration, openness, causality, criticism, and intentionality (Table 1) were seen as potentially important for environmental health assessment at the Finnish Institute for Health and Welfare (THL; at that time National Public Health Institute, KTL), and they were adopted in the methodological decision support work of the Centre of Excellence for Environmental Health Risk Analysis (2002-2007). Open policy practice has been developed during the last twenty years especially to improve environmental health assessments<sup>a</sup>. Developers have come from several countries in projects mostly funded by the EU and the Academy of Finland (see Funding and Acknowledgements).

Materials for the development, testing, and evaluation of open policy practice were collected from several sources.

Research projects about assessing environmental health risks were an important platform to develop, test, and implement assessment methods and policy practices. Important projects are listed in Funding. Especially the sixth framework programme of the EU and its INTARESE and HEIMTSA projects (2005-2011) enabled active international collaboration around environmental health assessment methods.

Assessment cases were performed in research projects and in support of national or municipal decision making in Finland. Methods and tools were developed side by side with practical assessment work (Appendix S1).

Literature searches covered scientific and policy literature and websites. Concepts and methods similar to those in open policy practice were sought. Data were searched from Pubmed, Web of Knowledge, Google Scholar, and the Internet. In addition, a snowball method was used: the references of the documents found, and their authors' other publications, were screened to identify new publications. Articles that describe large literature searches and their results include<ref name="pohjola2013"/><ref name="pohjola2012"/><ref name="pohjola2013b"/><ref name="pohjola2011"/>.

Open risk assessment workshops were organised as spin-offs of several of these projects for international doctoral students in 2007, 2008, and 2009. The workshops offered a place to share, discuss, and criticise ideas.

A master's course ''Decision Analysis and Risk Management'' (6 credit points) was organised by the University of Eastern Finland (previously University of Kuopio) in 2011, 2013, 2015, and 2017. The course taught open policy practice and tested its methods in course work.

Finally, general expertise and understanding were developed through practical experience and long-term follow-up of international and national politics.

The development and selection of methods and tools for open policy practice have roughly followed the iterative pattern below, where an idea is improved during each iteration, or sometimes rejected.

* A need is identified for improving knowledge practices of a decision process or scientific policy support. This need typically arises from scientific literature, project work or news media.

* A solution idea is developed to address the need.

* It is checked whether the idea fits logically in the current framework of open policy practice.

* The idea is discussed in a project team to develop it further and gain acceptance.

* A practical solution (web tool, checklist or similar) is produced.

* The solution is piloted in an assessment or policy process.

* The solution is added into the recommended set of methods of open policy practice.

* The method is updated based on practical experience.

Development of open policy practice started with a focus on opening the expert work in policy assessments. In 2007, this line of research produced a summary report about the new methods and tools developed to facilitate assessments<ref name="ora2007">Tuomisto JT, Pohjola M, editors. Open Risk Assessment. A new way of providing scientific information for decision-making. Helsinki: Publications of the National Public Health Institute B18; 2007. http://urn.fi/URN:ISBN:978-951-740-736-6.</ref>. Later, a wider question about ''open policy practice''<sup>b</sup> emerged: how to organise evidence-informed decision making in a situation where the five principles are used as the starting point? The question was challenging, especially as it was understood that societal decision making is rarely a single event, but often consists of several interlinked decisions at different time points and sometimes by several decision-making bodies. Therefore, it was seen more as leadership guidance than as advice about a single decision.

This article gives the first comprehensive, peer-reviewed description of the current methods and tools of open policy practice since the 2007 report<ref name="ora2007"/>. Case studies have been published along the way, and the key methods have been described in different articles. Also, all methods and tools have been developed online and the full material has been available at Opasnet (http://en.opasnet.org) for interested readers since each piece was first written.

The purpose of this article is to critically evaluate the performance of open policy practice. Does open policy practice have the properties of good policy support? And does it enable policy support according to the five principles in Table 1?

{| {{prettytable}}
|+'''Table 1. Principles of open policy practice. (COCCI principles)'''
!Principle || Description
|----
| Collaboration || Knowledge work is performed together with the aim of producing shared information.
|----
| Openness || All work and all information is openly available to anyone interested for reading and contributing all the time. If there are exceptions, these must be publicly justified.
|----
| Causality || The focus is on understanding and describing the causal relations between the decision options and the intended outcomes. The aim is to predict what impacts will likely occur if a particular decision option is chosen.
|----
| Criticism || All information presented can be criticised based on relevance and accordance to observations. The aim is to reject ideas, hypotheses, and ultimately decision options that do not hold against critique.
|----
| Intentionality || The decision makers explicate their objectives and decision options under consideration. Also values of other participants or stakeholders are documented and considered.
|}

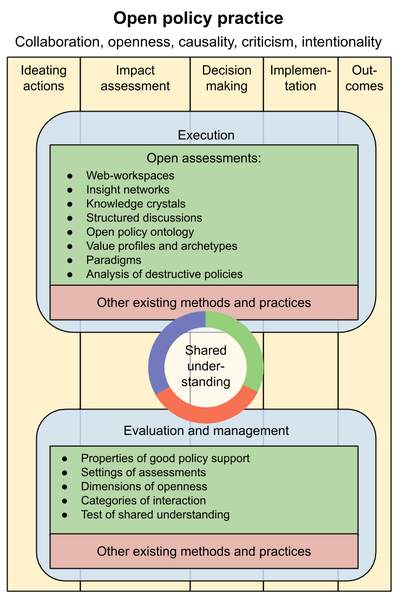

==Open policy practice==

[[image:Information flow within open policy practice.svg|thumb|400px|Figure 1. Information flows in open policy practice. Open assessments and web-workspaces have an important role as information hubs. They collect relevant information for particular decision processes and organise and synthesise it into useful formats especially for decision makers but also for anyone. The information hub works more effectively if all stakeholders contribute to one place, or alternatively facilitators collect their contributions there.]]

In this section, open policy practice is described in its current state. First, an overview is given, and then each part is described in more detail.

''Open policy practice'' is a set of methods to support and perform societal decision making in an open society, and it is the overarching concept covering all methods, tools, practices, and terms presented in this article<ref>Tuomisto JT, Pohjola M, Pohjola P. Avoin päätöksentekokäytäntö voisi parantaa tiedon hyödyntämistä. [Open policy practice could improve knowledge use.] Yhteiskuntapolitiikka 2014;1:66-75. http://urn.fi/URN:NBN:fi-fe2014031821621 (in Finnish). Accessed 1 Feb 2020.</ref>. Its theoretical foundation is in graph theory<ref name="bondy2008">Bondy, J. A.; Murty, U. S. R. (2008). Graph Theory. Springer. ISBN 978-1-84628-969-9.</ref> and systematic information structures. Open policy practice especially focuses on promoting the openness, flow and use of information in decision processes (Figure 1). Its purpose is to give practical guidance for the whole decision process from ideating possible actions to assessing impacts, deciding between options, implementing decisions, and finally to evaluating outcomes. It aims to be applicable to all kinds of societal decision situations in any administrative area or discipline. An ambitious objective of open policy practice is to be so effective that a citizen can observe improvements in decisions and outcomes, and so reliable that a citizen is reluctant to believe claims that contradict the shared understanding produced by open policy practice.

Open policy practice is based on the five principles presented in Table 1. The principles can be met if the purpose of policy support is set to produce '''shared understanding''' (a situation where different facts, values, and disagreements related to a decision situation are understood and documented). The description of shared understanding (and consequently improved actions) is thus the main output of open policy practice (see also Figure 2). It is a product that guides the decision and is the basis for evaluation of outcomes.

This guidance is formalised as '''evaluation and management''' of the work and knowledge content during a decision process. It defines the criteria against which the knowledge process needs to be evaluated and managed. It contains methods to look at what is being done, whether the work is producing the intended knowledge and outputs, and what needs to be changed. Each task is evaluated before, during, and after the actual execution, and the work is iteratively managed based on this.

The '''execution''' of a decision process is about collecting, organising and synthesising scientific knowledge and values in order to achieve objectives by informing the decision maker and stakeholders. A key part is open assessment, which typically estimates the impacts of the planned decision options. Assessment and knowledge production are also performed during the implementation and evaluation steps. Execution also contains the acts of making and implementing decisions; however, these processes are so case-specific, depending on the topic, decision maker, and the societal context, that they are not discussed in this article.

[[image:Open policy practice.png|thumb|400px|Figure 2. The three parts of open policy practice. The timeline goes roughly from left to right, but all work should be seen as iterative processes. Shared understanding as the main output is in the middle, expert-driven information production is a part of execution. Evaluation and management gives guidance to the execution.]]

=== Shared understanding ===

Shared understanding is a situation where all participants' views about a particular topic have been understood, described and documented well enough so that people can know what facts, opinions, reasonings, and values exist and what agreements and disagreements exist and why. Shared understanding is produced in collaboration by decision makers, experts, and stakeholders. Each group brings in their own knowledge and concerns. Shared understanding aims to reflect all the five principles of open policy practice. This creates requirements for the methods that can be used to produce shared understanding.

Shared understanding is always about a particular topic and produced by a particular group of participants. Depending on the participants, the results might differ, but with an increasing number of participants, it putatively approaches a shared understanding of the society as a whole. Ideally, each participant agrees that the written description correctly contains their own thinking about the topic. Participants should even be able to correctly explain what other thoughts there are and how they differ from their own. Ideally any participant can learn, understand, and explain any thought represented in the group. Importantly, there is no need to agree on things, just to agree on what the disagreements are about. Therefore, shared understanding is not the same as consensus or agreement.

Shared understanding has potentially several purposes that all aim to improve the quality of societal decisions. It helps people understand complex policy issues. It helps people see their own thoughts from a wider perspective and thus increases acceptance of decisions. It improves trust in decision makers; but it may also deteriorate trust if the actions of a decision maker are not understandable based on shared understanding. It dissects each difficult detail into separate discussions and then collects statements into an overview; this helps to allocate the time resources of participants efficiently to critical issues. It improves awareness of new ideas. It releases the full potential of the public to prepare, inform, and make decisions. How well these purposes have been fulfilled in practice in assessments is discussed in Results.

'''Test of shared understanding'''

''Test of shared understanding'' can be used to evaluate how well shared understanding has been achieved. In a successful case, all participants of a decision process give positive answers to the questions in Table 2. In a way, shared understanding is a metric for evaluating how well decision makers have embraced the knowledge base of the decision situation.

{| {{prettytable}}
|+'''Table 2. Test of shared understanding.'''
! Question !! Who is asked?
|----
| Is all relevant and important information described?
|rowspan="4"|All participants of the decision processes (including knowledge gathering processes)
|----
| Are all relevant and important value judgements described? (Those of all participants, not just decision makers.)
|----
| Are the decision maker's decision criteria described?
|----
| Is the decision maker's rationale from the criteria to the decision described?
|}
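The test can be read as a simple rule: shared understanding is reached only if every participant answers yes to every question in Table 2. The following Python sketch illustrates that rule under stated assumptions. The <code>Response</code> class, the function names, and the yes/no encoding of answers are hypothetical and are not part of open policy practice or of any Opasnet tool.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

# The four questions of Table 2, copied from the text.
QUESTIONS: Tuple[str, ...] = (
    "Is all relevant and important information described?",
    "Are all relevant and important value judgements described?",
    "Are the decision maker's decision criteria described?",
    "Is the decision maker's rationale from the criteria to the decision described?",
)


@dataclass
class Response:
    """One participant's answers (hypothetical structure for illustration)."""
    participant: str
    answers: Tuple[bool, ...]  # one yes/no answer per question, in table order


def unmet_questions(responses: Sequence[Response]) -> Dict[str, List[str]]:
    """Map each question that got a 'no' to the participants who answered no."""
    gaps: Dict[str, List[str]] = {}
    for response in responses:
        if len(response.answers) != len(QUESTIONS):
            raise ValueError(f"{response.participant}: expected {len(QUESTIONS)} answers")
        for question, answered_yes in zip(QUESTIONS, response.answers):
            if not answered_yes:
                gaps.setdefault(question, []).append(response.participant)
    return gaps


def shared_understanding_reached(responses: Sequence[Response]) -> bool:
    """True only if at least one participant answered and nobody answered no."""
    return bool(responses) and not unmet_questions(responses)


if __name__ == "__main__":
    example = [
        Response("expert", (True, True, True, True)),
        Response("citizen", (True, False, True, True)),
    ]
    print(shared_understanding_reached(example))
    for question, who in unmet_questions(example).items():
        print(f"Not yet fulfilled: {question} (answered no: {', '.join(who)})")
```

Listing the unmet questions, rather than returning only a pass or fail result, reflects the idea that the test is meant to show where documentation is still missing.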

Everything that is done aims to offer better understanding about impacts of the decision related to the decision maker's objectives. However, conclusions may be sensitive to initial values, and ignoring stakeholders' views may cause trouble at a later stage. Therefore, other values in the society are also included in shared understanding.

Shared understanding may have different levels of ambition. On an easy level, shared understanding is taken as general guidance and an attitude towards other people's opinions. Main points and disagreements are summarised in writing, so that an outsider is able to understand the overall picture.

On an ambitious level, the idea of documenting all opinions and their reasonings is taken literally. Participants' views are actively elicited and tested to see whether a facilitator is able to reproduce their thought processes. The objective here is to document the thinking in such a detailed way that a participant's views on the key questions of a policy can be anticipated from the description they have given. This is done by using insight networks, knowledge crystals, and other methods (see below). Written documentation with an available and usable structure is crucial, as it allows participation without being physically present. It also spreads shared understanding to decision makers and to those who were not involved in discussions.

Good descriptions of shared understanding are able to quickly and easily incorporate new information or scenarios from the participants. They can be examined using different premises, i.e., a user should be able to quickly update the knowledge base, change the point of view, or reanalyse what the situation would look like with alternative valuations. Ideally, a user interface would allow the user to select input values with intuitive menus and sliders and would show impacts of changes instantly.

Shared understanding as the key objective gives guidance to the policy process in general. But it also creates requirements that can be described as quality criteria for the process and used to evaluate and manage the work.

=== Evaluation and management ===

Evaluation is about following and checking the plans and progress of the decisions and implementation. Management is about adjusting work and updating actions based on evaluation to ensure that objectives are reached. Several criteria were developed in open policy practice to evaluate and describe the decision support work. Their purpose is to help participants focus on the most important parts of open policy practice.

Guidance exists about crowdsourced policymaking<ref>Aitamurto T, Landemore H. Five design principles for crowdsourced policymaking: Assessing the case of crowdsourced off-road traffic law in Finland. Journal of Social Media for Organizations. 2015;2:1:1-19.</ref>, and similar ideas have been utilised in open assessment.

'''Properties of good policy support'''

There is a need to evaluate assessment work before, during, and after it is done<ref name="pohjola2013b">Pohjola MV, Pohjola P, Tainio M, Tuomisto JT. Perspectives to Performance of Environment and Health Assessments and Models—From Outputs to Outcomes? (Review). Int. J. Environ. Res. Public Health 2013;10:2621-2642 doi:10.3390/ijerph10072621</ref>. A key question is what makes good policy support and what criteria should be used (see Table 3)<ref name="sandstrom2014">Sandström V, Tuomisto JT, Majaniemi S, Rintala T, Pohjola MV. Evaluating effectiveness of open assessments on alternative biofuel sources. Sustainability: Science, Practice & Policy 2014;10;1. doi:10.1080/15487733.2014.11908132 Assessment: http://en.opasnet.org/w/Biofuel_assessments. Accessed 1 Feb 2020.</ref>.

Fulfilling all these criteria is of course not a guarantee that the outcomes of a decision will be successful. But the properties listed have been found to be important determinants of the success of decision processes. In projects utilising open policy practice, poor performance of specific properties could be linked to particular problems observed. Evaluating these properties before or during a decision process could help to analyse what exactly is wrong, as problems with such properties are by then typically visible. Thus, using this evaluation scheme proactively makes it possible to manage the decision making process towards higher quality of content, applicability, and efficiency.

{|{{prettytable}}
|+ '''Table 3. Properties of good policy support. Here, "assessment" can be viewed as a particular expert work producing a report about a specific question, or as a wider description of shared understanding about a whole policy process. Assessment work is done before, during, and after the actual decision.
|-----
! Category
! Description
! Guiding questions
! Related principles
|-----
| Quality of content
| Specificity, exactness and correctness of information. Correspondence between questions and answers.
| How exact and specific are the ideas in the assessment? How completely does the (expected) answer address the assessment question? Are all important aspects addressed? Is there something unnecessary?
| Openness, causality, criticism
|-----
| rowspan="4"| Applicability
| ''Relevance'': Correspondence between output and its intended use.
| How well does the assessment address the intended needs of the users? Is the assessment question good in relation to the purpose of the assessment?
| Collaboration, openness, criticism, intentionality
|-----
| ''Availability'': Accessibility of the output to users in terms of e.g. time, location, extent of information, extent of users.
| Is the information provided by the assessment available when, where and to whom is needed?
| Openness
|-----
| ''Usability'': Potential of the information in the output to generate understanding among its user(s) about the topic of assessment.
| Are the intended users able to understand what the assessment is about? Is the assessment useful for them?
| Collaboration, openness, causality, intentionality
|-----
| ''Acceptability'': Potential of the output being accepted by its users. Fundamentally a matter of its making and delivery, not its information content.
| Is the assessment (both its expected results and the way the assessment is planned to be made) acceptable to the intended users?
| Collaboration, openness, criticism, intentionality
|-----
| Efficiency
| Resource expenditure of producing the assessment output either in one assessment or in a series of assessments.
| How much effort is needed for making the assessment? Is it worth spending the effort, considering the expected results and their applicability for the intended users? Are the assessment results useful also in some other use?
| Collaboration, openness
|}

''Quality of content'' refers to the output of an assessment, typically a report, model or summary presentation. Its quality is obviously an important property. If the facts are plainly wrong, it is more likely to misguide than lead to good decisions. Specificity, exactness, and correctness describe how large the remaining uncertainties are and how close the answers probably are to the truth (compared to some gold standard). In some statistical texts, similar concepts have been called precision and accuracy, although with decision support they should be understood in a flexible rather than strictly statistical sense.<ref>Cooke RM. Experts in Uncertainty: Opinion and Subjective Probability in Science. New York: Oxford University Press; 1991.</ref> Coherence means that the answers given are those to the questions asked.

''Applicability'' is an important aspect of evaluation. It looks at properties that affect how well the decision support can and will be applied. It is independent of the quality of content, i.e. despite high quality, an assessment may have very poor applicability. The opposite may also be true, as sometimes faulty assessments are actively used to promote policies. However, usability typically decreases rapidly if the target audience evaluates an assessment to be of poor quality. | |||

Relevance asks whether a good question was asked to support decisions. Identification of good questions requires lots of deliberation between different groups, including decision makers and experts, and online forums may potentially help in this. | |||

Availability is a more technical property and describes how easily a user can find the information when needed. A typical problem is that a potential user does not know that a piece of information exists even if it could be easily accessed. | |||

Usability may differ from user to user, depending on e.g. background knowledge, interest, or time available to learn the content. | |||

Acceptability is a very complex issue and most easily detectable when it fails. A common situation is that stakeholders feel that they have not been properly heard and therefore any output from decision support is perceived faulty. Doubts about the credibility of the assessor also fall into this category. | |||

''Efficiency'' evaluates resource use when performing an assessment or other decision support. Money and time are two common measures for this. Often it is most useful to evaluate efficiency before an assessment is started. Is it realistic to produce new important information given the resources and schedule available? If more or fewer resources were available, what value would be added or lost? Another aspect of efficiency is that if assessments are done openly, reuse of information becomes easier and the marginal cost and time of a new assessment decrease.

All properties of decision support, not just efficiency or quality of content, are meant to guide planning, execution, and evaluation of the whole decision support work. If they are always kept in mind, they can improve daily work. | |||

'''Settings of assessments

Sometimes, a decision process or an assessment may be missing a clear understanding of what should be done and why. An assessment may even be launched in a hope that it will somehow reveal what the objectives or other important factors are. ''Settings of assessments'' (Table 4) are used to explicate these so that useful decision support can be provided<ref name="pohjola2014">Pohjola MV. Assessment of impacts to health, safety, and environment in the context of materials processing and related public policy. In: Bassim N, editor. Comprehensive Materials Processing Vol. 8. Elsevier Ltd; 2014. pp 151–162. doi:10.1016/B978-0-08-096532-1.00814-1</ref>. Examining the sub-attributes of an assessment question can also help: | |||

* Research question: the actual question of an open assessment
* Boundaries: temporal, geographical, and other limits within which the question is considered
* Decisions and scenarios: decisions and options to assess and scenarios to consider
* Timing: the schedule of the assessment work
* Participants: people who will or should contribute to the assessment
* Users and intended use: users of the final assessment report and purposes of the use

{|{{prettytable}}
|+ '''Table 4. Important settings for environmental health and other impact assessments within the context of public policy making.
|----
! Attribute
|
* Which impacts are addressed in assessment?
* Which impacts are the most significant?
* Which impacts are the most relevant for decision making?
| Environment, health, cost, equity
|-----
|
* Which causes of impacts are recognized in assessment?
* Which causes of impacts are the most significant?
* Which causes of impacts are the most relevant for decision making?
| Production, consumption, transport, heating, power production, everyday life
|-----
|}

'''Interaction and openness

In open policy practice, the method itself is designed to facilitate openness in all its dimensions. The ''dimensions of openness'' help to identify if and how the work deviates from the ideal of openness, so that the work can be improved in this respect (Table 5)<ref name="pohjola2011">Pohjola MV, Tuomisto JT. Openness in participation, assessment, and policy making upon issues of environment and environmental health: a review of literature and recent project results. Environmental Health 2011;10:58 http://www.ehjournal.net/content/10/1/58.</ref>.

{| {{prettytable}}
|+ '''Table 5. Dimensions of openness in decision making.
! Dimension
! Description
|-----
| Scope of participation
| Who is allowed to participate in the process?
|-----
| Access to information
|-----
| Scope of contribution
| Which aspects of the issue are participants invited or allowed to contribute to?
|-----
| Impact of contribution
| How much are participant contributions allowed to have influence on the outcomes? How much weight is given to participant contributions?
|}

Openness can also be examined based on how intensive it is and what kind of collaboration between decision makers, experts, and stakeholders is aimed for<ref name="pohjola2012"/><ref>van Kerkhoff L, Lebel L. Linking knowledge and action for sustainable development. Annu. Rev. Environ. Resour. 2006. 31:445-477. doi:10.1146/annurev.energy.31.102405.170850</ref>. Different approaches are described in Table 6.

{|{{prettytable}}
|+ '''Table 6. Categories of interaction within the knowledge-policy interaction framework.
! Category
! Description
|-----
| Isolated
| Assessment and use of assessment results are strictly separated. Results are provided for intended use, but users and stakeholders cannot interfere with the making of the assessment.
|-----
| Informing
| Assessments are designed and conducted according to specified needs of intended use. Users and limited groups of stakeholders may have a minor role in providing information to the assessment, but mainly serve as recipients of assessment results.
|-----
| Participatory
| Broader inclusion of participants is emphasized. Participation is, however, treated as an add-on alongside the actual processes of assessment and/or use of assessment results.
|-----
| Joint
| Involvement and exchange of summary-level information among multiple actors is emphasised in scoping, management, communication, and follow-up of assessment. On the level of assessment practice, actions by different actors in different roles (assessor, manager, stakeholder) remain separate.
|-----
| Shared
| Different actors engage in open collaboration upon determining assessment questions, seeking answers to them, and implementing answers in practice. However, the actors involved in an assessment retain their roles and responsibilities.
|}

These evaluation methods guide the actual execution of a decision process.

=== Execution and open assessment ===

''Execution'' is the work during a decision process, including ideating possible actions, assessing impacts, deciding between options, implementing decisions, and evaluating outcomes. Execution is guided by information produced in evaluation and management. The focus of this article is on knowledge processes that support decisions. Therefore, methods to reach or implement a decision are not discussed here. | |||

''Open assessment'' is a method for performing impact assessments using insight networks, knowledge crystals, and web-workspaces (see below). Open assessment is an important part of execution and the main knowledge production method in open policy practice. | |||

An assessment aims to quantify important objectives, and especially compare differences in impacts resulting from different decision options. In an assessment, current scientific information is used to answer policy-relevant questions that inform decision makers about the impacts of different options. | |||

Open assessments are typically performed before a decision is made (but e.g. the city of Helsinki has used both ex ante and ex post approaches with its climate strategy<ref name="hnh2035"/>). The focus is by necessity on expert knowledge and how to organise it, although prioritisation is only possible if the objectives and valuations of the decision maker and stakeholders are known. For a list of major open assessments, see Appendix S1. | |||

As a research topic, open assessment attempts to answer this question: "How can factual information and value judgements be organised for improving societal decision making in a situation where open participation is allowed?" As can be seen, openness, participation, and values are taken as given premises. This was far from common practice, but not completely new, when the first open assessments were performed in the early 2000s<ref name="nrc1996"/>.

Since the beginning, the main focus has been to think about information and information flows, rather than jurisdictions, political processes, or hierarchies. So, open assessment deliberately focuses on impacts and objectives rather than questions about procedures or mandates of decision support. The premise is that if the information production and dissemination are completely open, the process can be generic, and an assessment can include information from any contributor and inform any kind of decision-making body. Of course, quality control procedures and many other issues must be functional under these conditions. | |||

==== Co-creation ====

''Co-creation'' is a method for producing open contents in collaboration, and in this context specifically knowledge production by self-organised groups. It is a discipline in itself<ref name="mauser2013"/>, and guidance about how to manage and facilitate co-creation can be found elsewhere. Here, only a few key points are raised about facilitation and structured discussion. | |||

Information has to be collected, organised, and synthesised; facilitators need to motivate and help people to share their information. This requires dedicated work and skills that are not typically available among experts or decision makers. Co-creation also contains practices and methods, such as motivating participation, facilitating discussions, clarifying and organising argumentation, moderating contents, using probabilities and expert judgement for describing uncertainties, or developing insight networks (see below) or quantitative models. Sometimes the skills needed are called interactional expertise.

Facilitation helps people participate and interact in co-creation processes using hearings, workshops, online questionnaires, wikis, and other tools. In addition to practical tools, facilitation implements principles that have been seen to motivate participation<ref name="noveck2010"/>. Three are worth mentioning here, because they have been shown to significantly affect the motivation to participate. | |||

* ''Grouping'': Facilitation methods are used to promote the participants' feeling of being important members of a group that has a meaningful, shared purpose. | |||

* ''Trust'': Facilitation builds trust among people that they can safely express their ideas and concerns, and that other members of the group support participation even if they disagree on the substance. | |||

* ''Respect'': Contributions are systematically evaluated according to their merit so that each participant receives the respect they deserve based on their contributions as individuals or members of a group. | |||

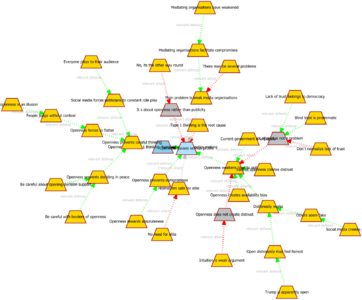

''Structured discussions'' are synthesised and reorganised discussions, where the purpose is to highlight key statements and the arguments that lead to acceptance or rejection of these statements. Discussions can be organised according to pragma-dialectical argumentation rules<ref>Eemeren FH van, Grootendorst R. A systematic theory of argumentation: The pragma-dialectical approach. Cambridge: Cambridge University Press; 2004.</ref> or an argumentation framework<ref>Dung PM. (1995) On the acceptability of arguments and its fundamental role in nonmonotonic reasoning, logic programming, and n–person games. Artificial Intelligence. 77 (2): 321–357. doi:10.1016/0004-3702(94)00041-X.</ref>, so that arguments form a hierarchical thread pointing to a main statement or statements. Attack arguments are used to invalidate other arguments by showing that they are either untrue or irrelevant in their context; defend arguments are used to protect from attacks; and comments are used to clarify issues. For an example, see Figure S2-5 in Appendix S2 and links therein.

The discussions can be natural discussions that are reorganised afterwards or online discussions where the structure of contributions is governed by the tools used. A test environment exists for structured argumentation<ref>Hastrup T. Knowledge crystal argumentation tree. https://dev.tietokide.fi/?Q10. Web tool. Accessed 1 Feb 2020.</ref>, and Opasnet has R functions for analysing structured discussions written on wiki pages. | |||
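The attack and defend logic of a structured discussion can be illustrated with a small sketch in the spirit of an abstract argumentation framework. This is not the Opasnet R code or the test environment mentioned above; the function name and the example thread are hypothetical, the sketch is written in Python rather than R, and a defend argument is modelled here simply as an attack on the attacking argument (an assumption, since abstract frameworks contain only an attack relation). The function computes which arguments remain accepted when an argument is accepted only if all of its attackers are rejected.

```python
def grounded_extension(arguments, attacks):
    """Return the arguments accepted under grounded semantics.

    arguments: iterable of argument labels
    attacks:   set of (attacker, target) pairs
    """
    arguments = set(arguments)
    # Map each argument to the set of arguments attacking it.
    attackers = {a: {x for (x, y) in attacks if y == a} for a in arguments}
    accepted, rejected = set(), set()
    changed = True
    while changed:
        changed = False
        for a in arguments - accepted - rejected:
            if attackers[a] <= rejected:
                # Every attacker has been rejected (or there are none).
                accepted.add(a)
                changed = True
            elif attackers[a] & accepted:
                # At least one accepted argument attacks this one.
                rejected.add(a)
                changed = True
    return accepted


# Hypothetical thread: a main statement, an attack on it, and a defence
# that is modelled as an attack on the attacking argument.
arguments = ["statement", "attack", "defence"]
attacks = {("attack", "statement"), ("defence", "attack")}
result = grounded_extension(arguments, attacks)
```

In this hypothetical thread the defence invalidates the attack, so the main statement stays accepted. Two arguments that only attack each other are both left undecided, which mirrors a discussion that has not yet been resolved.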

==== Insight networks ====

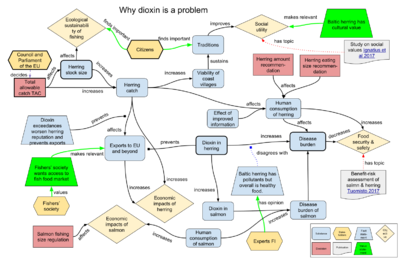

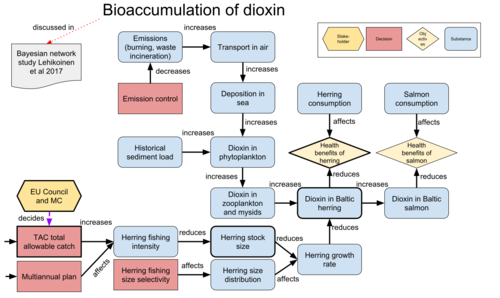

''Insight networks'' are graphs as defined in graph theory<ref name="bondy2008"/>. In an insight network, actions, objectives, and other issues are depicted with nodes, and their causal and other relations are depicted with arrows (also known as edges). An example is shown in Figure 3, which describes a potential dioxin-related decision to clean up emissions from waste incineration. The logic of such a decision can be described as a chain or network of causally dependent issues: Reduced dioxin emissions to air improve air quality and reduce dioxin deposition into the Baltic Sea; this has a favourable effect on concentrations in the Baltic herring; this reduces human exposures to dioxins via fish; and this helps to achieve an ultimate objective of reduced health risks from dioxin. Insight networks aim to facilitate understanding, analysing, and discussing complex policy issues.

[[image:Bioaccumulation of dioxin.svg|thumb|500px|Figure 3. Insight network about dioxins, Baltic fish, and health as described in the BONUS GOHERR project<ref name="goherr2020">Tuomisto JT, Asikainen A, Meriläinen P et Haapasaari P. Health effects of nutrients and environmental pollutants in Baltic herring and salmon: a quantitative benefit-risk assessment. BMC Public Health 20, 64 (2020). https://doi.org/10.1186/s12889-019-8094-1 Assessment: http://en.opasnet.org/w/Goherr_assessment, data archive: https://osf.io/brxpt/. Accessed 1 Feb 2020.</ref>. Decisions are shown as red rectangles, decision makers and stakeholders as yellow hexagons, decision objectives as yellow diamonds, and substantive issues as blue nodes. The relations are written on the diagram as predicates of sentences where the subject is at the tail of the arrow and the object is at the tip of the arrow. For other insight networks, see Appendix S2.]] | |||

Causal modelling and causal graphs as such are old ideas, and there are various methods developed for them, both qualitative and quantitative. However, the additional ideas with insight networks were that a) also all non-causal issues can and should be linked to the causal core in some way, if they are relevant to the decision, and therefore b) they can be effectively used in clarifying one's ideas, contributing, and then communicating a whole decision situation rather than just the causal core. In other words, a participant in a policy discussion should be able to make a reasonable connection between what they are saying and some node in an insight network developed for that policy issue. If they are not able to make such a link, their point is probably irrelevant. | |||
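The idea that every relevant point should connect to some node of the network can be sketched with a directed graph stored as subject, predicate, object triples. The following Python sketch is only an illustration: the node labels paraphrase the dioxin example, the predicate "affects" is a neutral placeholder, and the function name is hypothetical. It searches for a chain of arrows from a decision to an objective.

```python
from collections import deque

DECISION = "Clean up emissions from waste incineration"
OBJECTIVE = "Health risks from dioxin"

# (subject, predicate, object) triples; the subject is at the tail of the
# arrow and the object at its tip. Labels and predicates are assumptions.
edges = [
    (DECISION, "affects", "Dioxin emissions to air"),
    ("Dioxin emissions to air", "affects", "Dioxin deposition into the Baltic Sea"),
    ("Dioxin deposition into the Baltic Sea", "affects", "Dioxin concentration in Baltic herring"),
    ("Dioxin concentration in Baltic herring", "affects", "Human exposure to dioxins via fish"),
    ("Human exposure to dioxins via fish", "affects", OBJECTIVE),
]


def find_path(edges, start, goal):
    """Breadth-first search for a chain of arrows from start to goal."""
    successors = {}
    for subject, _predicate, obj in edges:
        successors.setdefault(subject, []).append(obj)
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in successors.get(path[-1], []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None  # no chain of arrows connects start to goal


path = find_path(edges, DECISION, OBJECTIVE)
unlinked = find_path(edges, "A point with no link to the network", OBJECTIVE)
```

Here `path` lists the six nodes of the causal chain, while `unlinked` is `None` because the point cannot be connected to any node in the network.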

The first implementations of insight networks were about toxicology of dioxins<ref name="tuomisto1999">Tuomisto JT. TCDD: a challenge to mechanistic toxicology [Dissertation]. Kuopio: National Public Health Institute A7; 1999.</ref> and restoration of a closed asbestos mine area<ref name="paakkila1999">Tuomisto JT, Pekkanen J, Alm S, Kurttio P, Venäläinen R, Juuti S et al. Deliberation process by an explicit factor-effect-value network (Pyrkilo): Paakkila asbestos mine case, Finland. Epidemiol 1999;10(4):S114.</ref><sup>c</sup>. In the early cases, the main purpose was to give structure to discussion about and examination of an issue rather than to be a backbone for quantitative models. In later implementations, such as in the composite traffic assessment<ref name="tuomisto2005">Tuomisto JT; Tainio M. An economic way of reducing health, environmental, and other pressures of urban traffic: a decision analysis on trip aggregation. BMC PUBLIC HEALTH 2005;5:123. http://biomedcentral.com/1471-2458/5/123/abstract Assessment: http://en.opasnet.org/w/Cost-benefit_assessment_on_composite_traffic_in_Helsinki. Accessed 1 Feb 2020.</ref> or BONUS GOHERR project<ref name="goherr2020"/>, diagrams have been used for both purposes. Most open assessments discussed later (and listed in Appendix S1) have used insight networks to structure and illustrate their content. | |||

==== Knowledge crystals ====

''Knowledge crystals'' are web pages where specific research ''questions'' are collaboratively ''answered'' by producing ''rationale'' with any data, facts, values, reasoning, discussion, models, or other information that is needed to convince a critical, rational reader (Table 7). | |||

Knowledge crystals have a few distinct features. The web page of a knowledge crystal has a permanent identifier or URL and an explicit topic, or question, which does not change over time. A user may come to the same page several times and find an up-to-date answer to the same topic. The answer changes as new information becomes available, and anyone is allowed to bring in new relevant information as long as certain rules of co-creation are followed. In a sense, the answer of a knowledge crystal is never final but it is always usable. | |||

A knowledge crystal is a practical information structure that was designed to comply with the principles of open policy practice. Open data principles are used when possible<ref>Open Knowledge International. The Open Definition. http://opendefinition.org/. Accessed 1 Feb 2020.</ref>. For example, openness and criticism are implemented by allowing anyone to contribute but only after critical examination. Knowledge crystals differ from open data, which contains little to no interpretation, and scientific articles, which are not updated. Rationale is the place for new information and discussions, and resolutions about new information may change the answer.

The purpose of knowledge crystals is to offer a versatile information structure for nodes in an insight network that describes a complex policy issue. They handle research questions of any topic and describe all causal and non-causal relations from other nodes (i.e. the nodes that may affect the answer of the node under scrutiny). They contain information as necessary: text, images, mathematics, or other forms, both quantitative and qualitative. They handle facts or values depending on the questions, and withstand misconceptions and fuzzy thinking as well. Finally, they are intended to be found online by anyone interested, and their main message to be understood and used even by a non-expert. | |||

{|{{prettytable}}
|+'''Table 7. The ''attributes'' of a knowledge crystal.
! Attribute
! Description
|-----
| '''Name'''
| An identifier for the knowledge crystal. Each page has a permanent, unique name and identifier or URL.
|-----
| '''Question'''
| A research question that is to be answered. It defines the scope of the knowledge crystal. Assessments have specific sub-attributes for questions (see section Settings of assessments)
|-----
| '''Answer'''
| An understandable and useful answer to the question. It is the current best synthesis of all available data. Typically it has a descriptive easy-to-read summary and a detailed quantitative ''result'' published as open data. An answer may contain several competing hypotheses, if they all hold against scientific critique. This way, it may include an accurate description of the uncertainty of the answer, often in a probabilistic way.
|-----
| '''Rationale'''
| Any information that is necessary to convince a critical rational reader that the answer is credible and usable. It presents to a reader the information required to derive the answer and explains how it is formed. It may have different sub-attributes depending on the page type, some examples are listed below.
* '''Data''' tell about direct observations (or expert judgements) about the topic.
* '''Dependencies''' tell what is known about how upstream knowledge crystals (i.e. causal parents) affect the answer. Dependencies may describe functional or probabilistic relationships. In an insight network, dependencies are described as arrows pointing toward the knowledge crystal.
* '''Calculations''' are an operationalisation of how to calculate or derive the answer. It uses algebra, computer code, or other explicit methods if possible.
* '''Discussions''' are structured or unstructured discussions about the details of the substance, or about the production of substantive information. On a wiki, discussions are typically located on the talk page of the substance page.
|----
| Other
| In addition to attributes, it is practical to have clarifying subheadings on a knowledge crystal page. These include: See also, Keywords, References, Related files
|}

There are different types of knowledge crystals for different uses. ''Variables'' contain substantive topics such as emissions of a pollutant, food consumption or other behaviour of an individual, or disease burden in a population (for examples, see Figure 3 and Appendix S2). ''Assessments'' describe the information needs of particular decision situations and work processes designed to answer those needs. They may also describe whole models (consisting of variables) for simulating impacts of a decision. ''Methods'' describe specific procedures to organise or analyse information. The question of a method typically starts with "How to...". For a list of all knowledge crystal types used at Opasnet web-workspace, see Appendix S3.

Openness and collaboration are promoted by design: knowledge crystals are modular, re-usable, and readable for humans and machines. This enables their direct use in several assessment models or internet applications, which is important for the efficiency of the work. Methods are used to standardise and facilitate the work across assessments. | |||
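The attribute structure of Table 7 can be sketched as a small data type. This is a hypothetical Python illustration, not the structure used in Opasnet or OpasnetUtils: the class, field, and method names are assumptions, and the example texts are placeholders rather than real results. It shows the key behaviour described above, namely that the name and question stay fixed while the answer is revised as new rationale accumulates.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeCrystal:
    name: str          # permanent identifier of the page
    question: str      # research question; defines the scope and stays fixed
    answer: str = ""   # current best synthesis; never final, always usable
    rationale: list = field(default_factory=list)  # data, dependencies, calculations, discussions

    def update(self, new_answer, justification):
        """Add a justification to the rationale, then revise the answer."""
        self.rationale.append(justification)
        self.answer = new_answer


# Placeholder content for illustration only.
crystal = KnowledgeCrystal(
    name="Example variable",
    question="What is the concentration of substance X in product Y?",
)
crystal.update("Preliminary estimate", "Data: first measurement series")
crystal.update("Revised estimate", "Discussion: resolution about a newer data set")
```

After the two updates the question is unchanged, the answer holds the latest synthesis, and the rationale keeps the full trail that led to it.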

==== Open web-workspaces ====

Insight networks, knowledge crystals, and open assessments are information objects that were not directly applicable at any web-workspace available at the time of development. Therefore, web-workspaces have been developed specifically for open policy practice. There are two major web-workspaces for this purpose: Opasnet (designed for expert-driven open assessments) and Climate Watch (designed for evaluation and management of climate mitigation policies). | |||

'''Opasnet

''Opasnet'' is an open wiki-based web-workspace and prototype for performing open policy practice, launched in 2006. It is designed to offer functionalities and tools for performing open assessments so that most if not all work can be done openly online. Its name is a short version of ''Open Assessors' Network'' and also derives from the Finnish word for guide, "opas". The purpose was to test and learn co-creation among environmental health experts and start opening the assessment process to interested stakeholders.

Opasnet is based on the MediaWiki platform because of its open-source code, wide use and abundance of additional packages, long-term prospects, functionalities for good research practices (e.g. talk pages for meta-level discussions), and full and automatic version control. Two language versions of Opasnet exist. English Opasnet (en.opasnet.org) contains all international projects and most scientific information. Finnish Opasnet (fi.opasnet.org) contains mostly project material for Finnish projects and pages targeted for Finnish audiences. A project wiki Heande (short for Health, the Environment, and Everything) requires a password and contains information that cannot (yet) be published, but the open alternatives are preferred.

Opasnet facilitates simultaneous development of theoretical framework, assessment practices, assessment work, and supporting tools. This includes e.g. information structures, assessment methods, evaluation criteria, and online software models and libraries. | |||

For modelling functionalities, the statistical software R is used via an R–MediaWiki interface. R code can be written directly to a wiki page and run by clicking a button. The resulting objects can be stored to the server and fetched later by different code. Complex models can be run with a web browser without installing anything. The server has automatic version control and archival of the model description, data, code, and results.

An R package ''OpasnetUtils'' is available (CRAN repository cran.r-project.org) to support knowledge crystals and impact assessment models. It contains the necessary functions and information structures. Specific functionalities facilitate reuse and explicit quantification of uncertainties: scenarios can be defined on a wiki page or via a model user interface, and these scenarios can then be run without changing the model code. If input values are uncertain, uncertainties are automatically propagated through the model using Monte Carlo simulation.
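
The idea of running scenarios without touching the model code, with uncertainties propagated by Monte Carlo simulation, can be illustrated with a minimal Python sketch. This is not the OpasnetUtils implementation (which is written in R); the function names, the toy model, and all distributions and numbers below are assumptions made purely for illustration.

```python
import random
import statistics


def simulate(inputs, model, n=10000, seed=1):
    """Propagate input uncertainty through `model` by Monte Carlo sampling.

    Each input is either a fixed number or a function that draws one
    random sample from the given random number generator.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(n):
        draw = {k: (v(rng) if callable(v) else v) for k, v in inputs.items()}
        results.append(model(**draw))
    return results


def health_impact(exposure, slope, population):
    # Toy model (illustrative only): cases = exposure * slope * population.
    return exposure * slope * population


# Baseline inputs; the distributions and values are hypothetical.
baseline = {
    "exposure": lambda rng: rng.lognormvariate(0.0, 0.5),
    "slope": lambda rng: rng.triangular(0.001, 0.003, 0.002),
    "population": 100000,
}

# A scenario only overrides inputs; the model code stays unchanged.
scenarios = {
    "baseline": {},
    "halved exposure": {
        "exposure": lambda rng: 0.5 * rng.lognormvariate(0.0, 0.5)
    },
}

summary = {}
for name, overrides in scenarios.items():
    outcome = simulate({**baseline, **overrides}, health_impact)
    summary[name] = statistics.mean(outcome)
```

Because both scenarios use the same seed, they share the same random draws, so the difference between the summaries reflects the scenario and not sampling noise.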

For data storage, ''Opasnet Base'', a MongoDB NoSQL database, is used. Each dataset must be linked to a single wiki page, which contains all the necessary descriptions and metadata about the data. Data can be uploaded to the database via a wiki page or a file uploader. The database has an open application programming interface for data retrieval.

For more details, see Appendix S4. | |||

'''Climate Watch'''

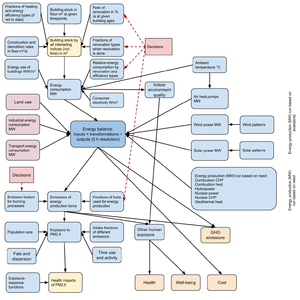

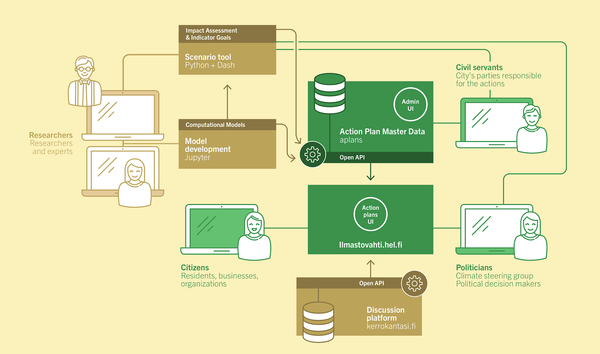

[[File:System architecture of Climate Watch.png|thumb|600px|Figure 4. System architecture of the Climate Watch web-workspace.]] | |||

Climate Watch is a web-workspace primarily for evaluating and managing climate mitigation actions (Figure 4). It was originally developed in 2018-2019 by the city of Helsinki for its climate strategy. From the beginning, scalability was a key priority: the web-workspace was made generic enough that it could easily be used by other municipalities in Finland and globally, and for the evaluation and management of topics other than climate mitigation.

Climate Watch is described in more detail by Ignatius and coworkers<ref>Ignatius S-M, Tuomisto JT, Yrjölä J, Muurinen R. (2020) From monitoring into collective problem solving: City Climate Tool. EIT Climate-KIC project: 190996 (Partner Accelerator).</ref>. In brief, Climate Watch consists of actions that aim to reduce climate emissions, and indicators that are expected to be affected by the actions and that give insights about progress. Actions and indicators are knowledge crystals, and they are causally connected, thus forming an insight network. Each action and indicator has one or more contact people who are responsible for reporting progress (and sometimes for actually implementing the actions).

The requirements for choosing the technologies were wide availability, ease of development, and an architecture based on open application programming interfaces (APIs). The public-facing user interface uses the NextJS framework (https://nextjs.org/), which is built on the React user interface framework (https://reactjs.org/) and provides support for server-side rendering and search engine optimisation. The backend is built using the Django web framework (https://www.djangoproject.com/), which provides the contact people with an administrator user interface. The data flows to the Climate Watch interface over a GraphQL API (https://graphql.org/). GraphQL is a standard that has gained wide traction in the web development community because of its flexibility and performance.
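
As a rough illustration of what a request to a GraphQL API looks like, the Python sketch below builds the JSON body of a GraphQL-over-HTTP POST request. The query, its field names, and the plan identifier are hypothetical and do not represent the actual Climate Watch schema; only the general convention of sending a <code>query</code> string with optional <code>variables</code> is standard.

```python
import json


def build_graphql_request(query, variables=None):
    """Return the JSON body of a GraphQL-over-HTTP POST request."""
    body = {"query": query}
    if variables:
        body["variables"] = variables
    return json.dumps(body)


# Hypothetical query: the field and argument names are illustrative only
# and are NOT taken from the real Climate Watch API.
ACTIONS_QUERY = """
query Actions($plan: ID!) {
  planActions(plan: $plan) {
    name
    status
    relatedIndicators { name unit }
  }
}
"""

payload = build_graphql_request(ACTIONS_QUERY, {"plan": "example-plan"})
```

The client names exactly the fields it needs (here an action's name, status, and related indicators), which is one reason GraphQL is considered flexible: a user interface can fetch actions and their connected indicators in a single request.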

Opasnet and Climate Watch have functional similarities but different technical solutions. The user interfaces for end-users and administrators in Climate Watch serve purposes similar to those of MediaWiki in Opasnet. While impact assessment and model development are performed using R in Opasnet, Climate Watch uses Python, Dash, and Jupyter.

==== Open policy ontology ==== | |||

''Open policy ontology'' is used to describe all the information structures and policy content in a systematic, coherent, and unambiguous way. The ontology is based on the concepts of open linked data and resource description framework (RDF) by the World Wide Web Consortium<ref>W3C. Resource Description Framework (RDF). https://www.w3.org/RDF/. Accessed 1 Feb 2020.</ref>. | |||

The ontology is based on vocabularies with specified terms and meanings. The relations between terms are also explicit. The resource description framework is based on the idea of triples, which have three parts: subject, predicate (or relation), and object. These can be thought of as sentences: an item (subject) is related to (predicate) another item or value (object), thus forming a claim. Claims can be further specified using qualifiers and backed up by references. Insight networks can be documented as triples, and a set of triples using this ontology can be visualised as insight network diagrams. Triple databases enable wide, decentralised linking of various sources and information.
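
The triple structure can be sketched in a few lines of Python. The item names, relation names, qualifier, and reference below are invented for illustration and are not taken from the open policy ontology; the sketch only shows how a set of triples forms a network that can be traversed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    subject: str
    predicate: str          # the relation between subject and object
    obj: str
    qualifiers: tuple = ()  # optional (key, value) pairs specifying the claim
    references: tuple = ()  # optional sources backing up the claim


# Hypothetical example content.
triples = [
    Triple("Bus electrification", "decreases", "Traffic emissions",
           references=("hypothetical source",)),
    Triple("Traffic emissions", "increases", "Total city emissions"),
    Triple("Total city emissions", "is indicator for",
           "Carbon neutrality target", qualifiers=(("year", "2035"),)),
]


def to_network(triples):
    """Group triples by subject: node -> list of (relation, target node)."""
    network = {}
    for t in triples:
        network.setdefault(t.subject, []).append((t.predicate, t.obj))
    return network


def downstream(network, start):
    """Return all nodes reachable from `start` by following relations."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        for _, target in network.get(node, []):
            if target not in seen:
                seen.add(target)
                stack.append(target)
    return seen
```

For example, <code>downstream(to_network(triples), "Bus electrification")</code> lists every item that the action is connected to through a chain of claims, which is the information an insight network diagram displays graphically.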

Open policy ontology (see Appendix S3) describes all information objects and terms described above, making sure that there is a relevant item type or relation for every critical piece of information that is described in an insight network, open assessment, or shared understanding. "Critical piece of information" means something that is worth describing as a separate node, so that it can be more easily found, understood, and used. A node itself may contain large amounts of information and data, but for the purpose of producing shared understanding about a particular decision, there is no need to highlight the node's internal data in an insight network.

The ontology was used in indicator production for the climate strategy of Helsinki<ref name="hnh2035">City of Helsinki. The Carbon-neutral Helsinki 2035 Action Plan. Publications of the Central Administration of the City of Helsinki 2018:4. http://carbonneutralcities.org/wp-content/uploads/2019/06/Carbon_neutral_Helsinki_Action_Plan_1503019_EN.pdf Assessment: https://ilmastovahti.hel.fi. Accessed 1 Feb 2020.</ref> and in a visualisation project of insight networks<ref>Tuomisto JT. Näkemysverkot ympäristöpäätöksenteon tukena [Insight networks supporting the environmental policy making](in Finnish) Kokeilunpaikka. Website. https://www.kokeilunpaikka.fi/fi/kokeilu/nakemysverkot-ymparistopaatoksenteon-tukena. Accessed 1 Feb 2020.</ref>.

For a full description of the current vocabulary in the ontology, see Appendix S3 and Figures S2-3 and S2-4 in Appendix S2. | |||

==== Novel concepts ==== | |||

This section presents novel concepts that have been identified as useful for a particular need and conceptually coherent with open policy practice. However, they have not been thoroughly tested in practical assessments of policy support. | |||

''Value profile'' is a documented list of values, preferences, and choices of a participant. Voting advice applications are online tools that ask electoral candidates about their values, world views, or decisions they would make if elected. The voters can then answer the same questions and analyse which candidates share their values. Nowadays, such applications are routinely developed by all major media houses for every national election in Finland. Thus, voting advice applications produce a kind of value profile. However, these tools are not used to collect value profiles from the public for actual decision making or between elections, although such information could be used in decision support. Value profiles are mydata, i.e. data of an individual where they themselves can decide who is able to see and use it. This requires trusted and secure information systems.

''Archetype'' is an internally coherent value profile of an anonymised group of people. Coherence means that when two values are in conflict, the value profile describes which one to prefer. Archetypes are published as open data describing the number of supporters but not their identities. People may support an archetype in full or by declaring partial support for some specific values. Archetypes aim to save effort in gathering value data from the public: when archetypes are used, not everyone needs to answer all possible questions. Using archetypes also increases security, since there is no need to handle individual people's potentially sensitive value profiles when open aggregated data about archetypes suffices.
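
A minimal Python sketch of this idea is given below. It assumes, for illustration only, that coherence is represented as a strict priority order of values; the archetype names, values, and support declarations are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Archetype:
    name: str
    priorities: tuple  # values ordered from most to least preferred

    def prefer(self, value_a, value_b):
        """Resolve a conflict by returning the value ranked higher."""
        return min(value_a, value_b, key=self.priorities.index)


# Hypothetical archetypes.
green = Archetype("green growth", ("climate", "employment", "low taxes"))
frugal = Archetype("frugal state", ("low taxes", "employment", "climate"))

# Support declarations carry only the archetype name, never an identity,
# so the published open data is an aggregate count per archetype.
declarations = ["green growth", "frugal state", "green growth"]
supporters = Counter(declarations)
```

Here <code>green.prefer("low taxes", "climate")</code> and <code>frugal.prefer("low taxes", "climate")</code> give different answers to the same conflict, while <code>supporters</code> shows how many people stand behind each answer without revealing who they are.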

Political strategy papers typically contain explicit values of the organisation, aggregated in some way from its members' individual values. The strategic values are then used in the organisation in a normative way, implying that the members should support these values in their membership roles. An archetype differs from this, because it is descriptive rather than normative, and a "membership" in an archetype does not imply any rights or responsibilities. Yet, political parties could also use archetypes to describe the values of their members.

The use of archetypes is based on the assumption that although their potential number is very large, most of a population's values relevant for a particular policy can be covered with a manageable number of archetypes. As a comparison, there are usually from two to a dozen significant political parties in a democratic country rather than hundreds. There is also research on human values showing that they can be systematically evaluated using a fairly small number (e.g., 4, 10, or 19) of different dimensions<ref>Schwartz SH, Cieciuch J, Vecchione M, Davidov E, Fischer R, Beierlein C, Ramos A, Verkasalo M, Lönnqvist J-E. Refining the theory of basic individual values. Journal of Personality and Social Psychology. 2012: 103; 663–688. doi: 10.1037/a0029393.</ref>.

''Paradigms'' are collections of rules that describe the inferences that participants would make from data in the system. For example, the scientific paradigm has rules about criticism and a requirement that statements must be backed up by data or references. Participants are free to develop paradigms with any rules of their choosing, as long as they can be documented and operationalised within the system. For example, a paradigm may state that when in conflict, priority is given to the opinion presented by a particular authority. Hybrid paradigms are also allowed. For example, a political party may follow the scientific paradigm in most cases, but when economic assessments are ambiguous, the party chooses an interpretation that emphasises the importance of an economically active state (or alternatively a market approach with a passive state).
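
One way to operationalise paradigms is to treat each paradigm as a function that takes a set of statements and returns the claims it accepts. The Python sketch below follows this assumption; the rule definitions are deliberately simplistic, and the statements, authors, and references are hypothetical.

```python
def scientific(statements):
    """Simplified scientific paradigm: accept only referenced claims."""
    return {s["claim"] for s in statements if s["references"]}


def authority(name):
    """Paradigm that accepts whatever the named authority claims."""
    def rule(statements):
        return {s["claim"] for s in statements if s["author"] == name}
    return rule


def hybrid(primary, fallback):
    """Use `primary` unless its result is ambiguous, then use `fallback`."""
    def rule(statements):
        accepted = primary(statements)
        return accepted if len(accepted) == 1 else fallback(statements)
    return rule


# Hypothetical statements with conflicting, referenced claims.
statements = [
    {"claim": "tax cut increases growth", "author": "institute A",
     "references": ["study 1"]},
    {"claim": "tax cut decreases growth", "author": "institute B",
     "references": ["study 2"]},
    {"claim": "tax cut has no effect", "author": "blogger",
     "references": []},
]

party_line = hybrid(scientific, authority("institute A"))
```

In this example the scientific rule alone leaves two conflicting claims, so the hybrid paradigm falls back to the interpretation of its preferred authority, mirroring the political party example above.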

''Destructive policy'' is a policy that a) is actually being implemented or planned, making it politically relevant, b) causes significant harm to most or all stakeholder groups, as measured using their own interests and objectives, and c) has a feasible, less harmful alternative. Societal benefits are likely to be greater if a destructive policy is identified and abandoned, compared with a situation where an assessment only focuses on showing that one good policy option is slightly better than another one. | |||
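
The three criteria can be written as a simple check. The Python sketch below is one possible reading under stated assumptions: harm is expressed as a net effect per stakeholder group on each group's own scale (negative means harm), "most groups" means more than half, the alternatives passed in are assumed to be feasible, and "less harmful" is judged by the sum of net effects. All names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    name: str
    implemented_or_planned: bool
    # Stakeholder group -> net effect measured by the group's own
    # objectives (negative values mean harm).
    net_effect_by_group: dict


def is_destructive(policy, feasible_alternatives, majority=0.5):
    # a) The policy must be politically relevant.
    if not policy.implemented_or_planned:
        return False
    # b) It must harm most stakeholder groups.
    effects = policy.net_effect_by_group
    harmed = [group for group, effect in effects.items() if effect < 0]
    if len(harmed) <= majority * len(effects):
        return False
    # c) A feasible, less harmful alternative must exist.
    total = sum(effects.values())
    return any(
        sum(alt.net_effect_by_group.values()) > total
        for alt in feasible_alternatives
    )


# Hypothetical example.
current = Policy("A", True, {"residents": -2, "industry": -1, "city": 1})
option = Policy("B", False, {"residents": 1, "industry": -1, "city": 1})
```

With these numbers, <code>is_destructive(current, [option])</code> is true, whereas the same policy is not flagged when no alternative is supplied, which reflects criterion c.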

There are a few mechanisms that may explain why destructive policies exist. First, a powerful group can dominate policymaking to their own benefit, causing harm to others. Second, the "prisoner's dilemma" or "tragedy of the commons" makes a globally optimal solution suboptimal for each stakeholder group, thus draining support from it. Third, the issue is so complex that the stability of the whole system is threatened by changes<ref>Bostrom N. (2019) The Vulnerable World Hypothesis. Global Policy 10: 4: 455-476. https://doi.org/10.1111/1758-5899.12718.</ref>. Advice about destructive policies may produce support for paths out of these frozen situations.

An analysis of destructive policies attempts to systematically analyse policy options and identify, describe, and motivate rejection of those that appear destructive. The tentative questions for such an analysis include the following. | |||

* Are there relevant policy options or practices that are not being assessed? | |||

* Do the policy options have externalities that are not being assessed? | |||

* Are there relevant priorities among stakeholders that are not being assessed? | |||

* Is there strong opposition against some options among the experts or stakeholders? What is the reasoning for and science behind the opposition? | |||

* Is there scientific evidence that an option is unable to reach the objectives or is significantly worse than another option? | |||

The current political actions to mitigate the climate crisis are so far from the global sustainability goals that there must be some destructive policies in place. Identification of destructive policies often requires that an assessor thinks outside the box and is not restricted to default research questions. In this example, such questions could be: "What is such a policy B that fulfils the objectives of the current policy A but with fewer climate emissions?", and "Can we reject the null hypothesis that A is better than B in the light of data and all major archetypes?" This approach has a premise that rejection is more effective than confirmation, an idea that was already presented by Karl Popper<ref name="popper1963"/>.

Parts of open policy practice have been used in several assessments. In this article, we will evaluate how these methods have performed. | |||

== Methods == | |||