Open assessment: Difference between revisions

(→Open assessment as a methodology: renewed according to INTARESE purpose & basic concepts) |

|||

| (25 intermediate revisions by 4 users not shown) | |||

| Line 2: | Line 2: | ||

[[Category:Opasnet]] | [[Category:Opasnet]] | ||

[[Category:Glossary term]] | [[Category:Glossary term]] | ||

{{encyclopedia|moderator=Jouni}} | [[Category:THL publications 2011]] | ||

{{encyclopedia|moderator=Jouni | |||

| reference = {{publication | |||

|authors = Jouni T. Tuomisto, Mikko V. Pohjola | |||

|publishingyear = 2011 | |||

}} | |||

}} | |||

''For a description about a web-workspace related to open assessment, see [[Opasnet]]. | ''For a description about a web-workspace related to open assessment, see [[Opasnet]]. | ||

| Line 53: | Line 59: | ||

# Not everyone is a systematic liar. | # Not everyone is a systematic liar. | ||

===Important methods=== | |||

{| {{prettytable}} | |||

|+ '''Some of the important methods used in the pragmatic approach to knowledge-based policy. The list is not exhaustive but should give the reader a flavor of the kinds of methods that are needed. See links in References for more detailed descriptions. [http://heande.opasnet.org/heande/index.php?title=Effective_knowledge-based_policy_-_a_pragmatic_approach&oldid=36849#Framework_for_knowledge-based_policy_making] | |||

! Method | |||

! Description | |||

! Implementation | |||

! References | |||

|---- | |||

! colspan="4"| Knowledge support | |||

|---- | |||

| Open assessment | |||

| Overall method for knowledge support in a situation where open participation is allowed. Specifically, it deals with the issue of systematically combining scientific information and value judgements for improving societal decision making. | |||

| Opasnet (open web workspace) http://en.opasnet.org. For each topic, an assessment page is opened in Opasnet. Detailed content is located on several web pages, each of which deals with one topic and can be used by several assessments. | |||

| Tuomisto and Pohjola 2007, Pohjola et al 2011: Pragmatic knowledge services [http://en.opasnet.org/w/Open_assessment] | |||

|---- | |||

| Openness (participation, contribution, availability of information) | |||

| All information is made available to everyone at all stages of the work. Comments and contributions are also accepted at all times and incorporated in the relevant information products. | |||

| Opasnet enables impact assessments or discussions to be started immediately when a need emerges. A web page can be used as a home for the work, and the link is used to invite readers and contributors. | |||

| | |||

|---- | |||

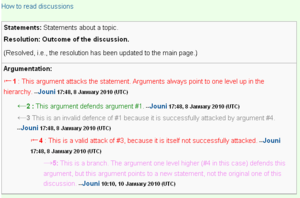

| Discussion and argumentation | |||

| Discussions are organised into formal argumentations, where a statement is presented and then defended or attacked by arguments. Arguments themselves can also be attacked and defended, which produces a hierarchical structure. Discussion rules are based on pragma-dialectical argumentation theory. | |||

| In practice, a formal argumentation is usually created afterwards based on written, online, or live discussions and materials. Opasnet pages can be used to create and show hierarchical argument structures. | |||

| van Eemeren and Grootendorst 2005 [http://en.opasnet.org/w/Discussion] | |||

|---- | |||

| Falsification | |||

| Falsification is based on the idea that anyone can suggest a hypothesis as an answer to a scientific question. The hypothesis is assumed plausible until it is convincingly falsified based on irrelevance, illogicality, or inconsistency with observations. The approach is in contrast with a common scientific practice where a hypothesis is ignored until it is validated (to the satisfaction of the establishment). | |||

| Anyone is allowed to edit a page in Opasnet. A contribution, unless falsified, may also affect the statement (of a discussion), the answer (to the question on a page), or the conclusion (of an assessment). Each page has a moderator who checks for relevance, prevents vandalism and requests further comments from experts in a controversial situation. | |||

| Popper 1939 | |||

|---- | |||

| Information structure | |||

| Information is collected, synthesised and made available based on topic (instead of owner, time, or organisation, as is usual). Information is organised in a standard form (question, answer, rationale) that does not depend on the topic or on the level of maturity of the content. Topics can be split into sub-topics and handled separately before making a synthesis; hence the structure facilitates contributions without being easily disrupted. | |||

| Opasnet pages have the same general structure: question (a research question about the topic), answer (the current best answers to the question, including uncertainties), and rationale (anything that is needed to convince a reader about the answer). There are also some detailed structure guidelines to support the general structure such as references or categories. | |||

| Tuomisto and Pohjola 2007, [http://en.opasnet.org/w/Attribute] | |||

|---- | |||

| Shared understanding | |||

| A situation where all participants are able to describe what views there are about the topic at hand among the participants, where the agreements and disagreements are, and why people disagree. | |||

| In practice, shared understanding is a written description of agreements and disagreements. Shared understanding is properly described when all participants think that their own point of view is adequately described. Assessments in Opasnet aim at shared understanding. | |||

| Tuijhuis et al 2012 [http://en.opasnet.org/w/Shared_undestanding] | |||

|---- | |||

! colspan="4"| Evaluation | |||

|---- | |||

| Properties of good assessment | |||

| List of properties that should be evaluated about the quality of content, applicability, and efficiency of an assessment or assessment work. The properties evaluate the potential of an assessment to fulfil its purpose of informing and improving decision making and thus actually improve the outcome. | |||

| The properties are used to evaluate and manage the assessment work during the whole process. The question is constantly asked: Does what we are planning to do actually improve the properties of our assessment in the best possible way? | |||

| Tuomisto and Pohjola 2007, [http://en.opasnet.org/w/Properties_of_good_assessment] | |||

|---- | |||

| Dimensions of openness | |||

| Five different perspectives on openness in external participation in assessment processes: scope of participation, access to information, timing of openness, scope of contribution, and impact of contribution. | |||

| Perspectives are used to evaluate assessment work (past, ongoing, or planned) to identify problems with openness and participation and tackle them. | |||

| Pohjola and Tuomisto: Environmental Health 2011, [http://en.opasnet.org/w/Dimensions_of_openness] | |||

|---- | |||

| Purposes, goals and evaluation criteria | |||

| The purpose and objectives of the upcoming decision, and criteria to evaluate success, are made explicit at the very beginning of the process. This makes it possible to a) evaluate and manage the planned work, b) comment on the work, and c) comment on the objectives. | |||

| | |||

| | |||

|---- | |||

| Relational evaluation approach | |||

| | |||

| | |||

| [http://www.innokyla.fi] | |||

|---- | |||

! colspan="4"| Management | |||

|---- | |||

| Constant evaluation and adjustment | |||

| Based on the evaluation criteria described above, the decision support, decision making, and implementation are constantly being evaluated and adjusted. | |||

| | |||

| | |||

|---- | |||

| Facilitation and moderation | |||

| The key players (experts, decision makers, interest groups) are typically experienced neither with the practices of open assessment nor with the tools and web workspaces used. Therefore, there is a continuous need for moderation that converts information content from the format in which it was originally produced into a format that follows the information structures needed. In addition, there is a need to facilitate meetings, discussions, committees, and evaluations to obtain the knowledge from people and facilitate learning. | |||

| | |||

| | |||

|---- | |||

| Guiding decisions/topics? | |||

| | |||

| | |||

| | |||

|---- | |||

| Interaction, effectiveness? | |||

| | |||

| | |||

| | |||

|---- | |||

|} | |||

===Basic procedures=== | ===Basic procedures=== | ||

'''[[Inference rules]]''' are used to decide what to believe. The rules are summarised | '''[[Inference rules]]''' are used to decide what to believe. The rules are summarised as follows: | ||

# Anyone can promote a [[statement]] about anything (promote = claim that the [[statement]] is true). | |||

# A promoted [[statement]] is considered '''valid''' unless it is invalidated (i.e., convincingly shown not to be true). | |||

# The validity of a [[statement]] is always conditional to a particular [[group]] (which is or is not convinced). | |||

# A [[statement]] always has a field in which it can be applied. By default, a scientific [[statement]] applies in the whole universe and a moral [[statement]] applies within a group that considers it valid. | |||

# Two moral [[statement]]s by a single group may be conflicting only if the fields of application do not overlap. | |||

# There may be [[uncertainty]] about whether a [[statement]] is true (or whether it should be true, in the case of moral [[statement]]s). This can be quantitatively measured with [[subjective probability|subjective probabilities]]. | |||

# There can be other rules than these inference rules for deciding what a [[group]] should believe. Rules are also [[statement]]s and they are validated or invalidated just like any [[statement]]. | |||

# If two people within a [[group]] promote conflicting [[statement]]s, the ''a priori'' belief is that each statement is equally likely to be true. | |||

# ''A priori'' beliefs are updated into ''a posteriori'' beliefs based on observations (in the case of scientific [[statement]]s) or opinions (in the case of moral [[statement]]s) and open criticism that is based on shared rules. In practice, this means the use of the [[scientific method]]. Opinions of each person are given equal weight. | |||
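The last two rules can be illustrated with a minimal sketch. It assumes that the updating step is done with Bayes' rule, which the rules above suggest (through subjective probabilities) but do not prescribe; the function names `equal_priors` and `update` and the example likelihoods are hypothetical and are not part of Opasnet.

```python
def equal_priors(statements):
    """Conflicting statements start out equally likely (a priori belief)."""
    p = 1.0 / len(statements)
    return {s: p for s in statements}


def update(prior, likelihood):
    """Turn a priori beliefs into a posteriori beliefs with Bayes' rule.

    likelihood[s] is the assumed probability of the observation
    if statement s were true.
    """
    unnormalised = {s: prior[s] * likelihood[s] for s in prior}
    total = sum(unnormalised.values())
    if total == 0:
        raise ValueError("the observation is impossible under every statement")
    return {s: v / total for s, v in unnormalised.items()}


# Two people promote conflicting statements A and B.
prior = equal_priors(["A", "B"])
# Hypothetical observation that is four times as likely under A as under B.
posterior = update(prior, {"A": 0.8, "B": 0.2})
```

In this sketch the belief in A rises from 0.5 to about 0.8 after the observation. Moral statements, where opinions rather than observations drive the update, are not covered here.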

Tiers of open assessment process describe typical phases of work when an open assessment is performed. There are three tiers recognised as follows: | |||

* Tier I: Definition of the use purpose and scope of an assessment. | |||

* Tier II: Definition of the decision criteria. | |||

* Tier III: Information production. | |||

It is noteworthy that the three tiers closely resemble the first three phases of integrated environmental health impact assessment ([[IEHIA]]), but the fourth phase (appraisal) is not a separate tier in open assessment. Instead, appraisal and information use happen at all tiers as a continuous and iterative process. In addition, the tiers also have some similarities to the approach developed by the [[BRAFO]] project. | |||

[[image:Discussion example.png|thumb|An example of [[discussion]].]] | [[image:Discussion example.png|thumb|An example of [[discussion]].]] | ||

It is clear that within a self-organised group, not all people agree on all scientific or moral statements. The good news is that agreement is neither expected nor hoped for. There are strong but simple rules to resolve disputes, namely rules of structured '''[[discussions]]'''. In straightforward cases, discussions can be informal, but in more complicated or heated situations, the discussion rules are followed: | |||

# Each discussion has one or more [[statement]]s as a starting point. The validity of the statements is the topic of the discussion. | |||

# A [[statement]] is valid unless it is attacked with a valid [[argument]]. | |||

# [[Statement]]s can be defended or attacked with [[argument]]s, which are themselves treated as [[statement]]s of smaller [[discussion]]s. Thus, a hierarchical structure of defending and attacking arguments is created. | |||

# When the discussion is resolved, the content of ''all'' valid statements is incorporated into the [[information object]]. All resolutions are temporary, and anyone can reopen a discussion. Actually, a resolution means nothing more than a situation where the currently valid statements are included in the content of the relevant [[information object]]. | |||
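The discussion rules above can be sketched as a small recursive data structure. This is only an illustration under stated assumptions: the class `Statement` and the function `is_valid` are hypothetical names, and defending arguments are merely recorded, because under rule 2 only a valid attack can invalidate a statement.

```python
from dataclasses import dataclass, field


@dataclass
class Statement:
    """A statement or argument; arguments are statements of smaller discussions."""
    text: str
    attacks: list = field(default_factory=list)   # arguments attacking this statement
    defences: list = field(default_factory=list)  # arguments defending it (recorded only)


def is_valid(statement):
    """A statement is valid unless it is attacked by a valid argument."""
    return not any(is_valid(argument) for argument in statement.attacks)


# Hypothetical discussion: the claim is attacked, and the attack is itself attacked.
rebuttal = Statement("The cited study measured a different exposure.")
attack = Statement("A study found no effect.", attacks=[rebuttal])
claim = Statement("The exposure causes the effect.", attacks=[attack])
```

Here `rebuttal` is unattacked and therefore valid, which invalidates `attack` and leaves `claim` valid. Because all resolutions are temporary, adding a new valid attack to `rebuttal` would flip the outcome the next time `is_valid(claim)` is evaluated.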

===Technical functionalities supporting open assessment=== | ===Technical functionalities supporting open assessment=== | ||

'''[[Opasnet]]''' is the web-workspace for making open assessments. The user interface is a wiki and it is | '''[[Opasnet]]''' is the web-workspace for making open assessments. The user interface is a wiki and it is in many respects similar to [[Wikipedia]], although it also has enhanced functionalities for making assessments. One of the key ideas is that all work needed in an assessment can be performed using this single interface. Everything required to undertake and participate in an assessment is therefore provided, whether it be information collection, numerical modelling, [[discussion]]s, statistical analyses on original data, publishing original research results, [[peer review]], organising and distributing tasks within a group, or dissemination of results to decision-makers. | ||

In practice, [[Opasnet]] is an overall name for many functionalities besides the wiki, but because the wiki is the interface for users, [[Opasnet]] is often used as a synonym for the Opasnet wiki. Other major functionalities exist as well, as outlined below. The main article about this topic is [[Opasnet structure]]. | |||

Most variables have numerical values as their [[result]]s. Often these are uncertain and they are expressed as probability distributions. A web page is an impractical place to store and handle this kind of information. For this purpose, a database called '''[[Opasnet | Most variables have numerical values as their [[result]]s. Often these are uncertain and they are expressed as probability distributions. A web page is an impractical place to store and handle this kind of information. For this purpose, a database called '''[[Opasnet]]''' Base is used. This provides a very flexible storage platform, and almost any results that can be expressed as two-dimensional tables can be stored in [[Opasnet Base]]. Results of a variable can be retrieved from the respective [[Opasnet]] page. [[Opasnet]] can be used to upload new results into the database. Further, if one [[variable]] (B) is causally dependent on [[variable]] (A), the result of A can be automatically retrieved from [[Opasnet Base]] and used in a formula for calculating B. | ||
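The idea of storing results as samples and computing a downstream variable from an upstream one can be sketched as follows. This is not the Opasnet Base interface: the dictionary `store`, the functions `sample_a` and `formula_b`, and the chosen distribution and factor are all hypothetical stand-ins for illustration.

```python
import random


def sample_a(n, seed=0):
    """Draw n samples for the uncertain upstream variable A (assumed normal)."""
    rng = random.Random(seed)
    return [rng.gauss(10.0, 2.0) for _ in range(n)]


def formula_b(a_samples, factor=1.5):
    """Formula of variable B: computed iteration by iteration from the result of A."""
    return [factor * a for a in a_samples]


# A plain dictionary stands in for the result database.
store = {}
store["A"] = sample_a(1000)          # upload the result of A
store["B"] = formula_b(store["A"])   # retrieve A and use it to calculate B
```

Keeping the samples aligned by iteration means that the dependency between A and B is preserved in the stored results, which is what makes later assessment-level analyses possible.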

Because [[Opasnet Base]] contains samples of distributions of variables, it is actually | Because [[Opasnet Base]] contains samples of distributions of variables, it is actually a very large [[Bayesian belief network]], which can be used for assessment-level analyses and conditioning and optimising different decision options. In addition to finding optimal decision options, [[Opasnet Base]] can be used to assess the value of further information for a particular decision. This statistical [[method]] is called '''[[Value of information]]'''. | ||
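As a rough illustration of a value of information analysis, the sketch below computes the expected value of perfect information (EVPI) from sampled utilities: the expected utility when the best option can be chosen separately in each iteration, minus the expected utility of the single option that is best on average. The function name `evpi`, the option names, and the numbers are hypothetical; this is a generic textbook calculation, not the Opasnet implementation.

```python
def evpi(utilities):
    """Expected value of perfect information from sampled utilities.

    utilities[d] is a list of utility samples for decision option d;
    index i refers to the same iteration (state of the world) in every list.
    """
    options = list(utilities)
    n = len(utilities[options[0]])
    if any(len(utilities[d]) != n for d in options):
        raise ValueError("all options need the same number of samples")
    # Best expected utility when one option must be chosen under uncertainty.
    best_without_info = max(sum(utilities[d]) / n for d in options)
    # Expected utility when the best option can be picked in each iteration.
    best_with_info = sum(max(utilities[d][i] for d in options) for i in range(n)) / n
    return best_with_info - best_without_info


# Hypothetical example with two options and four equally likely iterations.
samples = {"act": [10, -5, 10, -5], "wait": [0, 0, 0, 0]}
value = evpi(samples)
```

In this example "act" is the best option on average (mean utility 2.5), but with perfect information one would switch to "wait" in the two bad iterations (mean utility 5), so further information is worth at most 2.5 utility units.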

[[Opasnet]] contains '''modelling functionalities''' for numerical models. It is an object-oriented functionality based on [[R]] statistical software and the results in [[Opasnet Base]]. Each [[information object]] (typically a [[variable]]) contains a [[formula]] which has detailed instructions about how its [[result]] should be computed, often based on results of upstream [[variable]]s in a model. | [[Opasnet]] contains '''modelling functionalities''' for numerical models. It is an object-oriented functionality based on the [[R]] statistical software and the results in [[Opasnet Base]]. Each [[information object]] (typically a [[variable]]) contains a [[formula]] which has detailed instructions about how its [[result]] should be computed, often based on results of upstream [[variable]]s in a model. | ||

===Meta level functionalities=== | ===Meta level functionalities=== | ||

In addition to work and discussions about the actual topics related to real-world decision-making, there is also a meta level in [[Opasnet]]. Meta level means that there are discussions and work | In addition to work and discussions about the actual topics related to real-world decision-making, there is also a meta level in [[Opasnet]]. Meta level means that there are discussions and work about the contents of [[Opasnet]]. The most obvious expression of this are the '''[[rating bar]]s''' in the top right corner of many [[Opasnet]] pages. '''[[Peer rating]]''' means that users are requested to evaluate the scientific quality and usefulness of that page on a scale from 0 to 100. This information can then be used by the assessors to evaluate which parts of an assessment require more work, or by readers who want to know whether the presented estimates are reliable for their own purpose. | ||

The users are also allowed to make '''[[peer review]]s''' of pages. These are similar to [[peer review]]s in scientific journals with written evaluations of the scientific quality of content. Another form of written evaluation | The users are also allowed to make '''[[peer review]]s''' of pages. These are similar to [[peer review]]s in scientific journals, with written evaluations of the scientific quality of content. Another form of written evaluation is '''[[acknowledgements]]''', which describe who has contributed what to the page and what fraction of the merit should be given to each contributor. | ||

Estimates of scientific quality, peer reviews and acknowledgements can be used to | Estimates of scientific quality, peer reviews and acknowledgements can be used systematically to calculate how much each contributor has done in [[Opasnet]], though these practices are not yet well developed: [[Special:ContributionScores|Contribution scores]] are so far the only systematic method even roughly to estimate contributions quantitatively. | ||

'''[[Respect theory]]''' is a [[method]] for estimating the value of freely usable [[information object]]s to a [[group]]. This method is under development, and hopefully it will provide practical guidance for distributing merit among contributors in [[Opasnet]]. | '''[[Respect theory]]''' is a [[method]] for estimating the value of freely usable [[information object]]s to a [[group]]. This method is under development, and hopefully it will provide practical guidance for distributing merit among contributors in [[Opasnet]]. | ||

===Why does open assessment work?=== | ===Why does open assessment work?=== | ||

Many people are (initially at least) sceptical about the effectiveness of open assessment. In part, this is because the approach is new and has not yet been widely applied and validated. Most examples of its use are for demonstration purposes. A number of reasons can nevertheless be advanced in support of its use: | |||

* In all [[assessment]]s, there is a lack of resources, and this limits the quality of the outcome. With important (and controversial) topics, opening up an [[assessment]] to anyone will bring new resources to the [[assessment]] in the form of interested volunteers. | |||

* In all | * The rules of open assessment make it feasible to organise the increased amount of new data (which may at some points be of low quality) into high-quality syntheses within the limits of new resources. | ||

* The rules of open assessment make it | * Participants are relaxed with the idea of freely sharing important information - a prerequisite of an effective open assessment - because open assessments are motivated by the shared hope for societal improvements and not by monetary profit. This is unlike in many other areas where information monopolies and copyrights are promoted as means to gain competitive advantage in a market, but as a side effect result in information barriers. | ||

* Participants are relaxed with the idea of | * Problems due to an overly narrow initial scoping of the issue are reduced by having more eyes look at the topic throughout the assessment process. | ||

* | * It becomes easy systematically to apply the basic principles of the [[scientific method]], namely rationale, observations and, especially, [[open criticism]]. | ||

* It becomes | * Any information organised for any previous [[assessment]] is readily available for a new [[assessment]] on an analogous topic. The work time for data collection and the calendar time from data collection to utilisation are also reduced, thus increasing efficiency. | ||

* Any information organised for any previous [[assessment]] is readily available for a new [[assessment]] | |||

* All information is organised in a standard format which makes it possible to develop powerful standardised methods for data mining and manipulation and consistency checks. | * All information is organised in a standard format which makes it possible to develop powerful standardised methods for data mining and manipulation and consistency checks. | ||

* It is technically easy to prevent malevolent attacks against the content of an assessment (on a topic page in Opasnet wiki) without restricting the discussion about | * It is technically easy to prevent malevolent attacks against the content of an assessment (on a topic page in Opasnet wiki) without restricting the discussion about, or improvement of, the content (on a related discussion page); the resolutions from the discussions are simply updated to the actual content on the topic page by a trusted moderator. | ||

These points support the contention that open assessment (or approaches adopting similar principles) will take over a major part of information production motivated by societal needs and improvement of societal decision-making. The strength of this argument is already being shown by social interaction initiatives, such as Wikipedia and Facebook. However, an economic rationale also exists: open assessment is cheaper to perform and easier to utilise, and can produce higher quality outputs than current alternative methods to produce societally important information. | |||

==See also== | ==See also== | ||

{{Help}} | |||

* [[Open Assessors' Network]] | * [[Open Assessors' Network]] | ||

* [[ | * [[Opasnet structure]] | ||

* [[Assessment]] | |||

* [[Variable]] | |||

* [[Opasnet Base]] | * [[Opasnet Base]] | ||

* [[Using Opasnet in an assessment project]] | |||

* [[Benefit-risk assessment of food supplements]] (more information about tiers) | |||

* [http://en.opasnet.org/w/Special:TaskList/Opasnet Task list for Opasnet] | |||

* [[Discussion structure]] [[Discussion method]] [[Discussion]] | * [[Discussion structure]] [[Discussion method]] [[Discussion]] | ||

* [http://en.opasnet.org/en-opwiki/index.php?title=Open_assessment&oldid=17193 A previous version] containing topics ''Basics of Open assessment'' and ''Why is open assessment a revolutionary method?''. | * [http://en.opasnet.org/en-opwiki/index.php?title=Open_assessment&oldid=17193 A previous version] containing topics ''Basics of Open assessment'' and ''Why is open assessment a revolutionary method?''. | ||

* [[Falsification]] | * [[Falsification]] | ||

===EFSA literature=== | |||

# What is the main message of the piece? | |||

# What does the piece offer for the use of knowledge in decision making? | |||

# What does the piece say specifically about impact assessment? | |||

* '''Brabham D.C. (2013). Crowdsourcing. Cambridge, Massachusetts: The MIT Press. | |||

** What crowdsourcing is, what it is not, and how it works. Also describes related concepts, such as collective intelligence and the wisdom of crowds, both of which are also used in open assessment. "Crowdsourcing is an online, distributed problem solving and production model that leverages the collective intelligence of online communities for specific purposes set forth by a crowdsourcing organization." These ideas are similar to those of open assessment, and knowledge of crowdsourcing could help raise participation in open assessments. | |||

* '''European Commission, Open Infrastructures for Open Science. Horizon 2020 Consultation Report. [http://cordis.europa.eu/fp7/ict/e-infrastructure/docs/open-infrastructure-for-open-science.pdf] | |||

** Paints a picture of the future of science: discusses how we can store and manage all the information that science will produce in the future and how it will be accessible. Describes how data will be made more open in the future: not only the publications but also the raw data will be open for reuse. This not only helps other researchers but also gets the general public more involved in and better informed about science, which leads to better decisions. Covers Open Scientific e-Infrastructure. Also discusses modelling as a new way of making science, as opposed to direct observation. | |||

* '''European Commission, 2013. Digital Science in Horizon 2020. [https://ec.europa.eu/digital-agenda/en/open-science] | |||

** Open science aims to make science more open, easily accessible, and transparent, and thus to make the making of science more efficient. This is done by integrating ICT into scientific processes. Various aspects of digital science improve the trustworthiness of science and its availability for policy making, in the same way as Opasnet. Discusses the potential and challenges of this way of making science. | |||

* '''European Food Safety Authority (EFSA), 2014. Discussion Paper: Transformation to an Open EFSA. [http://www.efsa.europa.eu/en/corporate/doc/openefsadiscussionpaper14.pdf] | |||

** This paper is designed to promote a discussion and to seek the input of EFSA’s partners and stakeholders as well as experts and practitioners in the field of open government and open science. EFSA is planning to become more transparent and open, also with research, so that anyone interested can follow the progress of a research project and see the raw data, not only results. This also gives the public in the EU the chance to participate. | |||

* '''European Food Safety Authority (EFSA), 2015. Principles and process for dealing with data and evidence in scientific assessments. [http://www.efsa.europa.eu/sites/default/files/scientific_output/files/main_documents/4121.pdf] | |||

** Illustrates the principles and process for evidence use in EFSA. In EFSA the principles for evidence use are impartiality, excellence in scientific assessments (specifically related to the concept of methodological quality), transparency and openness, and responsiveness. Also talks about the process of dealing with data and evidence in scientific assessments. | |||

* '''Edited by Wilsdon J, and Doubleday R (2015). Future Directions for scientific advice in Europe. [http://www.csap.cam.ac.uk/projects/future-directions-scientific-advice-europe/] | |||

** A book about scientific advice in Europe. Contains 16 essays by policymakers, practitioners, scientists and scholars from across Europe about how to use knowledge and scientific information as basis for decision making in Europe and thoughts about how the relationship between science and policies will develop in the future. To know exactly what Opasnet could get out of it, one would have to read the book. | |||

* '''F1000 Research, 2015. Guide to Open Science Publishing. [http://f1000.com/resources/F1000R_Guide_OpenScience.pdf] | |||

** What is open data and open science and what are the benefits of them and open peer review. Also tells about F1000Research Publishing. Doesn't talk about how to use that open data for decision making. | |||

* '''Gartner's 2013 Hype Cycle for Emerging Technologies Maps Out Evolving Relationship Between Humans and Machines, 2013. [http://www.gartner.com/newsroom/id/2575515] | |||

** About the relationship between humans and machines, and where it's going. Doesn't involve anything to do with decision making or modelling. | |||

* '''Hagendijk R, and Irwin A, 2006. Public deliberation and governance: Engaging with science and technology in contemporary Europe. Minerva 44:167–184 | |||

** Draws upon case studies developed during the STAGE (‘Science, Technology and Governance in Europe’) project, sponsored by the European Commission. The case studies focused on three main areas: information and communication technologies, biotechnology, and the environment. Drawing on these studies, this analysis suggests a number of conclusions about the current ways in which the governance of science is undertaken in Europe, and the character and outcome of recent initiatives in deliberative governance.<ref>[http://www.ecsite.eu/activities-and-services/resources/public-deliberation-and-governance-engaging-science-and-technology]</ref> | |||

* '''Howe J, 2008, 2009. Crowdsourcing: Why the Power of the Crowd Is Driving the Future of Business. Crown Business | |||

** The economic, cultural, business, and political implications of crowdsourcing. A look at how the idea of crowdsourcing began and how it will affect the future. From the back-cover text it seems that the book mostly concentrates on how to do science and business with the help of crowdsourcing, but it might also discuss ways to use crowdsourcing in politics. | |||

* '''Jasanoff S, 2003. Technologies of humility: citizen participation in governing science. Minerva 41:223–244 [https://wiki.umn.edu/pub/UMM_IS1091_sp13/TechHumility/jasanoff2003.pdf] | |||

** Governments should reconsider existing relations among decision-makers, experts, and citizens in the management of technology. Discusses different views on how science should be done in the future. The pressure for the accountability of science is seen in many ways, one of the most important being the demand for greater transparency and participation in scientific assessments. Participatory decision-making is also becoming increasingly popular. Involving the crowd in scientific assessments that are then used in decision-making makes it possible to gather knowledge about the issue at hand that could not be found anywhere else, and raises the masses to be active participants instead of passive ones who, for example, just happen to be exposed in a model. | |||

* '''Nowotny H, 2003. Democratising expertise and socially robust knowledge. Science and Public Policy 30:151–156 | |||

** This paper presents arguments for the inherent ‘transgressiveness’ of expertise. Moving from reliable knowledge towards socially robust knowledge may be one step forward in negotiating and bringing about a regime of pluralistic expertise. I can only find an abstract, so it's hard to say much more. | |||

* '''Open Data Institute and Thomson Reuters, 2014. Creating Value with Identifiers in an Open Data World. [http://site.thomsonreuters.com/site/data-identifiers/] | |||

** Identifiers are simply labels used to refer to an object being discussed or exchanged, such as products, companies or people. Identifiers are fundamentally important in being able to form connections between data, which puts them at the heart of how we create value from structured data to make it meaningful. Doesn't talk about using information in assessments or decision-making, only about how to organise the data so that it can be easily used. | |||

* '''Sarewitz D, 2006. Liberating science from politics. American Scientist 94:104–106 | |||

** Scientific inquiry is inherently unsuitable for helping to resolve political disputes. Even when a disagreement seems to be amenable to technical analysis, the nature of science itself usually acts to inflame rather than quench debate. This is because there is almost always science to support completely opposite points of views, so decisions eventually boil down to political values anyway. This is why the values and goals should be decided first, and then science will only be used as a guide, not as a way to choose between paths. | |||

* '''Stilgoe, J, Lock SJ, and Wilsdon J, 2014. Why should we promote public engagement with science? Public Understanding of Science, 23 :4-15. | |||

** Looks back on the last couple of decades on how of continuity and change around the practice and politics of public engagement with science has changed. Public engagement would seem to be a necessary but insufficient part of opening up science and its governance. Again only the abstract is available online, so not much about the content can be said | |||

* '''Surowiecki J, 2004. The Wisdom of crowds. United States: Doubleday. | |||

** A book about how under the right circumstances, groups are incredibly intelligent, often more so that the most intelligent person within them. The book concentrates on three kinds of problems: cognition problems (problems that have definitive answers or answers that are clearly better than other answers), coordination problems (how to coordinate one's own behaviour in a group, knowing every one else is doing the same) and cooperation problems (challenge of getting self-interested, distrustful people to work together, even when self-interest would suggest no one wants to take part). This wisdom of groups should be used in decision-making, because groups often make on average better decisions than individuals. | |||

==Keywords==

Opasnet, open assessment, collaborative work, mass collaboration, web-workspace, variable, assessment, information object, statement, validity, discussion, group

==References==

{{mfiles}}

[[Category:Open assessment]]

[[Category:Opasnet]]

Latest revision as of 09:03, 15 September 2015

This page is an encyclopedia article.

The page identifier is Op_en2875.

Moderator: Jouni

For a description of the web-workspace related to open assessment, see Opasnet.

<section begin=glossary />

- Open assessment is a method that attempts to answer the following research question and to apply the answer in practical assessments: how can scientific information and value judgements be organised for improving societal decision-making in a situation where open participation is allowed?

- In practice, the assessment processes are performed using Internet tools (notably Opasnet) along with more traditional tools. Stakeholders and other interested people are able to participate, comment, and edit the content as it develops, from an early phase of the process. Open assessments explicitly include value judgements, thereby extending their application beyond the traditional realm of risk assessment into the risk management arena. Open assessment is based, however, on a clear information structure and scientific methodology in order to provide clear rules for dealing with disputes. Value judgements thus go through the same open criticism as scientific claims; the main difference is that scientific claims are based on observations, while value judgements are based on opinions of individuals.

Like other terms in the field of assessment, 'open assessment' is subject to some confusion. It is therefore useful to distinguish clearly between:

- the open assessment methodology;

- the open assessment process - i.e. the actual mechanism of carrying out an open assessment, and

- the open assessment product or report - i.e. the end product of the process.

To ensure clarity, open assessment also attempts to apply terms in a very strict way. In the summary below, therefore, links are given to further information on, and definitions of, many of the terms and concepts used. <section end=glossary />

Open assessment as a methodology

Open assessment is built on several different methods and principles that together make a coherent system for collecting, organising, synthesising, and using information. These methods and principles are briefly summarised here. A more detailed rationale about why exactly these methods are used and needed can be found in the Open assessment method. In addition, each method or principle has a page of its own in Opasnet.

Purpose

The basic idea of open assessment is to collect information that is needed in a decision-making process. The information is organised as an assessment that predicts the impacts of different decision options on some outcomes of interest. Information is organised to the level of detail that is necessary to achieve the objective of informing decision-makers. An assessment is typically a quantitative model about relevant issues causally affected by the decision and affecting the outcomes. Decisions, outcomes, and other issues are modelled as separate parts of an assessment, called variables. In practice, assessments and variables are web pages in Opasnet, a web-workspace dedicated to making these assessments. Such a web page contains all information (text, numerical values, and software code) needed to describe and actually run that part of an assessment model.

Basic concepts

These web pages are also called information objects, because they are the standard way of handling information as chunk-sized pieces in open assessments. Each object (or page) contains information about a particular issue. Each page also has the same, universal structure: a research question (what is the issue?), rationale (what do we know about the issue?), and result (what is our current best answer to the research question?). The descriptions of these issues are built on a web page, and anyone can participate in reading or writing just as in Wikipedia. Notably, the outcome is owned by everyone and therefore the original authors or assessors do not possess any copyrights or rights to prevent further editing.

The structure of information objects can be likened to a fractal: an object with a research question may contain sub-questions that could be treated as separate objects themselves, and a discussion about a topic could be divided into several smaller discussions about sub-topics. For example, there may be a variable called Population of Europe with the result indexed by country. Instead, this information could have been divided into several smaller population variables, one for each country, for example in the form of a variable called Population of Finland. How information is divided or aggregated into variables is a matter of taste and practicability, and there are no objective rules. Instead, the rules only state that if there are two overlapping variables, the information in them must be coherent. In theory, there is no limit to how detailed the scope of an information object can be.
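The coherence rule for overlapping variables can be illustrated with a minimal Python sketch. The population figures, the function name `is_coherent`, and the 1 % tolerance are assumptions made for illustration only; they are not part of Opasnet or of the open assessment rules.

```python
import math

# Hypothetical results of two overlapping variables (illustrative numbers only).
population_of_europe = {"Finland": 5.5e6, "Sweden": 9.8e6}  # result indexed by country
population_of_finland = 5.5e6                               # separate, smaller variable


def is_coherent(indexed_result, key, separate_result, rel_tol=0.01):
    """Return True if one slice of an indexed variable agrees with a separate variable.

    The relative tolerance is an assumption of this sketch; the source only
    requires that overlapping information be coherent.
    """
    return math.isclose(indexed_result[key], separate_result, rel_tol=rel_tol)


print(is_coherent(population_of_europe, "Finland", population_of_finland))
```

A check of this kind would flag the two pages for discussion if their overlapping parts drifted apart; it does not say which of them is right.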

Trialogue is the term used to define Wikipedia-like contributions. The trialogue concept emphasises that, in addition to having a dialogue or discussion, a major part of the communication and learning between the individuals in a group happens via information objects, in this case Opasnet pages. In other words, people not only talk or read about a topic but actually contribute to an information object that represents the shared understanding of the group. Wikipedia is a famous example of the trialogical approach, although Wikipedians do not use this word.

Groups are crucial in open assessment because all research questions are (implicitly) transformed into questions with the format: "What can we as a group know about issue X?" The group considering a particular issue may be explicitly described, but it may also be implicit. In the latter case, it typically means anyone who wants to participate, or alternatively, the whole of humanity.

The use purpose of information is crucial because it is the fuel of assessments. Nothing is done just for fun (although that is a valid motivation as well) but because the information is needed for some practical, explicit use. Of course, other assessments are also done to inform decisions, but open assessments are continuously being evaluated against the use purpose; this is done to guide the assessment work, and the assessment is finished as soon as the use purpose is fulfilled.

Open assessment attempts to be a coherent methodology. Everything in the open assessment methodology, as well as in every open assessment process, is accepted or rejected based on observations and reasoning. However, there are several underlying principles that cannot be verified using observations, called axioms of open assessment. The six axioms, which are essentially Cartesian in origin, are:

- The reality exists;

- The reality is a continuum without, for example, sudden appearances or disappearances of things without reason;

- I can reason;

- I can observe and use my observations and reasoning to learn about the reality;

- Individuals (like me) can communicate and share information about the reality;

- Not everyone is a systematic liar.

Important methods

| Method | Description | Implementation | References |

|---|---|---|---|

| Knowledge support | |||

| Open assessment | Overall method for knowledge support in a situation where open participation is allowed. Specifically, it deals with the issue of systematically combining scientific information and value judgements for improving societal decision making. | Opasnet (open web workspace) http://en.opasnet.org. For each topic, an assessment page is opened in Opasnet. Detailed content is located on several web pages, each of which deals with one topic and can be used by several assessments. | Tuomisto and Pohjola 2007, Pohjola et al 2011: Pragmatic knowledge services [4] |

| Openness (participation, contribution, availability of information) | All information is made available to everyone at all times of the work. Also, comments and contributions are accepted at all times and incorporated in the relevant information products. | Opasnet enables impact assessments or discussions to be started immediately when a need emerges. A web page can be used as a home for the work, and the link is used to invite readers and contributors. | |

| Discussion and argumentation | Discussions are organised into formal argumentations, where a statement is presented, and it is defended or attacked by arguments. Other arguments can also be attacked and defended in a hierarchical way. Discussion rules are based on pragma-dialectic argumentation theory. | In practice, a formal argumentation is usually created afterwards based on written, online, or live discussions and materials. Opasnet pages can be used to create and show hierarchical argument structures. | van Eemeren and Grootendorst 2005## [5] |

| Falsification | Falsification is based on the idea that anyone can suggest a hypothesis as an answer to a scientific question. The hypothesis is assumed plausible until it is convincingly falsified based on irrelevance, illogicality, or inconsistency with observations. The approach is in contrast with a common scientific practice where a hypothesis is ignored until it is validated (to the satisfaction of the establishment). | Anyone is allowed to edit a page in Opasnet. A contribution, unless falsified, may also affect the statement (of a discussion), the answer (to the question on a page), or the conclusion (of an assessment). Each page has a moderator who checks for relevance, prevents vandalism and requests further comments from experts in a controversial situation. | Popper 1939## |

| Information structure | Information is collected, synthesised and made available based on topic (instead of owner, time, or organisation as usually). Information is organised in a standard form (question, answer, rationale) that is not dependent on the topic or level of maturity of content. Topics can be split into sub-topics and handled separately before making a synthesis, hence the structure facilitates contributions without being easily disrupted. | Opasnet pages have the same general structure: question (a research question about the topic), answer (the current best answers to the question, including uncertainties), and rationale (anything that is needed to convince a reader about the answer). There are also some detailed structure guidelines to support the general structure such as references or categories. | Tuomisto and Pohjola 2007, [6] |

| Shared understanding | A situation where all participants are able to describe what views there are about the topic at hand among the participants, where the agreements and disagreements are, and why people disagree. | In practice, shared understanding is a written description about agreements and disagreements. Shared understanding is properly described when all participants think that their own point of view is adequately described. Assessments in Opasnet aim at shared understanding. | Tuijhuis et al 2012 [7] |

| Evaluation | |||

| Properties of good assessment | List of properties that should be evaluated about the quality of content, applicability, and efficiency of an assessment or assessment work. The properties evaluate the potential of an assessment to fulfil its purpose of informing and improving decision making and thus actually improve the outcome. | The properties are used to evaluate and manage the assessment work during the whole process. The question is constantly asked: Does what we are planning to do actually improve the properties of our assessment in the best possible way? | Tuomisto and Pohjola 2007, [8] |

| Dimensions of openness | Five different perspectives to openness in external participation of assessment processes: scope of participation, access to information, timing of openness, scope of contribution, and impact of contribution. | Perspectives are used to evaluate assessment work (past, ongoing, or planned) to identify problems with openness and participation and tackle them. | Pohjola and Tuomisto: Environmental Health 2011, [9] |

| Purposes, goals and evaluation criteria | The purpose and objectives of the upcoming decision, and criteria to evaluate success, are made explicit in the very beginning of the process. The purpose of this is to make it possible to a) evaluate and manage the planned work, b) comment on the work, and c) comment on the objectives. | ||

| Relational evaluation approach | [10] | ||

| Management | |||

| Constant evaluation and adjustment | Based on the evaluation criteria described above, the decision support, decision making, and implementation are constantly being evaluated and adjusted. | ||

| Facilitation and moderation | The key players (experts, decision makers, interest groups) are typically experienced neither with the practices of open assessment nor with the tools and web workspaces used. Therefore, there is a continuous need for moderation that converts information content from the format in which it was originally produced into a format that follows the required information structures. In addition, there is a need to facilitate meetings, discussions, committees, and evaluations to obtain the knowledge from people and facilitate learning. | ||

| Guiding decisions/topics? | |||

| Interaction, effectiveness? | |||

Basic procedures

Inference rules are used to decide what to believe. The rules are summarised as follows:

- Anyone can promote a statement about anything (promote = claim that the statement is true).

- A promoted statement is considered valid unless it is invalidated (i.e., convincingly shown not to be true).

- The validity of a statement is always conditional to a particular group (which is or is not convinced).

- A statement always has a field in which it can be applied. By default, a scientific statement applies in the whole universe and a moral statement applies within a group that considers it valid.

- Two moral statements by a single group may be conflicting only if their fields of application do not overlap.

- There may be uncertainty about whether a statement is true (or whether it should be true, in the case of a moral statement). This can be quantitatively measured with subjective probabilities.

- There can be other rules than these inference rules for deciding what a group should believe. Rules are also statements, and they are validated or invalidated just like any statement.

- If two people within a group promote conflicting statements, the a priori belief is that each statement is equally likely to be true.

- A priori beliefs are updated into a posteriori beliefs based on observations (in the case of scientific statements) or opinions (in the case of moral statements) and open criticism that is based on shared rules. In practice, this means the use of the scientific method. Opinions of each person are given equal weight.
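The last two rules can be read as equal prior probabilities that are then updated with evidence. The following Python sketch shows one possible reading; using Bayes' rule for the update, the function names, and the likelihood values are all assumptions of this example and are not prescribed by the inference rules themselves.

```python
def equal_priors(statements):
    """Give every conflicting statement the same a priori probability."""
    n = len(statements)
    return {s: 1.0 / n for s in statements}


def update(priors, likelihoods):
    """Update priors into posteriors with Bayes' rule.

    likelihoods[s] is the (hypothetical) probability of the observations
    if statement s were true.
    """
    unnormalised = {s: priors[s] * likelihoods[s] for s in priors}
    total = sum(unnormalised.values())
    return {s: value / total for s, value in unnormalised.items()}


# Two people promote conflicting statements A and B.
priors = equal_priors(["A", "B"])
# Hypothetical observations that fit A four times better than B.
posterior = update(priors, {"A": 0.8, "B": 0.2})
print(posterior)
```

With equal priors the posterior is proportional to the likelihoods alone, which is why the starting point does not favour whoever made the claim.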

The tiers of the open assessment process describe the typical phases of work when an open assessment is performed. Three tiers are recognised:

- Tier I: Definition of the use purpose and scope of an assessment.

- Tier II: Definition of the decision criteria.

- Tier III: Information production.

It is noteworthy that the three tiers closely resemble the first three phases of integrated environmental health impact assessment (IEHIA), but the fourth phase (appraisal) is not a separate tier in open assessment. Instead, appraisal and information use happen at all tiers as a continuous and iterative process. In addition, the tiers also have some similarities to the approach developed by the BRAFO project.

It is clear that within a self-organised group, not all people agree on all scientific or moral statements. The good news is that agreement is neither expected nor hoped for. There are strong but simple rules to resolve disputes, namely rules of structured discussions. In straightforward cases, discussions can be informal, but in more complicated or heated situations, the following discussion rules are followed:

- Each discussion has one or more statements as a starting point. The validity of the statements is the topic of the discussion.

- A statement is valid unless it is attacked with a valid argument.

- Statements can be defended or attacked with arguments, which are themselves treated as statements of smaller discussions. Thus, a hierarchical structure of defending and attacking arguments is created.

- When the discussion is resolved, the content of all valid statements is incorporated into the information object. All resolutions are temporary, and anyone can reopen a discussion. Actually, a resolution means nothing more than a situation where the currently valid statements are included in the content of the relevant information object.
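The rule that a statement is valid unless it is attacked with a valid argument can be sketched as a recursive check over the argument hierarchy. This is a minimal illustration in Python, not Opasnet's implementation. The class and function names are hypothetical, and the sketch assumes that a defending argument is represented as an attack on the argument it counters.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Statement:
    """A statement or argument; arguments are treated as statements of smaller discussions."""
    text: str
    attacks: List["Statement"] = field(default_factory=list)  # arguments attacking this statement


def is_valid(statement: Statement) -> bool:
    """A statement is valid unless at least one of its attacking arguments is itself valid."""
    return not any(is_valid(argument) for argument in statement.attacks)


# Hypothetical discussion: the claim is attacked, and the attack is countered in turn.
counter = Statement("The cited measurement was taken with a faulty instrument.")
attack = Statement("A measurement contradicts the claim.", attacks=[counter])
claim = Statement("Exposure is below the guideline value.", attacks=[attack])

print(is_valid(claim))
```

Because the check is recomputed from the current arguments, adding a new attack later simply changes the outcome, which matches the idea that every resolution is temporary.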

Technical functionalities supporting open assessment

Opasnet is the web-workspace for making open assessments. The user interface is a wiki and it is in many respects similar to Wikipedia, although it also has enhanced functionalities for making assessments. One of the key ideas is that all work needed in an assessment can be performed using this single interface. Everything required to undertake and participate in an assessment is therefore provided, whether it be information collection, numerical modelling, discussions, statistical analyses on original data, publishing original research results, peer review, organising and distributing tasks within a group, or dissemination of results to decision-makers.

In practice, Opasnet is an overall name for many functionalities other than the wiki, but because the wiki is the interface for users, Opasnet is often used as a synonym for the Opasnet wiki. Other major functionalities exist as well, as outlined below. The main article about this topic is Opasnet structure.

Most variables have numerical values as their results. Often these are uncertain and they are expressed as probability distributions. A web page is an impractical place to store and handle this kind of information. For this purpose, a database called Opasnet Base is used. This provides a very flexible storage platform, and almost any results that can be expressed as two-dimensional tables can be stored in Opasnet Base. Results of a variable can be retrieved from the respective Opasnet page. Opasnet can be used to upload new results into the database. Further, if one variable (B) is causally dependent on variable (A), the result of A can be automatically retrieved from Opasnet Base and used in a formula for calculating B.

Because Opasnet Base contains samples of distributions of variables, it is actually a very large Bayesian belief network, which can be used for assessment-level analyses and conditioning and optimising different decision options. In addition to finding optimal decision options, Opasnet Base can be used to assess the value of further information for a particular decision. This statistical method is called Value of information.
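As an illustration of the value-of-information idea, the sketch below computes the expected value of perfect information from samples: the expected utility if the best option could be chosen separately for each sample, minus the expected utility of the single best option chosen under uncertainty. The decision names and utility samples are invented for this example, and the function is a generic Python sketch rather than the method as implemented in Opasnet.

```python
def expected_value_of_perfect_information(utilities):
    """Compute EVPI from utility samples.

    utilities maps each decision option to a list of utility samples; the
    lists are aligned so that index i refers to the same sampled state of
    the world for every option.
    """
    options = list(utilities)
    n = len(utilities[options[0]])
    # Best option when deciding now, under uncertainty.
    best_expected = max(sum(utilities[option]) / n for option in options)
    # Average outcome if the best option could be picked for each sample.
    expected_best = sum(max(utilities[option][i] for option in options) for i in range(n)) / n
    return expected_best - best_expected


# Hypothetical utility samples for two decision options.
samples = {
    "act": [10.0, -5.0, 10.0, -5.0],
    "wait": [0.0, 0.0, 0.0, 0.0],
}
evpi = expected_value_of_perfect_information(samples)
print(evpi)
```

A result of zero would mean that no further information could change the decision; a positive result is an upper bound on what further information is worth for this decision.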

Opasnet contains modelling functionalities for numerical models. It is an object-oriented functionality based on the R statistical software and the results in Opasnet Base. Each information object (typically a variable) contains a formula which has detailed instructions about how its result should be computed, often based on results of upstream variables in a model.
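The idea that each variable carries a formula computed from the results of upstream variables can be sketched as follows. The text states that Opasnet's modelling is based on R; this Python sketch is only an illustration of the dependency structure, and the class name, variable names, and numbers are hypothetical.

```python
class Variable:
    """An information object whose result is computed by a formula from upstream variables."""

    def __init__(self, name, formula, upstream=()):
        self.name = name
        self.formula = formula          # callable taking the upstream results as arguments
        self.upstream = tuple(upstream)

    def result(self):
        # Compute upstream results first, then apply this variable's own formula.
        inputs = [variable.result() for variable in self.upstream]
        return self.formula(*inputs)


# Hypothetical two-variable model: B is causally dependent on A.
exposure = Variable("A: exposure", lambda: 2.0)
response = Variable("B: response", lambda a: 0.5 * a, upstream=[exposure])

print(response.result())
```

Calling `response.result()` pulls in the result of `exposure` automatically, which mirrors how the result of A is retrieved and used in the formula for calculating B.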

Meta level functionalities

In addition to work and discussions about the actual topics related to real-world decision-making, there is also a meta level in Opasnet. Meta level means that there are discussions and work about the contents of Opasnet. The most obvious expression of this is the rating bars in the top right corner of many Opasnet pages. Peer rating means that users are requested to evaluate the scientific quality and usefulness of a page on a scale from 0 to 100. This information can then be used by the assessors to evaluate which parts of an assessment require more work, or by readers who want to know whether the presented estimates are reliable for their own purpose.

The users are also allowed to make peer reviews of pages. These are similar to peer reviews in scientific journals, with written evaluations of the scientific quality of content. Another form of written evaluation is the acknowledgement, which describes who has contributed what to the page, and what fraction of the merit should be given to which contributor.

Estimates of scientific quality, peer reviews and acknowledgements can be used systematically to calculate how much each contributor has done in Opasnet, though these practices are not yet well developed: contribution scores are so far the only systematic method for estimating contributions quantitatively, even roughly.

Respect theory is a method for estimating the value of freely usable information objects to a group. This method is under development, and hopefully it will provide practical guidance for distributing merit among contributors in Opasnet.

Why does open assessment work?

Many people are (initially at least) sceptical about the effectiveness of open assessment. In part, this is because the approach is new and has not yet been widely applied and validated. Most examples of its use are for demonstration purposes. A number of reasons can nevertheless be advanced in support of its use:

- In all assessments, there is a lack of resources, and this limits the quality of the outcome. With important (and controversial) topics, opening up an assessment to anyone will bring new resources to the assessment in the form of interested volunteers.

- The rules of open assessment make it feasible to organise the increased amount of new data (which may at some points be of low quality) into high-quality syntheses within the limits of new resources.

- Participants are relaxed with the idea of freely sharing important information - a prerequisite of an effective open assessment - because open assessments are motivated by the shared hope for societal improvements and not by monetary profit. This is unlike in many other areas where information monopolies and copyrights are promoted as means to gain competitive advantage in a market, but as a side effect result in information barriers.

- Problems due to too narrow initial scoping of the issue are reduced by having more eyes look at the topic throughout the assessment process.

- It becomes easy to apply the basic principles of the scientific method systematically, namely rationale, observations and, especially, open criticism.

- Any information organised for any previous assessment is readily available for a new assessment on an analogous topic. The work time for data collection and the calendar time from data collection to utilisation are also reduced, thus increasing efficiency.

- All information is organised in a standard format which makes it possible to develop powerful standardised methods for data mining and manipulation and consistency checks.

- It is technically easy to prevent malevolent attacks against the content of an assessment (on a topic page in Opasnet wiki) without restricting the discussion about, or improvement of, the content (on a related discussion page); the resolutions from the discussions are simply updated to the actual content on the topic page by a trusted moderator.

These points support the contention that open assessment (or approaches adopting similar principles) will take over a major part of information production motivated by societal needs and improvement of societal decision-making. The strength of this argument is already being shown by social interaction initiatives, such as Wikipedia and Facebook. However, an economic rationale also exists: open assessment is cheaper to perform and easier to utilise, and can produce higher quality outputs than current alternative methods to produce societally important information.

See also

- Open Assessors' Network

- Opasnet structure

- Assessment

- Variable

- Opasnet Base

- Using Opasnet in an assessment project

- Benefit-risk assessment of food supplements (more information about tiers)

- Task list for Opasnet

- Discussion structure, Discussion method, Discussion

- A previous version containing topics Basics of Open assessment and Why is open assessment a revolutionary method?.

- Falsification

EFSA literature

- What is the main message of the piece?

- What does the piece offer for the use of knowledge in decision-making?

- What does the piece say specifically about impact assessment?

- Brabham D.C. (2013). Crowdsourcing. Cambridge, Massachusetts: The MIT Press.

- Explains what crowdsourcing is, what it is not, and how it works. Also describes related concepts, such as collective intelligence and the wisdom of crowds, both of which are also used in open assessment. "Crowdsourcing is an online, distributed problem solving and production model that leverages the collective intelligence of online communities for specific purposes set forth by a crowdsourcing organization." These ideas are similar to those of open assessment, and knowing crowdsourcing could help raise participation in open assessments.

- European Commission, Open Infrastructures for Open Science. Horizon 2020 Consultation Report. [11]

- Paints a picture of the future of science: talks about how we can store and manage all the information science will produce in the future and how it will be accessible. Talks about how data will be made more open in the future. Not only the publications but also the raw data will be open for reuse. This not only helps other researchers but also gets the general public more involved in science and better informed, which leads to better decisions. Talks about Open Scientific e-Infrastructure. Also discusses modelling as a new way of doing science, as opposed to direct observation.

- European Commission, 2013. Digital Science in Horizon 2020. [12]

- Open science aims to make science more open, easily accessible, and transparent, and thus to make the practice of science more efficient. This is done by integrating ICT into scientific processes. Various aspects of digital science improve the trustworthiness of science and its availability for policy making, in the same way as Opasnet. Talks about the potential and challenges of this way of doing science.

- European Food Safety Authority (EFSA), 2014. Discussion Paper: Transformation to an Open EFSA. [13]

- This paper is designed to promote a discussion and to seek the input of EFSA’s partners and stakeholders as well as experts and practitioners in the field of open government and open science. EFSA is planning to become more transparent and open, also with research, so that anyone interested can follow the progress of a research project and see the raw data, not only results. This also gives the public in the EU the chance to participate.

- European Food Safety Authority (EFSA), 2015. Principles and process for dealing with data and evidence in scientific assessments. [14]

- Illustrates the principles and process for evidence use in EFSA. In EFSA the principles for evidence use are impartiality, excellence in scientific assessments (specifically related to the concept of methodological quality), transparency and openness, and responsiveness. Also talks about the process of dealing with data and evidence in scientific assessments.

- Wilsdon J, and Doubleday R (eds), 2015. Future Directions for scientific advice in Europe. [15]

- A book about scientific advice in Europe. Contains 16 essays by policymakers, practitioners, scientists and scholars from across Europe about how to use knowledge and scientific information as basis for decision making in Europe and thoughts about how the relationship between science and policies will develop in the future. To know exactly what Opasnet could get out of it, one would have to read the book.

- F1000 Research, 2015. Guide to Open Science Publishing. [16]

- What is open data and open science and what are the benefits of them and open peer review. Also tells about F1000Research Publishing. Doesn't talk about how to use that open data for decision making.

- Gartner's 2013 Hype Cycle for Emerging Technologies Maps Out Evolving Relationship Between Humans and Machines, 2013. [17]

- About the relationship between humans and machines, and where it's going. Doesn't involve anything to do with decision making or modelling.

- Hagendijk R, and Irwin A, 2006. Public deliberation and governance: Engaging with science and technology in contemporary Europe. Minerva 44:167–184

- Draws upon case studies developed during the STAGE (‘Science, Technology and Governance in Europe’) project, sponsored by the European Commission. The case studies focused on three main areas: information and communication technologies, biotechnology, and the environment. Drawing on these studies, this analysis suggests a number of conclusions about the current ways in which the governance of science is undertaken in Europe, and the character and outcome of recent initiatives in deliberative governance.[1]

- Howe J, 2008, 2009. Crowdsourcing: Why the Power of the Crowd Is Driving the Future of Business. Crown Business

- The economic, cultural, business, and political implications of crowdsourcing. A look at how the idea of crowdsourcing began and how it will affect the future. From the back-cover text it seems that the book mostly concentrates on how to do science and business with the help of crowdsourcing, but it might also talk about ways to use crowdsourcing in politics.

- Jasanoff S, 2003. Technologies of humility: citizen participation in governing science. Minerva 41:223–244 [18]

- Governments should reconsider existing relations among decision-makers, experts, and citizens in the management of technology. Discusses different views of how science should be done in the future. The pressure for accountability in science shows up in many ways, one of the most important being the demand for greater transparency and participation in scientific assessments. Participatory decision-making is also becoming increasingly popular. Involving the crowd in scientific assessments that are then used in decision-making makes it possible to gather knowledge about the issue at hand that could not be found anywhere else, and raises the public to an active participant instead of a passive one who merely happens to, for example, be exposed in a model.

- Nowotny H, 2003. Democratising expertise and socially robust knowledge. Science and Public Policy 30:151–156

- This paper presents arguments for the inherent ‘transgressiveness’ of expertise. Moving from reliable knowledge towards socially robust knowledge may be one step forward in negotiating and bringing about a regime of pluralistic expertise. Only the abstract could be found, so it is hard to say much more.

- Open Data Institute and Thomson Reuters, 2014. Creating Value with Identifiers in an Open Data World. [19]

- Identifiers are simply labels used to refer to an object being discussed or exchanged, such as products, companies or people. Identifiers are fundamentally important in being able to form connections between data, which puts them at the heart of how we create value from structured data to make it meaningful. Doesn't talk about using information in assessments or decision-making, only about how to organise the data so that it can be easily used.

- Sarewitz D, 2006. Liberating science from politics. American Scientist 94:104–106

- Scientific inquiry is inherently unsuitable for helping to resolve political disputes. Even when a disagreement seems to be amenable to technical analysis, the nature of science itself usually acts to inflame rather than quench debate. This is because there is almost always science to support completely opposite points of view, so decisions eventually boil down to political values anyway. This is why the values and goals should be decided first, with science then used only as a guide, not as a way to choose between paths.

- Stilgoe J, Lock SJ, and Wilsdon J, 2014. Why should we promote public engagement with science? Public Understanding of Science 23:4–15

- Looks back on the last couple of decades of continuity and change around the practice and politics of public engagement with science. Public engagement would seem to be a necessary but insufficient part of opening up science and its governance. Again only the abstract is available online, so not much can be said about the content.

- Surowiecki J, 2004. The Wisdom of Crowds. United States: Doubleday.

- A book about how, under the right circumstances, groups are incredibly intelligent, often more so than the most intelligent person within them. The book concentrates on three kinds of problems: cognition problems (problems that have definitive answers, or answers that are clearly better than other answers), coordination problems (how to coordinate one's own behaviour in a group, knowing everyone else is doing the same) and cooperation problems (the challenge of getting self-interested, distrustful people to work together, even when self-interest would suggest no one wants to take part). This wisdom of groups should be used in decision-making, because groups often make better decisions on average than individuals.

Keywords

Opasnet, open assessment, collaborative work, mass collaboration, web-workspace, variable, assessment, information object, statement, validity, discussion, group

References
