Wikidata

Moderator: Jouni

Wikidata is an open database that sits alongside Wikipedia and contains all kinds of information that can be used automatically on Wikipedia pages. It can also be queried from outside using the SPARQL query language. It is based on a semantic structure of statement triples: item, property, value. Each statement should be backed up with credible sources.
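As a rough illustration of querying Wikidata from outside, the sketch below builds a SPARQL query for the public query service at https://query.wikidata.org/sparql and asks for items that carry a given (property, value) statement. The function names, the User-Agent string, and the choice of example statement are assumptions made for this illustration only; they are not part of Opasnet.

```python
import json
import urllib.parse
import urllib.request

# Public Wikidata SPARQL endpoint.
WDQS_ENDPOINT = "https://query.wikidata.org/sparql"


def build_query(property_id, value_id, limit=5):
    """Return a SPARQL query listing items that have the statement
    (item, property_id, value_id), together with their English labels."""
    return (
        "SELECT ?item ?itemLabel WHERE {\n"
        f"  ?item wdt:{property_id} wd:{value_id} .\n"
        '  SERVICE wikibase:label { bd:serviceParam wikibase:language "en". }\n'
        "}\n"
        f"LIMIT {limit}"
    )


def build_url(query):
    """Encode the query as a GET request URL that asks for JSON results."""
    params = urllib.parse.urlencode({"query": query, "format": "json"})
    return WDQS_ENDPOINT + "?" + params


def run_query(query):
    """Send the query to the endpoint and return the list of result rows.
    Requires network access; the User-Agent value is a made-up example."""
    request = urllib.request.Request(
        build_url(query),
        headers={"User-Agent": "opasnet-wikidata-example/0.1 (illustrative)"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["results"]["bindings"]
```

For example, `run_query(build_query("P31", "Q12136"))` would ask for a few items whose "instance of" (P31) value is "disease" (Q12136); each returned row holds the item URI under `row["item"]["value"]` and its label under `row["itemLabel"]["value"]`.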

Question

How can Opasnet, Wikidata, and Wikipedia be combined in a systematic and useful way so that

- high-quality information is updated from Opasnet to Wikidata,

- Wikipedia pages are updated to reflect this data,

- environmental health knowledge reaches as many readers as possible?

Answer

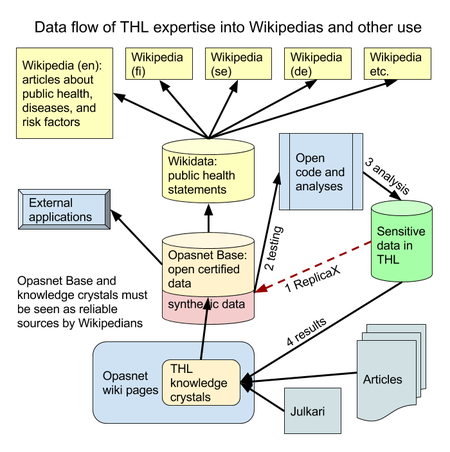

Open data flow from THL

This describes an idea for how THL could open up its large pool of sensitive data. Opasnet and Wikidata have central roles in it, because a) there needs to be an open place where the data is shared and worked on, and b) from Wikidata, the information can spread to Wikipedias in any language, and Wikipedias are among the most read websites in many languages.

Traditional scientific knowledge production is shown in the bottom right corner of the figure. In addition, THL has large sensitive datasets for administrative and research work. From there, synthetic data could be opened up (1) using ReplicaX and other anonymising tools. The synthetic data is located in Opasnet Base and described on the Opasnet wiki; each dataset can have its own page. Then, anyone can develop and test statistical analysis code for the data (2). Synthetic data can be accessed via Opasnet but also directly from the user's own computer using either the R interface (easier) or the JSON interface (trickier). When the code is ready, THL takes it and runs it with the real data without releasing the data outside the institute (3). Finally, the analysis results are published as knowledge crystals in Opasnet, and the actual analysis result is stored in Opasnet Base as open data.

There is a need for a research policy discussion: how should the results be published?

- Is the basic rule that all code and results are published in Opasnet immediately when the code is run (after checking that the results do not reveal sensitive data)?

- Or is the code developer given a head start of e.g. six months, after which the results are published?

- Or is this a service that is free of charge if the results are published immediately, but the author must pay to keep them hidden? The price could go up exponentially as a function of time to make sure that the results are published eventually.
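Steps (2) and (3) of the data flow can be sketched in a few lines: an outside developer writes an analysis function against synthetic records, and the institute later runs the same function on the real records and releases the result only if it passes a disclosure check. Everything here is an assumption made for illustration: the record layout, the function names, and the minimum cell count of 5 are hypothetical and do not describe THL's actual procedures.

```python
from collections import Counter

# Hypothetical disclosure threshold: groups smaller than this are not released.
MIN_CELL_COUNT = 5


def count_by_group(records, key="region"):
    """Step 2: analysis code an outside developer writes and tests
    on synthetic data. Counts records per value of `key`."""
    return dict(Counter(record[key] for record in records))


def passes_disclosure_check(result):
    """Return True if every group count is at least MIN_CELL_COUNT."""
    return all(count >= MIN_CELL_COUNT for count in result.values())


def run_inside_institute(analysis, real_records):
    """Step 3: the institute runs the submitted code on real data.
    Only the aggregated result may leave; None means it was withheld."""
    result = analysis(real_records)
    if not passes_disclosure_check(result):
        return None
    return result
```

The point of the design is that the same `analysis` callable is used in both places, so code that works on the synthetic dataset can be run unchanged on the real one, and only the checked aggregate is published.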

My first guess is that the right policy has to be found by experimenting with which options attract code developers. How strongly to nudge toward openness is also a value decision for THL.
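To make the pay-to-delay option concrete, here is a minimal sketch of a price that grows exponentially with the time results are kept hidden. The base price and the monthly growth factor are made-up parameters chosen only to show the shape of the curve; the page does not propose any actual figures.

```python
def embargo_price(months_hidden, base_price=100.0, monthly_growth=1.5):
    """Price for keeping results hidden for `months_hidden` months.

    Immediate publication (0 months) is free; otherwise the price is
    base_price * monthly_growth ** months_hidden. Both parameters are
    hypothetical illustration values.
    """
    if months_hidden <= 0:
        return 0.0
    return base_price * monthly_growth ** months_hidden
```

With any growth factor above 1, each extra month costs more than the previous one, which is what pushes authors toward eventual publication.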

Pilot for Opasnet - Opasnet Base - Wikidata - Wikipedia connection

- Main article: User page Jtuom in Wikidata

We start the pilot by adding some existing disease burden data from a credible source, the Institute for Health Metrics and Evaluation (IHME), into Wikidata and linking to those data from Wikipedia.

The first dataset to be uploaded to Wikidata is the burden of disease estimates: global estimates from IHME, which are then used on English Wikipedia to populate disease and risk factor pages. For details of this work, see Burden of disease.
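Before uploading, it can help to hold each estimate as an (item, property, value) statement together with its sources, and to check that nothing unsourced slips through. The sketch below is one possible way to do that; the class, the function, and all identifiers in the usage example are placeholders invented for illustration, not real Wikidata IDs or real estimates.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Statement:
    """One Wikidata-style statement: item, property, value, plus sources."""
    item: str
    property: str
    value: str
    sources: List[str] = field(default_factory=list)

    def is_sourced(self):
        """Return True if at least one source is attached."""
        return len(self.sources) > 0


def unsourced(statements):
    """Return the statements that still lack a source."""
    return [s for s in statements if not s.is_sourced()]
```

A call such as `unsourced([Statement("Q_DISEASE", "P_DISEASE_BURDEN", "<estimate>", ["<IHME reference>"]), Statement("Q_RISK_FACTOR", "P_DISEASE_BURDEN", "<estimate>")])` would return only the second statement, which should then be given a reference before upload.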

When adding data to Wikidata, there are some tricks you should know. They can also be found in the documentation, but I highlight some issues that may save time and trouble.

Rationale

This page is based on practical learning and testing of the Wikidata website.

Possible functionalities in Wikipedia and Wikidata:

- en:Wikipedia:Lua: a programming language used on wiki pages through the {{#invoke}} functionality. en:Module:Wikidata uses invoke.

- en:Template:Infobox medical condition: an example of an advanced template that can be used with Wikidata.

- Relevant Wikidata pages: Disease burden of air pollution, en:Air pollution, air pollution

- Technical pages related to the use of Wikidata: Inclusion syntax, en:Template:Wikidata value, en:Template:Infobox medical condition/testcases, en:Template:Infobox medical condition/sandbox

- en:Wikipedia:Wikidata

- en:Wikipedia:Requests for comment/Wikidata Phase 2

- Discussion on {{#statements}} parser function
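The two inclusion mechanisms named in the list above are written as wikitext calls. As a small sketch, the helpers below generate such calls as strings, which can be handy when preparing many Wikipedia edits from a script. The helper names are hypothetical, and which modules, functions, and properties are actually available depends on the wiki in question.

```python
def statements_call(property_id, item_id=""):
    """Build a {{#statements}} call for a property. Without item_id the
    call refers to the Wikidata item connected to the page it is placed on."""
    if item_id:
        return "{{#statements:" + property_id + "|from=" + item_id + "}}"
    return "{{#statements:" + property_id + "}}"


def invoke_call(module, function, *args):
    """Build a {{#invoke}} call that runs `function` from a Lua module,
    passing any extra arguments separated by pipes."""
    parts = [module, function] + [str(a) for a in args]
    return "{{#invoke:" + "|".join(parts) + "}}"
```

For instance, `statements_call("P31", "Q42")` gives `{{#statements:P31|from=Q42}}`, and `invoke_call("Example", "hello")` gives `{{#invoke:Example|hello}}`.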

See also

- op_fi:File:Tautitaakka avoimissa tietojärjestelmissä.pptx

- Burden of disease

- Wikidata query system

- IdeaLab: Strengthen links between primary research and Wikidata

- Related idea: Compare Wikidata content with other sources

- Wikidata property proposal: Natural science: incidence, disease burden, knowledge crystal result.