Shared information objects in policy support

This page is a nugget.

The page identifier is Op_en6997. Moderator: Jouni.

Unlike most other pages in Opasnet, the nuggets have predetermined authors, and you cannot freely edit the contents.

- This page is a manuscript to be submitted as a software tool article. The main focus is on the structure and use of variables and ovariables, but their use within OpasnetUtils and their implementation in the Urgenche building model are described as well, which makes the manuscript valid as a software tool article. See also Knowledge crystal. There is also a (password-protected) manuscript draft, heande:Effective knowledge-based policy - a pragmatic approach, which is an earlier version of the idea behind this manuscript.

Authors

Jouni T. Tuomisto1*, Mikko Pohjola1, Teemu Rintala1, Einari Happonen1

- 1 National Institute for Health and Welfare, Department of Environmental Health. P.O.Box 95, FI-70701 Kuopio, Finland.

- *corresponding author

- Jouni T. Tuomisto: jouni.tuomisto [] thl.fi

- Mikko Pohjola: mikko.pohjola [] thl.fi

- Teemu Rintala: teemu.rintala [] thl.fi

- Einari Happonen: einari.happonen [] soketti.fi

Title

Shared information objects in policy support

Abstract

- Up to 300 words long

There is an increasing demand for evidence-based decision-making and for more intimate discussions between experts and policy-makers. However, one of the many problems is that the information flow from research articles to policy briefings is very fragmented. We developed shared information objects with standard structures so that they can be used for both scientific and policy analyses and that all participants can discuss, develop, and use the same objects for different purposes.

We identified a need for three distinct objects. First, an assessment describes a practical, decision-specific information need with decision options and preferences about their impacts. Second, variables describe scientific estimates of relevant issues such as exposures, health impacts, or costs. Variables are reusable in several assessments, and they can be continuously developed and updated based on the newest data. Third, structured discussions collect and organise free-format contributions related to relevant topics (called statements) within the assessment.

We implemented assessments and variables as wiki pages to enable discussions about and public development of their contents. In addition, variables were quantitatively implemented as objects in the statistical software R, and assessments used them in object-oriented programming. All functions and objects are freely distributed as the R package OpasnetUtils. Several important properties for decision analysis were developed with variables, e.g. flexible indexing of variable results, propagation of uncertainties and scenarios through models, and the possibility to write and run models in a web-workspace using only a web browser.

The concept was tested in several projects with different decision situations related to environmental health and vaccination. We present here a city-level building model to assess health impacts of energy consumption and fine particle emissions. Technically, the data collection on the wiki pages and the model development using shared information objects succeeded as expected. The main challenge is to develop a user community that is willing to and capable of sharing information with other groups and using these tools to improve shared understanding among policy-makers, experts, and stakeholders.

Keywords

Decision making, science-policy interface, expertise, open policy practice, decision analysis, object-oriented programming, R software, shared understanding.

Main Body

Introduction

- The Introduction should provide context as to why the software tool was developed and what need it addresses. It is good scholarly practice to mention previously developed tools that address similar needs, and why the current tool is needed.

- Need for better discussion between experts and decision makers (SA program, VNK money)

Ogp evidence-based decision-making

- Do tank

- Policy demonstrations

- there is a need for more systematic information flow that is shared by both experts and politicians.

We wanted to develop a website for sharing and developing information between decision-makers, experts, and stakeholders. For this purpose, we realised that it is important to have shared information objects that would have a straightforward, standardised structure. The purpose of this is to facilitate communication and participation, and ensure efficient criticism and re-use of information. We identified three different types of information objects that would benefit from standardised structures. First, an assessment would contain all information needed in a decision support work related to a particular decision at a specified point in time. Second, an assessment would consist of smaller pieces of information called variables. These are small and specific enough so that the topic can be expressed in a single question and that the answer can be found using scientific methods, notably open criticism. Third, within variables there is a need for organising and synthesising related contributions and discussions into structured discussions that then enrich the answer of the variable.

To develop structures for assessments, variables, and structured discussions, we first clarified their use purpose and properties. These are briefly presented here as research questions.

What should be the structure of a variable such that it

- is generic enough to be a standard information object in decision support work,

- is able to systematically describe causal relationships and uncertainties as probabilities,

- is suitable for both scientific information and value judgements,

- can be re-used in several assessments,

- can be implemented both on a website and in a computational model system, and

- is easy enough to be usable and understood by interested non-experts?

What should be the structure of an assessment such that it

- is based on the structure and properties of variables,

- contains a useful description of the actions, impacts, value judgements, and scientific information relevant for the decision at hand,

- provides a description that serves the needs of decision making and is based on information provided by decision-makers, experts, and stakeholders?

How should discussions be organised in such a way that

- they can capture all kinds of written and spoken information,

- there are straightforward rules about how the information should be handled,

- the structure of the documented discussion clearly shows the topic and the outcome of the discussion and how it was resolved,

- the approach facilitates convergence to the truth by making it easy to eliminate false information?

Methods

- The Methods should include a subsection on Implementation describing how the tool works and any relevant technical details required for implementation; and a subsection on Operation, which should include the minimal system requirements needed to run the software and an overview of the workflow.

Shared info objects. Why & requirements

- Continuous flow of information synthesis. You only need the tip of the iceberg, but the rest is there if you check

- followup of the process

- moderated discussion forum

Implementation

Shared information objects are a possible solution to the problems of information flow between experts and decision-makers, if the objects are suitable for synthesising scientific information, and if they are at the same time useful parts of policy support. Therefore we decided to design objects, and a workspace supporting them, that are based on the idea of decision analysis. In decision analysis, the decision at hand is described as a set of mutually exclusive alternative options. Scientific information is used to assess health and other impacts of the actions related to each option. Impacts are valued based on the decision-maker's preferences. Uncertainties are described as probabilities. This may result in a complex quantitative probabilistic model with a lot of expertise embedded in the details of the model. Finally, recommendations are produced by integrating over uncertainties and ranking options based on their expected merit.
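The last step of decision analysis, integrating over uncertainties and ranking options by expected merit, can be illustrated with a minimal sketch. It is written in plain Python rather than R and does not use OpasnetUtils; the option names, the distributions, and the function `expected_merit` are hypothetical and chosen only for illustration.

```python
import random
import statistics


def expected_merit(options, n_iter=10000, seed=1):
    """Return {option: mean merit} estimated by Monte Carlo sampling."""
    rng = random.Random(seed)
    return {
        name: statistics.fmean([draw(rng) for _ in range(n_iter)])
        for name, draw in options.items()
    }


# Hypothetical options: each function draws one uncertain net benefit.
options = {
    "business as usual": lambda rng: rng.gauss(0.0, 1.0),
    "renovate buildings": lambda rng: rng.gauss(2.0, 3.0),
    "switch fuel": lambda rng: rng.gauss(1.0, 0.5),
}

merit = expected_merit(options)
# Rank the options from highest to lowest expected merit.
ranking = sorted(merit, key=merit.get, reverse=True)
print(ranking)
```

In this sketch the valuation is already folded into a single number per draw; a real assessment would typically keep impacts and preferences as separate variables.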

We wanted to produce a system where every part of such an assessment and a related model is described in both a generic language so that a decision-maker can familiarise herself with the details of the decision and a specific language so that an expert can contribute to or criticise the content. We also kept in mind that stakeholders might have interest in participating in the assessment process, and therefore we were careful not to design structures that prevent open participation. On the contrary, we designed structured discussions as a tool to capture free-format contributions into assessments. We also wanted to make sure that the generic and specific descriptions are not separate structures but a continuum. Another continuum was that the assessment process was designed to happen using the same information objects from the start to the end; just the content and quality would improve during the assessment work. This would facilitate the follow-up of the process and reduce the risk of information loss due to discontinuities.

Already early on we realised that assessments and variables should have different life spans. Assessments are case and time-specific objects that have a practical policy purpose of giving support to a particular decision at some point in time. In contrast, variables are more scientifically focussed objects whose questions and answers are more independent of time. They also continuously evolve based on new emerging information, even if an assessment that used them has been finalised and closed.

Other important properties of these objects include the possibility for open criticism, with subsequent updates if the criticism is warranted, and the ability to describe causal and other connections between variables. With these properties in mind, we designed information objects for assessments, variables, and structured discussions.

An assessment is implemented as a set of web pages in a web-workspace. The main page contains information about the decision situation and the assessment, and each variable that is needed for performing the assessment is implemented as a separate web page. Each page thus has a specific topic, i.e. that of the assessment or variable located on that page. Discussions are not separate web pages, but they are located on the page to which the statements of the discussion relate. All the information structures and functionalities are implemented on the website. We have used a wiki system called Opasnet (http://en.opasnet.org, accessed Dec 5, 2014) as the user interface.

Next, we will present the information structure of each object. This structure is used on the web-workspace. Later we will present how assessments and variables are implemented in R software; there are a few adjustments to enable necessary computational properties.

- The structure of a variable

| Attribute | Sub-attribute | Description |
|---|---|---|
| Name | | An identifier for the variable. |
| Question | | Gives the question that is to be answered. It defines the scope of the variable. The question should be defined in a way that it has relevance in many different situations, i.e. makes the variable re-usable. (Compare to the scope of an assessment, which is more specific to time, place and user need.) |
| Answer | | Gives an understandable and useful answer to the question. Its core is often a machine-readable and human-readable probability distribution (which can in a special case be a single number), but an answer can also be non-numerical text or description. Typically the answer contains R code that fetches the ovariable created under Rationale/Calculations and evaluates it. |
| Rationale | | Rationale contains anything that is necessary for convincing a critical reader that the answer is credible and usable. It presents the reader the information required to derive the answer and explains how it is formed. Typically it has the following sub-attributes, but other attributes are also possible. Rationale may also contain structured and unstructured discussions about relevant topics. |
| | Data | Data tells about direct observations (or expert judgements) about the variable itself. |
| | Dependencies | Dependencies tells what we know about how upstream variables (i.e. causal parents) affect the variable. In other words, we attempt to estimate the answer indirectly based on information about causal parents. Reverse inference based on causal children is also possible. Dependencies lists the causal parents and expresses their functional relationships (the variable as a function of its parents) or probabilistic relationships (conditional probability of the variable given its parents). |
| | Calculations | Calculations is an operationalisation of how to calculate or derive the answer. It uses algebra, computer code, or other explicit methods if possible. Typically it is R code that produces and stores the necessary ovariable objects to compute the current best answer to the question. The user can run the code simply by clicking a button on a web page. |

In addition, it is practical to have additional subtitles on an assessment or variable page. These are not attributes, though.

- See also

- Keywords

- References

- Related files
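The attribute structure of a variable can also be read as a simple record type. The following is a minimal sketch in plain Python, not the Opasnet page template or the R implementation; the class names, the example variable, and the helper `missing_attributes` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Rationale:
    data: str = ""                                     # direct observations or expert judgements
    dependencies: list = field(default_factory=list)   # names of causal parents
    calculations: str = ""                             # how the answer is derived


@dataclass
class Variable:
    name: str
    question: str
    answer: str = ""
    rationale: Rationale = field(default_factory=Rationale)

    def missing_attributes(self):
        """List the attributes that are still empty."""
        missing = [a for a in ("name", "question", "answer") if not getattr(self, a)]
        r = self.rationale
        if not (r.data or r.dependencies or r.calculations):
            missing.append("rationale")
        return missing


# Hypothetical variable that has a question but no answer or rationale yet.
draft = Variable(name="Example exposure", question="What is the exposure in the population?")
print(draft.missing_attributes())
```

A check like this reflects the idea that the same object exists from the start of the work to the end, with only its content and quality improving over time.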

- The structure of an assessment

All assessments aim to have a common structure so as to enable effective, partially automatic tools for the analysis of information. An assessment has a set of attributes, which have their own sub-attributes.

| Attribute | Sub-attribute | Description |
|---|---|---|
| Name | | Identifier for the assessment. |
| Scope | | Defines the purpose, boundaries and contents of the assessment. Scope consists of six sub-attributes. |
| | Question | What is the research question or questions whose answers are sought by means of the assessment? What is the purpose of the assessment? |
| | Boundaries | Where are the boundaries of observation drawn? In other words, which factors are noted and which are left outside the observation? |
| | Intended use and users | Who is the assessment made for? Whose information needs does the assessment serve? What are these needs? |
| | Participants | Those who may participate in the making of the assessment. The minimum group of people for a successful assessment is always described. If some groups must be excluded, this must be explicitly motivated. |
| | Decisions | Which decisions and their options are included in the assessment? Furthermore, which scenarios (plausible and coherent sets of variable values) are assessed? The assessment model is run separately for each decision option and scenario. |
| | Timing | When does the assessment work take place? When will it be finished? When will the actual decision be made? |
| Answer | | What are the current best answers to the questions asked in the scope based on the information collected? The answer is divided into two parts: |
| | Results | Answers to all of the research questions asked in all of the variables and the assessment. Also includes the results of the analyses mentioned in the rationale. |
| | Conclusions | Based on the results, what can be concluded regarding the purpose of the assessment? |
| Rationale | | How can we convince a critical reader that the answer is a good one? Includes all the information that is required for a meaningful answer. Rationale includes four sub-attributes. |
| | Stakeholders | What stakeholders relate to the subject of the assessment? What are their interests and goals? |
| | Variables | What variables or descriptions of particular phenomena are needed in order to carry out the assessment? How do they relate to each other (through causation or other ways)? Among these it is necessary to distinguish three kinds of objects. |
| | Analyses | What statistical or other analyses should be made based on the information gathered? Typical analyses are decision analysis (which of the decision options produces the best expected outcome?) and value of information analysis (how much should the decision-maker be willing to pay to reduce uncertainty before the decision is made?). |
| | Indices | What indices (classifications of subgroups used to specify the results) are used in the assessment and what are cut out as unnecessary and when? |
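The value of information analysis mentioned under Analyses can be made concrete with a small sketch of the expected value of perfect information (EVPI): the difference between the expected outcome when the best option is chosen separately in each possible state of the world and the expected outcome of the single best option chosen under uncertainty. The sketch is plain Python, not OpasnetUtils code; the option names and utility values are hypothetical.

```python
def evpi(utilities):
    """Expected value of perfect information.

    utilities: {option: [utility in each iteration]}, where iteration i
    describes the same state of the world for every option.
    """
    n = len(next(iter(utilities.values())))
    # Best expected utility when the decision is made now, under uncertainty.
    best_now = max(sum(u) / n for u in utilities.values())
    # Expected utility if the uncertainty were resolved before deciding.
    best_informed = sum(max(u[i] for u in utilities.values()) for i in range(n)) / n
    return best_informed - best_now


# Two hypothetical options evaluated in two equally likely states of the world.
utilities = {"option A": [10, 0], "option B": [4, 4]}
print(evpi(utilities))
```

Here option A is best on average (5 versus 4), but knowing the state in advance would allow choosing A in the first state and B in the second, for an average of 7, so the decision-maker should be willing to pay at most 2 units for that knowledge.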

- The structure of a formal discussion

| Fact discussion: . |
|---|
| Opening statement: Statements about a topic. Closing statement: Outcome of the discussion. (A closing statement, when resolved, should be updated to the main page.) |
| Argumentation: ⇤--1: . This argument attacks the statement. Arguments always point to one level up in the hierarchy. --Jouni 17:48, 8 January 2010 (UTC) (type: truth; paradigms: science: attack) |

- Discussion rules

- A discussion is organised around an explicit statement or statements. The purpose of the discussion is to resolve whether the statement is acceptable or not, or which of the statements, if any, are acceptable.

- The statement is defended or attacked using arguments, which also can be defended and attacked. This forms a hierarchical thread structure.

- An argument is valid unless it is attacked by a valid argument. Defending arguments are used to protect arguments against attacks, but if an attack is successful, it is stronger than the defense.

- Attacks must be based on one of the three kinds of arguments:

- The attacked argument is irrelevant in its context.

- The attacked argument is illogical.

- The attacked argument is not consistent with observations.

- Other attacks such as those based on evaluation of the speaker (argumentum ad hominem) are weak and are treated as comments rather than attacks.

- Discussions are continuous. This means that anyone can write down a resolution based on the current situation at any point. Discussion can still continue, and the resolution is updated if it changes based on new arguments.
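The validity rule above ("an argument is valid unless it is attacked by a valid argument") is recursive and can be sketched as a small tree evaluation. This is an illustrative Python sketch, not code from Opasnet; the class `Argument` and the example texts are hypothetical. As a simplifying assumption, defences and comments never change validity directly: a defence only matters if it is reformulated as an attack against the attacking argument.

```python
from dataclasses import dataclass, field


@dataclass
class Argument:
    text: str
    relation: str = "statement"   # "attack", "defence" or "comment" toward the parent
    children: list = field(default_factory=list)

    def is_valid(self):
        # Valid unless at least one valid attack points at this argument.
        return not any(
            child.relation == "attack" and child.is_valid()
            for child in self.children
        )


# Hypothetical thread: the statement is attacked, and the attack is itself attacked.
counter = Argument("The attack is not consistent with observations.", "attack")
attack = Argument("The statement is irrelevant in its context.", "attack", [counter])
remark = Argument("The author is biased.", "comment")   # ad hominem, treated as a comment
statement = Argument("Opening statement.", children=[attack, remark])

print(attack.is_valid(), statement.is_valid())
```

Because discussions are continuous, the resolution is simply re-evaluated whenever a new argument is added to the thread.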

In the structures of all objects, it is important to keep descriptions of options that have been abandoned and of common beliefs that have been shown to be false. This is because the purpose of assessments is not only to find a recommendation for the decision-maker but also to increase shared understanding among all participants.

Implementation in R

Many decision situations are so complex and detailed that for an individual it is difficult or impossible to learn and manage all relevant information. There is a clear need to organise assessments and variables in a machine-readable way. Therefore, computational models are used to describe the decision situations, and the assessment and variable objects are designed to enable this. In statistical software R, variables are implemented as objects called ovariables. Assessments are implemented as computational models that consist of ovariables that are linked to each other based on causal relations. Structured discussions are not needed as separate objects in R, because their resolutions are incorporated into variables and thus ovariables.

The ovariable is an S4 class object defined by the OpasnetUtils package in the R software system. The package can be obtained from the CRAN repository (http://www.r-project.org, accessed Dec 5, 2014). The purpose of an ovariable is to contain the current best answer in a machine-readable format (including uncertainties when relevant) to the question asked by the respective variable. In addition, it contains information about how to derive the current best answer. The respective variable may have its own page in Opasnet, or it may be implicit so that it is only represented by the ovariable and descriptive comments within the code.

It is useful to clarify terms here. Answer is the overall answer to the question asked, so it is the reason for producing the Opasnet page in the first place. This is why it is typically located near the top of an Opasnet page. The answer may contain text, tables, or graphs on the web page. It typically also contains an R code with a respective ovariable, and the code produces these representations of the answer when run. (However, the ovariable is typically defined and stored under Rationale/Calculations, and the code under Answer only evaluates and plots the result.) Output is the key part (or slot) of the answer within an ovariable. All other parts of the ovariable are needed to produce the output, and the output contains what the reader wants to know about the answer. Finally, Result is the key column of the Output table (or data.frame) and contains the actual numerical values for the answer.

The ovariable has seven separate slots that can be accessed using X@slot:

- @name

- Name of <self> (the ovariable object) is a requirement since R doesn't support self reference.

- @output

- The current best answer to the variable question.

- A single data.frame (a 2D table type in R)

- Not defined until <self> is evaluated.

- Possible types of columns:

- Result is the column that contains the actual values of the answer to the question of the variable. There is always a result column, but its name may vary; it is of the form ovariablenameResult.

- Indices are columns that define or restrict the Result in some way. For example, the Result can be given separately for males and females, and this is expressed by an index column Sex, which contains values Male and Female. So, the Result contains rows separately for results of males and of females.

- Iter is a specific kind of index. In Monte Carlo simulation, Iter is the number of the iteration.

- Unit contains the unit of the Result. It may be the same for all rows, but it may also vary from one row to another. Unit is not an index.

- Other, non-index columns can exist. Typically, they are information that were used for some purpose during the evolution of the ovariable, but they may be useless in the current ovariable. Due to these other columns, the output may sometimes be a very wide data.frame.

- @data

- A single data.frame that defines <self> as such.

- data slot answers this question: What measurements are there to answer the question? Typically, when data is used, the result can be directly derived from the information given (with possibly some minimal manipulation such as dropping out unnecessary rows).

- May include textual regular expressions that describe probability distributions which can be interpreted by OpasnetUtils/Interpret. For example, "5 - 7" and "2.3 (1.5 - 3.1)" are interpreted as probability distributions.

- @ddata

- A string containing an Opasnet identifier e.g. "Op_en1000". May also contain a subset specification e.g. "Op_en1000/dataset".

- This identifier is used to download data from the Opasnet database into the @data slot upon <self> evaluation (by default, only if the @data slot is empty).

- By default, the data defined by @ddata is downloaded when an ovariable is created. However, it is also possible to create and save an ovariable in such a way that the data is downloaded only when the ovariable is evaluated.

- @dependencies

- A data.frame that contains names and Rtools or Opasnet tokens/identifiers of variables required for <self> evaluation (list of causal parents).

- A way of enabling references in R (in ovariables at least) by virtue of OpasnetUtils/ComputeDependencies, which creates variables in .GlobalEnv so that they are available to expressions in @formula.

- Variables are fetched and evaluated (only once by default) upon <self> evaluation.

- @formula

- A function that defines <self>.

- Should return either a data.frame or an ovariable.

- @formula and @dependencies slots are always used together. They answer this question: How can we estimate the answer indirectly? This is the case if we have knowledge about how the result of this variable depends on the results of other variables (called parents). The @dependencies is a table of parent variables and their identifiers, and @formula is a function that takes the results of those parents, applies the defined code to them, and in this way produces the @output for this variable.

- @marginal

- A logical vector that indicates full marginal indices (and not parts of joint distributions, result columns or other row-specific descriptions) of @output.
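How the @dependencies, @formula and @output slots work together can be illustrated with a toy model. The sketch below is plain Python and is not the OpasnetUtils S4 class: the class `Ovar`, the helper `merge`, and the example variables are hypothetical, and the assumption that outputs are joined on their shared index columns is a simplification of the flexible indexing described in the text.

```python
def merge(a, b):
    """Join two outputs (lists of row dicts) on the index columns they share."""
    shared = [k for k in a[0] if k in b[0] and not k.endswith("Result")]
    return [
        {**row_a, **row_b}
        for row_a in a
        for row_b in b
        if all(row_a[k] == row_b[k] for k in shared)
    ]


class Ovar:
    """Toy stand-in for an ovariable; not the OpasnetUtils S4 class."""

    def __init__(self, name, data=None, dependencies=(), formula=None):
        self.name = name
        self.data = data                  # direct observations, if any
        self.dependencies = dependencies  # causal parents (other Ovar objects)
        self.formula = formula            # function of the parents' outputs
        self.output = None                # not defined until evaluated

    def evaluate(self):
        if self.output is None:           # each variable is evaluated only once
            if self.formula is None:
                self.output = [dict(row) for row in self.data]
            else:
                parents = [p.evaluate() for p in self.dependencies]
                self.output = self.formula(*parents)
        return self.output


def cases_formula(exposure, slope):
    # Multiply the parents' Result columns row by row, keeping the Sex index.
    return [
        {"Sex": row["Sex"], "casesResult": row["exposureResult"] * row["slopeResult"]}
        for row in merge(exposure, slope)
    ]


exposure = Ovar("exposure", data=[
    {"Sex": "Male", "exposureResult": 10},
    {"Sex": "Female", "exposureResult": 8},
])
slope = Ovar("slope", data=[{"slopeResult": 3}])   # no index: applies to every row
cases = Ovar("cases", dependencies=(exposure, slope), formula=cases_formula)
print(cases.evaluate())
```

Evaluating the endpoint triggers the evaluation of its parents, which mirrors the recursive fetching of dependencies described above.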

Decisions and other upstream orders

⇤--#: . This section goes pretty far into details. Should we drop it, or describe it more thoroughly so that the reader can actually understand these ideas? --Jouni (talk) 15:20, 7 December 2014 (UTC) (type: truth; paradigms: science: attack)

- ←--#: . If they accept this paper, I suggest that we'll soon publish another one with OpasnetUtils as the focus. Then we'll talk about these things. Now it is enough to mention the functionalities such as causal links, recursion, and scenarios/decisions. --Jouni (talk) 18:42, 7 December 2014 (UTC) (type: truth; paradigms: science: defence)

The general idea of ovariables is such that they should be re-usable in many different assessments and models with no or minimal modification. Therefore, the variable in question should be defined as extensively as possible under its scope. In other words, it should answer its question in a re-usable way so that the question and answer would be useful in many different situations. (Of course, this should be kept in mind already when the question is defined.)

To match the scope of specific models, ovariables can be modified by supplying orders upstream (outwards in the causal recursion tree). These orders are checked for upon evaluation. For example, decisions in decision analysis can be supplied this way:

- pick an endpoint

- make decision variables for any upstream variables (this means that you create new scenarios with particular deviations from the actual or business-as-usual answer of that variable)

- evaluate endpoint

- optimise between options defined in decisions.
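The four steps above can be sketched as follows. This is an illustrative Python sketch and not the decision table mechanism of OpasnetUtils; the endpoint, the upstream variable, the option names, and all numbers are hypothetical.

```python
def total_emissions(emission_factor, activity=100):
    """Hypothetical endpoint: emissions as a function of an upstream variable."""
    return emission_factor * activity


# Business-as-usual answer of the upstream variable.
bau_emission_factor = 5

# Each decision option is a deviation applied to the upstream variable.
decision = {
    "business as usual": lambda ef: ef,
    "install filters": lambda ef: ef - 2,
    "change fuel": lambda ef: ef - 4,
}

# Evaluate the endpoint once per scenario.
results = {
    option: total_emissions(deviate(bau_emission_factor))
    for option, deviate in decision.items()
}

# Optimise between the options; here the lowest emission is assumed to be best.
best = min(results, key=results.get)
print(results, best)
```

The upstream variable itself is not redefined; only scenario-specific deviations from its business-as-usual answer are supplied.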

Other orders include:

- collapse of marginal columns by sums, means or sampling to reduce data size (using function CollapseMarginal)

- passing input from model level without redefining the whole variable.

- It is also possible to redefine any specific variable before starting the recursive evaluation, in which case the recursion stops at the defined variable (dependencies are only fetched if they do not already exist; this is to avoid unnecessary computation).
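Collapsing an index column to reduce data size can be sketched as a group-and-aggregate operation. The function below is a hypothetical Python illustration and does not reproduce the interface of CollapseMarginal; the column names and values are made up.

```python
from collections import defaultdict
from statistics import fmean


def collapse(rows, column, result, fun=fmean):
    """Remove one index column and aggregate the result over it."""
    groups = defaultdict(list)
    for row in rows:
        # Rows that agree on all remaining index columns fall into the same group.
        key = tuple(sorted((k, v) for k, v in row.items() if k not in (column, result)))
        groups[key].append(row[result])
    return [{**dict(key), result: fun(values)} for key, values in groups.items()]


# Hypothetical output with a Sex index and two Monte Carlo iterations.
output = [
    {"Sex": "Male", "Iter": 1, "xResult": 2},
    {"Sex": "Male", "Iter": 2, "xResult": 4},
    {"Sex": "Female", "Iter": 1, "xResult": 10},
    {"Sex": "Female", "Iter": 2, "xResult": 20},
]

collapsed = collapse(output, column="Iter", result="xResult")
print(collapsed)
```

Passing `fun=sum` instead of the default mean gives the sum-based collapse mentioned in the text.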

Important functionalities:

- Compute dependencies

- Decision tables

- Data tables in wiki and database

Operation

- OpasnetUtils in CRAN.

- Rcode in Opasnet Wiki working.

- opbase.data to obtain parameters.

- objects.store and objects.latest to save and retrieve ovariables, respectively.

- EvalOutput for evaluating ovariables.

Use Cases

⇤--#: . Update the colours of the nodes. --Jouni (talk) 09:02, 5 December 2014 (UTC) (type: truth; paradigms: science: attack)

- Please include a section on Use Cases if the paper does not include novel data or analyses. Examples of input and output files should be provided with some explanatory context. Any novel or complex variable parameters should be explained in sufficient detail to enable users to understand and use the tool's functionality.

We have successfully used shared information objects in several decision support situations. In 2011, we assessed the health impacts of H1N1 influenza ("swine flu") vaccination that surprisingly turned out to cause narcolepsy in children (http://en.opasnet.org/w/Assessment_of_the_health_impacts_of_H1N1_vaccination, accessed Dec 5, 2014). More recently, we assessed health effects and cost effectiveness of pneumococcal vaccines (http://en.opasnet.org/w/Pneumococcal_vaccine, accessed Dec 5, 2014). We have assessed health benefits and risks of eating Baltic herring, a fish that contains dioxin (http://fi.opasnet.org/fi/Silakan_hy%C3%B6ty-riskiarvio, available in Finnish, accessed Dec 5, 2014).

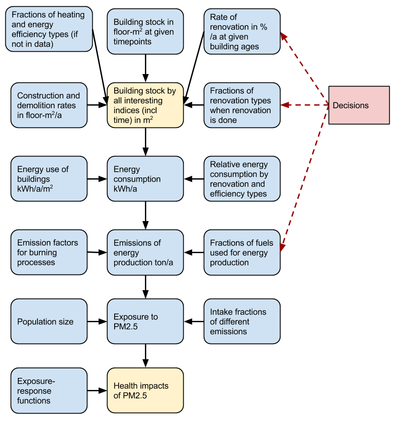

In this article, we present an assessment of the climate and health impacts of building stock, energy consumption, and district heat production. The case study is about Kuopio, a city in Finland with 100,000 inhabitants.

- Urgenche building model

- HIA

Describe the models. Explain the reusability and its importance.

Discussion/Conclusions

- A Discussion (e.g. if the paper includes novel data or analyses) or Conclusions should include a brief discussion of allowances made (if any) for controlling bias or unwanted sources of variability, and the limitations of any novel datasets.

Reproducibility: F1000Research is committed to serving the research community by ensuring that all articles include sufficient information to allow others to reproduce the work. With this in mind, Methods sections should provide sufficient details of the materials and methods used so that the work can be repeated by others. The section should also include a brief discussion of allowances made (if any) for controlling bias or unwanted sources of variability. Any limitations of the datasets should be discussed.

Other tools Analytica Patrycja bbn Heat website: black box partly, not connected with the rest of the decision support process.

There are all kinds of DSS, but sharing and openness are always lacking, as they are designed for single-user work. In addition, there is little support for combining the discussion of policy needs with scientific assessment.

A critical development need is to establish a community to share information and open up decision processes.

Data and Software Availability

All articles reporting new research findings must be accompanied by the underlying source data - see our policies for more information. Please include details of how the data were analysed to produce the various results (tables, graphs etc.) shown (i.e. what statistical tests were used). If a piece of software code was used, please provide details of how to access this code (if not proprietary). See also our Data Preparation guidelines for further guidance on data presentation and formatting.

If you have already deposited your datasets or used data that are already available online or elsewhere, please include a ‘Data Availability’ section, providing full details of how and where the data can be accessed. This should be done in the style of, for example:

Source code for new software should be made available on a Version Control System (VCS) such as GitHub, BitBucket or SourceForge, and provide details of the repository and the license under which the software can be used in the article. The F1000Research team will assist with data and/or software deposition and help generate this section, where needed.

Author Contributions

JT and MP developed the idea and information structure of assessments and variables. TR developed their implementation in R with help from JT and EH. JT, MN, TR and CS developed the city building case study and its model. All authors were involved in the revision of the draft manuscript and have agreed to the final content.

Competing Interests

No competing interests were disclosed.

Grant Information

This work was funded by EU FP6 project INTARESE Grant ### (for Mikko Pohjola), EU FP7 project URGENCHE Grant#### (for Marjo Niittynen and Einari Happonen), and National Institute for Health and Welfare.

Acknowledgments

This section should acknowledge anyone who contributed to the research or the writing of the article but who does not qualify as an author; please clearly state how they contributed. Please note that grant funding should be listed in the “Grant Information” section.

Supplementary Material

- Assessment and variable pages of the assessment Climate change policies and health in Kuopio in one pdf file.

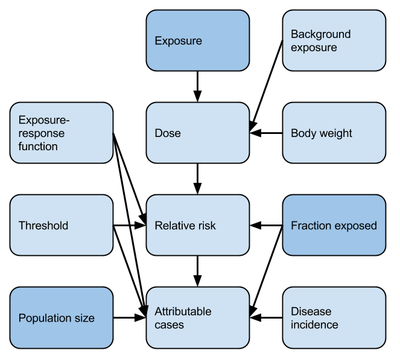

- Method page for Health impact assessment, containing R objects for calculating attributable cases based on exposure and other case-specific information (see Figure 2) in one pdf file.

- OpasnetUtils package: link provided to CRAN repository.

- Key functions of OpasnetUtils package as pdf file: Ovariable, objects, opbase, EvalOutput, CheckDependencies

- Something else?

- There are no figure or table limits for articles in F1000Research and most material can be included in the main article if desired. Other types of additional information, e.g. questionnaires, extra or supporting images or tables can be submitted as supplementary material.

Please include a section entitled ’Supplementary Material’ at the end of the manuscript, providing a title and short description for each supplementary file. Please also include citations to the supplementary files in the text of the main body of the article. The F1000Research editorial team will liaise with authors to determine the most appropriate way to display this material.

References

- Journal abbreviations should follow the Index Medicus/MEDLINE abbreviation approach.

- Only articles, datasets and abstracts that have been published or are in press, or are available through public e-print/preprint servers/data repositories, may be cited. Unpublished abstracts, papers that have been submitted but not yet accepted, and personal communications should instead be included in the text; they should be referred to as ‘personal communications’ or ‘unpublished reports’ and the researchers involved should be named. Authors are responsible for getting permission to quote any personal communications from the cited individuals.

Figures and Tables

⇤--#: . Move tables and figures here before submitting. --Jouni (talk) 09:02, 5 December 2014 (UTC) (type: truth; paradigms: science: attack)

All figures and tables should be cited and discussed in the article text. Figure legends and tables should be added at the end of the manuscript. Tables should be formatted using the ‘insert table’ function in Word, or provided as an Excel file. For larger tables or spreadsheets of data, please see our Data Preparation guidelines. Files for figures are usually best uploaded as separate files through the submission system (see below for information on formats).

Figure formats: For all figures, the color mode should be RGB or grayscale. Line art: Examples of line art include graphs, diagrams, flow charts and phylogenetic trees. Please make sure that text is at least 8pt, the lines are thick enough to be clearly seen at the size the image will likely be displayed (between 75-150 mm width, which converts to one or two columns width, respectively), and that the font size and type is consistent between images. Figures should be created using a white background to ensure that they display correctly online.

If you submit a graph, please export the graph as an EPS file using the program you used to create the graph (e.g. SPSS). If this is not possible, please send us the original file in which the graph was created (e.g. if you created the graph in Excel, send us the Excel file with the embedded graph).

----#: . EPS printer2 in Jouni's computer prints EPS files. --Jouni (talk) 09:02, 5 December 2014 (UTC) (type: truth; paradigms: science: comment)