Value of information

Moderator: Jouni

- Value of information (VOI) in decision analysis is the amount a decision maker would be willing to pay for information prior to making a decision.[1]

Scope

How can value of information be calculated?

Definition

Input

To calculate value of information, you need:

- a decision to be made,

- an outcome indicator to be optimised,

- an optimising function to be used as the criterion for the best decision,

- an uncertain variable of interest (optional, needed only if partial VOI is calculated for the variable)

Output

Value of information, i.e. the amount of money that the decision-maker is willing, in theory, to pay to obtain a piece of information.

Rationale

- See Decision theory, Value of information, and Expected value of perfect information.

- There are different kinds of value of information indicators, depending on which level of information is compared with the current situation:
  - EVPI: expected value of perfect information (everything is known perfectly)
  - EVPPI: expected value of partial perfect information (one variable is known perfectly, otherwise current knowledge)
  - EVII: expected value of imperfect information (things are known better but not perfectly)
  - EVPII: expected value of partial imperfect information (one variable is known better but not perfectly)

Result

Procedure

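$$\mathrm{EVPI} = E_\theta\left[\max_i U(d_i,\theta)\right] - \max_i E_\theta\left[U(d_i,\theta)\right]$$

where $E_\theta$ is the expectation over the uncertain parameters $\theta$, $\max_i$ is the maximum over the decision options $i$, and $U(d_i,\theta)$ is the utility of decision option $d_i$ given $\theta$.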
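In a Monte Carlo model, the expectations are replaced by means over iterations. The sketch below writes $U_{n,i}$ for the utility of option $d_i$ in iteration $n$ of $N$. This notation is introduced here for illustration and does not come from the original page. The second line is a two-iteration worked example, with rows as iterations and columns as decision options:

$$\widehat{\mathrm{EVPI}} = \frac{1}{N}\sum_{n=1}^{N}\max_i U_{n,i} - \max_i \frac{1}{N}\sum_{n=1}^{N}U_{n,i}$$

$$U = \begin{pmatrix}10 & 0\\ 0 & 10\end{pmatrix} \;\Rightarrow\; \widehat{\mathrm{EVPI}} = \frac{10+10}{2} - \max\left(\frac{10+0}{2},\ \frac{0+10}{2}\right) = 10 - 5 = 5$$

With perfect information, the right option is chosen in both iterations. Under uncertainty, either option has an expected utility of only 5.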

The general formula for EVPII is:

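$$\mathrm{EVPII} = E_{\theta_2}\left[U\left(\arg\max_i E_{\theta_2}\left[U(d_i,\theta_2)\right],\ \theta_2\right)\right] - E_{\theta_2}\left[U\left(\arg\max_i E_{\theta_1}\left[U(d_i,\theta_1)\right],\ \theta_2\right)\right]$$

Here $\theta_1$ is the prior information and $\theta_2$ is the posterior (improved) information. The operator $\arg\max_i$ selects the decision option with the highest expected utility. EVPPI can be calculated with the same formula in the case where $P(\theta_2)=1$ if and only if $\theta_2=\theta_1$. If $\theta$ includes all variables of the assessment, the formula gives total, not partial, value of information.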

The formula is read from the innermost parentheses outwards.

1. The utility of each decision option $d_i$ is estimated in the world of uncertain variables $\theta$.
2. The expectation over $\theta$ is taken (i.e. the utility is integrated over the probability distribution of $\theta$), and the option $d_i$ with the highest expected utility is selected. In the first term, $\theta$ is described with the better posterior information $\theta_2$. In the second term, it is described with the poorer prior information $\theta_1$.
3. Once the decision has been made, its expected utility is evaluated again with the better posterior information $\theta_2$ in both terms.
4. The difference between the expected utilities of the decisions made with the better and the poorer information is the value of information.
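The EVPII formula above can be sketched for a discrete $\theta$. The sketch assumes that the prior and posterior information are given as probability vectors over the same finite set of states. The function names, utilities and probabilities are hypothetical. Both terms of the formula take the outer expectation over the posterior, so the sketch evaluates both chosen options with the posterior probabilities.

```python
def expected_utilities(utility, probs):
    """E[U(d_i, theta)] for every option i, with theta on a finite set of states."""
    return [sum(u * p for u, p in zip(row, probs)) for row in utility]

def best_option(utility, probs):
    """Index i of the option with the highest expected utility under probs."""
    eu = expected_utilities(utility, probs)
    return max(range(len(eu)), key=eu.__getitem__)

def evpii(utility, prior, posterior):
    """EVPII for a discrete theta: decide with posterior vs prior, evaluate with posterior."""
    d_post = best_option(utility, posterior)   # decision made with the better information
    d_prior = best_option(utility, prior)      # decision made with the current information
    eu_post = expected_utilities(utility, posterior)
    return eu_post[d_post] - eu_post[d_prior]

# Hypothetical example: two decision options, three states of theta.
U = [[100, 50, 0],    # utilities of option 0 in each state
     [40, 40, 40]]    # option 1 yields 40 regardless of theta
prior = [0.2, 0.3, 0.5]
posterior = [0.6, 0.3, 0.1]
print(evpii(U, prior, posterior))  # prints 35.0
```

The prior favours option 1 (expected utility 40 against 35). The posterior favours option 0 (75 against 40). The improved information is therefore worth 75 - 40 = 35 utility units.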

Management

Computing counter-factual world descriptions with the decision as an index

Counter-factual world descriptions are two or more world descriptions that are identical in all respects except for the decision being assessed. Each one assumes a different decision option, so comparing them shows the impacts of the decision. With perfect information, we could make the theoretically best decision by always choosing the right option.

In Monte Carlo terms, we run the model many times to create descriptions of possible worlds. Each iteration (i.e. each row in the result table of our objective) is one possible world. For each row, we create one or more counter-factual worlds as additional columns that differ from the first column only by the decision option. With perfect information, we can go through the result table row by row and pick the best decision option (i.e. column) for each row. The expected outcome of this procedure, minus the outcome we would get by optimising the expectation (net benefit under uncertainty), is the expected value of perfect information (EVPI).
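The row-by-row procedure above can be sketched in Python. The sketch assumes the result table is a list of rows (iterations) with one column per decision option, and that the objective is a utility to be maximised. For costs, as in the Analytica function below, `min` would replace `max` and the subtraction would be reversed. The function name `evpi_from_table` and the toy model are hypothetical.

```python
import random

def evpi_from_table(table):
    """EVPI from a Monte Carlo result table of utilities (higher is better).

    table[n][i] is the utility of decision option i in iteration n. The columns
    of one row are counter-factual worlds that differ only by the decision.
    """
    n_iter, n_opt = len(table), len(table[0])
    # Perfect information: pick the best column separately in every row.
    with_info = sum(max(row) for row in table) / n_iter
    # Current knowledge: optimise the column means once (net benefit under uncertainty).
    without_info = max(sum(row[i] for row in table) / n_iter for i in range(n_opt))
    return with_info - without_info

# Hypothetical model: option 0 yields theta ~ Uniform(0, 1), option 1 a fixed 0.5.
random.seed(1)
table = [[theta, 0.5] for theta in (random.random() for _ in range(10000))]
print(round(evpi_from_table(table), 3))
```

For this toy model, E[max(θ, 0.5)] = 0.625 and max(E[θ], 0.5) = 0.5. The printed estimate should therefore be close to the analytical EVPI of 0.125.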

<anacode>
Parameters: (out:prob;deci:indextype;input:prob;input_ind:indextype;classes)

Definition:
index a := ['Total VOI'];
index variable := concat(a, input_ind);
var ncuu := min(mean(sample(out)), deci);   /* net cost under uncertainty */
var evpi := (if a = 'Total VOI' then mean(min(sample(out), deci)) - ncuu else 0);
for x[] := classes do (
  index varia := sequence(1/x, 1, 1/x);     /* x bins */
  var evppi := ceil(rank(input, run)*x/samplesize)/x;   /* bin of each iteration */
  evppi := if evppi = Varia then out else 0;
  evppi := sum(min(mean(evppi), deci), varia) - ncuu;
  concat(evpi, evppi, a, input_ind, variable)
)
</anacode>

Description

Value of information (VOI) Version 2.

This function calculates the total VOI (expected value of perfect information, EVPI) for a given decision and the partial VOI (expected value of partial perfect information, EVPPI) for selected variables.

- out: the outcome to be optimised.
- deci: the index of the decision options.
- input: the variables for the EVPPI calculation, indexed by input_ind.
- classes: the number of bins, which may be a number or an array of numbers.

The solution is numerical. For each input variable, the iterations are classified into bins according to the value of that variable. The function assumes that out is a cost and that the correct optimising function is therefore MIN.

Procedure

First, a new index is generated. It contains 'Total VOI' in the first row and the EVPPI variables in the subsequent rows. Then, net cost under uncertainty (ncuu) and evpi are calculated.

The rest of the procedure is calculated separately for each value x of classes.

1. Varia is a temporary index with x bins.
2. Each iteration is assigned to one of the bins according to the rank of its input value.
3. The value of out is kept in the bin of that iteration and set to zero in all other bins. Taking the mean over iterations therefore gives, for each bin, the conditional mean of the outcome multiplied by the probability that the true value of input is in that bin.
4. The best (lowest-cost) decision option is selected separately for each bin, and the resulting expected outcomes of all bins are summed.
5. When ncuu is subtracted from this sum, we get EVPPI.
6. EVPI and EVPPI are concatenated into a single array indexed by variable.

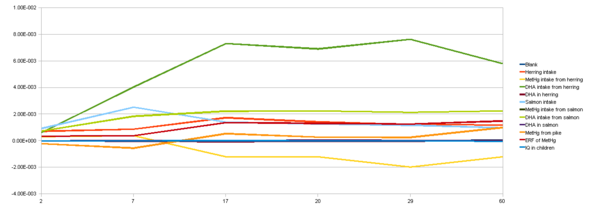

It may be a good idea to include a row 'Blank' in input_ind and use it for a random variable that is NOT part of the model. This gives a rough estimate of how much apparent VOI random noise alone produces in the system. It is also advisable to try several values of classes. With low iteration numbers the estimate can be numerically unstable, and what counts as low differs from case to case.

Developed by Jouni Tuomisto and Marko Tainio, National Public Health Institute (KTL), Finland, 2005. (c) CC-BY-SA.
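The binning procedure of Version 2 can be sketched in Python. This is a minimal re-implementation under stated assumptions, not a translation checked against Analytica:

- Costs are given as a list of rows, one per iteration, with one column per decision option.
- Each input variable is given as a list of samples.
- The names `voi_binned`, `cost`, `inputs` and `n_bins` are hypothetical.

The sum of the per-bin minima can never exceed the minimum of the summed costs. Subtracting ncuu from the informed cost, as in the procedure above, therefore gives a value that is zero or negative when costs are minimised. The sketch reverses the subtraction so that EVPI and EVPPI read as non-negative expected savings.

```python
import random

def voi_binned(cost, inputs, n_bins):
    """Total VOI (EVPI) and binned EVPPI when expected cost is minimised.

    cost[n][i]: cost of decision option i in iteration n
    inputs:     dict mapping variable name -> samples (one per iteration)
    n_bins:     number of equiprobable bins (the role of 'classes')
    Values are ncuu minus the expected cost with information (positive = saving).
    """
    n, n_opt = len(cost), len(cost[0])
    # Net cost under uncertainty: lowest mean cost over the options.
    ncuu = min(sum(row[i] for row in cost) / n for i in range(n_opt))
    # Perfect information: best option chosen separately in every iteration.
    result = {"Total VOI": ncuu - sum(min(row) for row in cost) / n}
    for name, values in inputs.items():
        # totals[b][i] / n equals P(bin b) * E[cost of option i | bin b].
        totals = [[0.0] * n_opt for _ in range(n_bins)]
        order = sorted(range(n), key=lambda k: values[k])
        for rank, k in enumerate(order, start=1):
            b = (rank * n_bins + n - 1) // n - 1  # bin index 0..n_bins-1 from the rank
            for i in range(n_opt):
                totals[b][i] += cost[k][i]
        # Best option per bin, summed over bins.
        informed = sum(min(t) for t in totals) / n
        result[name] = ncuu - informed
    return result

# Hypothetical model: option 0 costs theta, option 1 costs a fixed 0.5.
random.seed(2)
n = 10000
theta = [random.random() for _ in range(n)]   # input that drives the costs
blank = [random.random() for _ in range(n)]   # 'Blank': noise not used by the model
cost = [[t, 0.5] for t in theta]
for classes in (5, 10, 20):
    voi = voi_binned(cost, {"theta": theta, "Blank": blank}, classes)
    print(classes, {name: round(v, 3) for name, v in voi.items()})
```

The 'Blank' row should stay close to zero because the costs do not depend on it. Comparing the three bin counts shows how sensitive the estimate is to the choice of classes.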

Computing one world with the decision as a random variable

In this case, we do not create counter-factual world descriptions, only a large number of possible world descriptions. The decision under consideration is treated like any other uncertain variable in the description, with a probability distribution describing the uncertainty about what will actually be decided. We then compare world descriptions that contain a particular decision option with other world descriptions that contain another decision option.

It is important to understand that we are not comparing two counter-factual world descriptions. We are comparing one group of possible world descriptions with another group. EVPI therefore cannot be calculated as straightforwardly as with counter-factual worlds. With this approach, we are essentially restricted to the expected value of partial perfect (and imperfect) information, i.e. EVPPI and EVPII, respectively.

This is reflected in the code below: although the result table has a row "Total VOI", it always returns zero. (It might be a good idea to remove the row unless a way to compute it is developed.) More sophisticated mathematical methods could perhaps be developed to calculate total VOI, but this is beyond my competence. One approach seems promising to me at the moment. It is used with probabilistic inversion, i.e. working with sets of probability functions instead of point-wise estimates.[2]

<anacode>
Parameters: (b, d, c:prob; k:indextype; e:atom optional=0.5; x: atom optional=20)

Definition:
d := (d <= getfract(d, e));                    /* 1 if below the cutpoint fractile */
index j := ['Below cutpoint', 'Above cutpoint'];
d := array(j, [d, 1-d]);
index m := concat(['Total VOI', 'Blank'], k);
var ncuu := min(sum(b*d, run)/sum(d, run), j);
var a := c[@k = @m-2];
a := if @m > 2 then a else array(m, [0/*b*/, sample(uniform(0, 1))]);
index L := 1..x;
a := ceil(rank(a, run)*x/samplesize);          /* uncertainty bin of each iteration */
d := if a = L then d else 0;
a := sum(b*d, run)/sum(d, run);                /* mean outcome per bin and category */
d := if sum(isnan(a), j) > 0 then 0 else d;    /* drop bins with an empty category */
a := if sum(isnan(a), j) > 0 then null else a;
a := a[j = argmin(a, j)];                      /* best category in each bin */
d := sum(sum(d, run), j);
d := d/sum(d, L);                              /* bin weights */
a := sum(a*d, L) - ncuu
</anacode>

Description

Value of information (VOI) Version 3.

This function calculates VOI (expected value of partial perfect information, EVPPI) for selected variables for a given decision. This version is used when the decision is NOT a decision index. Instead, the decision is a criterion used to categorise the iterations of a variable into decision option categories. The code above uses two categories: below and above a cutpoint fractile.

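- b: outcome objective to be minimised
- c: list of uncertain variables whose VOI is estimated
- d: decision node to be categorised based on the criterion
- e: cutpoint fractile for the criterion (optional, default 0.5)
- x: number of uncertainty categories (optional, default 20)
- j: the index of the decision categories
- k: the index of the list c
- L: the index of uncertainty categories
- m: the index of the result rows ('Total VOI', 'Blank' and the variables in k)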

Originally developed by Jouni Tuomisto and Marko Tainio, National Public Health Institute (KTL), Finland, 2005. Version 3 by Jouni Tuomisto, National Institute for Health and Welfare (THL), 2009. (C) CC-BY-SA.
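A comparable Python sketch of Version 3 follows. It assumes the outcome, the decision variable and each uncertain variable are given as equally long lists of Monte Carlo samples. Further assumptions:

- The function name `voi_v3` and its argument layout are hypothetical.
- The empirical fractile only approximates Analytica's getfract.
- The 'Total VOI' row is left out because the original always returns zero for it. A 'Blank' noise variable can be passed in `c` like any other variable.
- The result is ncuu minus the bin-weighted best conditional mean. This is the opposite sign to the last line of the Analytica code, so a positive value reads as an expected saving.

```python
import math
import random

def voi_v3(b, d, c, e=0.5, x=20):
    """Binned EVPPI when the decision is an uncertain variable (sketch of Version 3).

    b: outcome samples to be minimised, one per iteration
    d: decision-variable samples; iterations with d <= its e-fractile are
       'Below cutpoint', the others 'Above cutpoint'
    c: dict mapping variable name -> samples of that uncertain variable
    e: cutpoint fractile; x: number of equiprobable uncertainty bins
    """
    n = len(b)
    # Empirical e-fractile of d (an approximation of Analytica's getfract).
    cut = sorted(d)[max(0, math.ceil(e * n) - 1)]
    below = [dv <= cut for dv in d]

    def best_mean(idx):
        """Lowest mean outcome over the two decision categories, or None if one is empty."""
        groups = ([b[k] for k in idx if below[k]],
                  [b[k] for k in idx if not below[k]])
        if not all(groups):
            return None
        return min(sum(g) / len(g) for g in groups)

    ncuu = best_mean(range(n))
    if ncuu is None:
        raise ValueError("both decision categories need at least one iteration")
    result = {}
    for name, values in c.items():
        # Put iterations into x bins by the rank of the variable's value.
        bins = [[] for _ in range(x)]
        order = sorted(range(n), key=lambda k: values[k])
        for rank, k in enumerate(order, start=1):
            bins[(rank * x + n - 1) // n - 1].append(k)
        total, weight = 0.0, 0
        for idx in bins:
            m = best_mean(idx)
            if m is not None:  # skip bins where a decision category is empty
                total += m * len(idx)
                weight += len(idx)
        result[name] = ncuu - total / weight if weight else None
    return result

# Hypothetical model: the cost is theta below the decision cutpoint, 0.5 above it.
random.seed(3)
n = 10000
theta = [random.random() for _ in range(n)]
blank = [random.random() for _ in range(n)]   # 'Blank': noise not used by the model
dec = [random.random() for _ in range(n)]     # uncertain decision variable
b = [t if dv <= 0.5 else 0.5 for t, dv in zip(theta, dec)]
voi = voi_v3(b, dec, {"theta": theta, "Blank": blank})
print({name: round(v, 3) for name, v in voi.items()})
```

In this toy model, learning theta is worth roughly 0.125. It moves the expected cost from about 0.5 to about 0.375. The 'Blank' row should stay near zero.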

How to use the method

Value of information score

The VOI score of a variable is the current expected value of perfect information for that variable (i.e. its EVPPI) in an assessment where it is used. If the variable is used in several assessments, the score is the sum of these values across all assessments.

See also

References

- [1] Value of information. Wikipedia.

- [2] Jouni Tuomisto's notebook P42, dated 29.10.2009.