Information structure of Open Assessment


Revision as of 14:19, 2 October 2008

This page is a nugget.

The page identifier is Op_en2328.

Unlike most other pages in Opasnet, the nuggets have predetermined authors, and you cannot freely edit the contents.

<accesscontrol>Members of projects,,Workshop2008,,beneris,,Erac,,Heimtsa,,Hiwate,,Intarese</accesscontrol>

This is a manuscript about the information structure of Open Assessment.

Information structure of open assessment

Jouni T. Tuomisto1, Mikko Pohjola1, Alexandra Kuhn2

1National Public Health Institute, P.O.Box 95, FI-70701 Kuopio, Finland

2IER, Universitaet Stuttgart, Hessbruehlstr. 49 a, 70565 Stuttgart, Germany

Abstract

Background

Methods

Results

Conclusions

Background

Many future environmental health problems are global, cross administrative boundaries, and are caused by the everyday activities of billions of people. Urban air pollution and climate change are typical problems of this kind. The traditional risk assessment procedures, developed for single-chemical, single-decision-maker, pre-market decisions, do not perform well with the new challenges. There is an urgent need to develop new assessment methods that can deal with these new, fuzzy, but far-reaching and severe problems[1].

A major need is for a systematic approach that is not limited to any particular kind of situation (such as chemical marketing), and is specifically designed to offer guidance for decision-making. Our main interest is in societal decision-making related to environmental health, formulated into the following research question: How can scientific information and value judgements be organised for improving societal decision-making in a situation where open participation is allowed?

As the question shows, we find it important that the approach to be developed covers wider domains than environmental health. In addition, the approach should be flexible enough to subsume the wide range of current methods that are designed for specific needs within the overall assessment process. Examples of such methods are source apportionment of exposures, genotoxicity testing, Monte Carlo simulation, and elicitation of expert judgement. As openness is one of the starting points for method development, we call the new kinds of assessments open assessments. Practical lessons about openness have been described in another paper[2].

In another paper from our group, we identified three main properties that the new assessment method should fulfil[3]. These are the following: 1) the whole assessment work and the subsequent report are open to critical evaluation by anyone interested at any point during the work; 2) all data and all methods must be falsifiable, and they are subject to scientific criticism; and 3) all parts of one assessment must be reusable in other assessments.

In this study, we developed an information structure that fulfils all three criteria. Specifically, we attempted to answer the following question: What object types are needed, and what should the structures of these objects be, such that the three criteria of openness, falsifiability, and reusability are fulfilled; the resulting structure can be used in practical assessment; and the assessment can be operationalised using modern computer technology?

Methods

The work presented here is based on research questions. The main question presented in the Background was divided into several smaller, more detailed questions about particular objects and their requirements. The majority of the work was a series of exploratory (and exhaustive, according to most observers) discussions about the essence of the objects. The discussions were iterative: we repeatedly came back to the same topics until we found that the answers were coherent with other parts of our information structure, covered all major parts of the assessment work and report, and seemed conceptually clear enough to be practical.

The current answers to these questions should be seen as hypotheses that will be tested against observations and practical experience as the work goes on. The research questions are presented in the Results section together with the current answer. In the Methods section, we briefly present the most important scientific methodologies that were used as the basis for this work.

PSSP is a general ontology for organising information and process descriptions[4]. PSSP offers a uniform and systematic information structure for all systems, whether small details or large integrated models. The four attributes of PSSP (Purpose, Structure, State, Performance) enable hierarchical descriptions where the same attributes are used at all levels.
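The hierarchical use of the same four attributes can be illustrated with a minimal sketch. The class name `PSSPObject`, the field types, and the choice to model Structure as a list of sub-objects are assumptions made for illustration only; the PSSP manuscript[4] may define the attributes differently.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PSSPObject:
    """One object described with the four PSSP attributes (hypothetical layout)."""
    name: str
    purpose: str                                  # why the object exists
    structure: List["PSSPObject"] = field(default_factory=list)  # assumed: sub-objects
    state: Optional[str] = None                   # current content of the object
    performance: Optional[str] = None             # how well the state serves the purpose

    def outline(self, depth: int = 0) -> List[str]:
        """Return an indented outline; every level uses the same attributes."""
        lines = ["  " * depth + f"{self.name}: {self.purpose}"]
        for part in self.structure:
            lines.extend(part.outline(depth + 1))
        return lines


# Made-up example objects, not taken from any real assessment.
exposure = PSSPObject("Exposure", "Quantify population exposure", state="not yet estimated")
assessment = PSSPObject("Assessment", "Support a decision", structure=[exposure])
print("\n".join(assessment.outline()))
```

Because a sub-object is itself a `PSSPObject`, a small detail and a large integrated model are described in exactly the same way.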

A central property in science is asking questions, and giving answers that are falsifiable hypotheses. Falsification is a process where a hypothesis is tested against observations and is falsified if it is inconsistent with them[5]. The idea of falsification and scientific criticism is the reason why we have organised the whole assessment method development as research questions and attempts to answer them.

A Bayesian network is a probabilistic graphical model that represents a set of variables and their probabilistic independencies. It is a directed acyclic graph whose nodes represent variables and whose arcs encode conditional dependencies between the variables; missing arcs correspondingly encode conditional independencies[6]. Nodes can represent any kind of variable, be it a measured parameter, a latent variable, or a hypothesis. They are not restricted to representing random variables. Generalizations of Bayesian networks that can represent and solve decision problems under uncertainty are called influence diagrams.
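As a rough illustration of how a directed acyclic graph factorises a joint probability distribution, the sketch below builds a three-node chain in plain Python. The node names and all probabilities are invented for the example and do not come from any assessment.

```python
from itertools import product

# Hypothetical network: Emission -> Exposure -> Effect.
# Each table gives P(node = True | parent values); all numbers are made up.
parents = {"Emission": (), "Exposure": ("Emission",), "Effect": ("Exposure",)}
p_true = {
    "Emission": {(): 0.3},
    "Exposure": {(True,): 0.8, (False,): 0.1},
    "Effect": {(True,): 0.2, (False,): 0.01},
}


def joint(assignment):
    """P(assignment) as the product of each node's conditional probability."""
    prob = 1.0
    for node, pars in parents.items():
        p = p_true[node][tuple(assignment[q] for q in pars)]
        prob *= p if assignment[node] else 1.0 - p
    return prob


def marginal(node):
    """P(node = True), obtained by summing the joint over all other nodes."""
    names = list(parents)
    total = 0.0
    for values in product([True, False], repeat=len(names)):
        assignment = dict(zip(names, values))
        if assignment[node]:
            total += joint(assignment)
    return total


print(round(marginal("Effect"), 4))  # 0.31 * 0.2 + 0.69 * 0.01 = 0.0689
```

Brute-force enumeration is only feasible for very small networks; it is used here because it makes the factorisation explicit.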

Decision analysis is concerned with identifying the best decision to take, assuming an ideal decision maker who is fully informed, able to compute with perfect accuracy, and fully rational. The practical application is aimed at finding tools, methodologies and software to help people make better decisions[7].
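The idealised decision maker described above can be sketched as choosing the option with the highest expected utility. The options, probabilities, and utilities below are purely hypothetical, and expected-utility maximisation is used here as one common formalisation rather than as the method of any specific assessment.

```python
# Each option maps to a list of (probability, utility) outcomes; numbers are made up.
options = {
    "no action": [(0.9, 0.0), (0.1, -100.0)],
    "reduce emissions": [(0.99, -5.0), (0.01, -105.0)],
}


def expected_utility(outcomes):
    """Probability-weighted sum of utilities for one option."""
    return sum(p * u for p, u in outcomes)


# The fully rational, fully informed decision maker picks the maximum.
best = max(options, key=lambda name: expected_utility(options[name]))
for name, outcomes in options.items():
    print(name, round(expected_utility(outcomes), 2))
print("best option:", best)
```

In this toy case the certain cost of reducing emissions is outweighed by the lower probability of the severe outcome, so "reduce emissions" is selected.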

The pragma-dialectical theory is an argumentation theory that is used to analyze and evaluate argumentation in actual practice[8]. Unlike strictly logical approaches (which focus on the study of argument as product), or purely communication approaches (which emphasize argument as a process), pragma-dialectics was developed to study the entirety of an argumentation as a discourse activity. Thus, the pragma-dialectical theory views argumentation as a complex speech act that occurs as part of natural language activities and has specific communicative goals.

Results

The results are based on the object descriptions of the following objects.

===Universal objects===

{{{{ns:0}}:Universal products}}

===Structure of an attribute===

{{{{ns:0}}:Attribute}}

===Structure of an assessment product===

{{{{ns:0}}:Assessment}}

===Structure of a variable===

{{{{ns:0}}:Variable}}

===Structure of a discussion===

{{{{ns:0}}:Discussion}}

===Structure of a method===

{{{{ns:0}}:Method}}

===Structure of a class===

{{{{ns:0}}:Class}}

Objects in different abstraction levels

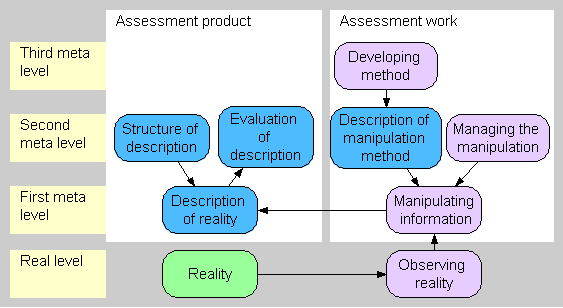

It is useful to see that the information structure has objects that lie on different levels of abstraction, the so-called meta-levels. Basically, the zero (or real) level contains reality itself. The first meta-level contains objects that describe reality. The second meta-level contains objects that describe first-meta-level objects, and so on. Objects on any abstraction level can be described using the objects in this article.
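The meta-levels can be sketched as a chain of "describes" links, where the level of an object is one more than the level of the thing it describes. The class `Item`, its fields, and the example names are assumptions for illustration and are not part of the information structure itself.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Item:
    """A piece of reality or a description of another item (hypothetical)."""
    name: str
    describes: Optional["Item"] = None  # None means the item is part of reality

    def meta_level(self) -> int:
        """Level 0 is reality; each layer of description adds one level."""
        return 0 if self.describes is None else 1 + self.describes.meta_level()


# Made-up example chain.
reality = Item("Mercury in fish")
variable = Item("Variable: mercury concentration in fish", describes=reality)
method = Item("Method: how to define a variable", describes=variable)

for item in (reality, variable, method):
    print(item.meta_level(), item.name)
```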

Discussion

There are several formal and informal methods for supporting decision-making. Since the 1980s, the information structure of a risk assessment has consisted of four distinct parts: hazard identification, exposure assessment, dose-response assessment, and risk characterisation[9]. Decision analysis helps in explicating the decision under consideration and the value judgements related to it, and in finding optimal solutions, assuming rationality[7]. U.S.EPA has published guidance for increasing stakeholder involvement and public participation[10][11]. However, we have not yet seen an attempt to develop a systematic method that covers the whole assessment work, fulfils the open participation and other criteria presented in the Background, and offers a platform that is both methodologically coherent and practically operational. The information structure presented here attempts to offer such a platform.

The information structure contains all parts of the assessment, including both the work and the report as product. All parts are described as distinct objects in a modular fashion. This approach makes it possible to evaluate, criticise, and improve each part separately. Thus, an update of one object during the work has a well-defined impact on a limited number of other objects, and this reduces the management problems of the process.
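The claim that an update affects only a limited, well-defined set of other objects can be sketched with a small dependency graph. The object names and the `used_by` mapping are hypothetical; a real assessment would derive these links from the objects' own definitions.

```python
from collections import deque

# Hypothetical links: object -> objects that use it directly.
used_by = {
    "Emission": ["Exposure"],
    "Exposure": ["Health effect"],
    "Dose-response": ["Health effect"],
    "Health effect": ["Assessment"],
    "Assessment": [],
}


def affected_by(updated):
    """Return all objects downstream of `updated`, in breadth-first order."""
    affected = []
    queue = deque(used_by.get(updated, []))
    while queue:
        current = queue.popleft()
        if current in affected:
            continue  # already visited through another path
        affected.append(current)
        queue.extend(used_by.get(current, []))
    return affected


print(affected_by("Dose-response"))
print(affected_by("Emission"))
```

Updating "Dose-response" touches only "Health effect" and "Assessment", while "Emission" and "Exposure" can be left untouched.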

All relevant issues have a clear place in the assessment structure. This makes it easier for participants to see how they can contribute. On the other hand, if a participant already has a particular issue in mind, it might take extra work to find the right place in the assessment structure. But in a traditional assessment with public participation, this workload goes to an assessor. Organising freely structured contributions is a non-trivial task in a topical assessment, which may receive hundreds of comments.

We have also tested this information structure in practice. It has been used in environmental health assessments of, e.g., metal emission risks[12], drinking water[13], and pollutants in fish[14]. So far the structures used have been plausible in practical work. Not all kinds of objects have been used extensively, and some practical needs for developing the structures have been identified. Thus, although the information structure is already usable, its development is likely to continue. In particular, the PSSP attribute Performance has not yet found its final place in the information structure. However, the work and comments so far have convinced us that performance can be added as a part of the information structure, and that it will be very useful in guiding the evaluation of an assessment before, during, and after the work.

The assessments using the information structure have been performed on a website where each object is a web page (http://en.opasnet.org). The website runs on MediaWiki, the same software that Wikipedia uses. MediaWiki is specifically designed for open participation and collaborative production of written material, and it has proved very useful in the assessment work as well. Technical functionalities are being added to the website to facilitate work also by people who have no training in the method or the tools.

Conclusions

In summary, we developed a general information structure for assessments. It complies with the requirements of open participation, falsifiability, and reusability. It is applicable in practical assessment work, and there are Internet tools available to make the use of the structure technically feasible.

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

JT and MP jointly scoped the article and developed the main parts of the information structure. AK participated in the discussions, evaluated the ideas, demanded solid reasoning, and developed especially the structure of a method. JT wrote the manuscript based on material produced by all authors. All authors participated in revising the text and conclusions. All authors accepted the final version of the manuscript.

Acknowledgements

This article is a collaboration between several EU-funded and other projects: Beneris (Food-CT-2006-022936), Intarese (018385-2), Finmerac (Tekes grant 40248/06), and the Centre for Environmental Health Risk Analysis (the Academy of Finland, grants 53307, 111775, and 108571; Tekes grant 40715/01). The unified resource name (URN) of this article is URN:NBN:fi-fe200806131553.

References

1. Briggs et al. (2008). Manuscript.

2. Tuomisto et al. (2008). Open participation in the environmental health risk assessment. Submitted.

3. Pohjola and Tuomisto (2008). Purpose determines the structure of environmental health assessments. Manuscript.

4. Pohjola et al. (2008). PSSP - A top-level event/substance ontology and its application in environmental health assessment. Manuscript.

5. Popper, Karl R. (1935). Logik der Forschung. Julius Springer Verlag. Reprinted in English, Routledge, London, 2004.

6. Pearl, Judea (2000). Causality: Models, Reasoning, and Inference. Cambridge University Press. ISBN 0-521-77362-8.

7. Raiffa, Howard (1997). Decision Analysis: Introductory Readings on Choices Under Uncertainty. McGraw Hill. ISBN 0-07-052579-X.

8. Eemeren, F.H. van, & Grootendorst, R. (2004). A systematic theory of argumentation: The pragma-dialectical approach. Cambridge: Cambridge University Press.

9. Red book 1983.

10. U.S.EPA (2001). Stakeholder involvement & public participation at the U.S.EPA. Lessons learned, barriers & innovative approaches. EPA-100-R-00-040, U.S.EPA, Washington D.C.

11. U.S.EPA (2003). Public Involvement Policy of the U.S. Environmental Protection Agency. EPA 233-B-03-002, Washington D.C. http://www.epa.gov/policy/2003/policy2003.pdf

12. Kollanus et al. (2008). Health impacts of metal emissions from a metal smelter in Southern Finland. Manuscript.

13. Meriläinen P., Lehtola M., Grellier J., Iszatt N., Nieuwenhuijsen M., Vartiainen T., Tuomisto J.T. (2008). Developing a conceptual model for risk-benefit analysis of disinfection by-products and microbes in drinking water. Manuscript.

14. Leino O., Karjalainen A., Tuomisto J.T. (2008). Comparison of methyl mercury and omega-3 in fish on children's mental development. Manuscript.

Figures

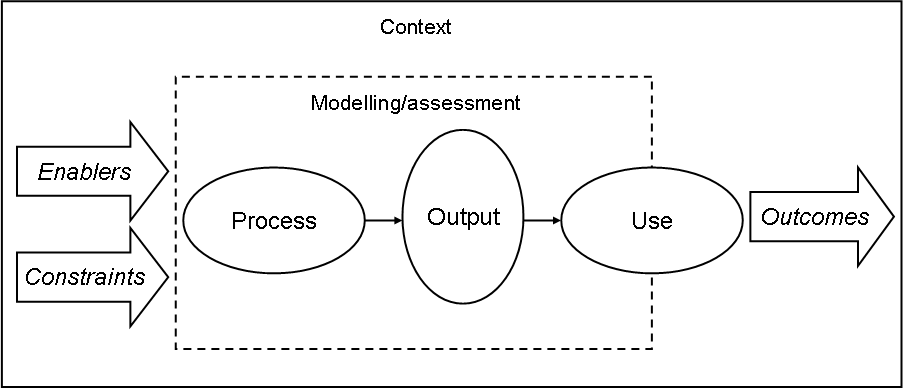

Figure 1. An assessment consists of the making of it and an end product, usually a report. The assessment is bound and structured by its context. Main parts of the context are the scientific context (methods and paradigms available), the policy context (the decision situation for which the information is needed), and the use process (the actual decision-making process where the assessment report is used).

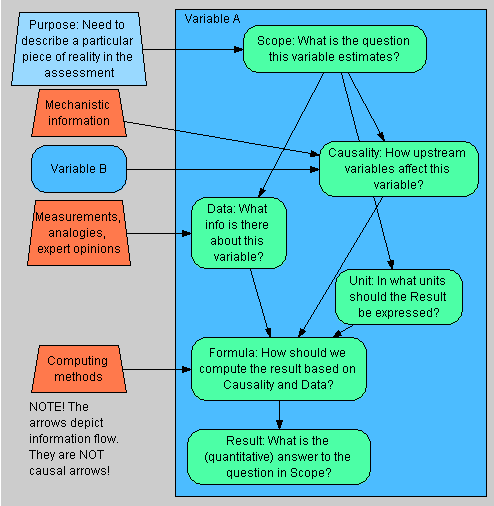

Figure 2. Subattributes of a variable and the information flow between them.

Figure 3. Information and work flow between different objects and abstraction levels of an assessment. The green node is the reality that is being described, blue nodes are descriptions of some kind, and purple nodes are work processes. Higher-level objects are those that describe or change the nodes one level below. Note that reality and usually the observation work (i.e., basic science) are outside the assessment domain.

Tables

Table 1. The attributes of a formally structured object in open assessment.

Table 2. The attributes of an assessment product (typically a report).

Table 3. The attributes of a variable.

Table 4. The attributes of a method.

Table 5. The attributes of a class.

Additional files

None.