Piloting: Finding and evaluating data: Difference between revisions


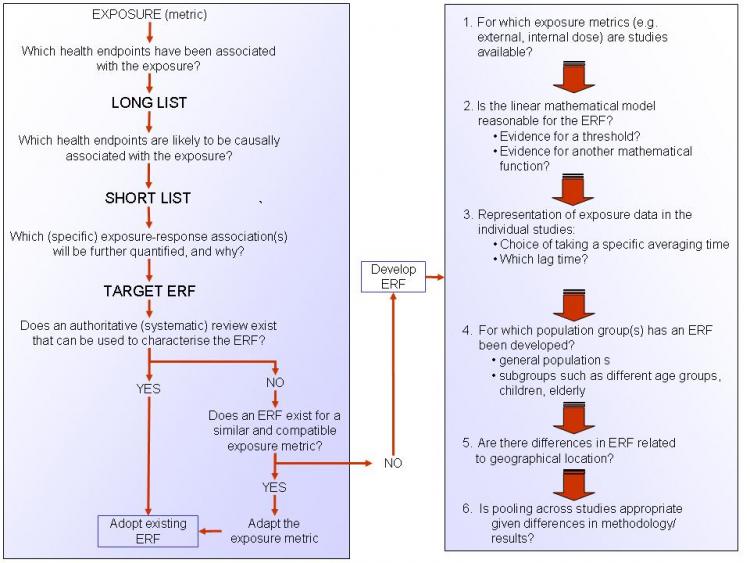


Revision as of 11:39, 19 August 2014

Finding and evaluating data

Good data are clearly essential for any assessment. Rarely, however, are data initially collected or produced for the purpose of assessment - nor can many assessments wait until new, primary data are generated by purpose-designed studies or surveys. Instead, in most cases, assessments have to make use of data that already exist, and to supplement or enhance these as necessary through the use of modelling or estimation techniques.

Conducting a data review

Assessments are consequently servants of the available data, and have to be designed to make the best possible use of what exists. A vital purpose of feasibility testing is thus to review and evaluate the available data. This is not a question of simply applying some predefined criteria and deciding whether or not the data meet these requirements. The intention must be to identify what data do exist, and determine what can be done with them, and what resources might be needed to make this possible.

To ensure that the review is thorough, but does not lead us too far away from our initial purpose, it is therefore crucial to start by defining the key data we need and the criteria that they must satisfy (e.g. in terms of geographic coverage and resolution, timeframe, cost). Data sources should be evaluated against this list, and any deficiencies noted. In addition, however, possibilities offered by these data should also be identified, and if these imply other data needs (e.g. for the purpose of validation or to help make good their deficiencies), then these need to be added into the list and followed up. In this way a detailed log of the review can be maintained, which can be fed into the process of devising and discussing the assessment protocol. Examples, from case studies carried out during the development of this Toolbox, are given via the links below.
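The review log described above can be sketched in a few lines of Python. This is only an illustrative outline: the class names, fields, thresholds and the two example data sources are hypothetical and are not taken from the Toolkit or its case studies.

```python
from dataclasses import dataclass


@dataclass
class DataSource:
    """A candidate data source (all fields are illustrative assumptions)."""
    name: str
    coverage: str          # e.g. "EU" or "national"
    resolution_km: float   # spatial resolution of the data
    first_year: int
    last_year: int
    cost_eur: float


@dataclass
class Criteria:
    """The key requirements defined at the start of the review."""
    coverage: str
    max_resolution_km: float
    first_year: int
    last_year: int
    max_cost_eur: float


def review(source, criteria):
    """Return the list of criteria that the source fails to meet."""
    deficiencies = []
    if source.coverage != criteria.coverage:
        deficiencies.append("coverage")
    if source.resolution_km > criteria.max_resolution_km:
        deficiencies.append("resolution")
    if source.first_year > criteria.first_year or source.last_year < criteria.last_year:
        deficiencies.append("timeframe")
    if source.cost_eur > criteria.max_cost_eur:
        deficiencies.append("cost")
    return deficiencies


# Hypothetical requirements and sources, for illustration only.
criteria = Criteria("EU", 1.0, 2000, 2010, 5000.0)
sources = [
    DataSource("land_cover_grid", "EU", 0.1, 1990, 2012, 0.0),
    DataSource("city_air_monitors", "national", 5.0, 2005, 2010, 0.0),
]

# The log maps each source to its noted deficiencies.
review_log = {s.name: review(s, criteria) for s in sources}
```

A log of this kind could be extended with free-text notes on the possibilities each source offers and on any further data needs it implies, so that these can be followed up as the text suggests.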

The links in the panel to the left provide access to useful sources, including inventories and metadatabases summarising relevant data in the EU.

Environmental and exposure data

Information on the exposures of the population to the environmental hazards of concern is essential in any environmental health impact assessment. Ideally, this would come from direct measurement or monitoring of the population, either using biomarkers or by personal monitoring. Unfortunately, this is rarely feasible, both because of the lack (and cost) of such monitoring, and because most assessments are concerned with situations that have not yet happened, or with past conditions which can no longer be directly observed. In the absence of such data, recourse is therefore often made to environmental monitoring data. Nevertheless, albeit to a lesser degree, these suffer from many of the same limitations: namely the sparseness of the data and their restriction only to existing and some past conditions. As a consequence most assessments ultimately rely on some form of modelling to estimate exposures under the various scenarios of interest.

None of this means that direct measurements of exposure, dose or environmental conditions (e.g. concentrations) are not valuable for integrated environmental health impact assessments. In most cases, indeed, they are vital - either as inputs to models, or to validate models based on other predictors. Such data, however, are rarely sufficient. Most assessments also have to call on a range of other forms of environmental data, in order to estimate exposures and to model the way in which changes propagate through the causal chain. Thus, data are typically required on a wide range of environmental factors, such as the topography, weather, soil, hydrology and land cover.

Traditionally, most environmental data were obtained by ground-based field surveys. With the development of methods of remote sensing, however, airborne and satellite observations have become far more important, and these now provide the primary source for many types of environmental data. As survey and monitoring technologies have advanced, so the range, size and complexity of environmental databases have grown. Today, therefore, the main challenge is to find relevant data from the myriad of sources available, and to extract and combine them in an efficient way.

In Europe, the European Environment Agency provides an invaluable source of many data. At national level, environmental ministries and their associated agencies also hold, or can give access to, a wide range of data. Another valuable source, specific to environmental health, is the ExpoPlatform. Factsheets for many of these data (along with selected data sets) are provided in the Environmental data section of the Toolkit, and these give links to many of the data sources.

Representivity of environmental monitoring networks

Routine monitoring networks provide a valuable source of data on environmental conditions, which can be used in integrated assessments. Their ability truly to represent the full range of environmental conditions (or human exposures to them) is nevertheless limited, for the environment itself is highly variable, over both space and time, while monitoring is costly and technically constrained, so networks are limited both in their extent and what they measure. These limitations need to be borne in mind whenever monitoring data are used for integrated assessments - whether as a basis for exposure assessment in their own right, as inputs to models, or as a basis for model validation. By the same token, considerable care is needed in designing monitoring systems or measurement campaigns for the purpose of assessment.

Estimating representivity

Determining the representivity of monitoring networks is not easy. The concept of representivity is somewhat vague (and there is no agreement even about what to call it!), so relevant measures are not always obvious (see references below). Simple statistical measures are available to estimate sample sizes. All such analyses, however, depend on assumptions about the underlying statistical distributions of the properties being measured - and these can only be deduced from the data provided by the existing monitoring network. Inadequacies in the sample design of this network inevitably mean that these assumptions are uncertain. Unless additional monitoring can be carried out, at a sampling density sufficient to detect any unseen regularities or local variabilities in the environment, the estimates of sample requirements are therefore likely to be unreliable (and in many cases to under-estimate the true sampling density that is required, or to fail to identify the most effective sampling configuration).
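As an illustration of the kind of simple statistical measure referred to above, the following sketch estimates the number of samples needed to estimate a mean to within a chosen margin of error. It assumes independent, approximately normally distributed measurements with a known standard deviation; the function name and the example values are hypothetical, and this is not the method used by any particular tool.

```python
import math
from statistics import NormalDist


def sample_size_for_mean(sd, margin, confidence=0.95):
    """Samples needed so the confidence interval half-width is at most `margin`.

    Uses n = (z * sd / margin)^2, which assumes independent, roughly normal
    measurements and a known standard deviation `sd`.
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # two-sided critical value
    return math.ceil((z * sd / margin) ** 2)


# Hypothetical values: standard deviation as estimated from an existing
# network, and the same calculation if the true variability is twice as large.
n_assumed = sample_size_for_mean(sd=8.0, margin=2.0)
n_if_sd_doubled = sample_size_for_mean(sd=16.0, margin=2.0)
```

Because the required sample size grows with the square of the standard deviation, a network that misses local variability, and so under-estimates the standard deviation by half, would under-estimate the required number of samples by a factor of about four.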

Nor, in the case of assessment, is the need usually just to minimise the standard errors of the estimates. Instead, monitoring may have to ensure that:

hotspots and vulnerable population sub-groups are correctly identified;

uncertainties are reasonably equal across different parts of the study area and population;

a strong correlation exists between predicted and actual conditions across the whole study area/population;

monitoring costs are kept within necessary limits.

This implies the use of a number of different measures, reflecting different design criteria, to estimate representivity. Optimising them all is rarely possible, for the different criteria are to some extent in conflict, so trade-offs have to be made between them. Representivity is not an absolute, therefore, but depends on the needs of the study, and can only be judged in terms of context.

An illustration of many of these considerations, and an example of how different network designs can affect estimates of population exposures to environmental pollution is given in the attached document, on the representivity of air pollution monitoring networks. This was developed through a simulation exercise, using the SIENA urban simulator. A detailed report can be downloaded below.

Tools for sampling design

A number of tools to help design monitoring systems and sampling strategies exist, including the Visual Sample Plan (VSP), developed by Battelle Pacific Northwest National Laboratory. This provides an interactive and highly flexible, map-based system for estimating sample size and selecting between different sampling configurations, for a wide range of different user-defined purposes, including estimation of the sample mean, trend detection and exceedance analysis.

Exposure-response functions

Exposure-response functions (ERFs) describe quantitatively how much a certain health effect increases when the exposure to a certain stressor increases. The ERF needs to relate specifically to the exposure metric and health endpoint used in the assessment.
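To make the idea concrete, the sketch below applies an ERF expressed as a relative risk per fixed increment of exposure. It assumes a log-linear form, which is common in practice but is not prescribed by the text above, and it assumes the whole population experiences the same change in exposure. The function names and all numerical values are hypothetical and serve only as an illustration.

```python
import math


def relative_risk(rr_per_increment, increment, delta_exposure):
    """Relative risk for a change in exposure, assuming a log-linear ERF."""
    beta = math.log(rr_per_increment) / increment  # slope per unit of exposure
    return math.exp(beta * delta_exposure)


def attributable_cases(rr_per_increment, increment, delta_exposure,
                       baseline_rate, population):
    """Cases attributable to `delta_exposure` in a uniformly exposed population."""
    rr = relative_risk(rr_per_increment, increment, delta_exposure)
    attributable_fraction = (rr - 1.0) / rr
    return attributable_fraction * baseline_rate * population


# Hypothetical inputs: RR of 1.06 per 10 units of exposure, a 5-unit change,
# a baseline rate of 1 case per 100 people per year, 100,000 people.
cases = attributable_cases(1.06, 10.0, 5.0, 0.01, 100_000)
```

The exposure metric (the units of `increment` and `delta_exposure`) and the health endpoint (the one to which `baseline_rate` refers) must match those for which the ERF was derived, as noted above.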

The ERF can be derived in a number of ways. Where a published and up-to-date ERF is available from an authoritative organisation, such as the World Health Organization, this should preferably be used. If not, a systematic review (including if appropriate a meta-analysis) should ideally be conducted to derive an ERF for the key impact pathways. Where this, too, is not possible, an alternative is to make use of the formal methods of an expert panel. Systematic reviews and expert panels are both time-consuming to design and run, so modified, less time-consuming versions may be more appropriate. Options include:

adopting the ERF used in previous HIAs;

taking results from an already published and good-quality meta-analysis;

using results from a key multi-centre study.

If these informal methods are used it is advisable to consult expert(s) in the relevant policy field (not a formal expert panel).
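Where a meta-analysis is used to derive an ERF, the pooling step can be sketched as follows. This is a minimal fixed-effect, inverse-variance example, one common approach among several and not necessarily the one recommended in the protocol; the function name and the study values are hypothetical.

```python
import math


def pool_fixed_effect(estimates, standard_errors):
    """Inverse-variance weighted mean of study estimates and its standard error.

    `estimates` are per-study ERF slopes (e.g. log relative risk per unit of
    exposure); each study is weighted by 1 / SE^2.
    """
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * b for w, b in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical slopes from two studies with equal precision.
pooled, pooled_se = pool_fixed_effect([0.004, 0.006], [0.001, 0.001])
```

A fixed-effect model assumes that all studies estimate the same underlying slope. If the studies differ substantially in population or exposure conditions, a random-effects model may be more appropriate, which is one reason for consulting experts when using such shortcuts.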

These methods can be applied to animal and human studies. However, if applied to animal studies, extrapolation to humans is necessary. In this case, a qualitative assessment should first be carried out to evaluate whether an observed effect in animal studies applies to humans.

A protocol, giving guidelines on how to develop ERFs, is available via the link under See also, below. Figure 1 (below) provides a summary of this protocol and presents a checklist of questions that should be considered in order to derive an ERF.

An ERF data set, providing suggested exposure-response functions for common exposures and health endpoints, is also included in the Data section of the Toolkit.

Figure 1. A checklist for deriving an ERF

References:

Morvan, X., Saby, N.P.A., Arrouays, D., Le Bas, C., Jones, R.J.A., Verheijen, F.G.A., Bellamy, P.H., Stephens, M., and Kibblewhite, M.G. 2008 Soil monitoring in Europe: a review of existing systems and requirements for harmonisation. Science of the Total Environment 391, 1-12.