Guidance and methods for indicator selection and specification


This document attempts to explain what is meant by the term '''indicator''' in the context of Intarese, defines what indicators are needed for, and describes how indicators can be used in integrated risk assessments. A related [[:media:Indicator Guidance May07_v2.ppt | Powerpoint presentation]] exists. This document also harmonizes with the Intarese Appraisal Framework report.

KTL (E. Kunseler, M. Pohjola and J. Tuomisto)

MNP (L. van Bree, M. van der Hoek and K. van Velze)

{{Secondary content|
* [[Help:Variable]]
* [[Help:Risk assessment structure]]
* [[Help:Scoping a risk assessment]]
* [[Help:Drawing a causal diagram]]
}}

==Summary==

* The full chain approach (causal network) consists of ''variables''. Variables that are of special interest and will be reported are called ''indicators''.
* There are several types of indicators used by different organisations. Intarese indicators differ from previous definitions and practices; e.g. WHO indicators do not emphasize causality and are thus not applicable as Intarese indicators.
* The assessment process has several phases, which can be described as follows. Note that the process is iterative and you may need to return to previous phases several times.
** Define the purpose of the assessment (e.g. a key question).
** Frame the issue: what are the boundaries and settings of the assessment?
** Define the variables of special interest, i.e. indicators, and other important (i.e. key) variables.
** Draft a causal chain that covers your framing.
** Use the clairvoyant test, the causality test and the unit test to find development needs in your causal chain.
** Quantify variables and links as much as needed and possible.
* The '''clairvoyant test''' determines the ''clarity'' of a variable. When a question is stated so precisely that a putative clairvoyant could give an exact and unambiguous answer, the question is said to pass the test.
* The '''causality test''' determines the ''nature of the relation'' between two variables. If you alter the value of a particular variable (all else being equal), the variables downstream (i.e. the variables that are expected to be causally affected by the tested variable) should change.
* The '''unit test''' determines the ''coherence of the variable definitions'' throughout the network. The functions describing the links between the variables must result in coherent units for the downstream variables.
* The goodness of a variable is described by the following properties: informativeness, calibration, relevance, usability, acceptability of premises, and acceptability of the specification.
* All variables should have the same basic attributes: 1) name, 2) scope, 3) description, 4) definition, 5) unit, 6) result, and 7) discussion.
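The seven basic attributes listed above can be pictured as one simple record per variable. The sketch below is only an illustration, assuming a Python dataclass representation; the class name `Variable`, the field types, the helper `missing_attributes` and the example scope text are hypothetical and not part of any Intarese tool.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class Variable:
    """Hypothetical container for the seven basic attributes of a variable."""
    name: str
    scope: str = ""
    description: str = ""
    definition: str = ""
    unit: str = ""
    result: Optional[object] = None  # e.g. a number or a probability distribution
    discussion: str = ""


def missing_attributes(var: Variable) -> list[str]:
    """Return the names of attributes that are still empty (None or blank string)."""
    return [
        f.name
        for f in fields(var)
        if getattr(var, f.name) is None or getattr(var, f.name) == ""
    ]


pm25 = Variable(
    name="Daily average PM2.5 concentration in Kuopio",
    scope="Daily averages of PM2.5 in ambient air in Kuopio",  # hypothetical scope text
    unit="µg/m3",
)
print(missing_attributes(pm25))  # ['description', 'definition', 'result', 'discussion']
```

A check like this only tells which attributes are still unfilled; whether the filled-in scope is clear enough is a matter for the clairvoyant test.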

==Introduction==

At this stage of the Intarese project (18 months, May 2007), the integrated risk assessment methodology is starting to take form and is about to become ready for application in policy assessment cases. Within subproject 3, case studies have been selected and protocols for case study implementation are being developed. Scoping documents framing the environmental health issues have been prepared, and the full-chain frameworks and first-draft assessment protocols needed for the policy assessments of the various case studies are now being developed accordingly.

Indicator selection and specification can be used as a bridge from the issue framing phase to actually carrying out the assessment. This guidance document provides information on selecting and specifying indicators and variables, and on how to proceed from issue framing to the full-chain causal network description of the phenomena being assessed.

There are several different interpretations of the term indicator and several different approaches to using indicators. This document aims to clarify the meaning and use of indicators as applicable in the context of integrated risk assessment. This guidance emphasizes causality in the full-chain approach and the applicability of indicators to the needs of each particular risk assessment case. In the subsequent sections, the term indicator is clarified in the context of integrated risk assessment and an Intarese approach to indicator selection and specification is suggested.

This indicator guidance document closely harmonizes with the Intarese Appraisal Framework (AF) report. The AF report concludes that indicators may serve various goals and aims, as listed below:

#Policy development and priority-setting
#Health impact assessment and monitoring
#Policy implementation or economic consequence assessment
#Public information and awareness raising, or risk perception

This document gives practical guidance for developing variables, indicators and indices; each work package (WP3.1-3.7) can subsequently develop its own set when developing its specific assessment protocols.

===Integrated risk assessment===

Before discussing indicators and their role in integrated risk assessment any further, it is necessary to consider some general features of integrated risk assessment. The following text in ''italics'' is a slightly adapted excerpt from the guidance document Scoping for policy assessments by David Briggs <ref>[[Scoping for policy assessments (Intarese method)]]</ref>:

''Integrated risk assessment, as applied in the Intarese project, can be defined as the assessment of risks to human health from environmental stressors based on a whole system approach. It thus endeavours to take account of all the main factors, links, effects and impacts relating to a defined issue or problem, and is deliberately more inclusive (less reductionist) than most traditional risk assessment procedures. Key characteristics of integrated assessment are:''

#''It is designed to assess complex policy-related issues and problems, in a more comprehensive and inclusive manner than that usually adopted by traditional risk assessment methods''
#''It takes a '''full-chain approach''' – i.e. it explicitly attempts to define and assess all the important links between source and impact, in order to allow the determinants and consequences of risk to be tracked in either direction through the system (from source to impact, or from impact back to source)''
#''It takes account of the additive, interactive and synergistic effects within this chain and uses assessment methods that allow these to be represented in a consistent and coherent way (i.e. without double-counting or exclusion of significant effects)''
#''It presents results of the assessment as a linked set of policy-relevant indicators''
#''It makes the best possible use of the available data and knowledge, whilst recognising the gaps and uncertainties that exist; it presents information on these uncertainties at all points in the chain''

Building on the above, some further statements about the essence of integrated assessment can be made:

*Integrated environmental health risk assessment is a process whose product is a description of a certain piece of reality.
*The descriptions are produced according to the intended uses (purposes) of the product.
*The risk assessment product describes all the relevant phenomena in relation to the chosen endpoints, and their relations, as a causal network.
*The risk assessment product combines value judgements with the descriptions of physical phenomena.
*The basic building block of the description is a '''variable''', i.e. everything is described as variables.
*All variables in a causal network description must be causally related to the endpoints of the assessment.
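The last statement can be checked mechanically once the causal network is written down as a directed graph: every variable needs a downstream path to at least one endpoint. The sketch below assumes the network is stored as a Python dict that maps each variable to its direct downstream variables; the variable names are hypothetical placeholders, not a prescribed Intarese network.

```python
from collections import deque

# Hypothetical causal network: each key maps to its direct downstream variables.
network = {
    "traffic volume": ["PM2.5 emissions"],
    "PM2.5 emissions": ["PM2.5 concentration"],
    "PM2.5 concentration": ["PM2.5 exposure"],
    "PM2.5 exposure": ["mortality"],
    "mortality": [],
    "noise level": [],  # deliberately unconnected, to show a failing variable
}
endpoints = {"mortality"}


def reaches_endpoint(graph: dict[str, list[str]], start: str, ends: set[str]) -> bool:
    """Breadth-first search downstream from `start`; True if any endpoint is reached."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node in ends:
            return True
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return False


# Variables that are not causally related to any endpoint of the assessment.
unrelated = [v for v in network if not reaches_endpoint(network, v, endpoints)]
print(unrelated)  # ['noise level']
```

A variable flagged this way either falls outside the assessment scope or is missing a causal link that still needs to be described.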

===Terminology===

To make the following sections easier to follow, the main terms and their uses in this document are briefly explained here. Note that the meanings of the terms may overlap and the categories are not exclusive. For example, a description of a phenomenon, say mortality due to traffic PM<sub>2.5</sub>, can simultaneously belong to the categories of variable, endpoint variable and indicator in a particular assessment. See also [[Help:Variable]].

'''Variable'''

A variable is a description of a particular piece of reality. It can be a description of a physical phenomenon, e.g. the daily average PM<sub>2.5</sub> concentration in Kuopio, or a description of a value judgement, e.g. willingness to pay to avoid lung cancer. Variables (or rather their scopes) can be more general or more specific and can be hierarchically related, e.g. air pollution (a general variable) and the daily average PM<sub>2.5</sub> concentration in Kuopio (a specific variable).

'''Endpoint variable'''

Endpoint variables are variables that describe phenomena which are outcomes of the assessed causal network, i.e. there are no variables downstream of an endpoint variable within the scope of the assessment. In practice, endpoint variables are most often also chosen as indicators.

'''Intermediate variable'''

All variables other than endpoint variables.
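Because endpoint and intermediate variables are defined purely by their position in the network, they can be read off the graph directly, whereas indicators are chosen separately. The sketch below is a hypothetical illustration, assuming the same dict-of-downstream-lists representation as a plain Python mapping; the function `classify` and the variable names are placeholders.

```python
# Hypothetical causal network: variable -> direct downstream variables.
# Every variable must appear as a key, endpoints with an empty list.
network = {
    "traffic PM2.5 emissions": ["traffic PM2.5 concentration"],
    "traffic PM2.5 concentration": ["exposure to traffic PM2.5"],
    "exposure to traffic PM2.5": ["mortality due to traffic PM2.5"],
    "mortality due to traffic PM2.5": [],
}


def classify(graph: dict[str, list[str]]) -> tuple[list[str], list[str]]:
    """Split variables into endpoint variables (no downstream variables
    within the assessment scope) and intermediate variables (all others)."""
    endpoint = [v for v, downstream in graph.items() if not downstream]
    intermediate = [v for v, downstream in graph.items() if downstream]
    return endpoint, intermediate


endpoint_vars, intermediate_vars = classify(network)
# Indicators are chosen by their communicative importance, not by position,
# so the set may include endpoint and intermediate variables alike.
indicators = {"mortality due to traffic PM2.5", "exposure to traffic PM2.5"}
print(endpoint_vars)                               # ['mortality due to traffic PM2.5']
print(sorted(indicators & set(intermediate_vars)))  # ['exposure to traffic PM2.5']
```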

'''Key variable'''

A key variable is a variable which is particularly important for carrying out the assessment successfully and/or for assessing the endpoints adequately.

'''Indicator'''

An indicator is a variable that is particularly important in relation to the interests of the intended users of the assessment output or other stakeholders. Indicators are used as a means of effectively communicating the assessment results. Communication here refers to conveying information about certain phenomena of interest to the intended target audience of the assessment output, but also to monitoring the status of certain phenomena, e.g. when evaluating the effectiveness of actions taken to influence those phenomena. In the context of integrated assessment, indicators can generally be considered as pieces of information that communicate the most essential aspects of a particular risk assessment to meet the needs of its intended uses. Indicators can be endpoint variables, but also any other variables located anywhere in the causal network.

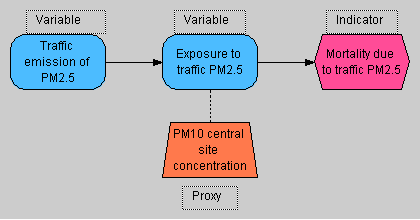

'''Proxy'''

The term indicator is sometimes also (mistakenly) used in the meaning of a proxy. Proxies are used as replacements for the actual objects of interest in a description when adequate information about the actual object of interest is not available. Proxies are indirect representations of the object of interest that usually have some identified correlation with it. At least within the context of integrated risk assessment as applied in Intarese, proxy and indicator have clearly different meanings and should not be confused with each other. The figure below attempts to clarify the difference between proxies and indicators:


[[Image:Indicators and proxies.PNG]] | |||

In the example, a proxy (PM<sub>10</sub> site concentration) is used to indirectly represent and replace the actual object of interest (exposure to traffic PM<sub>2.5</sub>). Mortality due to traffic PM<sub>2.5</sub> is identified as a variable of specific interest to be reported to the target audience, i.e. it is selected as an indicator. The other two nodes in the graph are considered ordinary variables. The graph above was made with Analytica; here is [[:media:Indicator guidance.ANA|the original Analytica file]].

==Different approaches to indicators==

As mentioned above, there are several different interpretations of indicators and ways to use them. The different approaches to indicators have been developed for particular situations with differing needs and may thus be built on very different underlying principles. Some existing approaches to indicators are applicable in the context of integrated risk assessment, whereas others do not adequately meet its needs.

In the next section, three existing approaches to indicators are presented as examples. Following that, a general classification of indicators is given. The general classification is then used to identify the types of indicators, and ways to use them, that are applicable in different integrated risk assessment cases.

===Examples of approaches===

'''World Health Organization (WHO)'''

One of the most recognized approaches to indicators is the one by the World Health Organization (WHO). The WHO approach is based on the DPSEEA model and emphasizes that each step of the model contains important phenomena that can be represented as indicators.

The WHO approach treats indicators as relatively independent objects: for example, exposure indicators are defined independently from the point of view of exposure assessment, and health impact indicators are defined independently from the point of view of estimating health impacts. Linkages between different indicators are recognized based on their relative locations within the DPSEEA model, but causality between individual indicators is not explicitly emphasized.

In the WHO approach, the indicator definitions are mainly intended as ''how to estimate this indicator?'' type guidance documents or work plans. The WHO approach can be considered an attempt to standardize or harmonize the assessment of different types of phenomena within the DPSEEA model.

'''EEA'''

The approach to indicators by the European Environment Agency (EEA) attempts to clarify the use of indicators in different situations and for different purposes. The EEA has developed a typology of indicators that can be applied to select the right types of indicator sets to meet the needs of the particular case at hand.

The EEA typology includes the following types of indicators:

*Descriptive indicators (Type A – What is happening to the environment and to humans?)
*Performance indicators (Type B – Does it matter?)
*Efficiency indicators (Type C – Are we improving?)
*Total welfare indicators (Type D – Are we on the whole better off?)

The EEA indicator typology is built on the need to use environmental indicators for three major purposes in policy-making:

#To supply information on environmental problems in order to enable policy-makers to value their seriousness
#To support policy development and priority setting by identifying key factors that cause pressure on the environment
#To monitor the effects of policy responses

The EEA approach considers indicators primarily as a means of communicating important information about the state of environmental and related phenomena to policy-makers.

'''MNP/RIVM'''

MNP/RIVM has also developed indicator typologies, which are also strongly present in the development of the integrated risk assessment method in Intarese. MNP/RIVM considers two sets of indicators:

# Weighing and appraisal indices (aggregated indicators for policy evaluation)
# Topic indicators (following the cause-effect DPSEEA/DPSIR chain)

The two sets by MNP/RIVM are grouped somewhat similarly to the EEA typology, representing certain kinds of phenomena to answer different kinds of environmental and health concerns. However, the MNP/RIVM indicator sets are defined more closely in relation to the audience to which each indicator set is intended to convey its messages.

In the MNP/RIVM approach, the different sets of indicators address different phenomena from different perspectives in order to convey messages about the state of affairs in the right forms to the right audiences. By combining the different sets of indicators, a complete view of the assessed phenomena can be created for weighing and appraisal, and to support decision-making in targeting actions at the assessed phenomena. As in the EEA approach, MNP/RIVM considers indicators primarily as a means of effective communication.

The MNP/RIVM ‘Weighing and Appraisal’ indices consider the overall integrated assessment, appraisal and decision-making processes using the following aggregation and summary indices, which are closely linked to EEA indicator types B, C and D:

#Policy deficit index
#Burden of disease index
#Economic consequence index
#Risk perception and acceptability index

The overall appraisal process may combine these four indices into a total outcome or welfare index, closely in harmony with the EEA type D indicator.

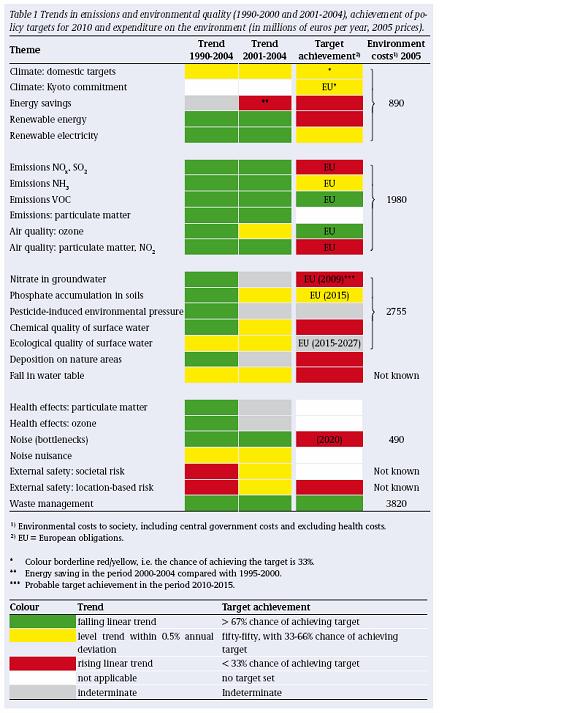

A policy deficit assessment, as part of the output of a comprehensive overall policy assessment, is defined as the assessment of the extent of the policy deficit, i.e. the degree to which stated policy goals are or are not met, and the degree to which standards and guidelines are reached or violated at present and under alternative scenarios. Policy deficit indices are therefore crucial to 1) recognize trends and 2) monitor target achievement. An example illustrating the use of such indices in integrated risk assessment and policy evaluation is provided in Appendix 1.

Policy deficit indicators according to the draft Appraisal Framework report can therefore be listed as follows:

#Distance to emission standards
#Distance to environmental quality standards
#Distance to exposure / intake / body burden standards
#Distance to health risk / disease burden reduction targets
#Weighing risk perception burden and degree of risk acceptance
#Weighing monetary burden / cost-effectiveness arguments
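Each of the distance-type indicators above compares an assessed value with a standard or target, both at present and under alternative scenarios. The sketch below is a minimal illustration, assuming the distance is expressed as the relative difference between the assessed value and the standard (an absolute difference would be an equally valid choice); all variable names, standards and numbers are invented placeholders, not real monitoring data or legal limits.

```python
# Hypothetical illustration: values and standards are invented placeholders.
standards = {
    "annual mean PM2.5 concentration (µg/m3)": 25.0,
    "traffic PM2.5 emissions (t/a)": 100.0,
}
results = {
    "present": {
        "annual mean PM2.5 concentration (µg/m3)": 30.0,
        "traffic PM2.5 emissions (t/a)": 80.0,
    },
    "policy scenario": {
        "annual mean PM2.5 concentration (µg/m3)": 22.0,
        "traffic PM2.5 emissions (t/a)": 60.0,
    },
}


def distance_to_standard(value: float, standard: float) -> float:
    """Relative distance: positive means the standard is exceeded by that fraction,
    zero or negative means the value stays at or below the standard."""
    return (value - standard) / standard


for situation, values in results.items():
    for variable, standard in standards.items():
        d = distance_to_standard(values[variable], standard)
        status = "violated" if d > 0 else "met"
        print(f"{situation}: {variable}: {d:+.0%} ({status})")
```

With these placeholder numbers the concentration standard is violated at present (+20%) but met under the policy scenario (-12%), which is the kind of contrast a policy deficit index is meant to expose.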

===General classification of indicators===

As seen above, there are many ways to classify indicators; the three approaches presented briefly above are only examples among several others. To clarify the meanings and uses of indicators, a general classification is given below that attempts to fit the different approaches into one framework by considering three aspects of indicators: topic, causality and reference.

'''Topic-based classification'''

The topic describes the scientific discipline to which the indicator content mostly belongs. For example, the RIVM classification mostly follows this thinking, although the ''Policy-deficit'' indicators do not fall within this classification but within the reference-based classification explained below. Below are some commonly applied topics suitable for classifying indicator types in integrated risk assessments:

* Source emission indicators
* Exposure indicators
* Health indicators
* Economic indicators
* Total outcome indicators (may combine source emission, exposure, health and economic issues, e.g. EEA type D indicators)
* Perception indicators (including equity and other ethical issues)
* Ecological indicators (not fully covered in Intarese, as they are outside the scope of the project)

The indicators in the topic-based classification can describe endpoints of assessments or intermediate phenomena. The list above is by no means exhaustive but rather provides examples of possible topics. More detailed descriptions of indicators according to the topic classes are provided in Appendix 1.

'''Causality-based classification'''

Many currently existing and applied approaches to indicators consider and describe independent pieces of information without the context of a causal chain (or full-chain approach). WHO indicators are well-known examples of this. The causality issue is also addressed further in the next classification. In integrated risk assessment, causality must be emphasized in all variables, including indicators.

'''Reference-based classification'''

The reference is something that the indicator is compared to, and this comparison creates the actual essence of the indicator. The classification according to the reference is independent of the topic-based classification. The EEA typology mainly follows the reference-based classification, except for the total welfare indicators, which fall into the topic-based classification.

{| {{prettytable}}
!Type of indicator
!Point of reference
!Examples of use
!Causality addressed?
|-----
|Descriptive indicators
|Not explicitly compared to anything.
|
*EEA type A indicators
*Burden of disease
*WHO indicators are also often of this type.
|Possibly, often not
|-----
|Performance indicators
|Some predefined policy target
|
*EEA type B indicators
*Policy deficit indicators in RIVM indicator sets
|Possibly
|-----
|Efficiency indicators
|Compared with the activity or service that causes the impact.
|
*EEA type C indicators
*Cost-effectiveness analysis
|Yes
|-----
|Scenario indicators{{reslink|classification}}
|Some predefined policy action, usually a policy scenario compared with business as usual.
|
*Cost-benefit analysis
|Yes
|}

The reference-based classification also covers indicators that can describe endpoints or intermediate phenomena in the causal network of a particular assessment. Again, the above list of reference classes is not exhaustive but describes some commonly used indicator types in environmental health. The indicator types according to these classes are described in more detail in Appendix 1.
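To make the reference classes concrete, the sketch below derives one indicator of each type in the table from the same underlying variable. It is a hypothetical illustration: the variable, the policy target, the activity measure and all numbers are placeholders, and the point is only that changing the reference changes what the indicator says.

```python
# Hypothetical numbers for one variable, "deaths attributable to traffic PM2.5 per year".
bau_deaths = 120.0     # business-as-usual result
policy_deaths = 90.0   # result under a policy scenario
policy_target = 100.0  # predefined policy target for the same variable
vehicle_km = 3.0e9     # activity causing the impact (vehicle-kilometres per year)

indicators = {
    # Descriptive: not explicitly compared with anything.
    "descriptive": bau_deaths,
    # Performance: compared with a predefined policy target (positive = target missed).
    "performance": bau_deaths - policy_target,
    # Efficiency: compared with the activity that causes the impact.
    "efficiency (per 1e9 vehicle-km)": bau_deaths / (vehicle_km / 1e9),
    # Scenario: policy scenario compared with business as usual.
    "scenario (policy - BAU)": policy_deaths - bau_deaths,
}
for kind, value in indicators.items():
    print(f"{kind}: {value:g}")
```

Note that the efficiency and scenario indicators only make sense if the causal link from the activity (or the policy action) to the variable is described, which matches the "Causality addressed?" column of the table.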

===Suggested Intarese approach to indicators===

In integrated risk assessment as applied in Intarese, the full-chain approach is an integral part of the method, and therefore all Intarese indicators should reflect causal connections to relevant variables. According to the full-chain approach, all variables within an integrated risk assessment, and thus also indicators, must be causally related to the endpoints of the assessment. Independently defined indicators, such as WHO indicators and other non-causally defined indicators, are thus not applicable as building blocks of integrated risk assessment in Intarese. In principle, all types of indicators in the classifications above can be used as applicable, as long as causality is explicitly addressed. The indicator sets may be built on either the topic-based or the reference-based classification, as is practical in each particular case. An example illustrating the use of the general classification of indicators in an integrated risk assessment case is provided in Appendix 2.

The communicative needs of an assessment fundamentally define the suitable types of indicators for it. Therefore, the right set(s) of indicators can vary significantly between assessments. Whether indicators are selected according to the topic-based or the reference-based classification, the set(s) of indicators should in any case represent:

#The use purpose of the assessment
#The target audience for communicating the outputs of the assessment
#The importance of the indicators in relation to the assessment and its use

Indicators can be helpful in constructing and carrying out an integrated risk assessment case. They can be used to target assessment efforts at the most relevant aspects of the assessed phenomena, especially with regard to the purpose and the targeted users or audiences of the assessment. Indicators can also serve as the ''backbone'' of the assessment when working out how to proceed from issue framing to the final output of the assessment, the causal network description of the assessed phenomena.

==Carrying out integrated risk assessments==

The Intarese approach to risk assessment emphasizes the creation of causal linkages between determinants and consequences in the integrated assessment process. The full-chain approach includes interconnected variables and indicators as the leading components of the full-chain description. The variables in the full-chain description should cover the whole source-impact chain.

The output of an integrated risk assessment is a causal network description of the relevant phenomena related directly or indirectly to the endpoints of the assessment, in accordance with the purpose of the assessment. The final description should thus:

*Address all the relevant issues as variables
*Describe the causal relations between the variables
*Explain how the result estimates of the variables are derived
*Report the variables of greatest interest as indicators

The greatest improvement, and at the same time the greatest challenge, of the full-chain approach is the explicit emphasis on causality throughout the source-impact chain. Although it may often be very difficult to describe causal relations exactly, e.g. in the form of mathematical formulae, the causalities should not be neglected. Coherent causal network descriptions that cover whole source-impact chains make it possible to understand the phenomena and to deal with changes that may take place in any variable of the network.
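Once links are quantified, the causality test from the Summary can be applied directly: change one variable while keeping everything else fixed, and check that the variables expected to be downstream respond. The sketch below assumes the link functions are simple deterministic Python functions; the model structure, coefficients, variable names and the helper `causality_test` are hypothetical placeholders, not Intarese estimates.

```python
# Hypothetical model: each downstream variable is a function of its upstream variables.
# The coefficients are invented placeholders.
def run_model(inputs: dict[str, float]) -> dict[str, float]:
    out = dict(inputs)
    out["concentration"] = 0.05 * out["emissions"] + out["background"]
    out["exposure"] = 0.8 * out["concentration"]
    out["deaths"] = 0.6 * out["exposure"]
    return out


def causality_test(inputs: dict[str, float], variable: str, downstream: list[str],
                   change: float = 0.1) -> dict[str, bool]:
    """Alter one input variable by a relative amount, all else equal, and report
    whether each listed variable changed. Only input variables can be altered
    in this sketch, because computed variables are overwritten by run_model."""
    base = run_model(inputs)
    altered_inputs = dict(inputs)
    altered_inputs[variable] *= 1 + change
    altered = run_model(altered_inputs)
    return {v: altered[v] != base[v] for v in downstream}


inputs = {"emissions": 200.0, "background": 8.0}
print(causality_test(inputs, "emissions", ["concentration", "exposure", "deaths"]))
# A variable that is not downstream of emissions should not respond:
print(causality_test(inputs, "emissions", ["background"]))
```

With real assessment models the responses are often uncertain distributions rather than single numbers, so in practice one would compare distributions; the yes/no comparison here only shows the logic of the test.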

Even if the result estimates for individual variables are derived from measurements, model runs, external reference data or expert judgements, the causal relations should be defined at the same time. At a minimum, there should be statements about the existence of causal links, even if these links are only vaguely understood and defined. During the assessment, the estimation of variable results should be an iterative interplay between information from data sources and the definition of causal relations.

The way from a general view of the assessment scope to a relevantly complete causal network description of the phenomena of interest is long and not necessarily straightforward. Some of the biggest challenges along the way are:

*How are variables defined and described?
*How can the causal relations between variables be defined and described?
*What is the right level of detail in describing variables?
*What is the role of indicators in the assessment process?

These questions are addressed in the following sections. First, a general description of the assessment process is given; then more detailed guidance and an explanation of the role of indicators within the process are presented. Finally, a general variable and indicator structure to be used in Intarese is suggested.

===From issue framing to a causal network description===

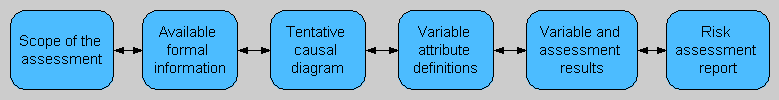

Below is a diagram that schematically describes the steps through which an assessment product develops, from identification of the assessment purpose to a complete causal network description. The diagram is a rough simplification of the process, although the arrows pointing both ways between the nodes try to emphasize the iterative nature of the process. The process resembles a gradual shift of focus as the work progresses rather than a sequence of clearly separate phases.

In the diagram below, the boxes represent developmental steps of the assessment product; they reflect both the improving understanding of the assessed phenomena and the focus of attention as the process progresses towards its goals. Arrows refer to the activities done between the steps to improve the understanding of the assessed phenomena. The diagram is a workflow description and does not explicitly address, e.g., collaboration and interaction between different contributors to the assessment. The process is explained in more detail below.

[[Image:Assessment process.PNG]]

The graph above was made with Analytica. Here is the [[Media:Assessment process.ANA | original Analytica file]].

The assessment process starts in identifying the purpose of the assessment and the intended uses, users and possible other interested audiences (usually referred to as stakeholders). The purpose of the assessment should be explained and carried along the whole process, since it affects everything that is to be done in the assessment. The process then proceeds to issue framing, where a more detailed description of the assessment is created. In issue framing especially the inclusion and exclusion of phenomena according to their relevance in relation to the endpoints of the assessment and the purpose of the assessment is addressed. Also understanding about the most interesting and important issues within the assessment scope is created. | |||

After the issue framing phase, there should be a good general level understanding of what is to be included in the assessment and what is to be excluded. Also, there should a strong enough basis for identifying what are the endpoints of the assessment, what are the most important variables within the assessment scope in order to carry out the assessment successfully, and what are the most interesting variables within the assessment scope from the point of view of the users and other audiences of the assessment output. These overlapping sets of endpoint variables, key variables and indicators provide a good starting point for taking the first steps in carrying out the assessment after the issue framing phase. Having been identified, the endpoint variables, key variables and indicators can be located on the source-impact chain of full-chain approach. This forms the backbone of the causal network description. | |||

Because all of the variables must be causally interconnected, the linkages between the causal relations between the key variables and indicators need to be defined. Additional variables are added to the description as needed to create the causal relations across the network that interconnect all the key variables and indicators to the endpoint variables and to cover the whole chain from sources to impacts. At this point there should be a complete tentative causal network description covering the whole source-impact chain and including about all of the variables that are seen necessary to estimate within the assessment. It is enough to have identified the names and tentative scopes of variables and have statements of the existence of causal relations between all the variables. | |||

The process proceeds with more detailed definitions of the variables and their causal relations. This happens iteratively, e.g. by combining detailed variables into more general ones, dividing general variables into more detailed ones, adding necessary variables to the chain, removing variables that turn out to be irrelevant, and changing causal links. The process typically proceeds from a general level, e.g. a general full-chain description, to as detailed a level as is reasonable for the particular assessment.

As an example, a general air pollutant variable can be divided into specific pollutant variables, e.g. for NO<sub>x</sub>, PM<sub>2.5</sub>/PM<sub>10</sub> and BS, and further specified with limitations in e.g. time and space as needed in the particular assessment. Each of these specific variables then naturally has different causal relations to the subsequent health effect variables. In practice, the level of detail may also need to be adjusted iteratively to match e.g. data availability and existing understanding of the causal relations between variables. It should be mentioned here that Intarese subproject 4 plans to develop a scoping tool as part of the toolbox to help in the early stages of creating the causal network description, but this tool does not yet exist.

When iteratively developing the causal network description, three critical tests can be used to determine the causal relations between variables and the right scope for individual variables:

*''clairvoyant test'' for variables
*''causality test'' for variable relations
*''unit test'' for variable coherence

All variables in a properly defined full-chain description should pass all three of these tests.

Variables should describe real-world entities, preferably in a way that passes the so-called clairvoyant test. The '''clairvoyant test''' determines the clarity of a variable. A question passes the test when it is stated so precisely that a putative clairvoyant could give an exact and unambiguous answer to it. For a variable, this means that its scope should be defined so that a clairvoyant, if one existed, could give an exact and unambiguous answer to what its result is.

All variables should also be causally related to each other. The '''causality test''' determines the nature of the relation between two variables. If you alter the value of a particular variable (all else being equal), the variables downstream of it (i.e. the variables that are expected to be causally affected by the tested variable) should change. If no change is observed, the causal link does not exist. If the change appears unreasonable, the causal link may be different from what was originally stated.
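The causality test can be run quantitatively when the variable formulas are available. The sketch below is a minimal illustration on a hypothetical toy model (the formulas, coefficients and input values are assumptions, not Intarese data): one input is altered by 10% with everything else held fixed, and the resulting change in each supposedly downstream variable is reported.

```python
# Hypothetical toy model: each downstream variable is computed from its upstream
# variables. The formulas and input values are illustrative assumptions only.
def run_model(inputs):
    v = dict(inputs)
    v["emissions"] = v["traffic"] * v["emission_factor"]
    v["concentration"] = v["background"] + 0.002 * v["emissions"]
    v["deaths"] = v["population"] * v["baseline_rate"] * (1 + 0.006 * v["concentration"])
    return v


def causality_test(inputs, variable, downstream, factor=1.1):
    """Change one input by `factor`, all else equal, and return the change in each downstream variable."""
    base = run_model(inputs)
    altered = dict(inputs)
    altered[variable] *= factor
    changed = run_model(altered)
    return {name: changed[name] - base[name] for name in downstream}


inputs = {"traffic": 1000.0, "emission_factor": 0.5, "background": 10.0,
          "population": 36000, "baseline_rate": 0.008}

print(causality_test(inputs, "traffic", ["emissions", "concentration", "deaths"]))
print(causality_test(inputs, "population", ["concentration"]))  # {'concentration': 0.0}
```

Altering traffic changes all three downstream variables, so the arrows traffic → emissions → concentration → deaths pass the test; altering population leaves the concentration unchanged, so a claimed arrow from population to concentration would fail. This sketch can only alter input variables; testing an intermediate variable would require the model to accept overridden values.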

Most often the variables in the causal network are quantitative descriptions of the phenomena. The coherence of the variable definitions throughout the network can then easily be checked with a '''unit test'''. It means checking that the units in which the result estimates of the variables are presented are calculable throughout the network, i.e. that the unit of each variable follows from the units of its upstream variables through its formula.
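A simple unit test can be automated by representing each unit as a set of base units with exponents. The sketch below assumes, for simplicity, that each formula is a product of its upstream quantities (sums would instead require identical units) and that unit strings are written as space-separated tokens such as `ug m^-3`; it does no conversion between, e.g., mg and ug. The links and units are hypothetical.

```python
from collections import Counter


def parse_unit(text):
    """Parse a unit string such as 'ug m^-3' into {base unit: exponent}."""
    exponents = Counter()
    for token in text.split():
        base, _, power = token.partition("^")
        exponents[base] += int(power) if power else 1
    return {b: e for b, e in exponents.items() if e != 0}


def product_unit(*units):
    """Unit of a product of quantities: exponents of the same base unit are added."""
    total = Counter()
    for unit in units:
        for base, exp in parse_unit(unit).items():
            total[base] += exp
    return {b: e for b, e in total.items() if e != 0}


# Hypothetical links: (variable, declared unit, units of the factors multiplied in its formula)
links = [
    ("emissions", "g d^-1", ["vehicle km d^-1", "g vehicle^-1 km^-1"]),
    ("intake", "ug d^-1", ["ug m^-3", "m^3 d^-1"]),
    ("cases", "cases", ["person", "cases person^-1 d^-1"]),  # inconsistent on purpose
]

failed = [name for name, declared, factors in links
          if product_unit(*factors) != parse_unit(declared)]
print(failed)  # ['cases']
```

The `cases` link fails because multiplying a number of persons by a daily rate gives cases per day, not cases; either the declared unit or the formula (e.g. a missing time period) has to be revised.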

When the causal network has been developed into a reasonably complete and coherent description of the phenomena of interest, attention may be turned to evaluating the quality of the content of the description. This means e.g. deliberating whether the result estimates of the variables are good representations of the real-world entities they describe, whether the causalities are properly defined, and thus whether the assessment endpoints, key variables and indicators are reliably estimated. This phase includes e.g. considerations of uncertainties, importance of variables and data quality. When the marginal benefit of additional effort becomes small enough, i.e. additional work no longer improves the output appreciably, the assessment can be considered ready.

It should again be stressed that the above description is a rough simplification and that in practice there are several iteration loops of defining, refining and redefining the description as the process progresses.

===Importance of indicators in the assessment process===

Indicators have a special role in the assessment. As mentioned above, indicators are the variables of most interest from the point of view of the use, users and other audiences of the assessment. The idea behind indicator selection, specification and use is thus to highlight the most important and/or significant parts of the source-impact chain that are to be assessed and subsequently reported. The selected set of indicators guides the assessment process to address the relevant issues within the assessment scope according to the purpose of the assessment. Indicators could be described as the ''leading variables'' in carrying out the assessment, while the other variables are subsidiary to specifying the indicators.

However, within the context of integrated risk assessment, selecting and specifying indicators may sound more straightforward than it actually is. ''Identifying indicators and specifying the causal network in line with them'' may capture the essence of the process better. Instead of picking from a predefined set of indicators, selection here means identifying the most interesting phenomena within the scope of the assessment in order to describe and report them as indicators. Specifying indicators is then similar to specifying all other variables, except that indicators are considered first and other variables secondarily, mainly in relation to the indicators.

In principle, any variable could be chosen as an indicator, and the set(s) of indicators could consist of any types of indicators across the full-chain description. In practice, generally relevant types of indicators, such as performance indicators, can be predefined to some extent, and some detailed indicators can even be defined in relation to common purposes and user needs. This kind of generality also helps to bring coherence between assessments.

We suggest that all variables, and thus all indicators, are specified using a fixed set of attributes. The reasoning behind this is to secure coherence between variable and indicator specifications and to improve the efficiency of assessment work and the re-usability of its outputs. Moreover, it helps to ensure that all the terms used in the assessment are consistent and explicit.

===Evaluation of indicators===

In this respect it may not be relevant to talk about selection criteria for indicators, because the indicators may not be predefined. Instead, criteria for ''evaluating the goodness of indicators'' should be considered. In essence, indicators can be evaluated using the same principles as risk assessments, i.e. along the lines of the [[General properties of good risk assessments | general properties of good risk assessments]]. In evaluating individual indicators, the effectiveness attributes should perhaps be given more weight, whereas the efficiency attributes are considered more at the level of the whole assessment. The evaluation criteria (or properties of good indicators) can be used both for identifying and specifying the indicators and for reflecting on their performance.

In the Scoping for policy assessments guidance document<ref>[[Scoping for policy assessments (Intarese method)]]</ref>, David Briggs listed the most necessary aspects of indicators as (i) relevance to users and acceptability, (ii) consistency and (iii) measurability. In addition, (a) scientific credibility, (b) sensitivity and robustness, and (c) understandability and user-friendliness are pointed out as important. To promote coherent terminology within Intarese, the evaluation criteria of indicators (and of all other variables) are described below in the same terms that are applied in evaluating risk assessments. The properties of individual indicators naturally also affect the properties of the whole assessment.

'''Quality of content'''

Quality of content refers to how well an indicator describes the real-world entity within its scope. It can be described in terms of informativeness, calibration and relevance. Relevance can be further divided into internal and external relevance.

'''''Informativeness''''' means the ''tightness of spread'' of a distribution (all result estimates of indicators and variables should be considered distribution estimates rather than point estimates). The tighter the spread, the smaller the variance and the better the informativeness.

'''''Calibration''''' is the ''correctness'' of the result estimate of an indicator or other variable, i.e. how close it is to the ''real value''. Evaluating calibration can be complicated in many situations, but it is necessary to recognize it as an important property, since all variables should be descriptions of real-world entities.
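Informativeness and calibration are separate properties, and a result distribution can be good in one and poor in the other. The sketch below illustrates this with two hypothetical Monte Carlo estimates of the same variable. It assumes the real value is known, which is rarely the case in practice, and uses the standard deviation as the measure of spread and the distance of the median from the real value as a simple calibration measure; other measures could equally be chosen.

```python
import random
import statistics

random.seed(1)

# Assumed "real value" of the variable. In a real assessment it is usually
# unknown; here it is fixed only to illustrate calibration (hypothetical).
TRUE_VALUE = 12.0

# Two hypothetical result distributions for the same variable (Monte Carlo samples).
estimate_a = [random.gauss(15.0, 1.0) for _ in range(10_000)]  # narrow, but off-target
estimate_b = [random.gauss(12.5, 4.0) for _ in range(10_000)]  # wide, but centred near the truth


def informativeness(samples):
    """Spread of the distribution as its standard deviation (smaller = more informative)."""
    return statistics.stdev(samples)


def calibration_error(samples, truth):
    """Distance between the median of the distribution and the real value (smaller = better calibrated)."""
    return abs(statistics.median(samples) - truth)


def covers(samples, truth):
    """True if the real value lies within the central 90% interval (5th to 95th percentile)."""
    cuts = statistics.quantiles(samples, n=20)  # 19 cut points: 5%, 10%, ..., 95%
    return cuts[0] <= truth <= cuts[-1]


for label, samples in [("A", estimate_a), ("B", estimate_b)]:
    print(label,
          f"sd={informativeness(samples):.2f}",
          f"|median - truth|={calibration_error(samples, TRUE_VALUE):.2f}",
          f"90% interval covers truth: {covers(samples, TRUE_VALUE)}")
```

Estimate A is the more informative of the two but is poorly calibrated, since its 90% interval misses the real value; estimate B is less informative but its interval contains the real value. The two properties therefore have to be judged together.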

'''''Internal relevance''''' refers to the suitability of the indicator in relation to assessing the endpoints of the assessment.

'''''External relevance''''' refers to the suitability of the indicator in relation to the purpose (user needs) of the assessment.

'''Applicability'''

Applicability refers to the potential of conveying the information content of the indicator description to the users or other audiences of the assessment. The applicability attributes most important for indicators are usability and acceptability. Acceptability can be further divided into acceptability of the premises taken in specifying the indicator and acceptability of the indicator specification process. The applicability attributes are strongly influenced by the capabilities of the users and the target audience, so these should also be taken into account when specifying indicators.

'''''Usability''''' means the aspects of the indicator description that affect the understanding of its content, e.g. clarity and transparency of representation, illustrativeness of the displayed results and comparability to reference indicators.

'''''Acceptability of the premises''''' refers to the assumptions applied in describing the indicator.

'''''Acceptability of the specification''''' refers to the way the indicator result has been derived and reasoned.

===General structure of variables and indicators===

Before considering how individual variables and indicators are specified as part of turning the purposes and goals of the assessment into a causal network description of the phenomena of interest, it is useful to present a suggested general structure of variables for Intarese. The specification of variables and indicators is then explained on the basis of this general variable structure.

Predefined sets of indicators may be practical in terms of efficiency, coherence and comparability, but they can also be stagnating and limiting. This is due to the multitude of assumptions that underlie their definitions and that may not be applicable in all cases.

Another approach, which increases efficiency, coherence and comparability with fewer limitations, is to have a unified structure for variables, the basic building blocks of integrated risk assessments. A well-designed attribute structure allows all real-world entities, including physical reality and value judgements, to be described as variables. A unified structure within assessments also makes it possible to control the hierarchical relations between variables. Above all, uniformity at a very basic level improves the efficiency of making assessments while remaining very flexible: e.g. the names and scopes of individual variables can be defined in any way that is reasonable and practical within the context of the assessment.

Indicators are special cases of variables and thus have the same structure as all other variables.

The suggested structure of an Intarese variable is shown below, and its attributes are explained in more detail after the list:

#Name
#Scope
#Description
#*E.g. Scale, Averaging period, References
#Unit
#Definition
#*Data
#*Formula
#*Variations and alternatives
#Result
#Discussion

The name attribute is the identifier of the variable, and it should already indicate, at least roughly, which real-world entity the variable describes. Variable names should be chosen so that they are descriptive, unambiguous and not easily confused with other variables. An example of a good variable name is ''daily average of PM<sub>2.5</sub> concentration in Helsinki''.

The scope attribute defines the boundaries of the variable: what does it describe and what not? The boundaries can be e.g. spatial, temporal or abstract. In the example variable above, the geographical boundary restricts the coverage of the variable to Helsinki, and the phenomena considered are restricted to daily averages of PM<sub>2.5</sub>. The scope of the variable may also define further boundaries that are not explicitly mentioned in its name.

The description attribute contains what is needed to understand the particular variable description and especially its result. It may include various kinds of information, e.g. references, explanations of data collection or manipulation, and other background information.

The unit attribute describes the units in which the result is presented. The units of interconnected variables need to be coherent with each other in a causal network description, so they can be used to check the coherence of the description with the ''unit test'' explained above in the description of the general assessment process.

The definition attribute describes how the result of the variable is derived. It consists of sub-attributes that describe the causal relations, the data used to estimate the result, and the mathematical formula used to calculate it. Alternative ways of deriving the result that have been identified can also be described in the definition attribute for reference. The minimum requirement for defining causality in any variable is to state that a causal relation exists, i.e. that a change in an ''upstream'' variable affects the variables ''downstream''.

The result attribute describes the result value of the variable. This is often the most interesting part of the whole variable description. The result can be represented in various forms, but it is usually a distribution of some kind.

The integrated risk assessment process builds on deliberative iteration to develop and improve the description towards an adequate level of quality. The discussion attribute is therefore important for facilitating and documenting the deliberation that takes place during the assessment process. It is the attribute where the contents of all other attributes of the particular variable can be questioned, and it documents how the variable description has developed.
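The attribute structure described above maps naturally onto a simple record type. The sketch below is one possible representation in Python, not an Intarese specification: the field names (including `causality` for the causal-relation sub-attribute and the `is_indicator` flag) and the helper `unspecified` are hypothetical choices made for illustration. The example uses the PM<sub>2.5</sub> variable named above, with an assumed scope text.

```python
from __future__ import annotations

from dataclasses import dataclass, field, fields
from typing import Callable, Optional


@dataclass
class Definition:
    """Sub-attributes of the definition attribute (field names are illustrative)."""
    causality: list[str]                                   # upstream variables that causally affect this one
    data: list[str] = field(default_factory=list)          # data used to estimate the result
    formula: Optional[Callable[..., float]] = None         # how the result is calculated
    variations: list[str] = field(default_factory=list)    # alternative ways to derive the result


@dataclass
class Variable:
    """One variable (or indicator) with the suggested attributes."""
    name: str
    scope: str
    description: str
    unit: str
    definition: Definition
    result: Optional[list[float]] = None      # e.g. Monte Carlo samples of the result distribution
    discussion: list[str] = field(default_factory=list)
    is_indicator: bool = False                # indicators are variables flagged for reporting


def unspecified(var: Variable) -> list[str]:
    """Names of attributes that are still empty, i.e. a to-do list for the next iteration."""
    return [f.name for f in fields(var) if getattr(var, f.name) in (None, "", [])]


pm25 = Variable(
    name="Daily average of PM2.5 concentration in Helsinki",
    scope="24-hour mean outdoor PM2.5 mass concentration, Helsinki city area",  # hypothetical
    description="Hypothetical example; references and data notes would go here.",
    unit="ug/m3",
    definition=Definition(causality=["PM2.5 emissions in Helsinki", "long-range transported PM2.5"]),
    is_indicator=True,
)

print(unspecified(pm25))  # ['result', 'discussion']
```

Because indicators share the same structure, marking one is just a flag on an ordinary variable; the `unspecified` helper shows which attributes still have to be filled in during later iteration rounds.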

===Specifying indicators and other variables===

When the endpoints, indicators and key variables have been identified, they should be specified in more detail. Additional variables are created and specified as necessary to complete the causal network. Specifying these variables means defining the contents of the attributes of each variable. This can be aided by the following questions for the different attributes:

#Name and scope - What is the question about some real-world entity that the variable attempts to answer?
#Description - What do you need to know to understand the question and its answer?
#Definition - How can you derive the answer?
#Unit - How is it measured?
#Result and discussion - What is the answer to the question, and why?

As stressed several times already, the specification of variables proceeds in iterative steps, going into more detail as the overall understanding of the assessed phenomena increases. The indicators are the ''leading variables'' that guide the specification process. First, it is most crucial to specify the scopes (and names) of the variables and their causal relations. When specifying the name and scope attributes in particular, the '''clairvoyant test''' can be applied. The test helps to ensure the clarity and unambiguity of the variable scope.

Addressing causalities means in practice that any change in a variable description should be reflected in all the variables that the particular variable is causally linked to. At this point the '''causality test''' can be used, although not necessarily quantitatively. In the early phases of the process it is probably most convenient to describe causal networks as diagrams, representing the indicators, endpoints, key variables and other variables as nodes (or boxes) and causal relations as arrows from ''upstream'' variables to ''downstream'' variables. In graphical representations of causal networks the arrows only state that a causal relation exists between particular variables; more detailed definitions of the relations should be given in the definition attribute of each variable, according to how well the causal relation is known or understood.

Once a relatively complete and coherent graphical representation of the causal network has been created, the specification of the identified indicators may continue in more detail. The indicators, the ''leading variables'', are of crucial importance in the assessment process. If it turns out during the specification process that an indicator conflicts with one or more of the properties of good indicators, such as calibration, it may be necessary to revise the scope of the indicator or to choose another ''leading variable'' in the source-impact chain to replace it. This may naturally bring about a partial revision of the whole causal network, affecting a number of key variables, endpoints and indicators. For example, it may happen that no applicable exposure-response function is available for calculating the health impact of ozone intake. In this case, the exposure-response indicator may be replaced with an intake fraction indicator, which affects both the ''downstream'' and ''upstream'' variables in the causal network, e.g. by requiring a change in the units in which the variables are described.

The description, unit and definition attributes are specified as explained in the previous section. The '''unit test''' can be applied to check the calculability, and thus the descriptive coherence, of the causal network. When all the variables in the network appear to pass the required tests, the indicator and variable results can be computed across the network, and the first round of iteration is done. The description is then improved through deliberation and re-specification of the variables, especially their definition and result attributes, until an adequate level of quality has been reached throughout the network. The discussion attribute provides the place for deliberating and documenting the deliberation throughout the process.
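Computing results across the network means evaluating each variable only after all of its upstream variables, and carrying the result distributions along. The sketch below does this with Monte Carlo sampling, assuming Python 3.9+ for the standard-library `graphlib` module; the network, formulas and numbers are all hypothetical. Each formula receives only the values of its own declared parents, so a formula that uses an undeclared upstream variable fails with a `KeyError`, which doubles as a consistency check between the arrows and the definitions.

```python
import random
import statistics
from graphlib import TopologicalSorter  # Python 3.9+

random.seed(0)
N = 5_000  # number of Monte Carlo iterations

# Hypothetical causal network: variable -> set of its upstream (parent) variables.
parents = {
    "traffic": set(),
    "emission_factor": set(),
    "emissions": {"traffic", "emission_factor"},
    "concentration": {"emissions"},
    "deaths": {"concentration"},
}

# Hypothetical formulas; each receives only the current values of its own parents.
formulas = {
    "traffic": lambda p: random.gauss(1000, 100),          # vehicles/day
    "emission_factor": lambda p: random.uniform(0.4, 0.6),  # g/vehicle
    "emissions": lambda p: p["traffic"] * p["emission_factor"],
    "concentration": lambda p: 10 + 0.002 * p["emissions"],
    "deaths": lambda p: 50 * (1 + 0.006 * p["concentration"]),
}

# Upstream variables are always evaluated before the variables downstream of them.
order = list(TopologicalSorter(parents).static_order())

results = {name: [] for name in parents}
for _ in range(N):
    sample = {}
    for name in order:
        sample[name] = formulas[name]({k: sample[k] for k in parents[name]})
        results[name].append(sample[name])

cuts = statistics.quantiles(results["deaths"], n=20)
print(f"deaths: mean {statistics.mean(results['deaths']):.1f}, "
      f"90% interval {cuts[0]:.1f}-{cuts[-1]:.1f}")
```

If the arrows accidentally form a loop, `TopologicalSorter` raises `graphlib.CycleError`, which is another useful check on the causal network before any results are computed.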

==Lessons learned from Intarese training session - 22, 23 May 2007 at Schiphol Airport==

# The distinction between flowcharts or mind maps and influence diagrams was never explained to SP3 members. Consequently, most issue frameworks in Intarese SP3 have been set up as flowcharts. Since Intarese emphasizes causality, the issue frameworks should be redrawn as influence diagrams or causal networks. Initial guidance on expressing causality was given in the training sessions. Each SP3 issue framework has been discussed with the specific WP group members. Further guidance might be needed.
## Contact each WP in SP3 and ask for indicator contact persons.
# Causality does not necessarily need to be proven. A plausible causal relationship is a sufficient basis for creating causal links.
# The variable attribute list should be adjusted; see the document page on 'Building blocks..' for suggestions.
# Leendert's presentation on policy-deficit indicators should be included.

==References==

<References/>

==Appendices==

===Appendix 1. Types of indicators applicable to Intarese===

''This section is still being worked on''

====Descriptions of indicators in topic-based classification====

'''Source emission indicators'''

'''Exposure indicators'''

'''Health indicators'''

'''Economic indicators'''

'''Total outcome indicators'''

(may combine source emission, exposure, health and economic issues, e.g. EEA type D indicators)

Some measure of total sustainability is needed in order to answer this question, for example a kind of ‘Green GDP’, such as the Index of Sustainable Economic Welfare (ISEW). As these indicators are, however, currently outside the EEA’s work programme, they are not covered further here.

'''Perception indicators (including equity and other ethical issues)'''

'''Ecological indicators (not fully covered in Intarese, as they are outside the scope of the project)'''

====Descriptions of indicators in reference-based classification====

'''Descriptive indicators'''

''The text in this section has been adapted from the EEA Technical report No 25, Environmental indicators: Typology and overview, EEA, Copenhagen, 1999.''

Most sets of indicators presently used by nations and international bodies are based on the DPSIR framework or a subset of it. These sets describe the actual situation with regard to the main environmental issues, such as climate change, acidification, toxic contamination and wastes, in relation to the geographical levels at which these issues manifest themselves. With respect to environmental health, these indicators may also be specified as (personal) (source-specific) exposure indicators and health effect indicators (number of people affected, YLL, DALY or QALY).

'''Performance indicators'''

''The text in this section has been adapted from the EEA Technical report No 25, Environmental indicators: Typology and overview, EEA, Copenhagen, 1999.''

Performance indicators compare (f)actual conditions with a specific set of reference conditions. They measure the ''distance(s)'' between the current environmental situation and the desired situation (target): ''distance to target'' assessment. Performance indicators are relevant if specific groups or institutions may be held accountable for changes in environmental pressures or states.

Most countries and international bodies currently develop performance indicators for monitoring their progress towards environmental targets. These performance indicators may refer to different kinds of reference conditions or values, such as:

*national policy targets;
*international policy targets, accepted by governments;
*tentative approximations of sustainability levels.

The first and second types of reference conditions, national policy targets and internationally agreed targets, rarely reflect sustainability considerations, as they are often compromises reached through (international) negotiation and are subject to periodic review and modification. Up to now, only very limited experience exists with so-called sustainability indicators, which relate to target levels of environmental quality set from the perspective of sustainable development (Sustainable Reference Values, or SRVs).

Performance indicators monitor the effect of policy measures. They indicate whether or not targets will be met and communicate the need for additional measures.
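A distance-to-target performance indicator is a simple comparison of the current value with the reference value. The sketch below is a minimal illustration under stated assumptions: a reduction target lying below a known baseline, and hypothetical concentration values. It reports the absolute gap, the gap relative to the target and the share of the required reduction already achieved; the function name and output keys are illustrative.

```python
def distance_to_target(current, target, baseline=None):
    """Distance between the current situation and the target.

    Returns the absolute gap, the gap as % of the target and, if a baseline
    is given, the share of the required change already achieved.
    Assumes a target that lies below the baseline (e.g. a reduction target).
    """
    gap = current - target
    result = {"gap": gap, "gap_pct": 100.0 * gap / target}
    if baseline is not None:
        result["progress"] = (baseline - current) / (baseline - target)
    return result


# Hypothetical example: annual mean concentration of 46 ug/m3 against a target
# of 40 ug/m3, starting from a baseline of 55 ug/m3.
print(distance_to_target(current=46.0, target=40.0, baseline=55.0))
# {'gap': 6.0, 'gap_pct': 15.0, 'progress': 0.6}
```

A positive gap means the target is not yet met; the progress share (here 60%) is one way to communicate how much additional effort is still needed.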

Examples of performance indicators:

*EEA Type B indicators
*RIVM/MNP policy deficit indicators

'''Efficiency indicators'''

''The text in this section has been adapted from the EEA Technical report No 25, Environmental indicators: Typology and overview, EEA, Copenhagen, 1999.''

It is important to note that some indicators express the relation between separate elements of the causal chain. Most relevant for policy-making are the indicators that relate environmental pressures to human activities. These indicators provide insight into the efficiency of products and processes, i.e. efficiency in terms of the resources used and the emissions and waste generated per unit of desired output.

The environmental efficiency of a nation may be described in terms of the level of emissions and waste generated per unit of GDP. The energy efficiency of cars may be described as the volume of fuel used per person per mile travelled. Apart from efficiency indicators dealing with one variable only, aggregated efficiency indicators have also been constructed. The best-known aggregated efficiency indicator is the MIPS indicator (not covered in this report). It expresses the Material Intensity Per Service unit and is very useful for comparing the efficiency of different ways of performing a similar function.

Efficiency indicators present information that is important from both the environmental and the economic point of view. ‘Do more with less’ is not only a slogan of environmentalists. It is also a challenge to governments, industries and researchers to develop technologies that radically reduce the level of environmental and economic resources needed for performing societal functions. Since the world population is expected to grow substantially during the next decades, raising environmental efficiency may be the only option for preventing depletion of natural resources and controlling the level of pollution.

The relevance of these and other efficiency indicators is that they reflect whether or not society is improving the quality of its products and processes in terms of resources, emissions and waste per unit of output.
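Both efficiency examples above are ratios of a pressure to a desired output. The sketch below computes them for hypothetical figures; the function names, units and numbers are assumptions made for illustration only.

```python
def emission_intensity(emissions_kt, gdp_billion):
    """Environmental efficiency of a nation: emissions per unit of GDP (kt per billion)."""
    return emissions_kt / gdp_billion


def fuel_per_person_mile(fuel_litres, persons, miles):
    """Energy efficiency of car travel: fuel used per person per mile travelled."""
    return fuel_litres / (persons * miles)


# Hypothetical national figures for two years
year1 = emission_intensity(120.0, 400.0)
year2 = emission_intensity(118.0, 440.0)
print(f"intensity {year1:.3f} -> {year2:.3f}; improving: {year2 < year1}")

# The same 60-mile trip using 3 litres of fuel, driven alone or with four occupants
print(fuel_per_person_mile(3.0, 1, 60), fuel_per_person_mile(3.0, 4, 60))
```

Note that a falling ratio can coexist with rising absolute emissions if output grows faster than the pressure, so an efficiency indicator alone does not show whether an environmental target is met.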

Examples of efficiency indicators:

*EEA Type C indicators

'''Scenario indicators'''

====MNP example on policy-deficit indicators====

To illustrate policy deficit indicators used in various environmental themes, an example has been taken from the (annual) Environmental Balance report (2006) of the Netherlands Environmental Assessment Agency. It uses a simple table format with different colours to show time trends and target achievement.

[[Image:Example.jpg]]

Figure 4 MNP example of policy deficit indicators

===Appendix 2. Example of process from issue framing to causal network description=== | |||

[[Image:SP3frameworks.doc]] | |||

''This section is still being worked on'' | |||

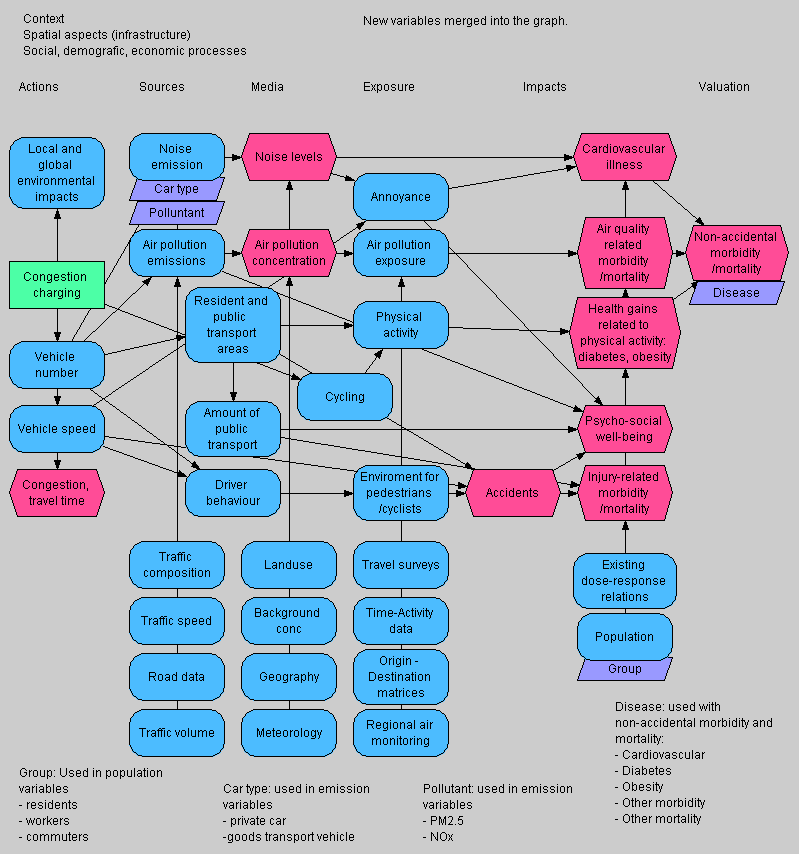

This appendix makes the guidance practical by demonstrating how the Intarese approach from issue framing to causal network description works in a policy assessment case. We selected WP3.1 Transport Congestion Charge, since enough material was available to derive the information needed for the example. The material used to create the example consisted of general information about workpackage 3.1, an issue framing diagram describing the outline of the congestion charge case study plan, and more detailed information about the exposure assessment modelling plan in the London case study, one of the cases chosen for the study.

'''purpose'''

*origin of the case:
** A process of literature review and consultation of Dutch decision makers resulted in the identification of congestion charging as a potential case study: issue framework.
** Contact with IC about London's CCZ resulted in a study protocol which could serve as a basis for case study development and implementation in London, Bucharest, Helsinki and Barcelona.
*use and users:
**''municipal authorities, risk assessor community''
**Target audience of communication: The results of the policy intervention assessment method will be reported to (municipal) traffic policy experts and published for policy assessors, risk assessors etc.
*other interested audiences:
**The key stakeholder groups to be involved in the assessment include governments at different levels (EU, national, regional), car manufacturing companies, branch organizations of mobility-related enterprises and consumer organizations in each of the countries in which the assessments are carried out.
*pursued effects (of the policy intervention; effects of the assessment are not exemplified)
*The purpose of the congestion charge policy intervention assessment study is to assess the changes in exposures and health effects from traffic noise and air pollution, as well as in road accidents, due to the introduction of a congestion charge.
* Rationale (derived from the draft case study protocol of the London Congestion Charge Zone (CCZ) by John Gulliver, Imperial College London): A number of potential health benefits for noise, air quality and accidents have been reported in relation to the implementation of the CCZ. Reductions in congestion of between 10% and 15% have been quoted (TfL, 2007), and 70 fewer accidents have occurred each year, which corresponds to about a 5% reduction. Improvements in air quality as a result of the CCZ have taken the form of reductions of as much as 10% in ambient PM<sub>10</sub> and 12% in ambient NO<sub>x</sub>. These figures are based on data from roadside automatic air pollution monitoring devices, which form part of a network of monitors across Greater London (www.londonair.org.uk); they represent long-term (i.e. annual) reductions and relate to information from only one or two locations.
*no figure

'''issue framing'''

inclusions & exclusions (as for London CCZ case, to be adapted for other cases):

*congestion charge policy as introduced in February 2003 '''included'''
**new policy as introduced in Feb 2007 '''excluded''' (too recent to obtain information)
*London shopping and business area (15 km<sup>2</sup>)
*resident population (36000 people) '''included'''
**commuters '''excluded'''
*Congestion charge: 5£/day 08:00 - 18:30, Monday-Friday
**residents can apply for up to 90% discount → min. 4£/week
*sources: private cars + private goods vehicles (using normal fuel)
**exclusions: alternative fuels, cars with >9 seats, disabled people, small motorcycles
*emissions: PM<sub>10</sub>, NO<sub>x</sub>
*noise: ?
*accidents: ?
*only measurement data from 2002 & 2003 on emissions will be used for exposure modelling
*issue framing figure (drawn independently from London case)
*Analytica diagrams 1-3
*explanation of notation
**arrows are statements about existence of causal relations

Scope: The congestion charge policy intervention was introduced in London's central shopping & business area (total of 15 km<sup>2</sup>) in February 2003. Data from 2003 are compared to data from 2002 in order to assess the changes in the source-impact chain that are due to the introduction of the congestion charge. Besides London, similar assessments will be done in other cities; Helsinki, Bucharest and Barcelona have been suggested. In these cities, however, the policy intervention assessment will be completely modelled.

'''endpoints, indicators & key variables'''

*references to purpose and endpoints

**chosen indicator class(es)? → scenario indicators

*clairvoyant test to identify scoping clarity

**from description of change to description of entity itself

*Analytica diagrams 1-3

Endpoints are health impacts from exposure to emissions from mobile (traffic) sources, and direct economic and social impacts of the policy intervention.

Key variables and indicators are explicitly mentioned in the case study protocol as being of interest for the first-pass assessment process.

Indicators are identified throughout the source-impact chain. All indicators can be interpreted as scenario indicators, since the effect of the policy intervention (post-) is compared with the situation before the intervention was introduced (pre-). In topic-related terms, we can identify a source emission indicator, an exposure indicator, several health indicators and economic indicators. In addition, air concentrations, noise levels and accident rates are identified as important and interesting for reporting, since the purpose of the study is to assess the changes in exposure attributable to each of these three environmental stressors.
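Every indicator here is a scenario indicator (post- versus pre-intervention), so each one reduces to a comparison of two values of the same variable. The sketch below shows that comparison as an absolute and a relative change. The class name, the field names and the numbers are hypothetical placeholders, not case-study results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScenarioIndicator:
    """Hypothetical pre/post record for one indicator (names are illustrative)."""
    name: str
    unit: str
    pre: float   # value before the intervention (e.g. 2002)
    post: float  # value after the intervention (e.g. 2003)

    @property
    def absolute_change(self) -> float:
        return self.post - self.pre

    @property
    def relative_change(self) -> float:
        if self.pre == 0:
            raise ZeroDivisionError("relative change is undefined for a zero baseline")
        return (self.post - self.pre) / self.pre


# Made-up placeholder values, not measurements from the case study.
pm10 = ScenarioIndicator("annual mean roadside PM10", "ug/m3", pre=40.0, post=36.0)
print(f"{pm10.name}: {pm10.absolute_change:+.1f} {pm10.unit} ({pm10.relative_change:+.0%})")
```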

'''backbone of network'''

*fitting along the full-chain

**endpoint (compound?) and intermediate indicators

*Analytica diagram 4

'''tentative causal network'''

*causal relations across the full-chain

**causality test for checking the relations

*necessary variables added to cover the full-chain

*re-"selection" and -specification of variable scoping etc.?

*Analytica diagrams 5-6
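The causality test used in this phase can be mimicked on a quantified network. Shift one variable while holding everything else fixed, recompute, and check that every variable downstream of it actually changes. This is a minimal sketch that assumes a hypothetical five-variable chain with made-up linear link functions.

```python
from typing import Callable, Dict, List, Optional, Set

# Hypothetical toy network: each variable lists its causal parents and a link
# function. Names and coefficients are placeholders, not CCZ case-study values.
parents: Dict[str, List[str]] = {
    "traffic_flow": [],
    "emissions": ["traffic_flow"],
    "concentration": ["emissions"],
    "exposure": ["concentration"],
    "health_impact": ["exposure"],
}
links: Dict[str, Callable[..., float]] = {
    "traffic_flow": lambda: 1000.0,
    "emissions": lambda flow: 0.2 * flow,
    "concentration": lambda em: 5.0 + 0.05 * em,
    "exposure": lambda conc: 0.8 * conc,
    "health_impact": lambda exposure_value: 0.01 * exposure_value,
}


def evaluate(overrides: Optional[Dict[str, float]] = None) -> Dict[str, float]:
    """Compute every variable, optionally fixing some of them to given values."""
    overrides = overrides or {}
    values: Dict[str, float] = {}

    def value(name: str) -> float:
        if name not in values:
            if name in overrides:
                values[name] = overrides[name]
            else:
                values[name] = links[name](*(value(p) for p in parents[name]))
        return values[name]

    for name in parents:
        value(name)
    return values


def downstream(name: str) -> Set[str]:
    """All variables reachable from `name` by following the arrows."""
    seen: Set[str] = set()
    stack = [name]
    while stack:
        current = stack.pop()
        for child, ps in parents.items():
            if current in ps and child not in seen:
                seen.add(child)
                stack.append(child)
    return seen


def causality_test(name: str, delta: float = 1.0) -> Set[str]:
    """Shift one variable (all else equal); return downstream variables that did NOT change."""
    base = evaluate()
    shifted = evaluate({name: base[name] + delta})
    return {v for v in downstream(name) if shifted[v] == base[v]}


print(causality_test("traffic_flow"))  # an empty set means every downstream variable responded
```

Suppose a link function ignored its input, for example if `exposure` were set to a constant. The test would then return that variable and everything below it, which points to an arrow that does not carry a causal effect. A single additive shift is a screening aid rather than a proof, since it can miss links that are flat only around the current value.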

'''coherent network'''

*coherence of variable definitions

**unit test → calculability through the network

*availability and quality of data?

*re-"selection" and -specification of variables → effects through the network

*finding the right level of detail in description

*no figure → building on Analytica diagram 6
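The unit test asks whether the functions on the arrows turn the units of the upstream variables into the unit declared for each downstream variable. The sketch below makes two assumptions: every link is a plain product of its inputs, and a unit is represented as a mapping from base unit to exponent. The variable names, including the source-receptor `dispersion_factor`, are hypothetical.

```python
from collections import Counter
from typing import Dict, List

Unit = Dict[str, int]  # base unit -> exponent, e.g. {"g": 1, "s": -1} means g/s


def multiply(*units: Unit) -> Unit:
    """Unit of a product: add the exponents of each base unit, drop zeros."""
    total: Counter = Counter()
    for unit in units:
        total.update(unit)  # Counter.update adds counts, negative ones included
    return {base: exp for base, exp in total.items() if exp != 0}


# Hypothetical declared units for a short chain.
declared: Dict[str, Unit] = {
    "emission_rate": {"g": 1, "s": -1},
    "dispersion_factor": {"s": 1, "m": -3},  # hypothetical source-receptor coefficient
    "concentration": {"g": 1, "m": -3},
    "breathing_rate": {"m": 3, "day": -1},
    "intake": {"g": 1, "day": -1},
}
# Each downstream variable is assumed to be the product of its upstream variables.
products: Dict[str, List[str]] = {
    "concentration": ["emission_rate", "dispersion_factor"],
    "intake": ["concentration", "breathing_rate"],
}


def unit_test(declared: Dict[str, Unit], products: Dict[str, List[str]]) -> Dict[str, Unit]:
    """Return the variables whose computed unit differs from the declared one."""
    failures: Dict[str, Unit] = {}
    for var, inputs in products.items():
        computed = multiply(*(declared[name] for name in inputs))
        if computed != declared[var]:
            failures[var] = computed
    return failures


print(unit_test(declared, products))  # {} means the units are coherent through the chain
```

Additive links would instead require all inputs to share the output unit. A real assessment would more likely rely on a units library than on this hand-rolled representation.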

'''good quality network'''

*detailed specification of all variables

**indicators as the leading (most interesting) variables in the network

*evaluation against properties of good risk assessments & indicators

**quality of information content (→ e.g. data quality)

**goodness of applicability (→ e.g. clarity of representation)

*deliberation and iteration

**discussion attribute as deliberation facility and documentation of progress & rationale

**probably the longest phase in the assessment process

*no figure → building on Analytica diagram 6

[[Image:Congestion_charge_case_study.PNG]]

[[:image:Congestion charge case study.ANA|The Analytica file with this graph.]]

[[Category:Intarese general method]]

[[category:Intarese]]

Latest revision as of 10:54, 20 November 2009

This document attempts to explain what is meant by the term indicator in the context of Intarese, defines what indicators are needed for, and describes how indicators can be used in integrated risk assessments. A related Powerpoint presentation exists. This document also harmonizes with the Intarese Appraisal Framework report.

KTL (E. Kunseler, M. Pohjola and J. Tuomisto)

MNP (L. van Bree, M. van der Hoek and K. van Velze)

Summary

- The full chain approach (causal network) consists of variables. Variables that are of special interest and will be reported are called indicators.

- There are several types of indicators used by different organisations. Intarese indicators differ from previous definitions and practices, e.g. WHO indicators do not emphasize causality and are thus not applicable as Intarese indicators.

- The assessment process has several phases, which can be described in the following way. Note that the process is iterative and you may need to go back to previous phases several times.

  - Define the purpose of the assessment (e.g. a key question)

  - Frame the issue: what are the boundaries and settings of the assessment?

  - Define the variables of special interest, i.e. indicators, and other important (i.e. key) variables.

  - Draft a causal chain that covers your framing.

  - Use clairvoyant test, causality test, and unit test to find development needs in your causal chain.

  - Quantify variables and links as much as needed and possible.

- The clairvoyant test determines the clarity of a variable. When a question is stated in such a precise way that a putative clairvoyant can give an exact and unambiguous answer, the question is said to pass the test.

- The causality test determines the nature of the relation between two variables. If you alter the value of a particular variable (all else being equal), the variables downstream (i.e., the variables that are expected to be causally affected by the tested variable) should change.

- The unit test determines the coherence of the variable definitions throughout the network. The functions describing the links between the variables must result in coherent units for the downstream variables.

- The goodness of a variable is described by the following properties: informativeness, calibration, relevance, usability, acceptability of premises, and acceptability of the specification.

- All variables should have the same basic attributes. These are 1) name, 2) scope, 3) description, 4) definition, 5) unit, 6) result, and 7) discussion.
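The seven basic attributes can be written down as a simple record. This also makes it easy to see which attributes of a variable are still unfilled during the iterative phases. The sketch is illustrative only: the field types and the comments on what scope and definition contain are assumptions, and the example variable is hypothetical.

```python
from dataclasses import dataclass, fields
from typing import Any, List, Optional


@dataclass
class Variable:
    """Record holding the seven basic attributes of a variable (types are assumptions)."""
    name: str
    scope: str = ""        # assumed: the question the variable answers, clear enough for the clairvoyant test
    description: str = ""
    definition: str = ""   # assumed: data, causal parents and formula behind the result
    unit: str = ""
    result: Optional[Any] = None
    discussion: str = ""   # deliberation and documentation of rationale

    def missing_attributes(self) -> List[str]:
        """Names of attributes that are still empty (None or a blank string)."""
        missing = []
        for f in fields(self):
            value = getattr(self, f.name)
            if value is None or (isinstance(value, str) and not value.strip()):
                missing.append(f.name)
        return missing


# Hypothetical example variable, partially specified.
pm10 = Variable(
    name="PM10 concentration in the CCZ",
    scope="Annual mean ambient PM10 concentration in the London CCZ in 2003",
    description="Ambient PM10 at roadside monitors within the charging zone",
    unit="ug/m3",
)
print(pm10.missing_attributes())
```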

Introduction

At this stage of the Intarese project (month 18, May 2007), the integrated risk assessment methodology is starting to take form and is about to become ready for application in policy assessment cases. Within subproject 3, case studies have been selected and protocols for case study implementation are being developed. Now that scoping documents framing the environmental health issues have been prepared, the full-chain frameworks and first draft assessment protocols needed for the policy assessments of the various case studies are being developed accordingly.

Indicator selection and specification can be used as a bridge from the issue framing phase to actually carrying out the assessment. This guidance document provides information on selecting and specifying indicators and variables and on how to proceed from the issue framing to the full-chain causal network description of the phenomena that are assessed.

There are several different interpretations of the term indicator and several different approaches to using indicators. This document is written to clarify the meaning and use of indicators as applicable in the context of integrated risk assessment. This guidance emphasizes causality in the full-chain approach and the applicability of the indicators in relation to the needs of each particular risk assessment case. In the subsequent sections, the term indicator in the context of integrated risk assessment is clarified and an Intarese approach to indicator selection and specification is suggested.

This indicator guidance document closely harmonizes with the Intarese Appraisal Framework (AF) report. The AF report concludes that indicators may have various goals and aims, as listed below:

- Policy development and priority-setting

- Health impact assessment and monitoring

- Policy implementation or economic consequence assessment

- Public information and awareness raising or risk perception

In this indicator document, practical guidance is given for the development of variables, indicators and indices. Each work package (WP3.1-3.7) can subsequently develop its own set when developing its specific assessment protocols.

Integrated risk assessment

Before going any further in discussing indicators and their role in integrated risk assessment, it is necessary to consider some general features of integrated risk assessment. The following text in italics is a slightly adapted excerpt from the Scoping for policy assessments - guidance document by David Briggs [1]:

Integrated risk assessment, as applied in the Intarese project, can be defined as the assessment of risks to human health from environmental stressors based on a whole system approach. It thus endeavours to take account of all the main factors, links, effects and impacts relating to a defined issue or problem, and is deliberately more inclusive (less reductionist) than most traditional risk assessment procedures. Key characteristics of integrated assessment are:

- It is designed to assess complex policy-related issues and problems, in a more comprehensive and inclusive manner than that usually adopted by traditional risk assessment methods

- It takes a full-chain approach – i.e. it explicitly attempts to define and assess all the important links between source and impact, in order to allow the determinants and consequences of risk to be tracked in either direction through the system (from source to impact, or from impact back to source)

- It takes account of the additive, interactive and synergistic effects within this chain and uses assessment methods that allow these to be represented in a consistent and coherent way (i.e. without double-counting or exclusion of significant effects)

- It presents results of the assessment as a linked set of policy-relevant indicators

- It makes the best possible use of the available data and knowledge, whilst recognising the gaps and uncertainties that exist; it presents information on these uncertainties at all points in the chain
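Tracking determinants and consequences in either direction amounts to following the arrows of the causal network. Going forwards runs from source to impact, and going backwards runs from impact to source. This sketch uses a hypothetical network, not an Intarese case-study network.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical full-chain network: arrows point from cause to effect.
effects: Dict[str, List[str]] = {
    "traffic_volume": ["pm_emissions", "noise_level", "accident_rate"],
    "pm_emissions": ["pm_concentration"],
    "pm_concentration": ["population_exposure"],
    "population_exposure": ["cardiopulmonary_mortality"],
    "noise_level": ["sleep_disturbance"],
    "accident_rate": ["injuries"],
}


def invert(graph: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Reverse every arrow so that traversal runs from impact back to source."""
    causes: Dict[str, List[str]] = {}
    for cause, children in graph.items():
        for child in children:
            causes.setdefault(child, []).append(cause)
    return causes


def trace(graph: Dict[str, List[str]], start: str) -> Set[str]:
    """Breadth-first search: every variable reachable from `start`."""
    seen: Set[str] = set()
    queue = deque([start])
    while queue:
        for nxt in graph.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


print(sorted(trace(effects, "pm_emissions")))      # source -> impact
print(sorted(trace(invert(effects), "injuries")))  # impact -> source
```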

Building on what was stated above, some further statements about the essence of integrated assessment can be given:

- Integrated environmental health risk assessment is a process whose product is a description of a certain piece of reality

- The descriptions are produced according to the (use) purposes of the product

- The risk assessment product is a description of all the relevant phenomena in relation to the chosen endpoints and their relations as a causal network

- The risk assessment product combines value judgements with the descriptions of physical phenomena

- The basic building block of the description is a variable, i.e. everything is described as variables

- All variables in a causal network description must be causally related to the endpoints of the assessment
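Once the scope fixes the endpoints, the last statement can be checked mechanically: every variable must have a directed path to at least one endpoint. This sketch assumes a hypothetical network in which `fuel_price` and `fuel_sales` have deliberately been left unconnected to the endpoint.

```python
from typing import Dict, List, Set

# Hypothetical network: arrows point from cause to effect.
effects: Dict[str, List[str]] = {
    "traffic_volume": ["pm_emissions"],
    "pm_emissions": ["pm_concentration"],
    "pm_concentration": ["mortality"],
    "mortality": [],
    "fuel_price": ["fuel_sales"],  # deliberately not linked to the endpoint
    "fuel_sales": [],
}
endpoints: Set[str] = {"mortality"}  # fixed by the scope of the assessment


def unrelated_variables(effects: Dict[str, List[str]], endpoints: Set[str]) -> Set[str]:
    """Variables with no directed path to any chosen endpoint."""
    related = set(endpoints)
    changed = True
    while changed:  # grow the set of related variables until nothing changes
        changed = False
        for var, children in effects.items():
            if var not in related and any(c in related for c in children):
                related.add(var)
                changed = True
    return set(effects) - related


print(sorted(unrelated_variables(effects, endpoints)))  # ['fuel_price', 'fuel_sales']
```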

Terminology

In order to make the text in the following sections more comprehensible, the main terms and their uses in this document are explained here briefly. Note that the meanings of terms may overlap and the categories are not mutually exclusive. For example, a description of a phenomenon, say mortality due to traffic PM2.5, can simultaneously belong to the categories of variable, endpoint variable and indicator in a particular assessment. See also Help:Variable.

Variable

A variable is a description of a particular piece of reality. It can be a description of a physical phenomenon, e.g. the daily average PM2.5 concentration in Kuopio, or a description of a value judgement, e.g. willingness to pay to avoid lung cancer. Variables (the scopes of variables) can be more general or more specific and hierarchically related, e.g. air pollution (a general variable) and the daily average PM2.5 concentration in Kuopio.

Endpoint variable

Endpoint variables are variables that describe phenomena which are outcomes of the assessed causal network, i.e. there are no variables downstream of an endpoint variable within the scope of the assessment. In practice, endpoint variables are most often also chosen as indicators.

Intermediate variable

All variables other than endpoint variables.
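Given the arrows of a causal network, the split between endpoint and intermediate variables follows directly from the definitions above: endpoints are the variables with nothing downstream of them. This sketch uses a small hypothetical network.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical network: arrows point from cause to effect.
effects: Dict[str, List[str]] = {
    "traffic_volume": ["pm_emissions", "noise_level"],
    "pm_emissions": ["mortality"],
    "noise_level": ["sleep_disturbance"],
}


def classify(effects: Dict[str, List[str]]) -> Tuple[Set[str], Set[str]]:
    """Split variables into endpoints (nothing downstream) and intermediates (the rest)."""
    all_vars = set(effects) | {c for children in effects.values() for c in children}
    endpoint_vars = {v for v in all_vars if not effects.get(v)}
    return endpoint_vars, all_vars - endpoint_vars


endpoint_vars, intermediate_vars = classify(effects)
print(sorted(endpoint_vars))      # ['mortality', 'sleep_disturbance']
print(sorted(intermediate_vars))  # ['noise_level', 'pm_emissions', 'traffic_volume']
```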

Key variable

A key variable is a variable that is particularly important for carrying out the assessment successfully and/or assessing the endpoints adequately.

Indicator

An indicator is a variable that is particularly important in relation to the interests of the intended users of the assessment output or other stakeholders. Indicators are used as a means of effectively communicating the assessment results. Communication here refers to conveying information about certain phenomena of interest to the intended target audience of the assessment output. It also covers monitoring the status of certain phenomena, e.g. when evaluating the effectiveness of actions taken to influence those phenomena. In the context of integrated assessment, indicators can generally be considered as pieces of information that communicate the most essential aspects of a particular risk assessment, so as to meet the needs of the uses of the assessment. Indicators can be endpoint variables, but also any other variables located anywhere in the causal network.

Proxy