State of the art in benefit–risk analysis: Food and nutrition

This page is a nugget.

The page identifier is Op_en5568.

Moderator: Essi Vuorinen

Unlike most other pages in Opasnet, the nuggets have predetermined authors, and you cannot freely edit the contents.

This page (including the files available for download at the bottom of this page) contains a draft version of a manuscript whose final version is published in Food and Chemical Toxicology 50 (2012) 5–25. If referring to this text in scientific or other official papers, please cite the published final version: M.J. Tijhuis, N. de Jong, M.V. Pohjola, H. Gunnlaugsdóttir, M. Hendriksen, J. Hoekstra, F. Holm, N. Kalogeras, O. Leino, F.X.R. van Leeuwen, J.M. Luteijn, S.H. Magnússon, G. Odekerken, C. Rompelberg, J.T. Tuomisto, Ø. Ueland, B.C. White, H. Verhagen: State of the art in benefit–risk analysis: Food and nutrition. Food and Chemical Toxicology 50 (2012) 5–25, doi:10.1016/j.fct.2011.06.010.

Contents

- 1 Title

- 2 Authors and contact information

- 3 Article info

- 4 Abstract

- 5 Keywords

- 6 Introduction to the scope of this paper

- 7 Key terms in benefit–risk assessment of food and nutrition

- 8 Risk assessment

- 8.1 Food toxicology

- 8.2 Nutritional epidemiology

- 8.2.1 Basic principles

- 8.2.2 Study design and dose–response characterization in epidemiology

- 8.2.3 Proposed frameworks for use of epidemiologic data in risk assessment

- 8.2.4 Scope of risk assessment

- 8.2.5 Benefit assessment

- 8.2.6 Basic principles and concepts

- 8.2.7 Study design and strength of evidence

- 8.2.8 Proposed framework

- 8.3 Scope of benefit assessment

- 9 Benefit–risk assessment

- 10 Benefit–risk assessment in food and nutrition: case studies

- 11 Benefit–risk management of food and nutrition

- 12 Benefit–risk communication of food and nutrition

- 13 Conclusions and recommendations

- 14 Conflict of Interest

- 15 Acknowledgements

- 16 References

Title

State of the art in benefit–risk analysis: Food and nutrition

Authors and contact information

- M.J. Tijhuis, correspondence author

- (mariken.tijhuis@rivm.nl)

- (National Institute for Public Health and the Environment (RIVM), Bilthoven, The Netherlands)

- (Maastricht University, School of Business and Economics, Maastricht, The Netherlands)

- N. de Jong

- (National Institute for Public Health and the Environment (RIVM), Bilthoven, The Netherlands)

- M.V. Pohjola

- (National Institute for Health and Welfare (THL), Kuopio, Finland)

- H. Gunnlaugsdóttir

- (Matís, Icelandic Food and Biotech R&D, Reykjavík, Iceland)

- M. Hendriksen

- (National Institute for Public Health and the Environment (RIVM), Bilthoven, The Netherlands)

- J. Hoekstra

- (National Institute for Public Health and the Environment (RIVM), Bilthoven, The Netherlands)

- F. Holm

- (FoodGroup Denmark & Nordic NutriScience, Ebeltoft, Denmark)

- N. Kalogeras

- (Maastricht University, School of Business and Economics, Maastricht, The Netherlands)

- O. Leino

- (National Institute for Health and Welfare (THL), Kuopio, Finland)

- F.X.R. van Leeuwen

- (National Institute for Public Health and the Environment (RIVM), Bilthoven, The Netherlands)

- J.M. Luteijn

- (University of Ulster, School of Nursing, Northern Ireland, United Kingdom)

- S.H. Magnússon

- (Matís, Icelandic Food and Biotech R&D, Reykjavík, Iceland)

- G. Odekerken

- (Maastricht University, School of Business and Economics, Maastricht, The Netherlands)

- C. Rompelberg

- (National Institute for Public Health and the Environment (RIVM), Bilthoven, The Netherlands)

- J.T. Tuomisto

- (National Institute for Health and Welfare (THL), Kuopio, Finland)

- Ø. Ueland

- (Nofima, Ås, Norway)

- B.C. White

- (University of Ulster, Dept. of Pharmacy & Pharmaceutical Sciences, School of Biomedical Sciences, Northern Ireland, United Kingdom)

- H. Verhagen

- (National Institute for Public Health and the Environment (RIVM), Bilthoven, The Netherlands)

- (Maastricht University, NUTRIM School for Nutrition, Toxicology and Metabolism, Maastricht, The Netherlands)

- (University of Ulster, Northern Ireland Centre for Food and Health (NICHE), Northern Ireland, United Kingdom)

Article info

Article history: Available online 12 June 2011

Abstract

Benefit–risk assessment in food and nutrition is relatively new. It weighs the beneficial and adverse effects that a food (component) may have, in order to facilitate more informed management decisions regarding public health issues. It is rooted in the recognition that good food and nutrition can improve health and that some risk may be acceptable if the benefit is expected to outweigh it. This paper presents an overview of current concepts and practices in benefit–risk analysis for food and nutrition. It aims to help scientists and policy makers perform, interpret and evaluate benefit–risk assessments.

Historically, the assessments of risks and benefits have been separate processes. Risk assessment is mainly addressed by toxicology, as demanded by regulation. It traditionally assumes that a maximum safe dose can be determined from experimental studies (usually in animals) and that applying appropriate uncertainty factors then defines the ‘safe’ intake for human populations. Other research traditions, such as epidemiology, which quantifies associations between determinants and health effects in humans, play only a minor role in risk assessment. These effects can be both adverse and beneficial. Benefit assessment is newly developing in regulatory terms, but has long been a subject of research in nutrition and epidemiology. Its exact scope is yet to be defined. Reductions in risk can be termed benefits, but states rising above ‘the average health’ are also explored as benefits. In nutrition, current interest is in ‘optimal’ intake, from a population perspective but also from a more individualised perspective.

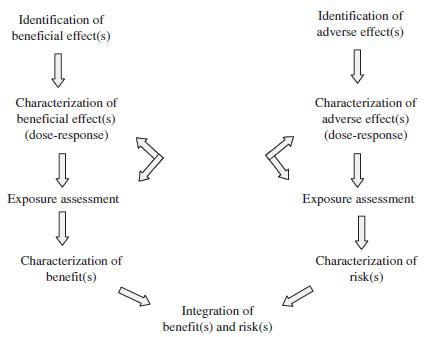

In current approaches to combining benefit and risk assessment, benefit assessment mirrors the traditional risk assessment paradigm of hazard identification, hazard characterization, exposure assessment and risk characterization. Benefit–risk comparison can be qualitative or quantitative. In a quantitative comparison, benefits and risks are expressed in a common currency, for which the input may be deterministic or (increasingly) probabilistic. A tiered approach is advocated, as this allows for transparency, an early stop in the analysis and interim interaction with the decision-maker. A general problem in the disciplines underlying benefit–risk assessment is that good dose–response data, i.e. data at relevant intake levels and suitable for the target population, are scarce.

It is concluded that, provided it is clearly explained, benefit–risk assessment is a valuable approach to systematically show current knowledge and its gaps and to transparently provide the best possible science-based answer to complicated questions with a large potential impact on public health.

Abbreviations

ADI, acceptable daily intake; AICR, Association for International Cancer Research; ALARA, as low as reasonably achievable; AR, Average Requirement; ATBC, alpha-tocopherol, beta carotene cancer prevention (trial); BCRR, Benefit Cancer Risk Ratio; BENERIS, benefit–risk assessment for food: an iterative value-of-information approach; BMD, benchmark dose; BMDL, lower one-sided confidence limit on the BMD; BNRR, Benefit Noncancer Risk Ratio; BRA, Benefit–risk analysis; BRAFO, benefit risk analysis of foods; CARET, beta-carotene and retinol efficacy trial; DALY, disability adjusted life years; EAR, Estimated Average Requirement; EFSA, European Food Safety Authority; FAO, [United Nations] Food and Agriculture Organization; FOSIE, food safety in Europe: risk assessment of chemicals in the food and diet; FUFOSE, Functional Food Science in Europe; ILSI, International Life Sciences Institute; JECFA, Joint FAO/WHO Expert Committee on Food Additives; LLAB, lower level of additional benefit; LOAEL, Lowest Observed Adverse Effect Level; MOE, margin of exposure; NOAEL, No Observed Adverse Effect Level; OECD, Organisation for Economic Co-operation and Development; PAR, population attributable risk; PASSCLAIM, process for the assessment of scientific support for claims on foods; POD, point of departure; PRI, population reference intake; RDA, recommended dietary allowance; RfD, Reference Dose; RIVM, [Dutch] National Institute for Public Health and the Environment; RR, relative risk; SCF, Scientific Committee on Food; (P)TDI/WI/MI, (provisional) tolerable daily/weekly/monthly intake; UL, tolerable upper intake level; ULAB, upper level of additional benefit; WHO, World Health Organization; QALIBRA, quality of life – integrated benefit and risk analysis; QALY, quality adjusted life years; WCRF, World Cancer Research Fund.

Keywords

Keywords: Benefit–risk, Benefit, Risk, Food, Nutrition

Introduction to the scope of this paper

Food and nutrition are in essence necessary and beneficial to, but may also have adverse effects on, human health. Public health professionals have realized, while acknowledging the success of the current food safety system, that the health loss due to unhealthy food and nutrition is many times greater than that attributable to unsafe food; and that the health gains to be made through the consumption of certain foods are many times greater than the health risks involved (van Kreijl et al., 2006). The beneficial and adverse effects may occur concurrently through a single food item (for example fish or whole grain products) or a single food component (for example folic acid or phytosterols), within the same population range of dietary intake. This means that any policy action directed at the adverse effects also affects the degree of beneficial effects. The challenge in the relatively new field of benefit–risk assessment in food and nutrition is to scientifically measure and weigh these two sides.

Historically, the assessments of benefits and risks have been separate processes, and by far most attention has been directed at the assessment of risks, as required by regulation (EU, 2000, 2002b). Focus has been on food safety, with precaution as a risk management option (EC, 2000a), although when and how precaution should be applied has been much debated. In other words, whether or not to include benefits is a political decision. From a public health perspective, however, a decision-making process that strives for the lowest risk does not necessarily lead to the optimal population health outcome. If the benefits are high enough, some risk may be acceptable. As such, there is a broader picture to be assessed.

The benefit–risk assessment approach entails a paradigm shift from traditional risk analysis, as known from toxicology, to benefit–risk analysis. Essentially, it brings together different scientific disciplines, each with its own history, perspective, tools and uncertainties:

- Toxicology, the discipline that traditionally investigates the risk of ‘too much’ (acute or chronic adverse effects), as required by the regulatory process.

- Nutrition, a younger discipline that investigates the risk of ‘too little’ (nutritional deficiencies), the risk of ‘too much’ (nutritional intoxications or affluence-associated problems) and the benefits of optimal nutrient intakes.

In benefit–risk assessment, there is an important role for epidemiology, the methodology-oriented discipline that investigates associations between (real-life human) exposures and health outcomes, adverse or beneficial.

In order to weigh the risks and benefits of foods or their components, they must be evaluated and expressed in a comparable way. Methodologies have been developed and applied to actual cases. New ground has been explored, yet consensus on the general principles or approaches for conducting benefit–risk analysis for foods and food components still needs to be reached.

This paper presents an overview of current approaches to an integrated weighing of benefits and risks, a state of the art in benefit–risk analysis, in the field of food and nutrition. Most attention is directed at the assessment phase, but benefit–risk management and benefit–risk communication are also recognized as integral parts of the benefit–risk paradigm. The perspective is broad rather than technical. The aim is to help scientists and policy makers carry out and judge benefit–risk assessments, and eventually to reach better informed decisions about food-related health issues.

We start with an overview of key terms in the field. We then describe risk assessment and benefit assessment separately to build up to a description of current approaches to combine the two. This is illustrated by an overview of benefit–risk case studies and followed by a brief description of benefit–risk management and communication. We end with conclusions and recommendations.

Key terms in benefit–risk assessment of food and nutrition

As benefit–risk assessment of food and nutrition involves different disciplines using different scientific jargon, we first define the key terms as they are used in this review: food, nutrition, risk, benefit and benefit–risk assessment.

In this review, food is defined as any substance or product, whether processed, partially processed or unprocessed, intended to be, or reasonably expected to be, ingested by humans (EU, 2002a). Foods are made up of many different components affecting body functions. “Food” and “food component” are viewed as a broad concept: macronutrients, micronutrients, bioactive nonnutrients, additives, contaminants, residues, phyto/phyco/mycotoxins, micro-organisms, allergens, supplements, whole foods and novel foods. The total of these components that are ingested via food, naturally occurring or added, can be seen as nutrition. Notably, ‘nutritious’ has the connotation of ‘healthy’. As a science, “nutrition” studies the total of processes by which food or its components are ingested, digested, absorbed, metabolized, utilized, needed and excreted, and the interactions between these components and between these processes, and their effect on human health and disease.

In the food and nutrition context, we see risk as the probability and severity of an adverse health effect occurring to humans following exposure (or lack of exposure) to a food or food component. In risk assessment, a distinction is made between “risk” and “hazard”. In this context, the intrinsic potential of a food (component) to result in adverse health effects is called a hazard. A hazard, then, becomes a risk if there is sufficient exposure. Risk can result from both action and lack of action, and both can be analyzed (Wilson and Crouch, 2001). An “adverse health effect” is defined by the WHO as ‘a change in morphology, physiology, growth, development or life span of an organism, which results in impairment of functional capacity or impairment of capacity to compensate for additional stress or increase in susceptibility to the harmful effects of environmental influences’ (WHO, 1994). A “beneficial health effect” can analogously be seen as ‘an improvement of functional capacity or improvement of capacity to deal with stress or decrease in susceptibility to the harmful effects of environmental influences’ in the above definition (Palou et al., 2009). On the benefit side, no parallel words exist for “hazard” and “risk”. In order to make the parallel distinction on the benefit side but also have similarity in nomenclature, we will use “adverse health effects” and “beneficial health effects” instead of hazards and benefits. In this review we use benefit analogously to “risk”, as the probability and degree of a beneficial health effect occurring to humans following exposure (or lack of exposure) to a food (component). Beneficial health effects may postpone the onset of disease and thus benefit can also be measured as a reduction of risk.

Benefit–risk assessment is seen here as a science-based process intended to estimate the benefits and risks for humans following exposure (or lack of exposure) to a particular food or food component and to integrate them in comparable measures, thus facilitating better informed decisions by decision-makers.

Risk assessment

Risk assessment is an established field that is mainly addressed by toxicology (Faustman and Omenn, 2008). The traditional risk assessment paradigm (EC, 2000b) consists of

- hazard identification (what effect?),

- hazard characterization (at what dose? how?),

- exposure assessment (how much is taken in?) and

- risk characterization (what is the probability and severity of the effect?).
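The exposure-assessment and risk-characterization steps can be illustrated with a minimal deterministic sketch. It assumes that intake is the sum over foods of concentration times amount consumed, divided by body weight, and that risk characterization is reduced to a simple ratio of exposure to a health-based guidance value. The food items, concentrations, body weight and guidance value are hypothetical numbers chosen for illustration, not data from this review.

```python
from typing import Iterable, Tuple

# Each item: (concentration in mg per kg food, consumption in kg food per day).
# Both values are hypothetical illustration inputs.
FoodItem = Tuple[float, float]


def daily_exposure(items: Iterable[FoodItem], body_weight_kg: float) -> float:
    """Exposure assessment: estimated daily intake in mg/kg bw/day."""
    total_mg_per_day = sum(conc * amount for conc, amount in items)
    return total_mg_per_day / body_weight_kg


def exposure_to_guidance_ratio(exposure: float, guidance_value: float) -> float:
    """Simplified risk characterization: a ratio above 1 means the guidance value is exceeded."""
    return exposure / guidance_value


if __name__ == "__main__":
    # Hypothetical consumer eating two foods that contain the same contaminant.
    diet = [(0.5, 0.2), (0.1, 0.5)]
    exposure = daily_exposure(diet, body_weight_kg=60.0)
    print(f"exposure = {exposure:.4f} mg/kg bw/day")
    print(f"ratio to guidance value = {exposure_to_guidance_ratio(exposure, 0.01):.2f}")
```

A probabilistic assessment would replace the fixed concentrations and consumption amounts with distributions and report the resulting distribution of exposures instead of a single value.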

A risk assessment framework specifically for food has been developed in the context of the FOSIE project (Food Safety in Europe, www.ilsi.org/Europe) (Smith, 2002). The basic concepts and approaches to risk assessment in toxicology will be addressed in Section 3.1. A separate paragraph is dedicated to micronutrients, which is placed under toxicology for practical reasons. There is an additional (not yet clearly defined) role for epidemiology, which will be addressed in Section 3.2. Focus will be on those characteristics where the most pronounced differences exist between toxicology, epidemiology and later benefit assessment, and which need to be considered when integrating the different disciplines. Table 1 presents a summary of some of the characteristics of toxicological and epidemiologic risk assessment.

Table 1. Some characteristics of toxicological and epidemiologic risk assessment.ᵃ

| Characteristic | Toxicology | Epidemiology |
|---|---|---|
| Initiation | Mainly regulatory | Mainly academic |
| Starting point | Usually suspicious substance | Both substance and endpoint |
| Study design | Mainly experimental | Mainly observational |
| Formal procedures defined | Yes | No |
| Study population | Selected, genetically homogeneous animals | Mainly free-living, genetically heterogeneous humans |
| Exposure under study | Controlled (administered), mostly one agent, supraphysiological doses | Uncontrolledᵇ (measured), multiple agents likely, physiological doses |
| Extrapolation | To lower doses (‘actual exposure’) using default value of 10 or chemical-specific adjustment value | No, actual exposure |
| Presentation of exposure and risk | Usually absolute | Usually relative (ranking of study subjects) |
| Quantification of effect (with chronic exposure) | Incidence (proportion of animals with adverse effect over duration of study; usually lifetime risk) or continuous measure | Incidence rate (proportion with adverse effect per person-year, standardized to a particular age distribution; lifetime risk rare) or continuous measure |
| Comparison | Difference | Usually ratioᶜ |
| Evaluation of evidence | Selection of high-quality study with the most sensitive effect in the most sensitive subgroup (worst-case scenario or positive evidence approach) | All high-quality studies (weight of evidence approach, calculating overall effect) |
| Some sources of uncertainty | | |

- ᵃ With focus on chronic exposures.

- ᵇ Unless randomized clinical trial (RCT).

- ᶜ Necessary for case-control study; for cohort studies calculation of risk differences is also possible.

Food toxicology

Classical food toxicology focuses on the absence of risks, viz. on safety (EU, 2000, 2002b). Risk assessment may be initiated for a variety of purposes; most commonly, it is triggered by a legal requirement (EC, 2000b).

Basic principles

The basic tenet of quantitative risk assessment is that data on health effects detected in small populations of animals exposed to relatively high concentrations of an agent can be used to predict health effects in large human populations exposed to lower concentrations of the same agent. Generally, risk is measured as the fraction of the population exceeding a defined upper intake or ‘guidance’ level. This level is established by working down from the most sensitive hazardous effect (the so-called critical effect) in animals and applying uncertainty factors for extrapolation to the most sensitive human subgroup. The toxicological approach differs between compounds that are assumed to show a threshold effect and those that are not, between compounds that are avoidable in the diet and those that are not, and depends on the amount of scientific information available and the policy of decision-makers towards acceptance of risk. First we briefly address the identification of adverse health effects, then we address their characterization and translation into risk.
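Measuring risk as the fraction of a population exceeding a guidance level can be sketched as follows. The sketch assumes (our assumption, not the source's) that usual intakes follow a lognormal distribution described by a median and a geometric standard deviation; the parameter values are hypothetical.

```python
import math


def fraction_exceeding(guidance_level: float, median_intake: float, gsd: float) -> float:
    """Fraction of the population whose usual intake exceeds guidance_level.

    Assumes a lognormal intake distribution with the given median and
    geometric standard deviation (gsd > 1); units must match guidance_level.
    """
    if guidance_level <= 0 or median_intake <= 0 or gsd <= 1:
        raise ValueError("guidance_level and median_intake must be > 0, gsd > 1")
    z = (math.log(guidance_level) - math.log(median_intake)) / math.log(gsd)
    # Upper tail of the standard normal distribution: P(Z > z).
    return 0.5 * math.erfc(z / math.sqrt(2.0))


if __name__ == "__main__":
    # Hypothetical: median intake 10 mg/day, GSD 2, upper level 40 mg/day.
    share = fraction_exceeding(40.0, 10.0, 2.0)
    print(f"{share:.3%} of the population above the guidance level")
```

In practice the intake distribution would come from dietary survey data (adjusted for day-to-day variation) rather than from an assumed parametric form.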

Identification of adverse effects

Good toxicity studies have an appropriate number of dose levels and sufficient sample sizes, and focus on relevant endpoints in a relevant species (WHO-Harmonization-Project, 2005–2003). These are mostly animal toxicity tests, with an experimental design following the OECD guidelines for the testing of chemicals (OECD). Tests of acute toxicity, i.e. the toxicity that manifests itself immediately or within 14 days after a single administration of a chemical by ingestion, inhalation or dermal application, are often not useful for hazard identification and risk assessment in relation to foods and food chemicals (Barlow et al., 2002), because human exposure is generally chronic and usually much lower than the doses identified in these tests. Tests of subacute or subchronic toxicity, i.e. repeated dose toxicity studies mostly performed in rodents for a period of 28 or 90 days, should reveal the major toxic effects. The effects may include changes in body and organ weight, organ histopathology, hematological parameters, serum and urine clinical chemistry and, in some cases, extended screens for neurotoxicity or immunotoxicity. Tests of chronic toxicity cover a larger part of the animal’s life span, e.g. 12 or 24 months in rodents. There are specific tests and procedures for establishing reproductive and developmental toxicity, neurotoxicity, genotoxicity, carcinogenicity, immunotoxicity and food allergies (Barlow et al., 2002).

Characterization of adverse effects and risk

The standard approach to characterize a dose–response relationship for substances assumed to show non-carcinogenic threshold effects is the derivation of the No Observed Adverse Effect Level (NOAEL). This is the highest dose of a substance at which no adverse health effects are observed in the most sensitive animal model (see Fig. 1a). Usually, the most robust datasets are chosen: those from studies using the most relevant exposure routes in which adverse effects occur at the lowest levels of exposure (Faustman and Omenn, 2008). If there are no adequate data demonstrating a NOAEL, then a LOAEL (the lowest amount at which adverse effects are observed) may be used. Based on the NOAEL (or LOAEL), health-based guidance levels are established, under the rationale that these will ensure protection against all other adverse health effects which may be caused by the compound considered. This means that although different endpoints may yield different NOAELs, one NOAEL is chosen as the point of departure from the experimental dose–response range for setting acceptable exposure levels: the one for the most relevant sensitive endpoint in the most sensitive species. Based on this NOAEL, a health-based guidance value is established by means of uncertainty factors. By default (i.e. unless specific data are available to do otherwise), the NOAEL is divided by 10 to take into account differences between animals and humans (interspecies), and again by 10 to take into account differences in sensitivity between humans (interindividual) (Dorne and Renwick, 2005; Renwick, 1993).
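The default derivation of a health-based guidance value from a NOAEL reduces to a single division. The sketch below uses the default factors of 10 described above, with optional overrides standing in for chemical-specific adjustment factors; the NOAEL and the alternative factor of 4 are hypothetical values.

```python
def health_based_guidance_value(
    noael: float,
    interspecies_factor: float = 10.0,
    interindividual_factor: float = 10.0,
) -> float:
    """Divide the point of departure (e.g. a NOAEL in mg/kg bw/day) by the
    product of the uncertainty factors (default 10 x 10 = 100)."""
    if noael <= 0:
        raise ValueError("noael must be positive")
    return noael / (interspecies_factor * interindividual_factor)


if __name__ == "__main__":
    noael = 50.0  # hypothetical NOAEL from a 90-day rodent study, mg/kg bw/day
    print(health_based_guidance_value(noael))  # default 10 x 10
    # Hypothetical chemical-specific interspecies factor of 4 instead of 10
    print(health_based_guidance_value(noael, interspecies_factor=4.0))
```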

The guidance level for nutrients (see also Section 3.1.4) is the tolerable upper intake level (UL); for additives it is termed the ADI (acceptable daily intake), and for contaminants in food the terminology in Europe is tolerable daily (or weekly/monthly) intake (TDI/TWI/TMI). In the USA, Reference Dose (RfD) is preferred as a less value-laden term for the latter. All these terms refer to an estimate of the intake of a substance over a lifetime that is considered to be ‘without appreciable health risk’ (WHO, 1994). They are meant to protect 98% of the population. To most toxicologists, guidance levels are “soft” estimates rather than sharp lines separating acceptable from unacceptable intakes (EPA, 1993). Exposures somewhat higher than the guidance level are associated with an increased probability of adverse effects, but that probability is not a certainty. Similarly, the absence of risk to all people cannot be assured at intakes below the guidance level (EPA, 1993). As a consequence, dose–response modeling is increasingly advocated as a more science-based approach. Different mathematical and physiological approaches are being explored. Edler et al. (2002) describe a number of statistical and mechanistic modeling approaches with differing data requirements, degrees of complexity, applicability, and type and quality of the resulting risk estimates, including: structure–activity relationships and the threshold of toxicological concern; the benchmark dose (BMD); probabilistic risk assessment; and physiologically based pharmacokinetic modeling. The latter fits in the development towards a ‘systems toxicology’: in vivo dose–response curves could be predicted by combining in vitro toxicity data and in silico kinetic modeling (Louisse et al., 2010; Punt et al., 2008). Other initiatives exist; for example, the Key Events Dose–Response Framework (KEDRF) incorporates information on the substance’s mode of action and examines critical events that occur between the initial dose of a bioactive agent and the effect of concern (Julien et al., 2009).

By default, the threshold approach is not applied to genotoxic carcinogens because, theoretically, one molecule can initiate the cancer process (although the organism is equipped with some degree of cytoprotection). The Joint FAO/WHO Expert Committee on Food Additives (JECFA) and, in Europe, the SCF (the predecessor of EFSA, the European Food Safety Authority) advised that the presence of carcinogenic contaminants in the diet should be reduced to ‘irreducible levels’ by current technological standards (Edler et al., 2002), which is complicated by the fact that detection limits keep getting lower. This approach is captured in the ALARA (as low as reasonably achievable) management option to regulate avoidable genotoxic carcinogens. These committees considered that there was no adequate scientific basis for low-dose extrapolation to quantify a “virtual safe dose”: the dose corresponding to an acceptable additional lifetime cancer risk from exposure to the compound of interest, which can be set at, for example, one additional cancer case in a population of one million people. Current methods that do quantify a virtual safe dose are based on linear extrapolation from a point of departure on the dose–response curve (Boobis et al., 2009). It is argued that for genotoxic carcinogens, both the threshold concept and the low-dose linearity concept are in need of refinement (Boobis et al., 2009; Rietjens and Alink, 2006; Waddell, 2006). This will require better mechanistic insight and risk–benefit analysis (Boobis et al., 2009; Rietjens and Alink, 2006; Waddell, 2006). Such analysis can help to answer the criticism of the precautionary principle that it provides no transparent way to weigh and account for different risks, or in other words, that it does not help regulators decide which risks to regulate (EFSA, 2005; Post, 2006).
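Linear low-dose extrapolation can be sketched as a straight line from the origin to a point of departure. The example assumes, for illustration only, a point of departure at which the extra lifetime cancer risk is 10% (as for a BMDL10) and a target risk of one in a million; the numbers are hypothetical, and the committees cited above questioned the scientific basis of exactly this kind of calculation.

```python
def linear_slope(point_of_departure: float, extra_risk_at_pod: float) -> float:
    """Extra lifetime risk per unit dose for a straight line through the
    origin and the point of departure (e.g. a BMDL10 with 10% extra risk)."""
    if point_of_departure <= 0:
        raise ValueError("point_of_departure must be positive")
    return extra_risk_at_pod / point_of_departure


def virtual_safe_dose(
    point_of_departure: float,
    extra_risk_at_pod: float = 0.10,
    acceptable_risk: float = 1e-6,
) -> float:
    """Dose at which the linearly extrapolated extra lifetime risk equals
    acceptable_risk (default: one additional case per million people)."""
    return acceptable_risk / linear_slope(point_of_departure, extra_risk_at_pod)


if __name__ == "__main__":
    bmdl10 = 1.0  # hypothetical BMDL10, mg/kg bw/day
    print(f"virtual safe dose = {virtual_safe_dose(bmdl10):.1e} mg/kg bw/day")
```

With these defaults the virtual safe dose is simply the point of departure divided by 100,000, i.e. the factor needed to scale a 10% risk down to one in a million.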

EFSA recommends using the margin of exposure (MOE) approach as a harmonised methodology for assessing the risk of substances in food and feed that are genotoxic and carcinogenic (EFSA, 2005). The MOE approach uses a reference point, often taken from an animal study, that corresponds to a dose causing a low but measurable response in animals. This reference point is then compared with various dietary intake estimates in humans, taking into account differences in consumption patterns in the population (EFSA, 2005). The MOE essentially shows whether human levels of exposure are close to effect levels and enables comparison of the risks posed by different genotoxic and carcinogenic compounds. It is for the risk manager to decide whether the magnitude of the MOE is acceptable and whether further action is needed, taking into consideration additional aspects such as the feasibility of removing the substance from the food supply. In general, an MOE of 10,000 or higher, if it is based on a benchmark dose lower confidence limit for a 10% change in effect (BMDL10, see below) from an animal study, might be considered of low concern from a public health point of view and as a low priority for risk management actions. However, this number is arbitrary and the judgment is ultimately a matter for decision-makers. Moreover, an MOE of that magnitude should not in itself preclude the application of risk management measures to reduce human exposure. Recently, an outline framework for calculating an MOE was proposed to help ensure transparency in the results (Benford et al., 2010).
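The MOE itself is a simple ratio. The sketch below assumes a BMDL10 from an animal study as the reference point and compares it with hypothetical intake estimates for average and high consumers; the 10,000 cut-off is the one quoted above, and whether a given MOE is acceptable remains a risk-management judgment.

```python
from typing import Dict


def margin_of_exposure(reference_point: float, exposure: float) -> float:
    """MOE = reference point (e.g. BMDL10) / estimated human exposure (same units)."""
    if exposure <= 0:
        raise ValueError("exposure must be positive")
    return reference_point / exposure


def low_concern(moe: float, threshold: float = 10_000.0) -> bool:
    """True if the MOE reaches the 10,000 level quoted for BMDL10-based MOEs."""
    return moe >= threshold


if __name__ == "__main__":
    bmdl10 = 0.3  # hypothetical BMDL10, mg/kg bw/day
    intakes: Dict[str, float] = {"average consumer": 1e-5, "high consumer": 1e-4}
    for group, intake in intakes.items():
        moe = margin_of_exposure(bmdl10, intake)
        print(f"{group}: MOE = {moe:,.0f}, low concern: {low_concern(moe)}")
```

Computing the MOE separately for several consumer groups reflects the comparison with "various dietary intake estimates" described above: the same compound can be of low concern for average consumers and not for high consumers.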

The MOE approach could also be applied to non-carcinogenic substances. EFSA recommends the use of the benchmark dose (BMD) to estimate the MOE, for instance where the human exposure is close to the ADI (EFSA, 2005, 2009b). The BMD approach estimates the dose that causes a low but measurable critical effect, e.g. a 5% increase in the incidence of kidney toxicity or a 10% change in liver weight. By calculating the lower confidence limit of the estimated dose (BMDL), the uncertainty and variability in the data are taken into account (EFSA, 2009b). The BMD approach uses more of the available dose–response information than the NOAEL approach.
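How a BMD is read off a fitted dose–response model can be sketched for quantal data. The sketch assumes a logistic model whose parameters `alpha` and `beta` have already been fitted (the values used are hypothetical) and defines the benchmark response as extra risk over background. It returns only the BMD point estimate: obtaining the BMDL requires a confidence-limit procedure (e.g. profile likelihood or bootstrap) that is not shown.

```python
import math


def logistic_response(dose: float, alpha: float, beta: float) -> float:
    """Probability of the critical effect at a given dose (quantal logistic model)."""
    return 1.0 / (1.0 + math.exp(-(alpha + beta * dose)))


def extra_risk(dose: float, alpha: float, beta: float) -> float:
    """Additional risk over background, scaled by the fraction unaffected at dose 0."""
    p0 = logistic_response(0.0, alpha, beta)
    return (logistic_response(dose, alpha, beta) - p0) / (1.0 - p0)


def benchmark_dose(alpha: float, beta: float, bmr: float = 0.10,
                   upper: float = 1e6, tol: float = 1e-9) -> float:
    """Dose at which extra risk equals bmr, found by bisection on [0, upper].

    Assumes beta > 0, so extra risk rises monotonically from 0 towards 1.
    """
    if beta <= 0 or not 0.0 < bmr < 1.0:
        raise ValueError("need beta > 0 and 0 < bmr < 1")
    lo, hi = 0.0, upper
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if extra_risk(mid, alpha, beta) < bmr:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


if __name__ == "__main__":
    alpha, beta = -3.0, 0.5  # hypothetical fitted parameters (dose in mg/kg bw/day)
    print(f"BMD10 = {benchmark_dose(alpha, beta):.3f}")
    print(f"BMD05 = {benchmark_dose(alpha, beta, bmr=0.05):.3f}")
```

Bisection is used here only because it is simple and robust for a monotone curve; for the logistic model the BMD also has a closed form, and dedicated BMD software fits several models and reports BMD/BMDL pairs.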

Micronutrients

For micronutrients, a somewhat different approach needs to be followed, as risk can come from intakes that are too high, but also from intakes that are too low. Although micronutrients belong to the field of nutrition, they are addressed here because toxicology-related measures are used in their risk assessment. The higher-end guidance level, the UL (see Fig. 1a), differs in two principal aspects from the ADI and TDI. First, micronutrients, as opposed to additives or contaminants, are subject to homeostatic control. This means that the body may adapt its functioning to intake and deal with some level of excess or shortage. Second, ULs may be set for different life stage groups.

The other often used guidance level, the recommended dietary allowance (RDA, see Fig. 1b) (also known as population reference intake, PRI) (EFSA, 2010a), is by default set for different life stage groups and by sex, and represents the nutritional needs of most healthy individuals (thereby exceeding the minimal requirements of almost all). It is calculated from the Estimated Average Requirement (EAR, see Fig. 1b) or Average Requirement (AR) (EFSA, 2010a), which is the daily intake value that is estimated to meet the requirement, as defined by a specified indicator of adequacy, in 50% of the individuals in a life stage or sex group (Renwick et al., 2004). Often, a normal distribution of requirements and a 10–15% coefficient of variation (CV) for the EAR are assumed. The RDA must be considered when deriving the UL, as default uncertainty factors could yield a UL below the RDA. The intake range between deficiency and toxicity varies greatly between nutrients. For example, it is large for vitamin C and narrow for zinc. In addition, these ranges may overlap between different populations. Related to this, current methods for establishing upper levels for micronutrients and maximum permitted levels of vitamins and minerals in food supplements have been criticized for working from precaution, and a call has been made for using ‘decision science’ (Verkerk, 2010). The point is that dosages that induce risks in a few might at the same time induce benefits in many (Verkerk, 2010).
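The relationship between the EAR and the RDA (PRI) described above can be sketched as follows. The sketch assumes, as the text states is common, normally distributed requirements with a CV of 10–15%, and uses the usual convention RDA = EAR + 2 SD, which covers roughly 97–98% of individuals. The second function flags the situation mentioned above in which a default uncertainty factor would push the UL below the RDA. The EAR, NOAEL and uncertainty factor are hypothetical.

```python
def rda_from_ear(ear: float, cv: float = 0.10) -> float:
    """RDA (PRI) = EAR + 2 * SD, with SD = cv * EAR (normal requirements assumed)."""
    if ear <= 0 or cv < 0:
        raise ValueError("ear must be positive and cv non-negative")
    return ear * (1.0 + 2.0 * cv)


def ul_conflicts_with_rda(noael: float, uncertainty_factor: float, rda: float) -> bool:
    """True if noael / uncertainty_factor would give an upper level below the RDA."""
    return noael / uncertainty_factor < rda


if __name__ == "__main__":
    ear = 8.0  # hypothetical EAR, mg/day
    for cv in (0.10, 0.15):
        print(f"CV {cv:.0%}: RDA = {rda_from_ear(ear, cv):.1f} mg/day")
    # Hypothetical human NOAEL of 100 mg/day with a default factor of 100 -> UL of 1 mg/day
    print(ul_conflicts_with_rda(noael=100.0, uncertainty_factor=100.0, rda=rda_from_ear(ear)))
```

A conflict flagged this way is a signal to revisit the uncertainty factor with nutrient-specific data (homeostasis, human data), not a result in itself.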

Nutritional epidemiology

In the traditional risk assessment of foods, the use of epidemiologic data has been limited. There is no mandatory role (and there are no clear criteria) for epidemiologic data in risk assessment for regulatory purposes. This is despite the fact that (nutritional) epidemiologists are conceptually and practically already accustomed to describing effects at physiological intake levels, and despite the limitations of animal studies, which require both interspecies and high-to-low dose extrapolations.

Basic principles

Epidemiology is about identifying and quantifying associations between (real-life) human exposures and health outcomes, adverse or beneficial. The exposure and the health outcome, usually a disease or marker for a disease, are most often specified a priori and hypothesis-driven. If the association between exposure and outcome is found to be inverse, i.e. higher exposure is associated with lower disease occurrence, this signifies a reduction in risk. In other words, in epidemiology, an ‘exposure’ is a factor or condition that may increase or decrease the risk of disease (WCRF/AICR, 2007). This work often serves the quest for knowledge and understanding more than a practical regulatory goal, but it is also possible to quantify the effect on (public) health to directly aid decision making. Reflecting the above, three types of measures can broadly be distinguished in epidemiology: measures of disease occurrence, measures of association and measures of effect (Rothman et al., 2008). Disease occurrence can be expressed as cases that are prevalent (current) or incident (new). Measures of association are most often expressed as relative differences (or ‘ratio’ measures), for example: the relative risk (RR) of acquiring disease X among those consuming food (component) Y in the 5th quintile of the intake distribution is 1.5 compared to those in the 1st quintile (the reference, set to 1). Absolute difference measures, such as the ‘excess risk’ that consuming food (component) Y contributes to acquiring disease X, can often also be calculated. Measures of effect express the impact of an exposure on those exposed or on the population as a whole. An example of the latter is the population attributable risk (PAR), which can be seen as the reduction in occurrence of disease X that would be achieved if the population had been entirely unexposed to food (component) Y, compared with its current exposure pattern (Rothman et al., 2008).
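The ratio, difference and population measures can be illustrated with a small hypothetical cohort. The sketch below computes the relative risk, the excess risk and the population attributable risk from case and person counts. All numbers are invented so that the RR equals the 1.5 of the example above, and treating the reference quintile as "unexposed" is an assumption made only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Group:
    """Incident cases and persons at risk over the same follow-up period."""
    cases: int
    persons: int

    @property
    def risk(self) -> float:
        return self.cases / self.persons


def relative_risk(exposed: Group, reference: Group) -> float:
    """Ratio measure: risk in the exposed group relative to the reference group."""
    return exposed.risk / reference.risk


def excess_risk(exposed: Group, reference: Group) -> float:
    """Absolute difference measure: extra risk among the exposed."""
    return exposed.risk - reference.risk


def population_attributable_risk(population: Group, unexposed: Group) -> float:
    """Risk reduction if the whole population had the risk of the unexposed."""
    return population.risk - unexposed.risk


# Hypothetical cohort: 5th vs 1st quintile of intake of food (component) Y
q5 = Group(cases=30, persons=1000)
q1 = Group(cases=20, persons=1000)       # reference, also treated as "unexposed"
whole = Group(cases=120, persons=5000)   # all five quintiles together

rr = relative_risk(q5, q1)                     # about 1.5
er = excess_risk(q5, q1)                       # about 0.01
par = population_attributable_risk(whole, q1)  # about 0.004
paf = par / whole.risk                         # share of cases attributable, about 1/6
```

Dividing the PAR by the total risk gives the population attributable fraction, which is often the more intuitive figure for decision-makers.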
In the risk assessment context, epidemiologic studies are mainly observational in design.

Study design and dose–response characterization in epidemiology

The great advantage of epidemiology is that it actually studies the species it wants to make inferences about. Yet this is also its hurdle, as humans are free-living and subject to many influences. Both exposure and outcome are difficult to measure accurately and are subject to systematic bias and measurement error (Kroes et al., 2002; Rothman et al., 2008; Willett, 1998). These are not insurmountable problems, however. The possibility of bias can be reduced through good study design. Study design can be experimental or observational. Experimental design in humans, encompassing randomized controlled trials (RCTs), is neither ethical nor feasible in the case of (severe) risks or long latency (van den Brandt et al., 2002). In some cases, unintended risks turn up in trials, such as in the ATBC and CARET trials (ATBC-Study-Group, 1994; Omenn et al., 1996) and in the Norwegian Vitamin Trial and Western Norway B Vitamin Intervention Trial for folate (Ebbing et al., 2009), where an increased percentage of cancer cases was seen where a decrease was expected. These trials were, however, limited to one dose, and thus dose–response relationships could not be characterized. Observational studies include cohort studies (starting with exposure assessment and following up on disease development) and case-control studies (starting with diseased and non-diseased subjects and assessing past exposure). They allow for long latencies between exposure and the health outcome of interest. These studies do not provide direct evidence for causal relationships, but a number of methodological considerations have been proposed to judge whether an observed association is causal and to help in making decisions based on epidemiologic evidence (Hill, 1965; Phillips and Goodman, 2004; Rothman et al., 2008). All factors suspected to be related to the factor of interest need to be measured accurately and in unbiased samples of the target population.
Statistical modeling techniques can then control for their influence on the outcome (confounding) or detect associations that differ between subgroups (effect modification or interaction). In studies that do not show an effect, it is often not clear whether the effect is null or simply smaller than the studies can detect (insufficient power). Combining data from different studies increases power and allows dose–response functions to be estimated with more precision. However, gathering the appropriate dose–response data will not always be possible. An example of successful use of epidemiologic studies is the case of aflatoxins and liver cancer (van den Brandt et al., 2002). Animal models had been found to be inappropriate, showing a large degree of variability in the carcinogenic potential of aflatoxins across species. From human studies on hepatitis B and liver cancer that included aflatoxin intake, the potency of aflatoxin to induce liver cancer (cancers/year/100,000 people per ng aflatoxin/kg body weight/day) could be calculated. Another example, in which dose–response relations have been estimated from human observational studies, is the relation between methyl mercury in fish and cognitive development, as recently summarized by the FAO/WHO expert consultation (FAO/WHO, 2010).
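One common way of combining results from different studies is fixed-effect, inverse-variance meta-analysis of log relative risks. The sketch below is a minimal illustration under that assumption: it presumes that the studies estimate the same underlying effect (a random-effects model would be the usual alternative when they do not). The study results are hypothetical, and standard errors are recovered from reported 95% confidence intervals.

```python
import math

Z95 = 1.959964  # two-sided 95 % standard normal quantile


def log_rr_and_se(rr: float, lower: float, upper: float) -> tuple[float, float]:
    """Recover log(RR) and its standard error from a reported 95 % CI."""
    return math.log(rr), (math.log(upper) - math.log(lower)) / (2 * Z95)


def pool_fixed_effect(studies: list[tuple[float, float, float]]):
    """Inverse-variance (fixed-effect) pooling of (RR, lower, upper) triples."""
    weights, logs = [], []
    for rr, lower, upper in studies:
        y, se = log_rr_and_se(rr, lower, upper)
        weights.append(1.0 / se**2)  # more precise studies weigh more
        logs.append(y)
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, logs)) / total
    pooled_se = math.sqrt(1.0 / total)  # never larger than the smallest input SE
    ci = (math.exp(pooled - Z95 * pooled_se), math.exp(pooled + Z95 * pooled_se))
    return math.exp(pooled), ci, pooled_se


# Three hypothetical studies; each individual CI includes RR = 1
studies = [(1.4, 0.9, 2.2), (1.2, 0.8, 1.8), (1.6, 0.95, 2.7)]
pooled_rr, pooled_ci, pooled_se = pool_fixed_effect(studies)
```

With these made-up inputs the pooled interval is narrower than any single one and its lower bound lies just above 1, which is the gain in power referred to in the text.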

Proposed frameworks for use of epidemiologic data in risk assessment

The need for a more structured use and presentation of epidemiologic data in risk assessment has long been recognized (Hertz-Picciotto, 1995). Hertz-Picciotto (1995) proposed a classification framework for the use of individual epidemiologic studies in dietary quantitative risk assessment, with special focus on dose–response assessment, and this has been modified by van den Brandt et al. (2002). They identified three classification categories for epidemiologic studies: (1) the study can be used to derive a dose–response relationship; (2) the study can be used to check the plausibility of an animal-based risk assessment; (3) the study can contribute to the weight-of-evidence determination of whether the agent is a health hazard. A number of criteria addressing the validity and utility of a study are used to classify it into one of the three categories. Recently, a three-tier framework has been proposed through which human observational studies can be selected for use in quantitative risk assessment, with specific focus on exposure assessment (Vlaanderen et al., 2008). General recommendations for the reporting of observational studies have been provided by STROBE, which stands for Strengthening the Reporting of Observational Studies in Epidemiology (http://www.strobe-statement.org/; Vandenbroucke et al., 2007).

Scope of risk assessment

In contrast to benefit assessment, the scope of risk assessment is fairly well established (see Section 4.4). It deals with the assessment of adverse health effects caused by physical or chemical agents. These agents may occur naturally in foods, result from food preparation or manufacturing processes, or be environmental contaminants. Current developments are directed towards a better understanding of the nature and mechanisms of toxic effects caused by these agents, the inclusion of data other than the traditional toxicological data (Eisenbrand et al., 2002; van den Brandt et al., 2002), and consideration of the shape of the dose–response relationship (linear, threshold, J-shape, U-shape) (Calabrese et al., 2007; Zapponi and Marcello, 2006).

Benefit assessment

Benefit assessment is not an established field. Although beneficial effects conferred by food have long been a topic of investigation (for example within nutritional epidemiology, see Section 3.2), the structured characterization of benefits has only recently begun to develop. Benefit assessment has gained more attention because of developments around health claims on foods. In December 2006, the European Union published its Regulation 1924/2006 on nutrition and health claims made on foods, which, several decades after risk assessment, also places benefit assessment in a legal perspective. Below we address some concepts and approaches in benefit assessment.

Basic principles and concepts

In the past decades, the goal of “adequate” nutrition, as defined by the absence of nutritional deficiencies, has shifted to the ambitious goal of “optimal nutrition”. This change goes beyond the concept of ‘risk reduction’; it applies not only to traditional foods and food components, but has also led to the concept of “functional foods”. Food is seen as an opportunity to improve health. Taking beneficial effects into account may also uncover a discrepancy. The RDA (see Section 3.1.4 micronutrients) allows a small percentage of the population to have an inadequate intake for the specified indicator of adequacy; however, if the intake needed to gain a theoretical benefit (as indicated by the dashed line in Fig. 1b) is higher than this level, then a substantial part of the population may not gain this theoretical benefit. In the benefit assessment context, epidemiologic studies can be both observational and experimental, but experimental (‘intervention’) studies are preferred.

Study design and strength of evidence

As described in Section 3.2, both observational and experimental methodologies can be used to assess risks and benefits. From observational studies, there is ample experience in describing associations between human exposure and health outcomes. The level of evidence they provide is most commonly assessed by using

- the WCRF/AICR criteria, resulting in the judgment “convincing”, “probable”, “limited-suggestive”, “limited-no conclusion” or “substantial effect on risk unlikely” (WCRF/AICR, 2007); or

- the WHO criteria, resulting in the judgment “convincing”, “probable”, “possible” or “insufficient” (WHO, 2003).

For ethical reasons, human experiments (or “intervention studies”) are generally carried out to study beneficial rather than adverse health effects, once observational data have shown that the benefit is reasonably probable and/or toxicological screening has given reasonable proof of safety. However, it is not uncommon for these trials to be short-term and limited to only one or two doses, and these doses are generally rather high to give the best chance of ‘success’ (van den Brandt et al., 2002). This hampers the construction of dose–response curves that are relevant for long-term human exposure. Short-term trials may not always be appropriate to characterize effects of food and nutrition, because after long-term exposure, response mechanisms may adapt to a different state (tolerance) or wear out (threshold). Guidelines for the design and reporting of human intervention studies and for a standardized approach to proving the efficacy of foods and food constituents are being developed (ILSI, 2010). The resulting knowledge and methodology can be applied in a broader context, including answering a benefit–risk question.

Proposed framework

There is agreement in the field that benefits should be considered according to standardized assessment procedures and specific definitions (Palou et al., 2009). Benefits should be scientifically proven. It has been advocated that benefit assessment be structured in the same framework as risk assessment. For example, the EU advisory working group on harmonization of risk assessment procedures identifies a 4-stage approach consisting of

- ‘value identification’,

- ‘value characterization’,

- ‘use assessment’, and

- ‘benefit characterization’ (EC, 2000b).

EFSA uses the terminology

- ‘identification of positive / reduced adverse health effect’,

- ‘characterization of positive / reduced adverse health effect’,

- ‘exposure assessment’, and

- ‘benefit characterization’ (EFSA, 2010b).

Examples of initiatives specifically focusing on benefit assessment, in the form of claims made by industry, are the FUFOSE and PASSCLAIM projects, coordinated by ILSI Europe (www.ilsi.org/Europe). One of the goals of the FUFOSE project was to critically assess the science base required to provide evidence that specific nutrients and food components beneficially affect target functions in the body (Bellisle et al., 1998; Diplock et al., 1999). Its follow-up, the PASSCLAIM project, aimed to define a set of generally applicable criteria for the scientific substantiation of claims on foods (Aggett et al., 2005; Asp and Bryngelsson, 2008). The PASSCLAIM criteria cover: (1) the characterization of the food or food ingredients for the health claim, (2) the necessity of human data for the substantiation of a health claim (preferably from human intervention studies, but human observational data are also accepted), (3) the use of valid endpoints or biomarkers, as well as statistically significant and biologically relevant changes therein, and (4) the requirement to consider the totality of the data and to weigh the evidence before judging whether or not a health claim is substantiated (Aggett et al., 2005; Asp and Bryngelsson, 2008). The claims regulation 1924/2006 addresses the particular beneficial nutritional property of a food (in so-called nutrition claims) or the relationship between a food or food category and health (in so-called health claims) (Verhagen et al., 2010). Examples of the latter are the relationship between calcium and the development of bones and teeth, the relationship between plant sterols and coronary heart disease through reduced blood cholesterol, and the relationship between iron and cognition in children. A state of the art in nutrition and health claims in Europe is given by Verhagen et al. (2010).

Scope of benefit assessment

Claims are a driver of development in benefit assessment, but not the only one. There are other initiatives to increase the benefit from food, such as voluntary food reformulation by industry (for example reducing the amount of salt, sugar or trans fatty acids) (van Raaij et al., 2009). With respect to traditional foods, research has developed towards looking at whole foods or whole diets (dietary patterns), for example the Mediterranean diet.

Benefits are often measured on a disease scale, either directly as a reduction in disease risk or indirectly as a change in a disease marker. Currently, markers of optimal health are also being explored (Elliott et al., 2007). This is a fundamentally different process, as the subjects are healthy individuals and effects are typically expected to be small. Two bottlenecks have been identified in the development of the newly explored biomarkers to quantify health optimization (van Ommen et al., 2009): the robustness of homeostasis and inter-individual differences in what appear to be normal values. Many markers are maintained within a limited range and effects are masked unless homeostasis is challenged. Nutritional challenge tests (e.g., a high-fat diet) are being developed in this context. These bring normal physiology out of balance; quicker recovery then signifies more optimal health. A combination of perturbation of homeostasis with systems biology and the -omics technologies has been proposed as a means to quantify the system’s robustness, to measure all the relevant components that describe the system and to discriminate between individuals (Elliott et al., 2007; Hesketh et al., 2006; Keijer et al., 2010; van Ommen et al., 2009). This development also signifies a shift from a population-oriented to a more individual-oriented benefit–risk analysis.

So far, benefits have been based in the biological health domain. Decision-makers are bound by a limited amount of (public) money and (thus) require management options based on physiological mechanisms, not on, for example, individual ‘preferences’. However, certain perceptions and behaviors may well influence the quality of life in such a way that they are relevant for benefit–risk weighing (for a detailed description of consumer perception, see Ueland et al., 2011). Also, long-term benefits, in the form of ecological considerations, could be relevant. Thus, it might be reasonable to accept a broad scope of what constitutes a benefit.

Benefit–risk assessment

From a legal perspective, benefit assessment is about the permission to carry a claim on a product and risk assessment is about defining a maximum intake dose below which the population intake is safe (without appreciable adverse effects). From a public health perspective, benefit assessment and risk assessment are not yes/no or safe/unsafe decisions, but describe a range and continuum of doses and likelihoods of effect. Benefit–risk assessment is about evaluating the whole scope of human health and disease. It involves more than adding an assessment of benefits to an assessment of risks; the scientific processes to be integrated are not symmetric. Below, we will first briefly address the integration of the science. Next, we will discuss a number of frameworks for performing a benefit–risk analysis, the integration of outcome measures, uncertainty in the assessment and other (problematic) issues.

Integration of disciplines

Until recently, benefit assessment and risk assessment were approached as separate processes and were the domain of different underlying scientific disciplines. In benefit–risk analysis, the two inevitably come together. There are differences in process and output that need to be integrated. Table 2 presents some of these differences. The integration requires adaptations from all disciplines involved.

There is increasing interest in knowing which effects occur over the entire range of human exposure, in order to characterize benefits and risks. Toxicologists have suggested that toxicology should redirect its focus from adverse effects at high levels of experimental exposure to characterizing the complex biological effects, both adverse and beneficial, at low levels of exposure, the so-called ‘low-dose toxicology’ (Rietjens and Alink, 2006). This will help to quantify risks at realistic intakes and is also of interest because some low (physiologic) doses actually turn out to be beneficial (Son et al., 2008). Epidemiologists and nutritionists have urged all who generate human data to improve and expand their data presentation, by presenting more detailed information geared to the needs of dose–response analysis and/or by sharing primary data (de Jong et al., submitted for publication). Some also stress the important role of early, quantifiable biomarkers of exposure and of effect, as well as of the -omics technologies (Palou et al., 2009). This is expected to improve exposure assessments and provide early response profiles.

Ideally, the (old and new) disciplines could complement each other within the benefit–risk assessment process, with their roles differing depending on, e.g., the type of food (component) and its history of use, but always with the aim of ending up with the data most relevant to humans.

| Characteristic | Benefit assessment | Risk assessment |
|---|---|---|
| Study design | Experimental (observational may also be accepted) | Experimental (observational may also be accepted) |
| Study population | Human | Animal (Human is possible) |
| Widely accepted methodology | In development | Yes |
| Widely accepted endpoints | In development; also measured as reduction in adverse endpoint | Yes |
| Exposure | Usually few (supraphysiological) doses in experimental study; physiological doses/observational design may also be accepted | Usually few (supraphysiological) doses in experimental study |
| Study duration | Usually short (days/weeks) in experimental design, years/decades can be covered in observational design | Usually lifetime of animal until adverse endpoint occurs, years/decades can be covered in observational design |
| Evidence required | Grading ‘convincing’ (sometimes ‘probable’ admitted) in weight of evidence approach | Strongest effect/steepest slope in positive evidence approach |
| Desired outcome in benefit–risk assessment setting | Benefit characterization, including low dose–response information | Risk characterization, including low dose–response information |
| Desired outcome in regulatory setting | Benefit/no benefit | Safe/unsafe or acceptable/non-acceptable |

- (a) With focus on chronic exposures.

Benefit–risk assessment approaches

A number of research efforts have been dedicated specifically to the development of benefit–risk methodology in the field of food and nutrition. There have been three recent Europe-based projects, each with its own focus: BRAFO (2007–2010), QALIBRA (2006–2009) and BENERIS (2006–2009). In addition, the topic has been the subject of investigation by the European Food Safety Authority (EFSA, 2007, 2010b), by national food and health related institutes (Fransen et al., 2010; van Kreijl et al., 2006) and by individual groups.

Generalities in approaches

Conceptually, those exploring the field of benefit–risk assessment in food and nutrition agree that much more health gain can come from investing in a better diet than from further improving food safety (see Table 3). Practically, there is consensus about the importance of a well formulated and well described benefit–risk question. This prior narrative needs to address the exposure, target population, health effects and scenarios. As there is more experience with risk assessment than with benefit assessment, it is generally suggested that the steps taken in benefit–risk assessment should mirror the traditional risk assessment paradigm of hazard identification, hazard characterization, exposure assessment and risk characterization, with a risk arm and a benefit arm running parallel until comparison (see Fig. 2). A fully quantitative benefit–risk analysis requires a large amount of data (see Fig. 3) and time. Assumptions about missing or incomplete data are inevitable. A tiered (stepwise) approach is advocated, allowing transparency and an early stop in the analysis when the question has been answered or when there is a substantial lack of data. Most approaches leave room not only for in-between scientific evaluation, but also for interim consultation with the decision-maker. This means that if a risk is found that is acceptable to the decision-maker, the benefit–risk assessment may also come to a stop (i.e., a management judgement). In fact, policy makers are most often the intended users and initiators of the benefit–risk assessment.

In general, a distinction can be made between a qualitative and a quantitative comparison of benefits and risks. In a qualitative comparison, the benefits and risks are compared in their own currency. This is sufficient when there is clear dominance of either the benefits or the risks, e.g. low gastritis incidence versus high cancer incidence, or when comparison with guidance values indicates that either the benefits or the risks are negligible and there is no true benefit–risk question left. In cases where neither the benefits nor the risks clearly dominate, quantitative comparison may answer the benefit–risk question. Quantitative comparison of benefits and risks is seen as an (optional) end stage and can be achieved by expressing the effect of each endpoint in the same metric (e.g. quality or disability adjusted life years). In calculating these measures, the estimate may be deterministic, i.e. using point estimates, or probabilistic, i.e. using distributions. In general, some kind of screening is incorporated in the assessment to evaluate whether the benefits clearly prevail over the risks or vice versa, in which case the assessment can remain qualitative. This may entail performing the exposure assessment before the dose–response characterization (see Fig. 2). For transparency and the best insight into who receives the benefits and who bears the risks, all separate health effects need to be communicated in addition to the net health effect. In the process of developing the methodology, case studies have been performed to test and refine the approaches.
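The difference between a deterministic and a probabilistic calculation of a common metric can be sketched as follows. The example assumes that net health impact is expressed as DALYs averted minus DALYs caused and, for the probabilistic variant, that both terms follow lognormal distributions described by a median and a geometric standard deviation. These distributional choices, the function names and all numbers are hypothetical.

```python
import math
import random


def net_daly_deterministic(dalys_averted: float, dalys_caused: float) -> float:
    """Point estimate of net health impact (positive = benefits outweigh risks)."""
    return dalys_averted - dalys_caused


def net_daly_probabilistic(median_averted: float, gsd_averted: float,
                           median_caused: float, gsd_caused: float,
                           n: int = 10_000, seed: int = 1) -> tuple[list[float], float]:
    """Monte Carlo variant: each term is lognormal with a given median and geometric SD."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    nets = []
    for _ in range(n):
        averted = rng.lognormvariate(math.log(median_averted), math.log(gsd_averted))
        caused = rng.lognormvariate(math.log(median_caused), math.log(gsd_caused))
        nets.append(averted - caused)
    share_positive = sum(1 for x in nets if x > 0) / n
    return nets, share_positive


point = net_daly_deterministic(120.0, 80.0)  # 40.0
nets, p_benefit = net_daly_probabilistic(120.0, 1.5, 80.0, 2.0)  # share of runs with net gain
```

The deterministic call returns a single number, while the probabilistic call also yields the share of simulations in which the benefits outweigh the risks, the kind of information a decision-maker may want alongside the point estimate.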

Health gain through improvement of food safety versus improvement of diet (composition, amount):

| Factor | Number of deaths/year | DALYs/year (a) |
|---|---|---|
| Diet composition (b) | 13,000 | 245,000 |
| Bodyweight | 7000 | 215,000 |
| Diet in total (c) | | >350,000 |
| Foodborne infections by known pathogens | 20–200 | 1000–4000 |
| Chemical constituents | 100–200 | 1500–2000 |
| Food safety in total | | 2500–6000 |

- (a) DALYs = disability adjusted life years; what matters here is the message, not the exact numbers: the annual health gain to be achieved through improvements to the diet is many times greater than that possible through further improving food safety.

- (b) Diet composition including five factors (fruits, vegetables, fish, saturated fatty acids and trans fatty acids).

- (c) Counting diet composition and the part of bodyweight linked to diet.

Specific approaches

Below we describe specific initiatives in the field of benefit–risk assessment.

The ILSI Europe project BRAFO (www.brafo.org) follows up on the projects FOSIE (see under ‘risk assessment’), FUFOSE and PASSCLAIM (see under ‘benefit assessment’). The primary aim of BRAFO has been to develop a framework that allows for the quantitative comparison of human health risks and benefits in relation to a wide range of foods and food compounds. The BRAFO approach consists of 4 tiers, preceded by a pre-assessment and problem formulation (Hoekstra et al., 2010). The problem is worded as a comparison in net health effect between a reference scenario and an alternative scenario. In tier 1, the individual risks and benefits are assessed. Should only risks or only benefits be identified, the assessment stops. When both risks and benefits are identified, the assessment proceeds to tier 2, which entails a qualitative integration of risks and benefits. In this tier, four main dimensions are considered: the incidence of the health effects, their severity/magnitude, their duration, and the additional (or reduced) mortality caused by the effect and the consequent (reduction in) years of life lost. Should either the risks or the benefits clearly dominate, the assessment stops. Special table formats are suggested to present the data and information relevant in tiers 1 and 2, for clarity and transparency. In tier 3, the benefits and risks are weighed quantitatively. Tier 3 is closely related to a fourth tier, which also entails a quantitative assessment. These tiers are actually a continuum, starting fully deterministically, using fixed estimates, and becoming increasingly probabilistic, using probability distributions. The approach has been tested and further developed with several case studies.
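The stop rules of the BRAFO tiers can be written down as a small decision function. This is a hypothetical sketch, not BRAFO software: the tier-2 judgment of clear dominance is passed in as an input, because in BRAFO it is a qualitative expert judgment, and the tier 3/4 calculation is represented by an arbitrary callable returning a net health impact (positive meaning a net gain for the alternative scenario).

```python
from typing import Callable, Optional


def brafo_style_assessment(risks: list[str], benefits: list[str],
                           dominant: Optional[str],
                           net_health_impact: Callable[[], float]) -> tuple[str, str]:
    """Hypothetical walk through the BRAFO stop rules.

    dominant: tier-2 qualitative judgment ("risks", "benefits" or None).
    net_health_impact: tier 3/4 calculation; positive = net gain for the
    alternative scenario compared with the reference scenario.
    """
    # Tier 1: individual risks and benefits; stop unless both are present
    if not (risks and benefits):
        return "tier 1", "risks and benefits not both identified; stop"
    # Tier 2: qualitative integration (incidence, severity, duration, mortality)
    if dominant in ("risks", "benefits"):
        return "tier 2", f"{dominant} clearly dominate; stop"
    # Tiers 3-4: quantitative weighing, from deterministic to probabilistic
    net = net_health_impact()
    if net > 0:
        return "tier 3/4", "net health gain for alternative scenario"
    return "tier 3/4", "no net health gain for alternative scenario"


result = brafo_style_assessment(["adverse effect A"], ["beneficial effect B"],
                                dominant=None, net_health_impact=lambda: 12.5)
```

Passing the quantitative step as a callable means it is only evaluated when the earlier tiers fail to settle the question, mirroring the idea that expensive quantification is reserved for cases that need it.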

The tiered approach developed by the National Institute for Public Health and the Environment (RIVM) of the Netherlands also distinguishes steps separated by “stop” moments, allowing a timely evaluation of whether the gathered information is sufficient to answer the initial benefit–risk question (Fransen et al., 2010). Compared with the BRAFO approach, the exposure assessment has been moved forward and the dose–response modeling, as part of hazard and benefit characterization, has been moved to a later stage. The characterization step includes an extensive literature search and modeling to estimate dose–response functions for the selected effects. The advantage of giving priority to the exposure assessment is that one gets early information on the population distribution of the exposure. In case of no or very limited exposure in risk groups, one can terminate the risk–benefit assessment at an early stage, before going to a benefit–risk characterization. The decision tree has been tested with case studies.
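The early stop that the exposure-first ordering allows can be illustrated with a simple screen: compute, for each risk group, the share of individuals whose intake exceeds a guidance value, and stop if no group shows more than a negligible share. The group names, intake values and the 1% "negligible" cut-off below are hypothetical assumptions, not part of the RIVM decision tree.

```python
def fraction_exceeding(intakes: list[float], guidance_value: float) -> float:
    """Share of individuals whose intake is above the guidance value."""
    return sum(1 for x in intakes if x > guidance_value) / len(intakes)


def exposure_screen(intakes_by_group: dict[str, list[float]], guidance_value: float,
                    negligible: float = 0.01) -> dict[str, float]:
    """Return only the groups in which exceedance is more than negligible.

    An empty result corresponds to a possible early stop before dose-response
    modeling; `negligible` is a hypothetical policy threshold.
    """
    flagged = {}
    for group, intakes in intakes_by_group.items():
        share = fraction_exceeding(intakes, guidance_value)
        if share > negligible:
            flagged[group] = share
    return flagged


# Hypothetical daily intakes (e.g. µg/kg bw/day) from a food consumption survey
intakes = {
    "children": [0.2, 0.5, 0.9, 1.4, 0.3],
    "pregnant women": [0.1, 0.2, 0.4, 0.3, 0.2],
}
flagged = exposure_screen(intakes, guidance_value=1.0)  # {"children": 0.2}
```

Only the flagged groups would then be carried forward to the characterization step; in practice the intake distributions would come from survey data and usually be modeled rather than counted directly.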

QALIBRA (www.qalibra.eu) has developed methods for quantitative assessment that integrate the risks and benefits of dietary change into a single measure of net health impact (see Fig. 3), and that allow quantification of the associated uncertainties. It is a typical example of the third and fourth tiers in BRAFO (Hoekstra et al., 2010). QALIBRA also follows a stepwise approach, the consecutive steps consisting of: (1) Problem formulation, including specification of the dietary scenarios and the population to be considered. (2) Identification of the adverse and beneficial health effects to be assessed, including influential factors and affected population. (3) Estimation of the intakes or exposures that cause those health effects. (4) Modeling of the dose–response relationship for each effect, including the probability of onset at the current age and, if the effect is continuous, the magnitude of the effect. (5) Estimation of the probabilities of recovery and mortality for affected individuals. (6) Selection of a common currency (e.g. DALY or QALY, see Section 5.3). (7) Specification of the severity and duration of the effect. (8) Calculation of the net health impact. (9) Evaluation of (quantifiable and unquantifiable) uncertainties and variabilities.
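Steps 6 to 8 can be illustrated with a minimal DALY calculation that considers only cases with onset in a single year. The sketch assumes DALY = YLD + YLL, with YLD = cases × disability weight × duration and YLL = deaths × years of life lost per death; for simplicity it applies the disability weight over the full duration even for cases that die, and it ignores interactions between effects. It is not the QALIBRA tool, and the class, functions and inputs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HealthEffect:
    """Change in one health effect when moving from the reference to the alternative diet."""
    name: str
    extra_cases: float           # per year; negative = cases avoided
    disability_weight: float     # 0 = full health, 1 = equivalent to death
    duration_years: float        # average duration of the effect
    case_fatality: float         # share of cases that die from the effect
    years_lost_per_death: float


def dalys(effect: HealthEffect) -> float:
    """DALY = YLD + YLL for cases with onset in the year considered."""
    yld = effect.extra_cases * effect.disability_weight * effect.duration_years
    yll = effect.extra_cases * effect.case_fatality * effect.years_lost_per_death
    return yld + yll


def net_health_impact(effects: list[HealthEffect]) -> float:
    """Sum of DALY changes; negative = net health gain of the alternative."""
    return sum(dalys(e) for e in effects)


effects = [
    HealthEffect("beneficial endpoint (hypothetical)", -50, 0.2, 10, 0.1, 15),
    HealthEffect("adverse endpoint (hypothetical)", 20, 0.1, 5, 0.0, 0),
]
net = net_health_impact(effects)  # -165.0, i.e. 165 DALYs averted per year
```

Keeping each effect's DALYs available through `dalys()` also supports reporting the separate health effects alongside the net figure.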

These methods are implemented in the QALIBRA tool, which is available to registered users on the QALIBRA website. The user is responsible for supplying data such as intake scenarios, dose–response functions and, if needed, uncertainty intervals. The tool can be used in BRAFO’s quantitative tiers 3 and 4. QALIBRA recommends that practitioners follow the BRAFO tiered approach, resolving benefit–risk questions without quantitative integration where possible, and reserve the QALIBRA framework for those cases where assessment at tiers 3 and 4 is necessary. The tool works according to the annual directly attributable health impact method (van Kreijl et al., 2006). This approach considers a single year and only health effects that have their onset during that year. Their direct potential impact is considered, without taking into account interactions with other health effects. It is a less data-demanding, but less realistic, alternative to the ‘simulation approach’, which takes into account a longer period, in which a proportion of the population will change class with respect to age, risk factor and disease status. QALIBRA advises starting with the simpler approach (addressing in a narrative how the overall impact might be affected by the way individual effects combine) and reserving the simulation of health over whole lifetimes as a higher-tier option, should the directly attributable health impact method not answer the benefit–risk question sufficiently. The RIVM Chronic Disease Model is an example of a simulation approach; it has been used in (a part of) a large Dutch case study (Hoogenveen et al., 2010; van Kreijl et al., 2006).

The BENERIS (http://en.opasnet.org/w/Beneris) project has made a significant contribution to the methodological foundation of ‘open assessment’, which considers assessments as open collaborative processes of creating shared knowledge and understanding (see also Pohjola et al., this issue). The open process brings scientific experts, decision-makers and any other stakeholders into the same collaborative process of tackling public health problems. Collaboration is facilitated by the Opasnet web-workspace (http://en.opasnet.org), which is the major output of the project and consists of a wiki interface, a modeling environment and a database. In the BENERIS approach, open criticism has a crucial role. The objective in each tier is to convince a critical outsider (‘an impartial spectator’) of the current conclusions. Thus, the assessment work is directed towards gaining acceptability in the eyes of a critical outsider. Openness is a critical feature of an assessment, as it makes it possible in practice for outsiders to join and criticize the current content of the assessment. Another characteristic of BENERIS is the use of ‘value-of-information’, a scientific method for estimating the expected benefit that occurs when an uncertainty is resolved, to guide which critical uncertainties should be focused on in the next tier. The main tools are probability distributions and Bayesian nets.
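Value-of-information can be illustrated with its simplest form, the expected value of perfect information (EVPI) for a choice between two options. The sketch assumes a hypothetical decision between acting (for example issuing dietary advice), with an uncertain net health gain modeled as normally distributed, and doing nothing (gain 0). EVPI is the expected gain when choosing with the true value known, minus the gain when choosing now on the basis of the expected value. This is a generic Monte Carlo sketch, not the BENERIS or Opasnet implementation.

```python
import random
import statistics


def evpi_two_options(mean_gain: float, sd_gain: float,
                     n: int = 200_000, seed: int = 7) -> float:
    """Monte Carlo EVPI for 'act' (uncertain gain) versus 'do nothing' (gain 0)."""
    rng = random.Random(seed)
    gains = [rng.gauss(mean_gain, sd_gain) for _ in range(n)]
    # With perfect information, the better option is chosen for each possible true value.
    value_perfect = statistics.fmean(max(g, 0.0) for g in gains)
    # With current information, one option is chosen on its expected gain.
    value_current = max(statistics.fmean(gains), 0.0)
    return value_perfect - value_current


evpi_uncertain = evpi_two_options(mean_gain=0.0, sd_gain=1.0)  # close to 1/sqrt(2*pi)
evpi_known = evpi_two_options(mean_gain=0.5, sd_gain=0.0)      # 0.0: nothing left to learn
```

For a mean gain of 0 and a standard deviation of 1, the analytic EVPI is 1/√(2π) ≈ 0.40, which the simulation approximates. A large EVPI flags an uncertainty worth resolving in the next tier, whereas an EVPI near zero suggests further data would not change the decision.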

EFSA organized a colloquium on ‘risk–benefit analysis of foods: methods and approaches’ in 2006 (EFSA, 2007) and has recently published a guidance document on methodology, approaches, tools and potential pitfalls in risk–benefit assessment (EFSA, 2010b). After problem formulation, it proposes a three-step approach: (1) an initial assessment, addressing whether the health risks far outweigh the health benefits or vice versa; (2) a refined assessment, aiming to provide semiquantitative or quantitative estimates of risks and benefits at relevant exposure levels using common metrics; and (3) a comparison of risks and benefits using a composite metric such as DALYs or QALYs (see Section 5.3) to express the outcome of the risk–benefit assessment as a single net health impact value (EFSA, 2010b).

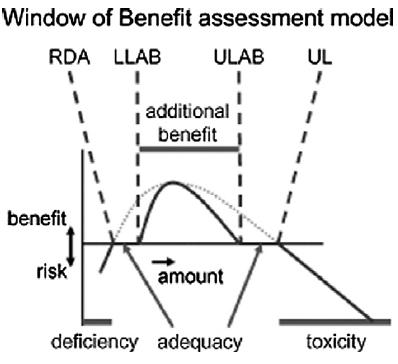

Along a slightly different line, the ‘‘window of benefit assessment’’ has been proposed as a concept to integrate nutritional optimization and safety considerations (Fig. 4; Palou et al., 2009). In this concept, the threshold model and a score model are combined as a framework for weighing risks and benefits. The traditional threshold model works from the guidance values for each (non-)nutrient that must not be exceeded or that must be reached, for example the UL and the RDA in the case of nutrients (see Section 3.1.4 nutrients). Two new thresholds are introduced: the upper level of additional benefit (ULAB) and the lower level of additional benefit (LLAB). These are defined as the upper and lower levels of daily nutrient intake that can provide detectable additional benefits, apart from the benefits derived from avoiding excessive intake or deficiency. Intake, then, should lie between the RDA and the UL (absence of shortage or excess), but preferably between the LLAB and the ULAB (improved health). The LLAB and ULAB do not necessarily lie within the window defined by the RDA and the UL; it is possible, for example, that the UL is based on a small and mildly adverse effect while a larger beneficial effect occurs at a higher dose. Thus, the type and severity of the effects are relevant. It is postulated that, given the robustness of homeostasis, the LLAB and ULAB will delimit the optimal intake needed when the biological system is challenged, while the RDA and UL mark the limits beyond which intake is not permissible on a population-wide scale (Palou et al., 2009). It is proposed to score the nutritionally beneficial and adverse characteristics of foods and combine them into a global score (Palou et al., 2009). The use of a score model allows risks and benefits to be weighed against each other. Such a tool is still under development, for example by the PASSCLAIM project (see Section 4). The window of benefit is targeted to the needs of specific population subgroups (Palou et al., 2009).
The window of benefit differs between individuals depending on age, sex, physiological or pathological conditions, individual genetic constitution and lifestyle history, so the same intake of a nutrient may fall within the window for one person but not for another (Hesketh et al., 2006; Palou et al., 2009).
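The relationship between the two sets of thresholds can be illustrated with a small classification function. This is a hypothetical sketch, not a tool from Palou et al. (2009): the field names and the example threshold values (in arbitrary units per day) are assumptions. It reports separately whether an intake is adequate and safe (between RDA and UL) and whether it falls in the window of additional benefit (between LLAB and ULAB), because the two windows need not coincide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    """Intake thresholds for one nutrient and population subgroup (hypothetical values)."""
    rda: float   # intake that should be reached (avoid shortage)
    ul: float    # intake that must not be exceeded (avoid excess)
    llab: float  # lower level of additional benefit
    ulab: float  # upper level of additional benefit


def classify_intake(intake: float, t: Thresholds) -> dict[str, bool]:
    """Check an intake against both windows independently."""
    return {
        "adequate_and_safe": t.rda <= intake <= t.ul,
        "additional_benefit": t.llab <= intake <= t.ulab,
    }


# Example in which the window of benefit extends above the UL.
t = Thresholds(rda=10, ul=50, llab=30, ulab=80)
print(classify_intake(40, t))  # {'adequate_and_safe': True, 'additional_benefit': True}
print(classify_intake(60, t))  # {'adequate_and_safe': False, 'additional_benefit': True}
```

The second call shows the situation described above, where a beneficial intake exceeds a UL set on a mild adverse effect; since thresholds vary between subgroups and individuals, a separate `Thresholds` instance would be needed for each.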

Similarly, Verkerk describes a conceptual model showing how beneficial effects may arise above the UL, so that benefit–risk evaluation is required across a ‘zone of overlap’ (Verkerk, 2010; Verkerk and Hickey, 2010). Significant benefits at intake levels above the UL are postulated to be the norm rather than the exception (Verkerk, 2010). It is argued that current management, based on regulatory prohibition and on the single most sensitive risk factor in the most sensitive population, denies benefits to large parts of the population (Verkerk, 2010; Verkerk and Hickey, 2010). It is also argued that different management strategies are required for different molecular forms of micronutrients, for example folates, carotenoids and niacin, because their adverse effects differ (Verkerk, 2010; Verkerk and Hickey, 2010).

Renwick et al. (2004) were among the first to recognize that, because the approaches for establishing an RDA and a UL differ, any benefit–risk analysis rests on different underlying criteria and approaches for the benefit and the risk. They provided a basis for how different aspects of the intake–incidence data for either the benefit or the toxicity can be taken into account by suitable modeling, and for how the model output can be turned into usable advice for risk managers.

Integrated measures

One important question in weighing benefits and risks is which unit of measurement to use. A distinction can be made between single outcome health metrics and ‘‘composite’’ or ‘‘integrated’’ health measures. Single outcome health metrics reflect a single measurement dimension and unit, for example mortality, disease incidence or functioning (quality of life). Composite health measures combine several single outcome health metrics into one currency, using a valuation function to weight the different health states or outcomes. This makes it possible to combine mortality and morbidity, and to weigh different health states or outcomes against each other, such as a reduced incidence of angina against an increased incidence of breast cancer. Currently, the most used integrated health measures in food-related benefit–risk analysis are the DALY (disability-adjusted life year) and the QALY (quality-adjusted life year). Both use the value, or preference, that people attach to health outcomes, expressed on a continuum between 0 and 1 (Gold et al., 2002).

In the QALY concept, 1 represents full health and 0 represents death (Fig. 5a). To generate QALY values, (often hypothetical) health states reflecting physical, social and emotional well-being (not associated with a particular disease or condition) are valued by members of the general public. The values (also termed ‘‘utilities’’) are derived either directly, using methods such as the standard gamble, time trade-off and visual analog scales (Gold et al., 2002), or indirectly, using generic quality of life/health status questionnaires, in which valued health states are used to estimate the values of (a large number of) other health states. These methods vary in sensitivity, administrative burden, cost, bias, etc. QALYs have traditionally been used mostly to measure health gains at the micro scale, for example to compare two interventions. The QALYs of all individuals are summed, and the scenario with the highest number of QALYs represents the greatest health gain. Per individual, QALYs are calculated by multiplying the duration of the disease (for example the last 17 years of one’s life) by a quality weight (for example quality reduced from 1 to 0.7) and adding this to the age at onset of the disease (for example age 45). In other words, the life years reached at the age of death (for example age 62) or at an earlier reference point are adjusted for their lack of quality, which generally yields a number lower than the true age at death or at the earlier reference point (in this example about 57 QALYs, against a true age at death of 62) (Fig. 5a). When comparing two treatment groups or alternative scenarios, the average areas under the curve are compared; the difference is the number of QALYs that the ‘‘better’’ intervention or scenario gains over the ‘‘worse’’ one.
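The per-individual QALY calculation in this example (onset at age 45, 17 years with disease at a quality weight of 0.7) can be written out as follows. It is a simplified sketch that assumes full health before onset and a single disease period lasting until death; the function names are illustrative, and no discounting is applied.

```python
def qalys_lived(age_at_onset: float, years_with_disease: float, quality_weight: float) -> float:
    """QALYs up to death: healthy years count as 1, years with disease as quality_weight.

    quality_weight lies between 0 (death) and 1 (full health).
    """
    return age_at_onset + years_with_disease * quality_weight


def qaly_gain(better, worse):
    """Difference in mean QALYs per person between two scenarios.

    Each scenario is a list of (age_at_onset, years_with_disease, quality_weight) tuples.
    """
    def mean_qalys(group):
        return sum(qalys_lived(*person) for person in group) / len(group)

    return mean_qalys(better) - mean_qalys(worse)


print(round(qalys_lived(45, 17, 0.7), 1))  # 56.9, i.e. about 57 QALYs versus a true age at death of 62
```

For instance, a hypothetical scenario that raises the quality weight from 0.7 to 0.8 for the same person gives `qaly_gain([(45, 17, 0.8)], [(45, 17, 0.7)])` of about 1.7 QALYs.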

In the DALY concept, 0 represents no disability and 1 represents death (Gold et al., 2002) (Fig. 5b). DALYs tend to be based on a universal set of standard weights covering specific diseases, derived from expert judgments using ‘trade-off’ methods, the best known being those defined by Murray (Murray, 1994). For many diseases, disability weights are available at the WHO website (WHO, 2004); these are currently being revised in the Global Burden of Disease 2010 study. For example, the disability weight is 0.015 for uncomplicated diabetes mellitus, 0.124 for angina pectoris, 0.75 for cancer in the metastatic phase and 0.92 for first-ever stroke. Some countries have established national weighting factors (Schwarzinger et al., 2003). DALYs are based on disease years, but short-term conditions, such as (minor) food poisoning or influenza, can also be handled, although an appropriate weight can be hard to find: the condition can either be weighted as a full year and then corrected to the actual duration, or the weighting can be adapted to represent a year that includes an episode of disease (the ‘year profile approach’) (van Kreijl et al., 2006). DALYs were introduced primarily to communicate a population-aggregate measure of (loss of) health, the Burden of Disease used by WHO. The DALYs of all individuals are summed; the scenario with the lowest number of DALYs represents the greatest health gain or the smallest health loss. One DALY represents the loss of the equivalent of one year of full health. It counts down from a standard ‘‘ideal’’ life expectancy at birth (the same for all population subgroups, but separate for men and women). From this reference point (for example 82 years for women, Fig. 5b), the years spent with a disease (for example 17) are multiplied by a disability weight (for example 0.3, where 0 is healthy) and added to the years by which death (for example at age 62) prevented one from reaching full life expectancy.
In other words, the years of life lost (YLL, in this example 20 years) and the years lived with disability (YLD, which count more heavily with a higher disease burden) are added, giving a number that is higher than the years of life actually lost (in this example about 25 DALYs, against 20 years of life lost). When comparing two intervention groups or alternative scenarios, the average areas above the curve are compared; the difference is the number of DALYs that the ‘‘worse’’ intervention or scenario loses relative to the ‘‘better’’ one.
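The DALY arithmetic of this example (standard life expectancy 82, death at 62, 17 years with disease at a disability weight of 0.3) is sketched below, together with the first option described for short-term conditions: weighting the condition as a full year and correcting for its actual duration. The functions are illustrative only; discounting and age weighting, which some DALY calculations apply, are deliberately left out, and any weight passed to the short-episode function is a placeholder.

```python
def dalys(life_expectancy: float, age_at_death: float,
          years_with_disease: float, disability_weight: float) -> float:
    """DALYs = YLL + YLD, without discounting or age weighting."""
    yll = max(life_expectancy - age_at_death, 0.0)  # years of life lost
    yld = years_with_disease * disability_weight    # years lived with disability (0 = healthy, 1 = death)
    return yll + yld


def yld_short_episode(disability_weight: float, duration_days: float) -> float:
    """YLD for a short episode: weight it as a full year, then correct to the actual duration."""
    return disability_weight * duration_days / 365.0


print(round(dalys(82, 62, 17, 0.3), 1))  # 25.1: 20 YLL + 5.1 YLD
```

A week-long episode with a hypothetical weight of 0.1, for example, contributes `yld_short_episode(0.1, 7)`, about 0.002 DALYs per case, which is why short-term conditions only matter at scale when many people are affected.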

Often, the choice between DALY and QALY is a pragmatic one, based on data availability or experience of use rather than on a fundamental consideration. Ethical and equity issues are not accounted for in QALYs and DALYs. For example, when calculating QALYs, the elderly can be at a disadvantage, because by definition they have fewer life years left and thus less opportunity to gain quality-adjusted life years. Also, the benefits and risks incorporated in one integrated measure may occur in different population subgroups. Folic acid is a good example: prevention of neural tube defects on the one hand, and masking of vitamin B12 deficiency or a possibly increased cancer risk on the other, apply to different subgroups. Decision-makers need to be aware of this, so it is important always to accompany the DALY or QALY with the distribution of the separate risks and benefits across subgroups.
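Reporting the distribution across subgroups alongside the aggregate can be as simple as keeping per-subgroup totals. In the sketch below the subgroup labels and DALY values are placeholders (negative values are health gains); they only show how a net gain in the total can hide a net loss in one subgroup, as in the folic acid example.

```python
from collections import defaultdict


def summarize_by_subgroup(effects):
    """effects: iterable of (subgroup, effect_name, dalys); negative dalys = health gain.

    Returns per-subgroup net DALYs and the aggregate net DALYs.
    """
    per_group = defaultdict(float)
    for subgroup, _effect_name, dalys in effects:
        per_group[subgroup] += dalys
    return dict(per_group), sum(per_group.values())


per_group, total = summarize_by_subgroup([
    ("subgroup A", "benefit endpoint", -50.0),
    ("subgroup B", "risk endpoint", 10.0),
])
print(total)      # -40.0: a net gain overall
print(per_group)  # {'subgroup A': -50.0, 'subgroup B': 10.0}: subgroup B still bears a net loss
```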

In the area of benefits and risks of fish consumption, some less ‘intensive’ integrating measures have been explored. Gochfeld and Burger (2005) developed a composite benefit and risk by dose curve to identify a zone of benefit, above the benefit threshold and below the harm threshold; separate dose–response curves for benefit and harm are combined into net benefit–harm composites. Foran et al. (2005) calculated the Benefit Cancer Risk Ratio (BCRR) and the Benefit Noncancer Risk Ratio (BNRR). These ratios represent the rates of consumption of n-3 fatty acids (g/d) that are possible while keeping the cumulative carcinogenic or noncarcinogenic risk of contaminants in fish at an acceptable level. The risks are assessed via the increased probability of death from cancer (compared with a target risk of 1 in 100,000, using a substance-specific cancer slope factor) and via the RfD (compared with a target noncancer risk of 1), respectively. Their population values represent the long-run trade-off of benefits and risks over repeated consumption. If the distribution of the estimates is not expected to be normal, upper and lower 95% CIs can be constructed by bootstrap methods. Foran et al. used the BCRR and BNRR to compare wholesale farmed, retail farmed and wild salmon. Along a somewhat similar line, Ginsberg and Toal (2009) developed a risk–benefit equation, calculated separately for each endpoint, using dose–response functions and exposure information per fish meal. The increased risk (for example of coronary heart disease, through methylmercury) is subtracted from the decreased risk/benefit (for coronary heart disease, through n-3 fatty acids). Different fish species can be compared; values >1 indicate a net benefit and values <1 indicate an increased risk.
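A percentile bootstrap, one common way of constructing such intervals without assuming normality, is sketched below. The statistic is passed in as a function, so a ratio estimated from sampled fish data could be plugged in. The sample values are placeholders, and the settings (number of resamples, seed) are assumptions rather than necessarily the procedure used by Foran et al. (2005).

```python
import random
import statistics


def bootstrap_ci(data, statistic, n_resamples=5000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for statistic(data).

    Resamples the data with replacement, recomputes the statistic each time,
    and takes the alpha/2 and 1 - alpha/2 percentiles of the resampled values.
    """
    rng = random.Random(seed)
    n = len(data)
    estimates = sorted(
        statistic(rng.choices(data, k=n)) for _ in range(n_resamples)
    )
    lower = estimates[round(alpha / 2 * (n_resamples - 1))]
    upper = estimates[round((1 - alpha / 2) * (n_resamples - 1))]
    return statistic(data), lower, upper


# Placeholder, right-skewed per-sample values (not real measurements).
sample = [0.8, 1.1, 0.9, 1.4, 2.5, 1.0, 0.7, 1.2, 3.1, 0.95]
estimate, lower, upper = bootstrap_ci(sample, statistics.mean)
```

With skewed data such as these, the resulting interval is typically asymmetric around the point estimate, which is exactly what a normal-theory interval would miss.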

Dealing with uncertainties